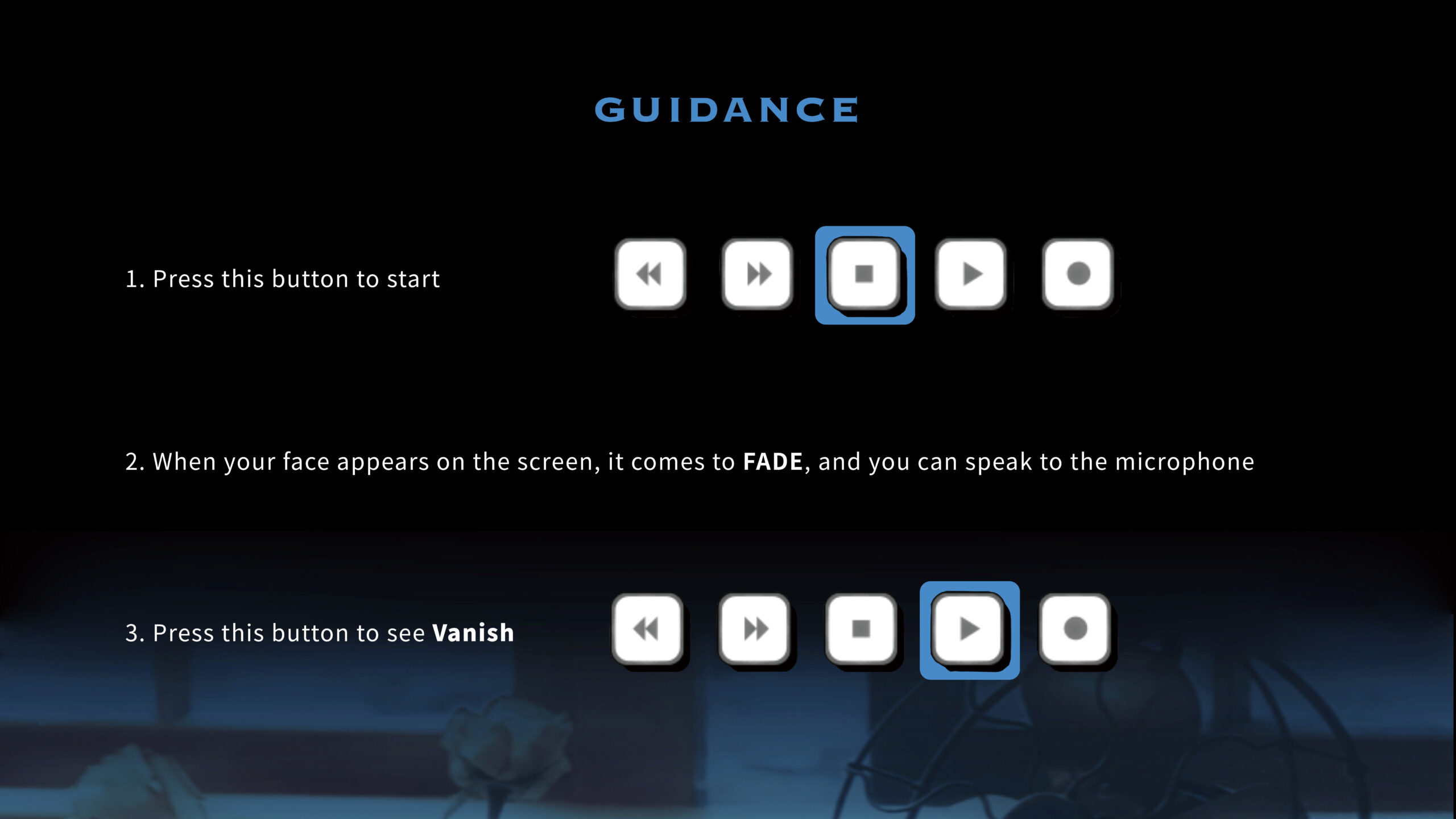

To guide the experiencer in switching screens and trying out different effects with the buttons, I designed a set of on-screen instructions that appear on the computer display and walk them through the process.


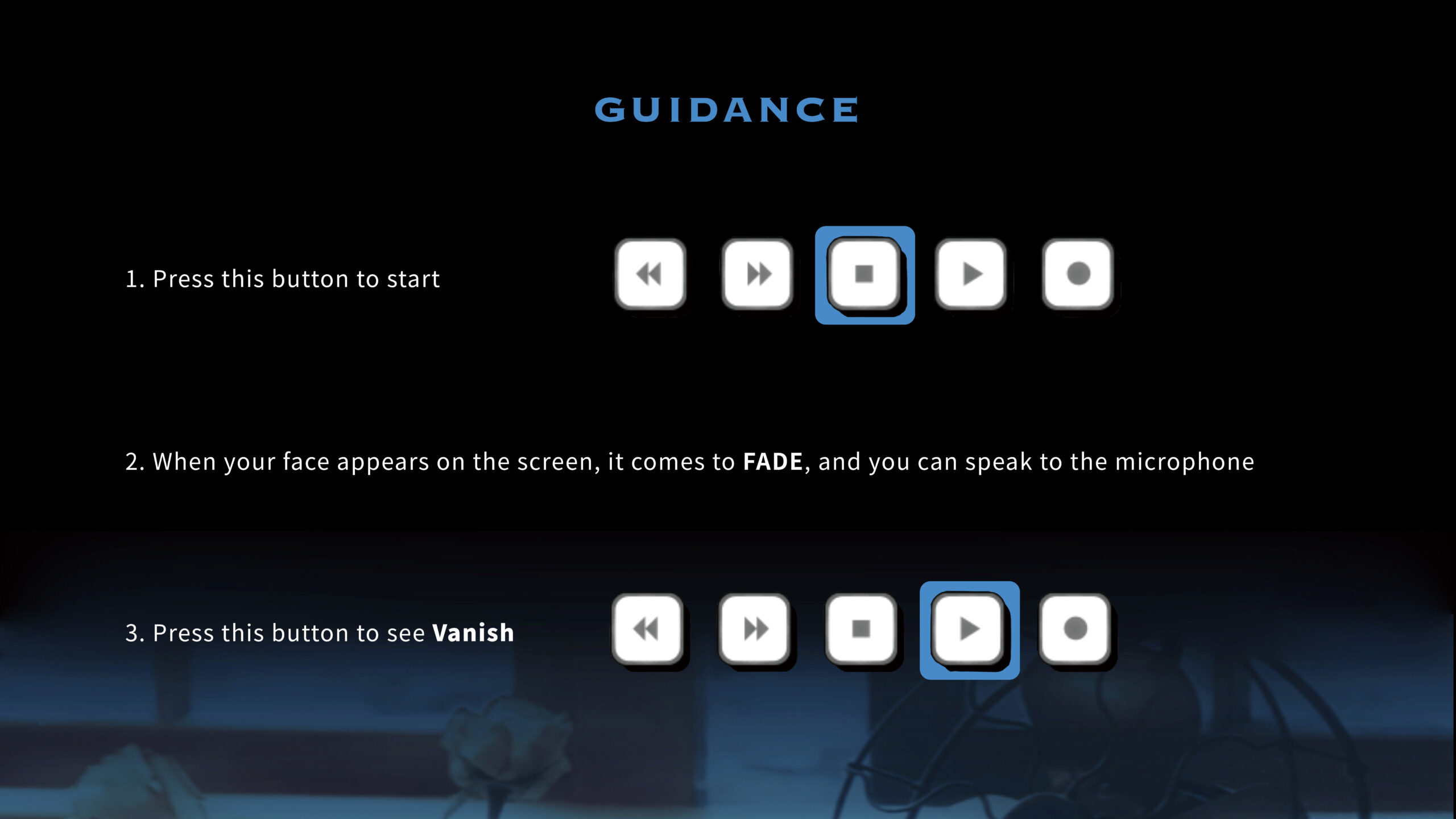

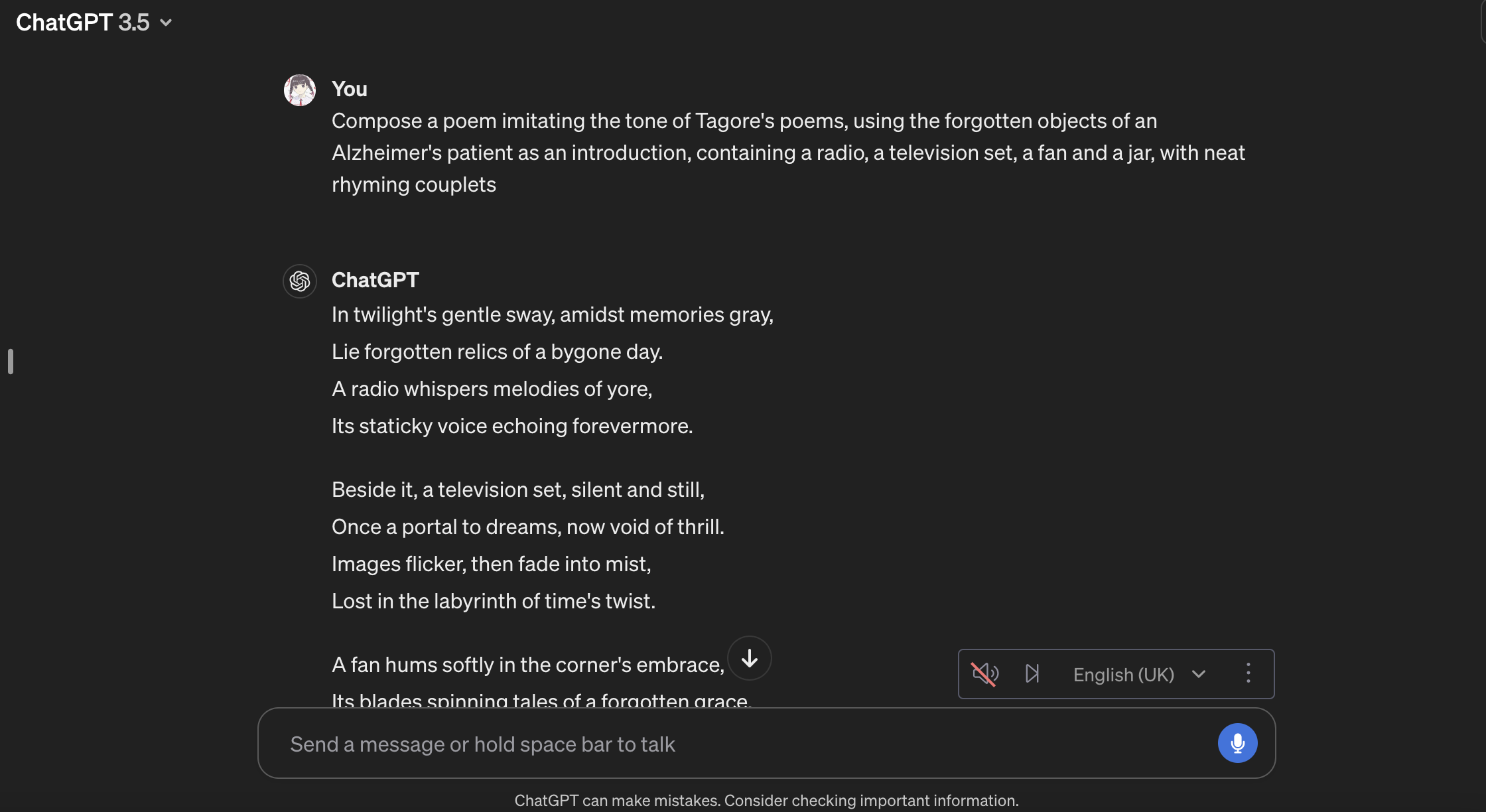

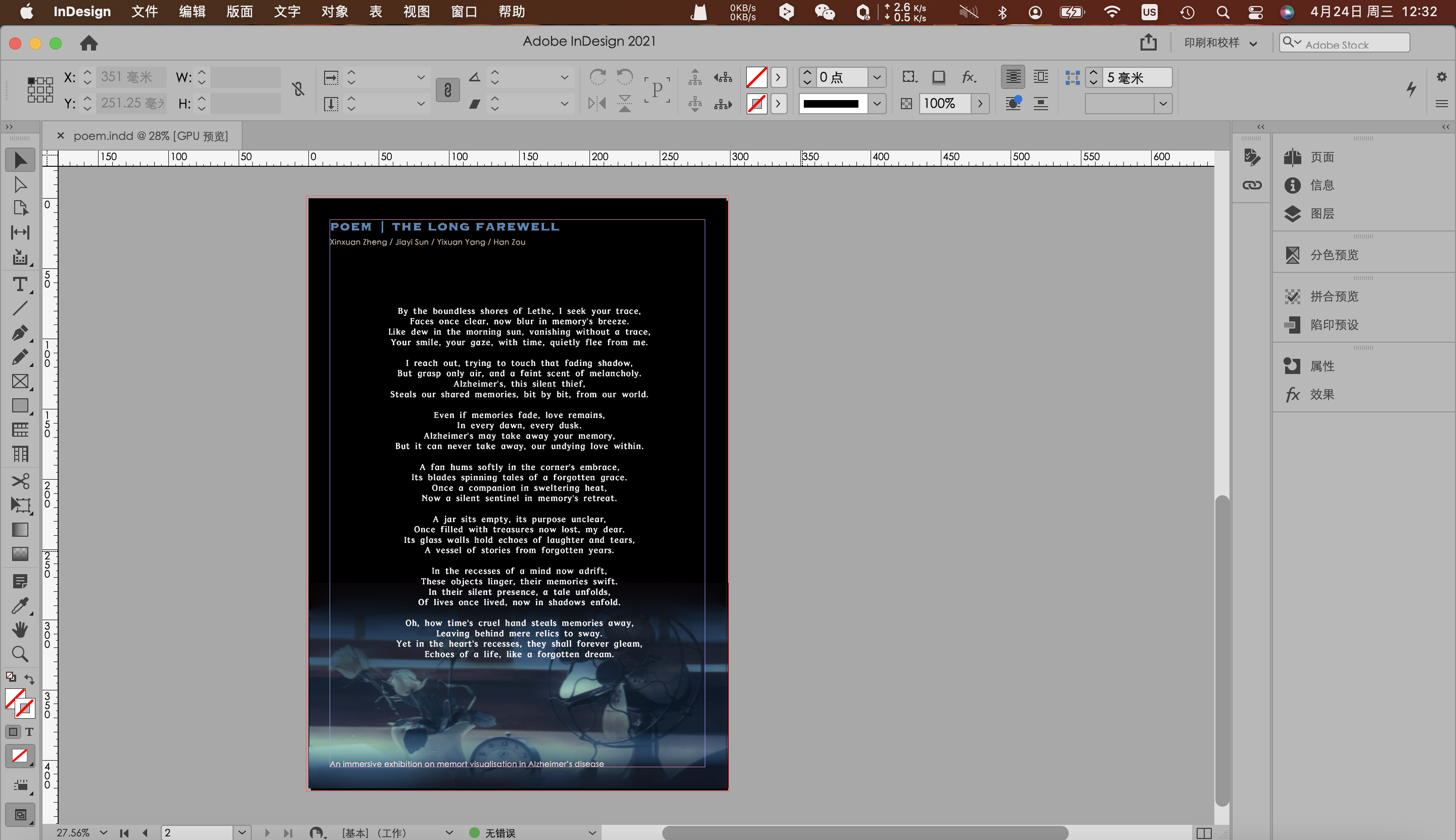

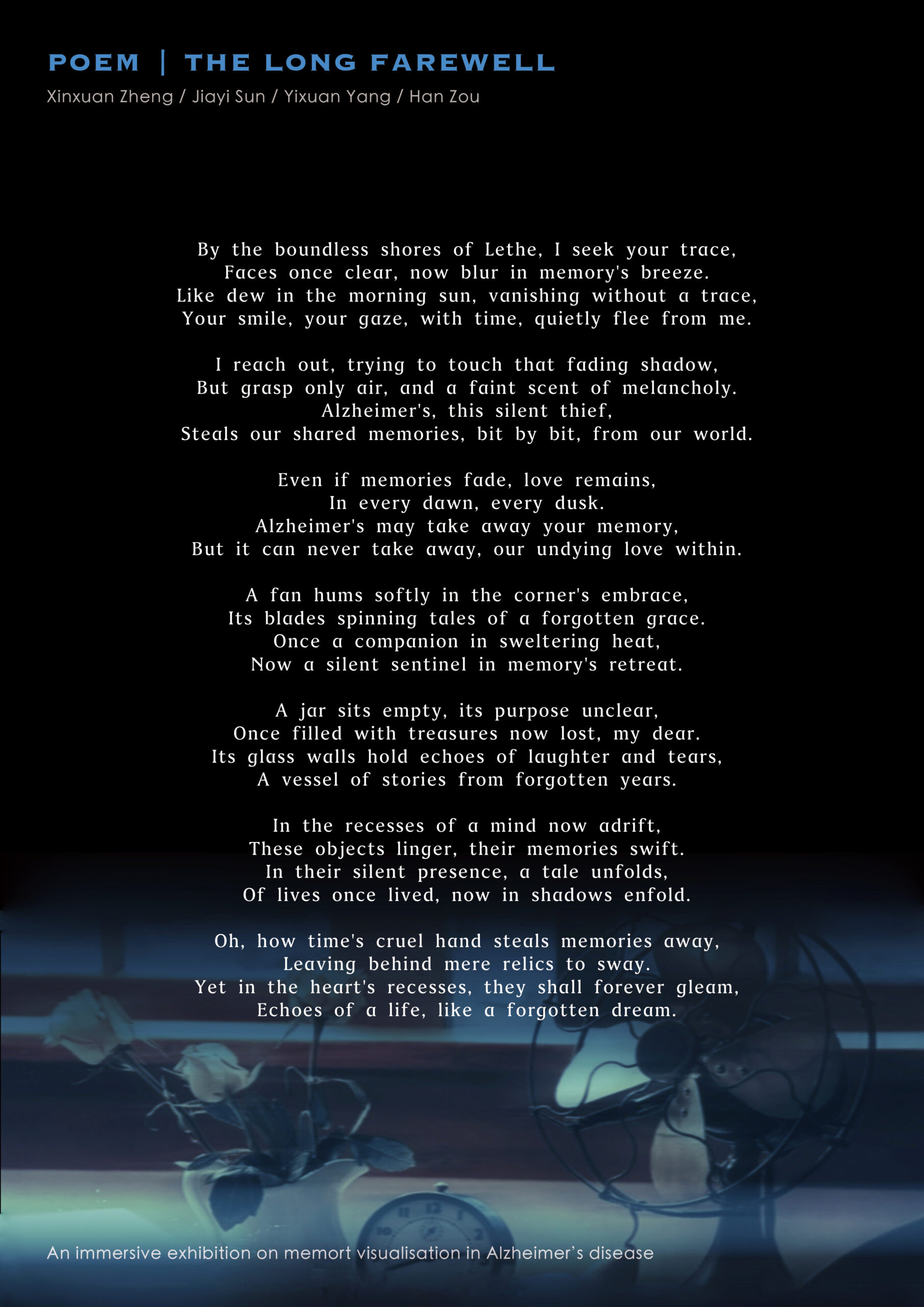

I used ChatGPT to help me write an English poem based on the Part 2 scenario. I hope experiencers can read this poem aloud in Part 3.

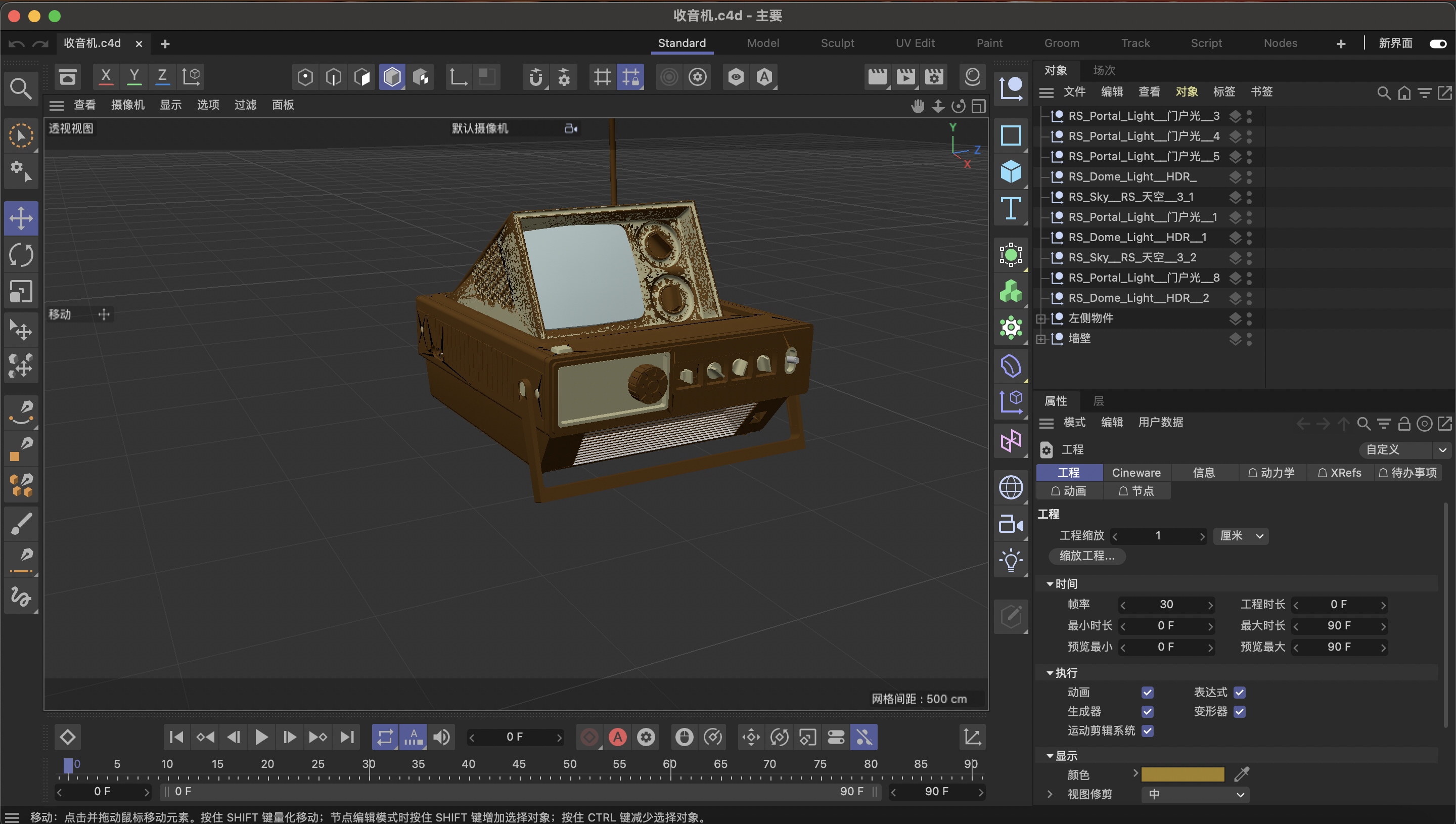

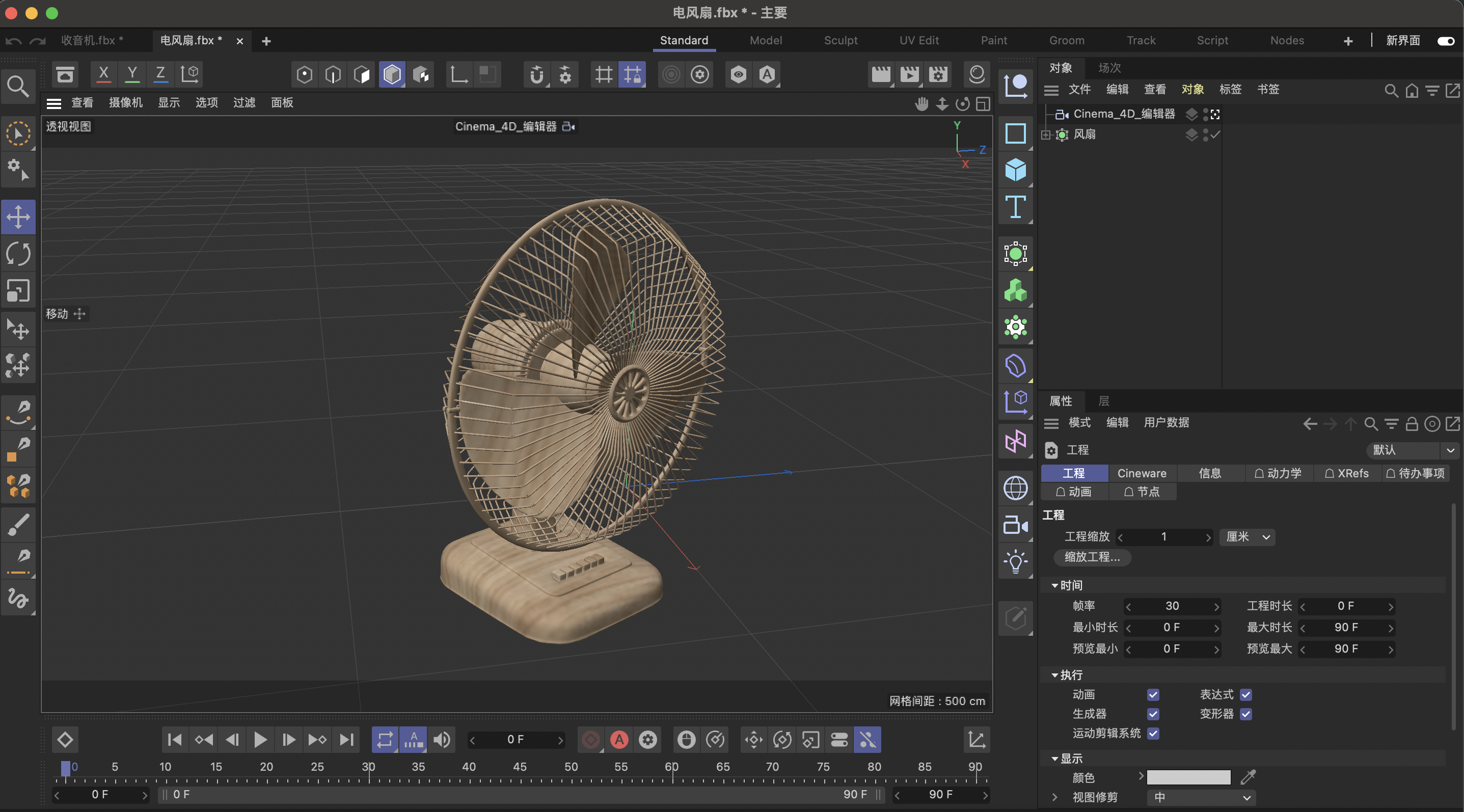

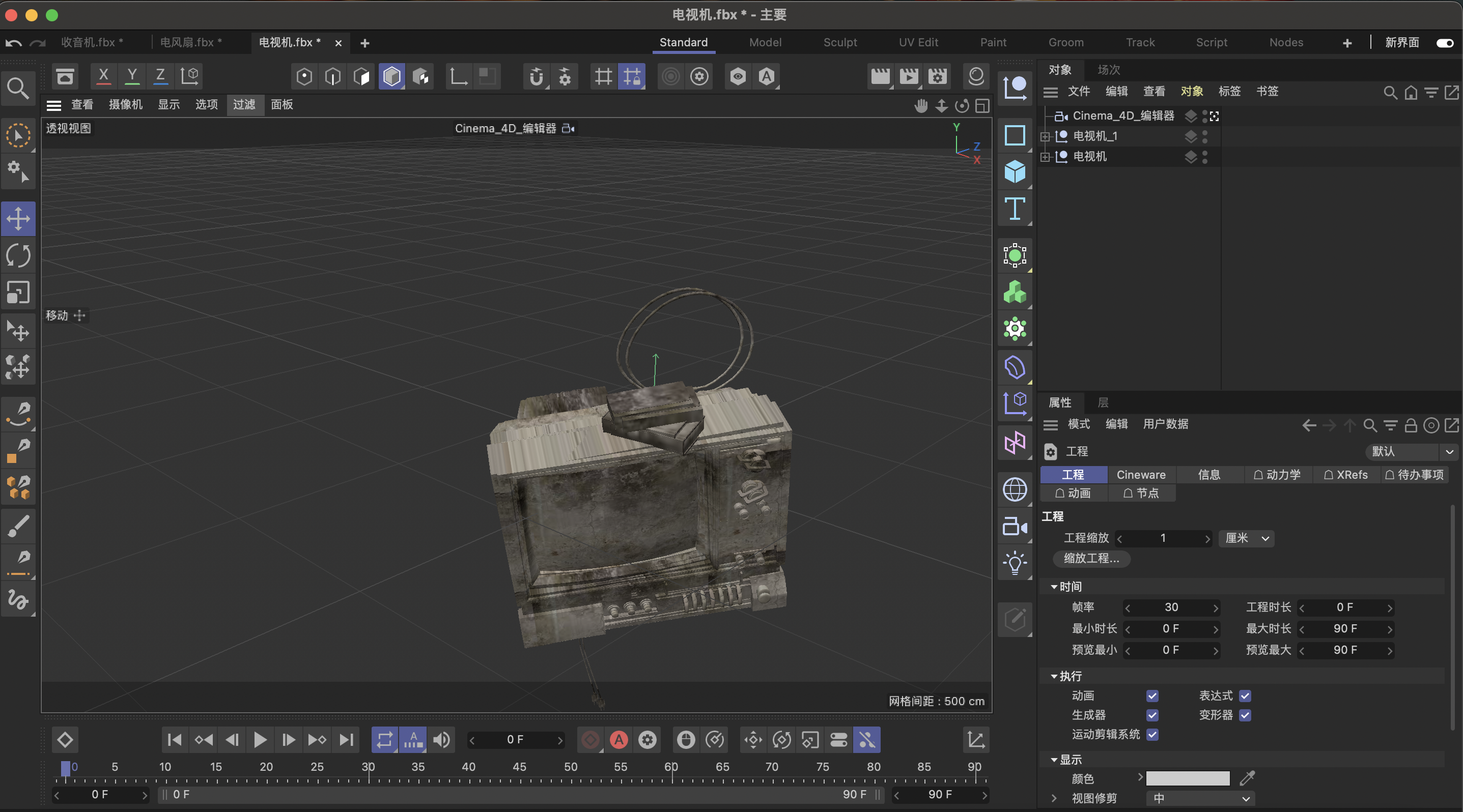

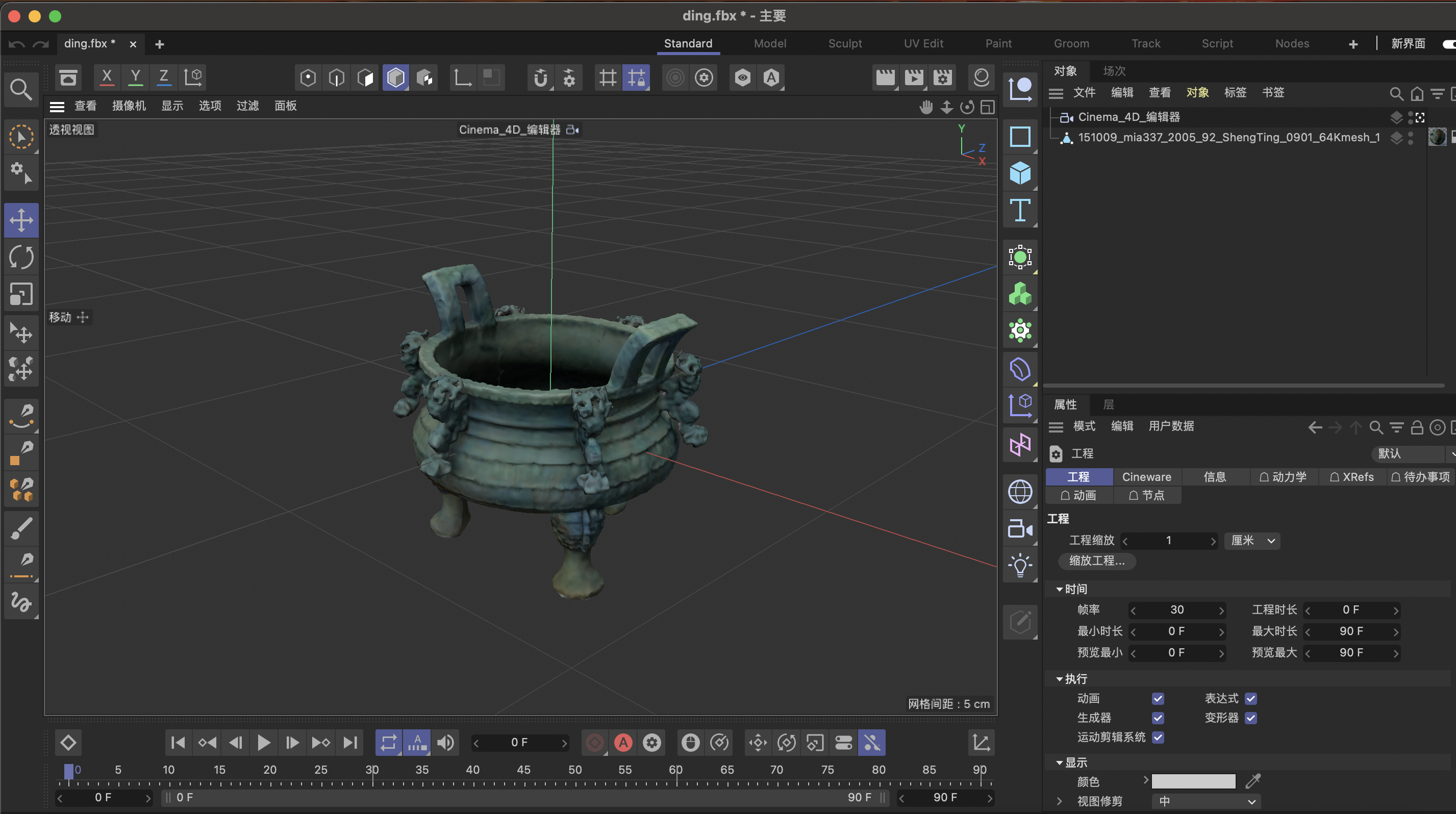

Using C4D, I modelled objects that elderly people around the world tend to forget: a fan, a television, a radio, and a tank. I made sure that they were all the same size and that each was given a different material.

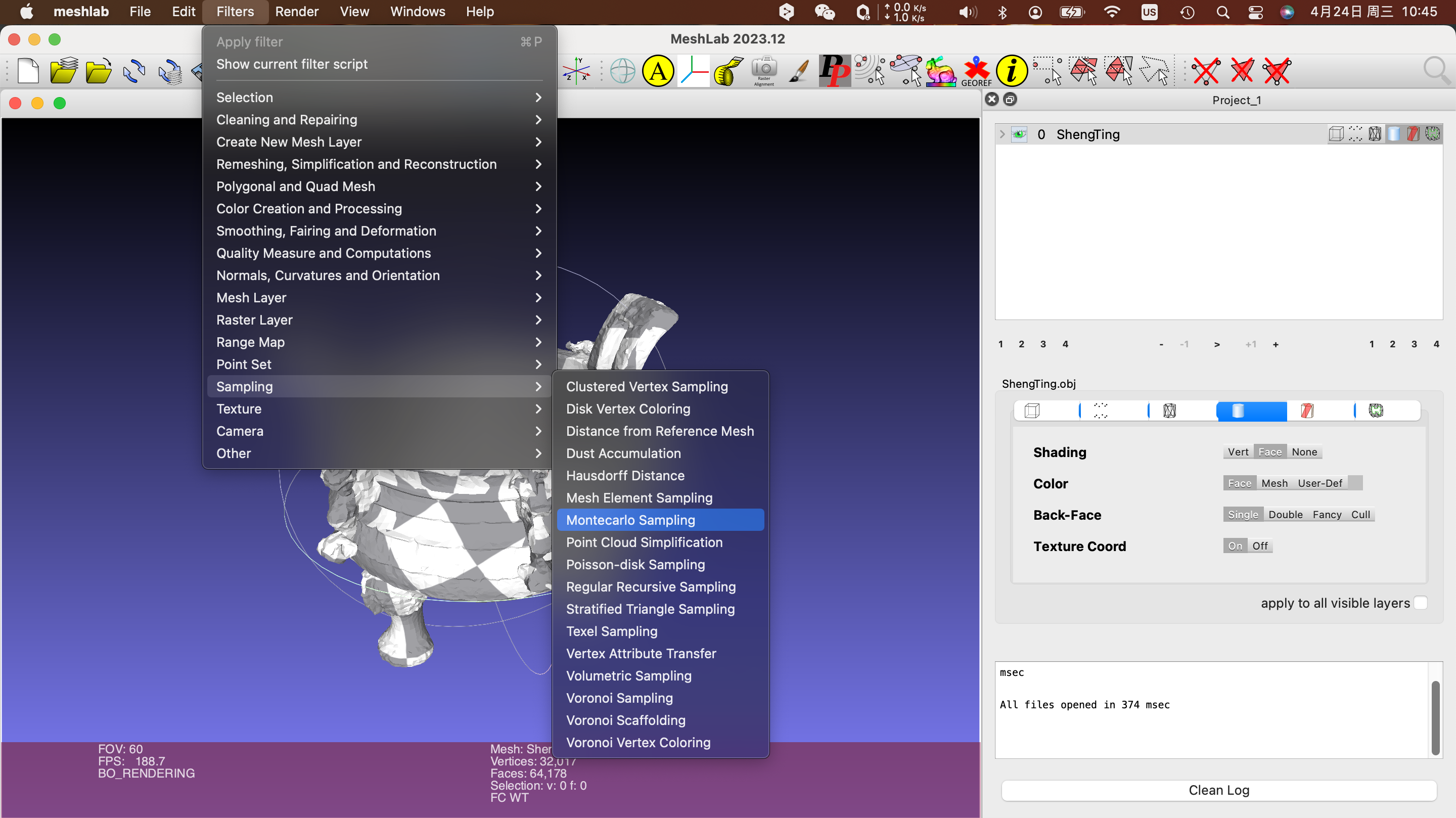

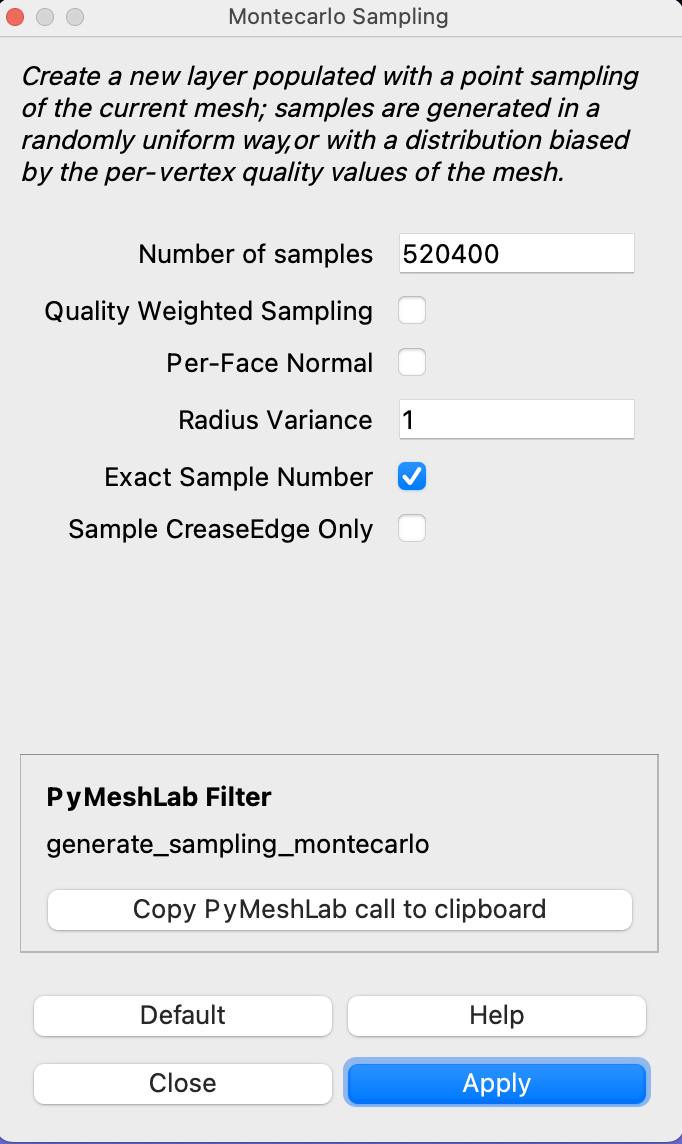

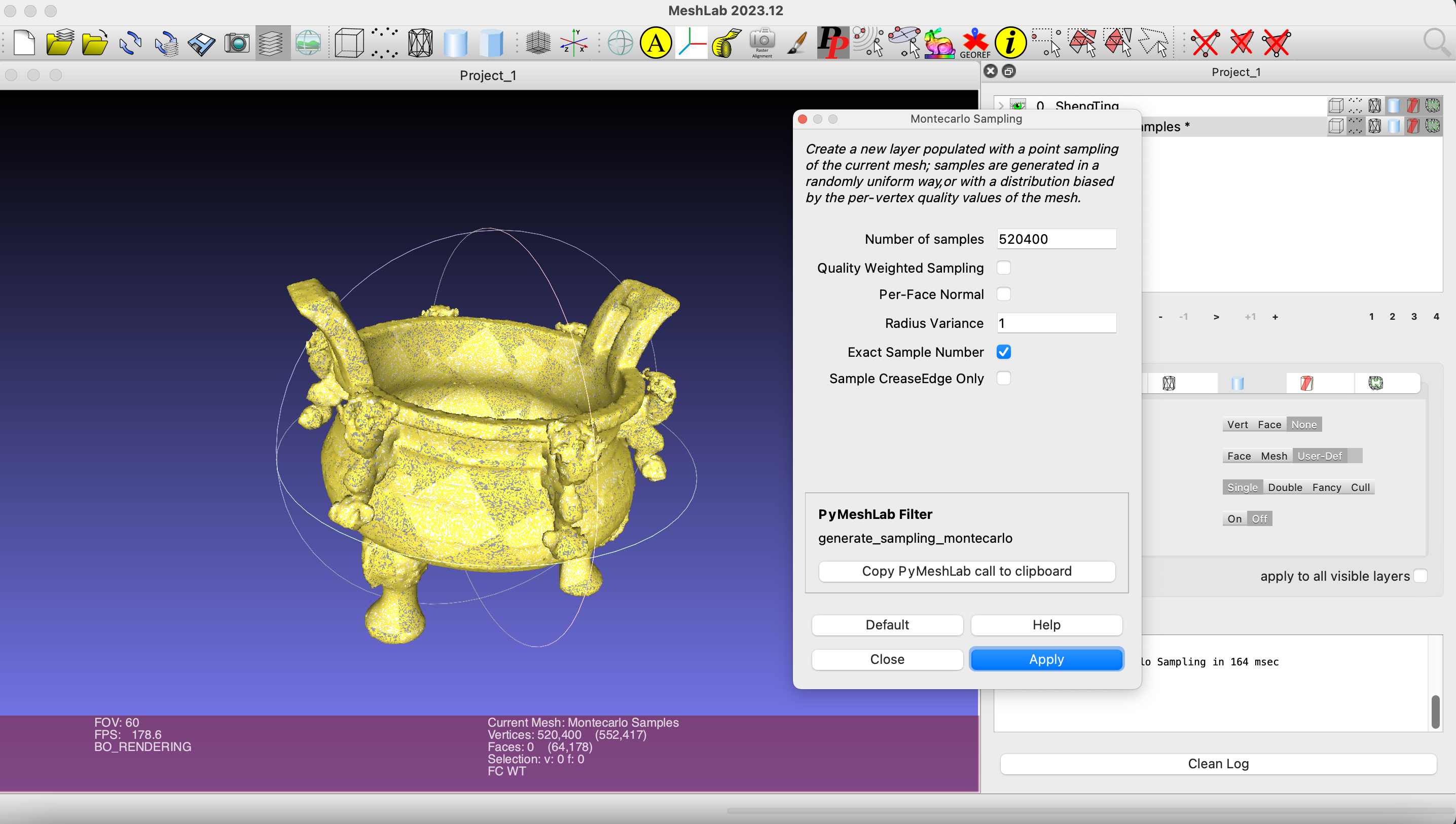

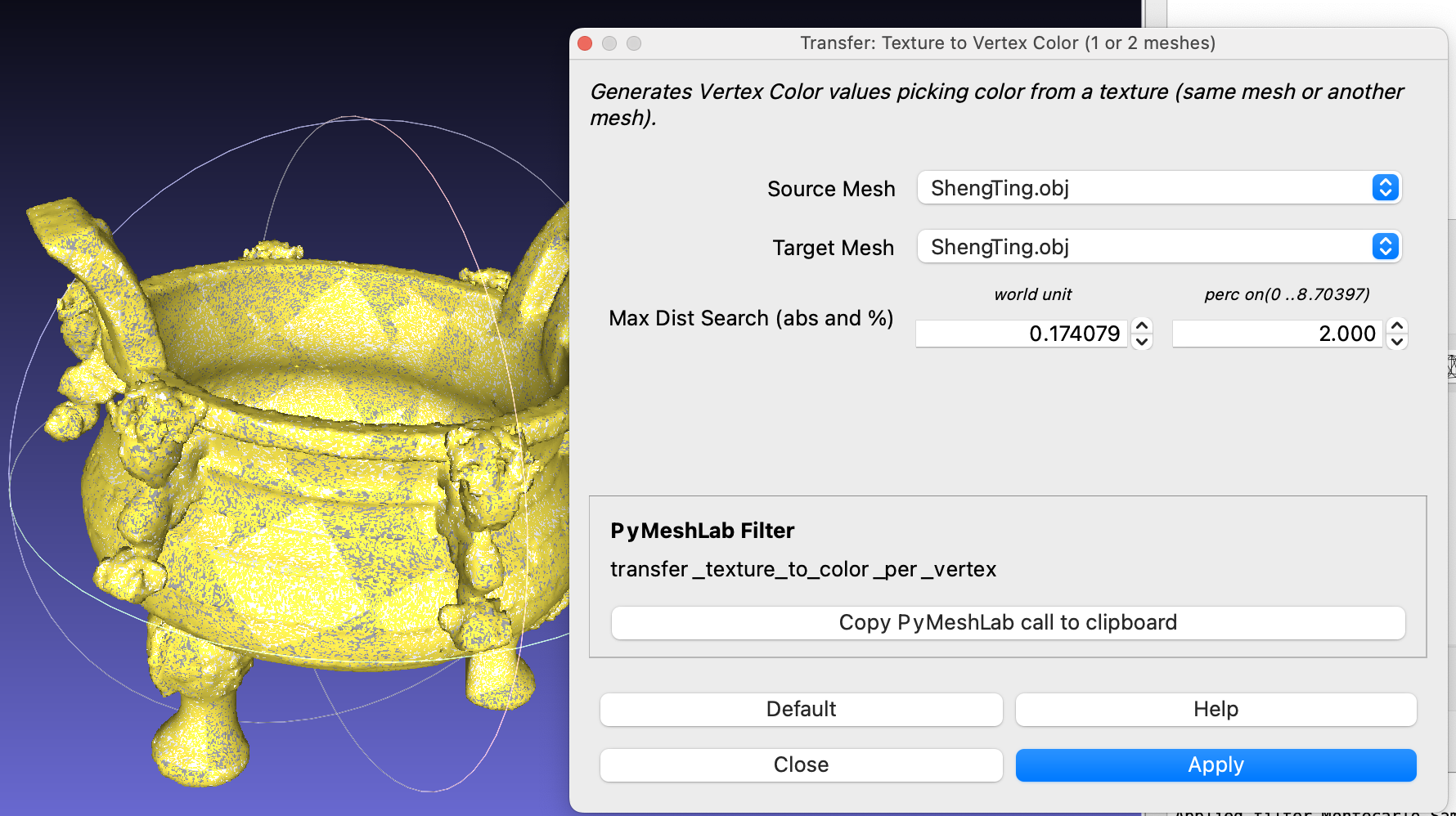

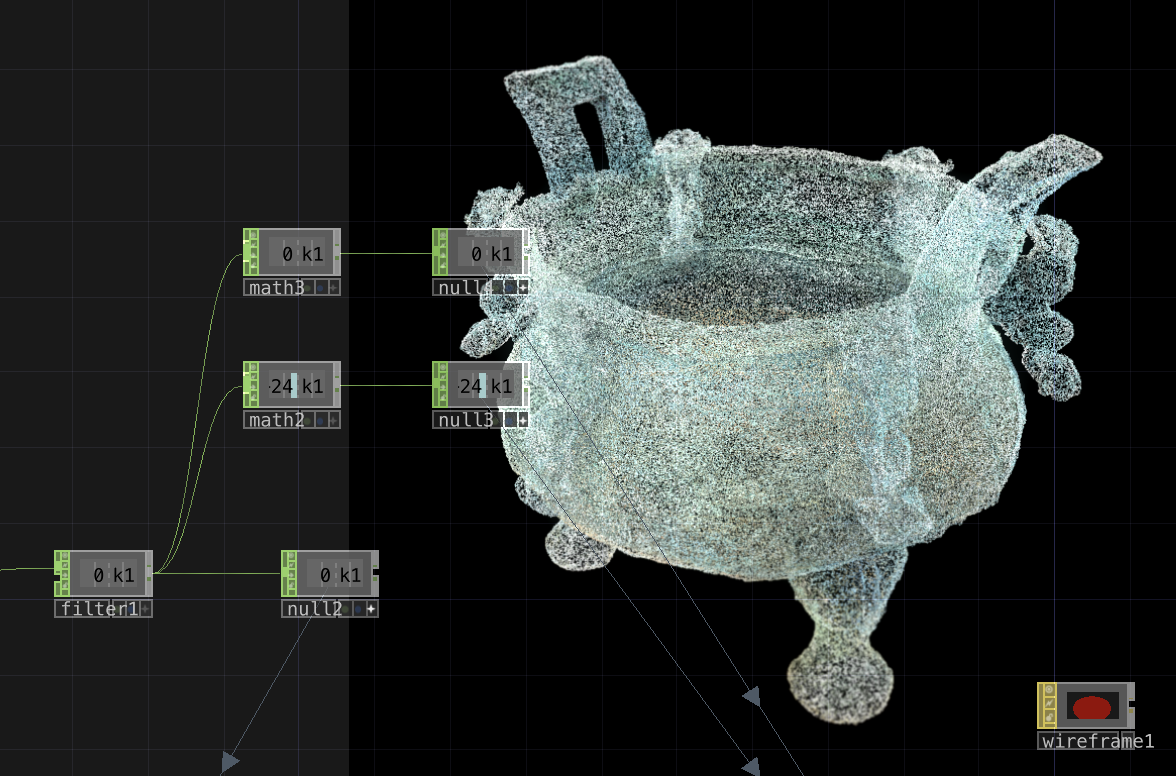

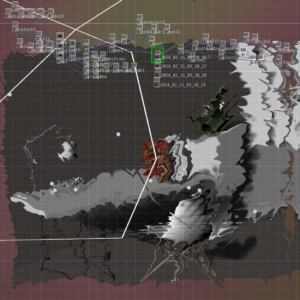

I added point cloud particles to the already built OBJ models in MeshLab so that they could change dynamically once imported into TouchDesigner.
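To illustrate what turning a model into point cloud particles involves, here is a minimal sketch in plain Python. It assumes the points are scattered at random over the surface of a triangle mesh, with larger triangles receiving more points. This is only one common approach and is not necessarily the filter I used in MeshLab; the function names `triangle_area` and `sample_surface` are made up for this example.

```python
import math
import random


def triangle_area(a, b, c):
    """Area of a 3D triangle: half the length of the cross product of two edges."""
    ab = [b[i] - a[i] for i in range(3)]
    ac = [c[i] - a[i] for i in range(3)]
    cross = (
        ab[1] * ac[2] - ab[2] * ac[1],
        ab[2] * ac[0] - ab[0] * ac[2],
        ab[0] * ac[1] - ab[1] * ac[0],
    )
    return 0.5 * math.sqrt(sum(x * x for x in cross))


def sample_surface(vertices, faces, count, seed=0):
    """Scatter `count` points over a triangle mesh (hypothetical helper).

    vertices: list of (x, y, z) tuples
    faces:    list of (i, j, k) vertex-index triples
    """
    rng = random.Random(seed)
    triangles = [(vertices[i], vertices[j], vertices[k]) for i, j, k in faces]
    # Larger triangles are picked more often so the density stays even.
    weights = [triangle_area(*tri) for tri in triangles]
    points = []
    for a, b, c in rng.choices(triangles, weights=weights, k=count):
        u, v = rng.random(), rng.random()
        if u + v > 1.0:
            # Fold the point back inside the triangle.
            u, v = 1.0 - u, 1.0 - v
        points.append(
            tuple(a[i] + u * (b[i] - a[i]) + v * (c[i] - a[i]) for i in range(3))
        )
    return points
```

The resulting list of points can then be treated as the "rest" positions of the particles before any animation is applied.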

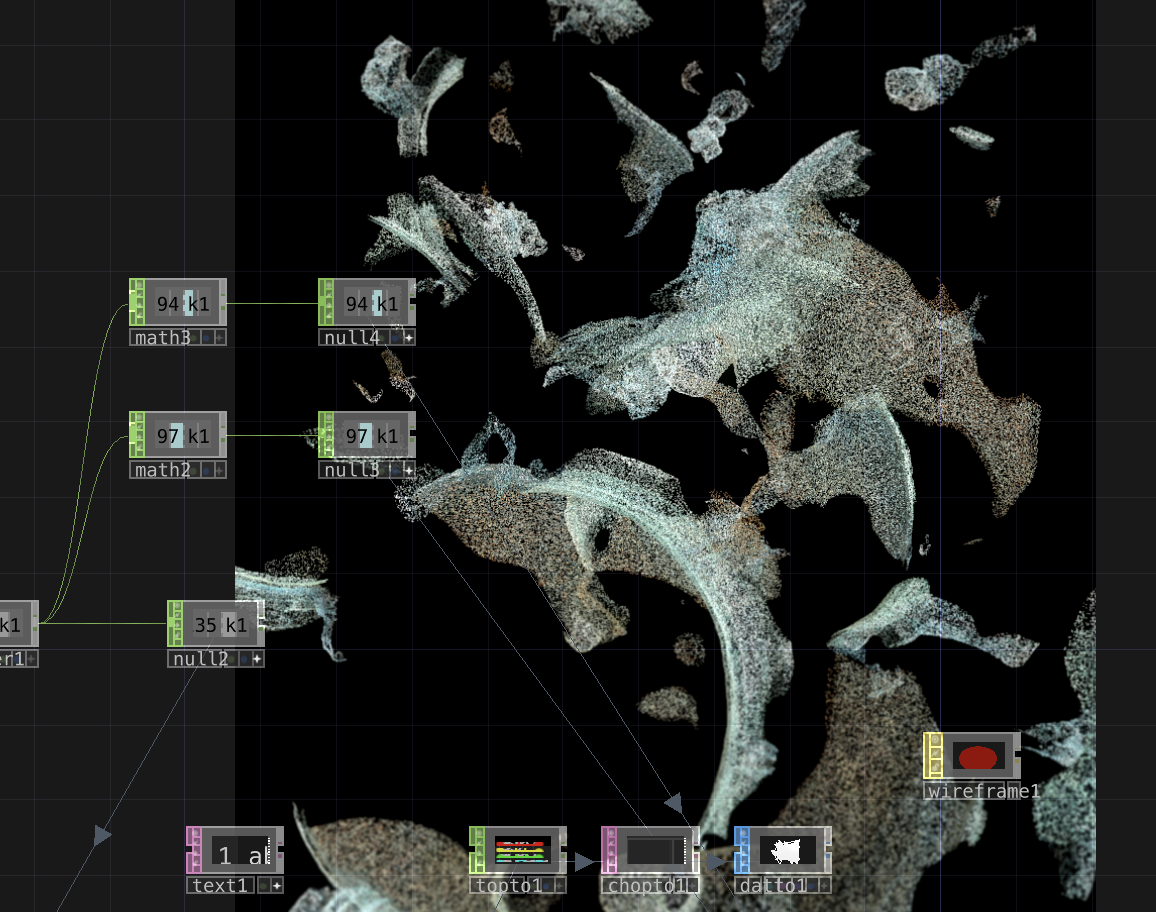

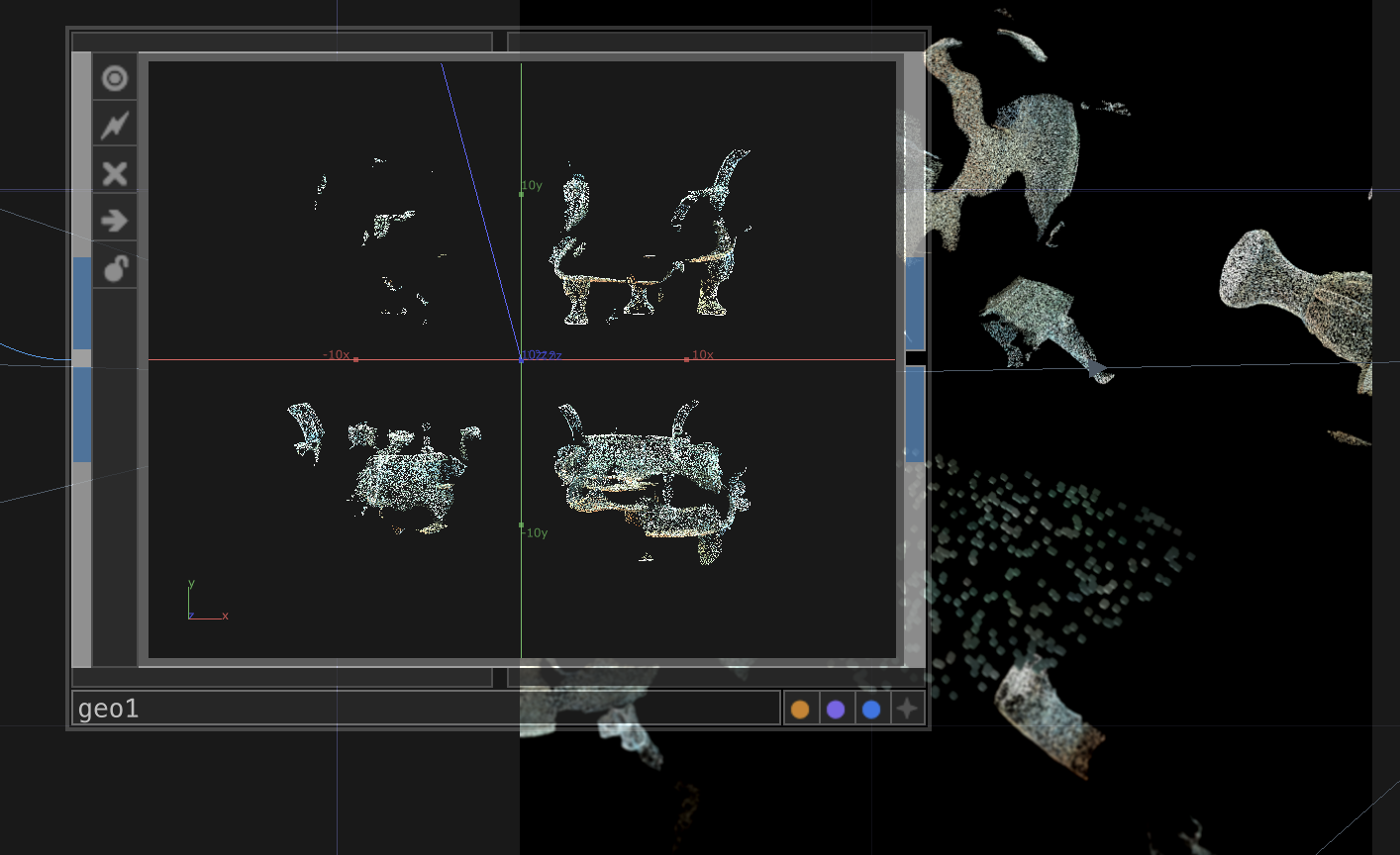

In TouchDesigner, I put each object on a timed loop of explosions and reassemblies to simulate the patient’s fragmented memory of the forgotten objects.
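The logic of such a loop can be sketched outside TouchDesigner in plain Python. This is an illustration under my own assumptions, not the actual network: each particle gets a fixed random direction, and a smooth periodic value between 0 (assembled) and 1 (fully exploded) pushes it along that direction. The names `explosion_amount`, `make_directions` and `displaced_points`, and the `period` and `strength` values, are all hypothetical.

```python
import math
import random


def explosion_amount(t, period=4.0):
    """Return 0.0 when assembled and 1.0 when fully exploded.

    The value repeats every `period` seconds and eases in and out,
    so the object breaks apart and then reassembles smoothly.
    """
    phase = (t % period) / period
    return 0.5 * (1.0 - math.cos(2.0 * math.pi * phase))


def make_directions(count, seed=0):
    """One fixed random unit vector per particle."""
    rng = random.Random(seed)
    directions = []
    while len(directions) < count:
        v = [rng.uniform(-1.0, 1.0) for _ in range(3)]
        length = math.sqrt(sum(x * x for x in v))
        # Reject vectors outside the unit ball (or nearly zero) so the
        # directions are spread evenly over the sphere.
        if 1e-6 < length <= 1.0:
            directions.append(tuple(x / length for x in v))
    return directions


def displaced_points(rest, directions, t, period=4.0, strength=2.0):
    """Particle positions at time t: rest position plus scaled direction."""
    k = strength * explosion_amount(t, period)
    return [
        tuple(p[i] + k * d[i] for i in range(3))
        for p, d in zip(rest, directions)
    ]
```

Because each particle keeps the same direction on every cycle, the object always reassembles into its original shape, which is what makes the loop read as a memory that keeps falling apart and returning.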

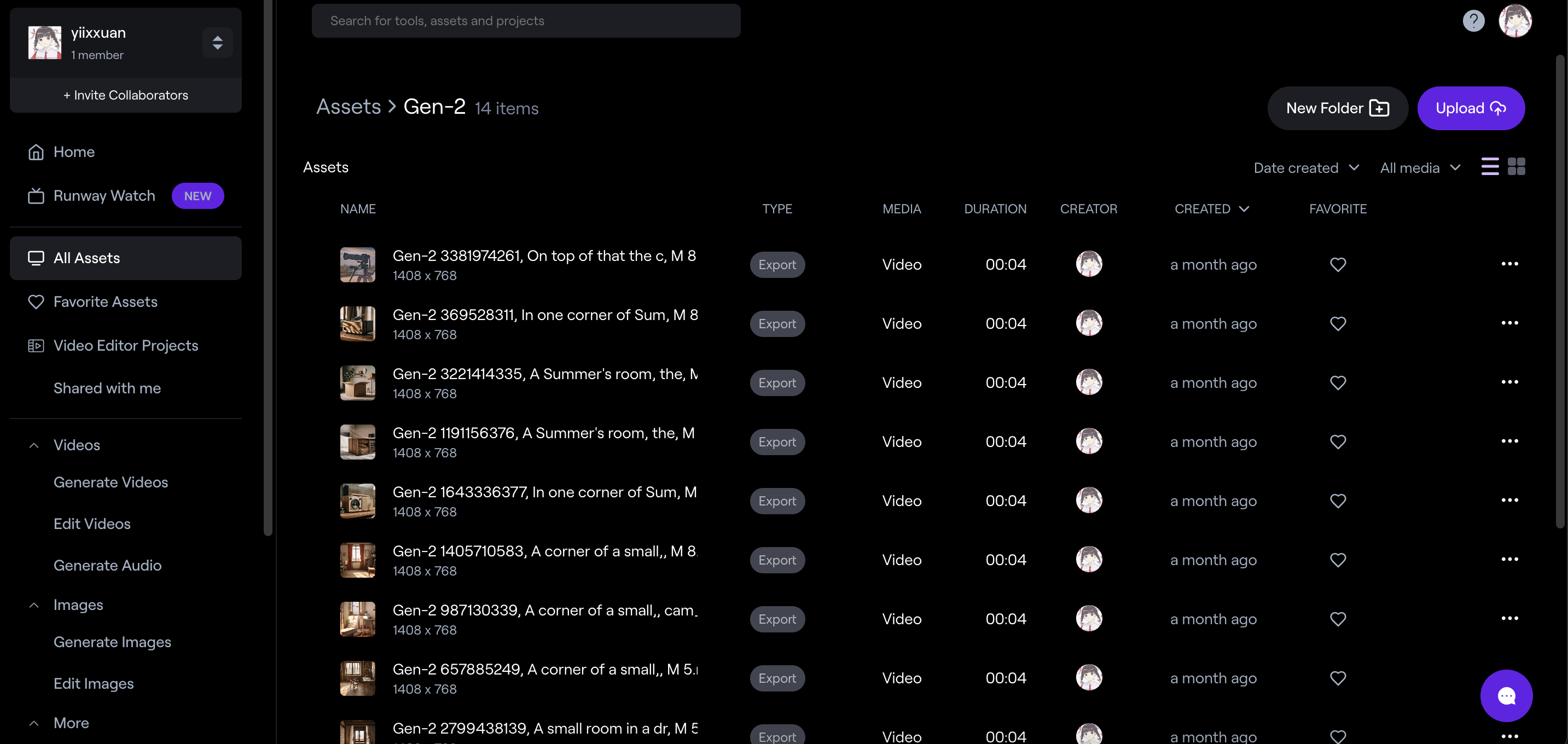

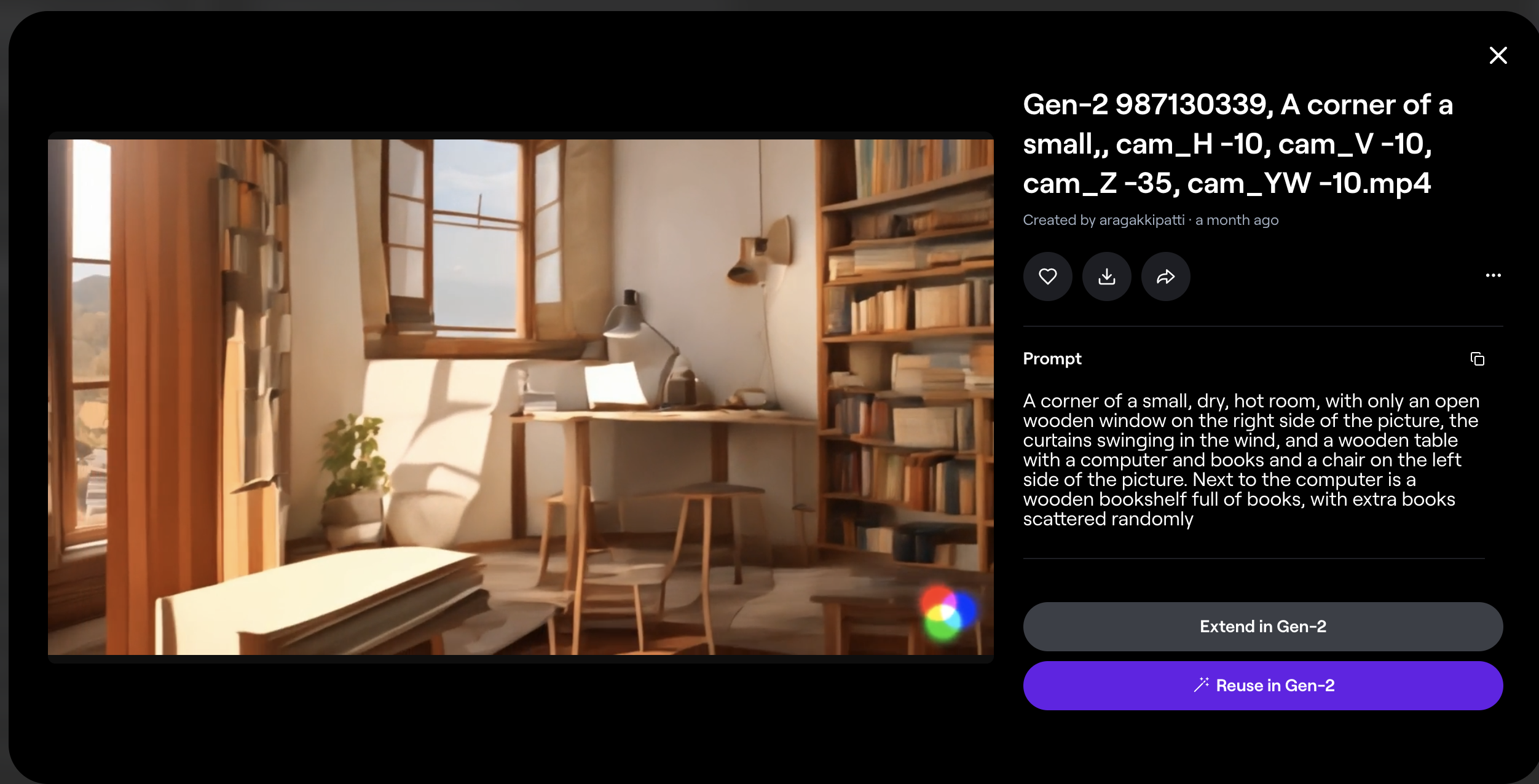

I used Runway to generate videos of the images of home that patients around the globe might hold in their minds, and interspersed these videos with the objects. The aim was to make the experience more immersive.

Main idea:

To simulate, from a first-person perspective, the world in the patient’s mind when they are searching for an object.

Week 7 Progress:

I used C4D to model and texture different objects, then used MeshLab to attach point cloud particles to the models, and finally used TouchDesigner to create the effect of the point cloud particles breaking apart and reconstructing.

I also tried different glitch effects and glitch sound effects to simulate the ambient sound of a patient looking for an object.

Follow-up ideas:

1. I would like to use AI to create a human voice that shouts from all around the video in surround sound, urging the patient to look for these items, in order to create an anxious soundscape.

2. I would also like to use TouchDesigner to build a background from particle transformations of a real scene and place it beneath these objects to simulate the effect of a real space.

The effect reference:

(0:34-0:38)

I hope to create the world as seen through the eyes of an Alzheimer’s patient. People with moderate Alzheimer’s disease tend to forget the object they are looking for, or suddenly forget their task while looking for it, which in turn creates emotional and psychological problems in the form of anxiety and a sense of distrust in the world.

Through my design, I therefore hope to present a first-person view of the condition of a patient with moderate Alzheimer’s and mimic the world as they see it, helping experiencers to feel the patient’s range of emotions first-hand.

Before starting this design, I did some research. I found that the patient’s state was associated with significant declines in overall cognition, verbal memory, language, and executive function, and that these associations were amplified by increased anxiety symptoms (Pietrzak RH, Lim YY, Neumeister A, et al., 2015). When I researched the world as seen from the patient’s own viewpoint, I found that Steven R. Sabat, drawing on his conversations with several men and women with Alzheimer’s, demonstrates how the powerlessness, embarrassment and stigmatization that sufferers endure lead to a loss of self-worth (Sabat S R, 2001).

In terms of technical realisation, I felt that the experience needed to be immersive: the experiencer should follow the rhythm of my design and the camera changes, and my intention was to create the anxiety of not being able to find the object within the allotted time. I achieved the visualisation by combining the patients’ first-person view from my research with TouchDesigner’s special effects to create a video. From the feedback, I also realised that my earlier version still required manually clicking in the background to switch scenes and did not connect to the other stages of the visualisation as a whole. So I first used TouchDesigner to create the video and audio effects for the different scenes, and then put them into the same container to connect the parts. This achieves scene switching without manual manipulation.
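The idea of switching scenes without manual input can be sketched as a simple timeline lookup. This is a plain Python illustration under my own assumptions, not the TouchDesigner setup itself: each scene has a fixed duration, and the elapsed time decides which scene is showing. The function name `active_scene`, the scene names and the durations are placeholders.

```python
def active_scene(scenes, t):
    """Return (scene_name, seconds_into_scene) for elapsed time t.

    scenes: list of (name, duration_in_seconds) pairs, played in order.
    The whole sequence loops once the last scene has finished.
    """
    total = sum(duration for _, duration in scenes)
    t = t % total
    for name, duration in scenes:
        if t < duration:
            return name, t
        t -= duration
    # Only reachable through floating-point rounding at the very end.
    return scenes[-1][0], scenes[-1][1]


# Placeholder scene names and durations, for illustration only.
scenes = [("part1", 10.0), ("part2", 20.0), ("part3", 5.0)]
print(active_scene(scenes, 12.0))
```

With a lookup like this driven by a clock, the experiencer is carried from one part to the next at a fixed pace, which supports the feeling of running out of time.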

Examples:

(From 1:42-2:23)

Reference List:

Pietrzak RH, Lim YY, Neumeister A, et al. (2015). Amyloid-β, Anxiety, and Cognitive Decline in Preclinical Alzheimer Disease: A Multicenter, Prospective Cohort Study. JAMA Psychiatry, 72(3):284–291. Available at: doi:10.1001/jamapsychiatry.2014.2476

Sabat S R (2001). The experience of Alzheimer’s disease: Life through a tangled veil. Oxford: Blackwell. Available at: https://d1wqtxts1xzle7.cloudfront.net/94398763/the-experience-of-alzheimer039s-disease-life-through-a-tangled-veil-by-steven-r-sabat-0631216669-libre.pdf?1668688272=&response-content-disposition=inline%3B+filename%3DThe_experience_of_Alzheimers_disease_lif.pdf&Expires=1709753257&Signature=Tpjf24b4BxYWS9zb7NbxEujSgm-rHkQmUDbewGaVB3bviIfD1XeYNHLU~JrO4CCHx1IrbJ4f9bbw7qQzdSXlyVtSrbTq9jJlKGoMYeJKIkpG2GoXZcqFA22lLXb8oRC44f~8H0ROA0VF73fgtZLWmLbXJankpIwhi0EOHhZqz7PySMfMkZQuJTEKP5qCt8ffkmkg3tUerr6xQgb8w~JAwJhyJekG9ha5N5oy34HqEKnJexMvLvmvOikLMOhpWi9fqjt-1TtENBdo3LoUwUQjAA1HDnTbkaMTqkqQspsbeH6ccDMNYACDxKIviqnsfqp3FG5KnY6zx5cSNQau9jFpww__&Key-Pair-Id=APKAJLOHF5GGSLRBV4ZA

Project Introduction

This project researches and photographs the historical morphological evolution of existing marine life (e.g. jellyfish) and collects data on climate change and marine pollution. AI models then learn from this material and speculate on the future ecosystems of the UK in the context of global warming and marine pollution, creating new forms of future creatures that echo the creatures’ distress signals. The outcome is an interactive holographic installation of AI-generated creatures. By modelling environmental factors and the potential adaptations of species, we can gain insight into the possible ecological consequences of global warming and the emergence of new life forms, providing a unique perspective on how ecosystems may evolve in a future where these issues continue to worsen.

Process Idea

Give the AI historical data on UK marine life (at 50-year intervals), such as historical seawater temperatures, pollution levels, pH changes and image changes, and let the AI estimate future changes in biomorphology.

Detect differences in the sounds of organisms under different ocean data, and let the AI mimic and predict them.

Finally, we collect the morphology and sounds of the future creatures generated by the AI, rework the images and sounds in software such as TouchDesigner, and design an interactive holographic device with Arduino or Kinect, so that the user can observe how the future creatures react to ocean environments from different years through touching, waving, talking, and other interactions.