Preface

Once upon a time, Edinburgh was a bustling city filled with people and buildings. But after a catastrophic event, the city was abandoned, and nature began to reclaim its land. Years went by, and Edinburgh transformed into a lush, green jungle. The streets were overgrown with vines and tall trees, and the buildings were slowly crumbling away. But amidst the chaos of nature’s takeover, a new form of life emerged. Plants, once relegated to the parks and gardens of Edinburgh, now thrived in every corner of the city. Giant flowers bloomed in the ruins of buildings, and trees reached towards the sky, creating a lush canopy above.

Animals, too, had returned to the city. Deer and foxes roamed the streets, and birds nested in the trees. The sound of their calls filled the air, a symphony of life that had been missing from Edinburgh for so long. But those who ventured into the city had to be careful, for the plants had grown wild and unpredictable. Some had evolved to defend themselves, and their thorns could prove deadly. But for those brave enough to venture into the heart of Edinburgh, they were rewarded with stunning sights of nature’s beauty. And so, the city of Edinburgh became a place of mystery and wonder, a testament to the power of nature and the resilience of life. The ruins of the past served as a reminder of what once was, while the thriving jungle was a symbol of hope for the future (ChatGPT, 2023).

Introduction

In our Digital Media Studio project, we embarked on a captivating journey to investigate the realm of potential plant mutations and their prospective transformations in the future. Our team collaborated with a range of AI tools, such as ChatGPT, Midjourney, and DALL-E, to generate a series of enthralling sequences that offer unique insights into the possible evolutionary pathways of various plant species.

At the heart of this project lies the core theme of “Process,” which emphasizes the significance of human-AI collaboration in the creative journey. By leveraging the power of these cutting-edge tools, our team was able to explore and expand upon the intricate possibilities that lie within the plant kingdom. This submission highlights the various steps undertaken to bring these extraordinary visions to life, showcasing the seamless integration of human intuition and artificial intelligence.

The project is showcased across a diverse range of media, reflecting our commitment to innovation and to an immersive experience for our audience. By incorporating projectors, tablets, Arduino-based systems, and Looking Glass panels, we have harnessed the potential of these emerging technologies and emphasized interactivity to create an engaging and memorable experience for our visitors. The combination of hands-on learning opportunities and interactive components encourages attendees to actively participate in the exploration of plant mutations and their potential future developments. This approach not only deepens their understanding of the subject but also sparks curiosity and fosters a greater appreciation for the complexities and beauty of the natural world.

Our process

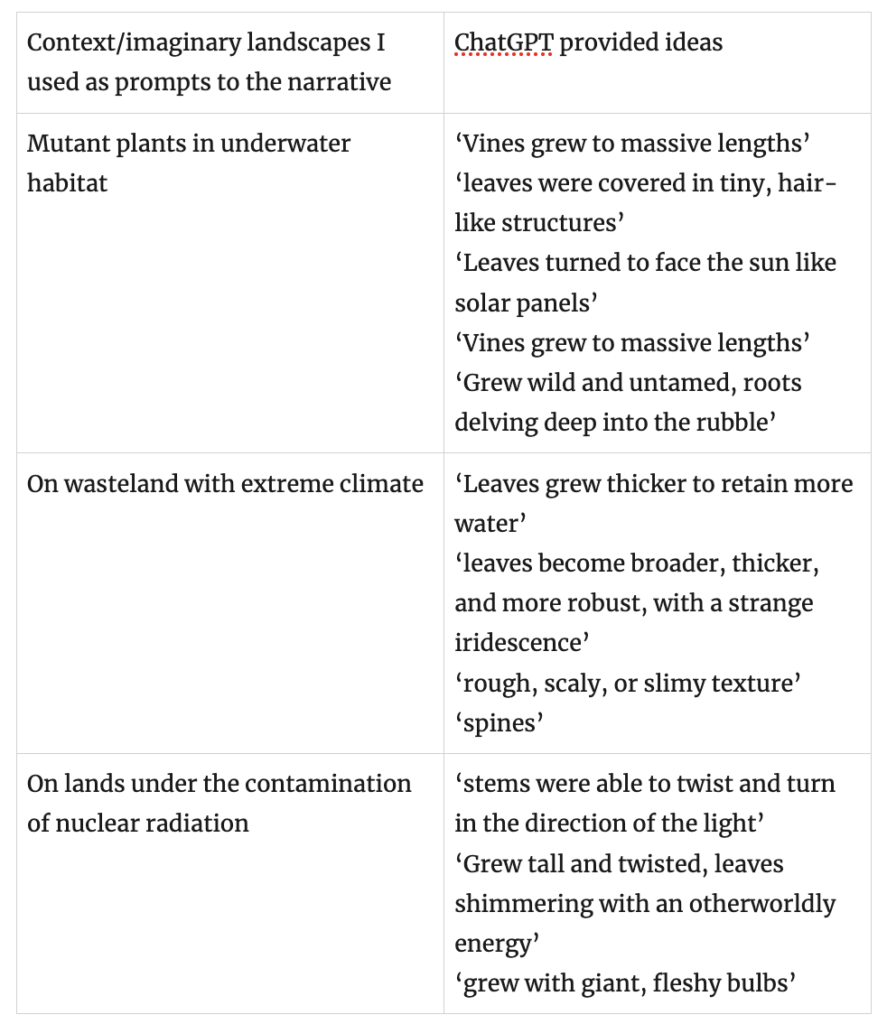

Our project began with an in-depth engagement with the language model ChatGPT, exploring how plant species might evolve and undergo genetic mutations under a diverse range of environmental conditions. We sought insights into the complex adaptive mechanisms that plants may employ to survive and prosper in the face of ever-changing ecosystems and environmental pressures.

Following the creation of a compelling narrative with the help of ChatGPT, our team delved into the exciting process of visualizing and presenting the plant mutations in the most engaging and thought-provoking manner. We aimed to capture the essence of the story and the unique characteristics of the mutated plants through various visual concepts and media.

After carefully considering the numerous artistic styles and media options, we narrowed down our selection to several distinct methods of presentation, each chosen to best encapsulate the plant mutations and the narrative that accompanies them:

- Projector

- Tablets

- Looking Glass

- TV

Software and platforms:

- TouchDesigner

- Max/MSP

- Unity

Final materials

AI Sequences and interactivity setup

Further Reflection

Building on the relationship between people and the environment that ChatGPT outlined, other members of our team explored the theme from another perspective, using a flower as a medium to reflect on the relationship between people and the environment.

Background

Plant selection

Output direction

Through a series of interactive exhibits and activities, the exhibition aims to demonstrate the ways in which humans affect plants and to promote a greater sense of responsibility for plant care and conservation.

Research process

Sound collection stage: (Chen Boya/Wu Yingxin/Liang Jiaojiao/Li Weichen)

In the early stage of design, our team rented sound collection equipment, went out to Dean Village and around Edinburgh to collect sound materials, and used the sound materials in the sound design part.

Devices:

- AAA Rechargeable Batteries

- Zoom - H6

- Zoom - H6 Accessory Pack

- 3.5mm Male to 1/4" Female Jack Adapter - 22698

- Beyerdynamic - DT 770 PRO 250 OHMS - 6912

- Beyerdynamic - DT 770 PRO 250 OHMS - 34224

While exploring, we observed how different plants grow in their natural environment and used the sound collection equipment to capture the sounds of different plants and surroundings. Everything has a sound, from the wind, water, birdsong, and rubbing tree branches we recorded first to vehicles and buildings. Animate or inanimate, all of them make their own voices, and sound is one of the important senses through which we perceive the world.

In this process, our team members gained a lot of new knowledge, such as how to collect sound. At the same time, recording natural sound led us to see nature afresh and to keep thinking about the connection between people and nature.

For further details, please see the sound engineer's personal report.

Interactive Exploration Stage: (Chen Boya/Liang Jiaojiao/Wu Yingxin)

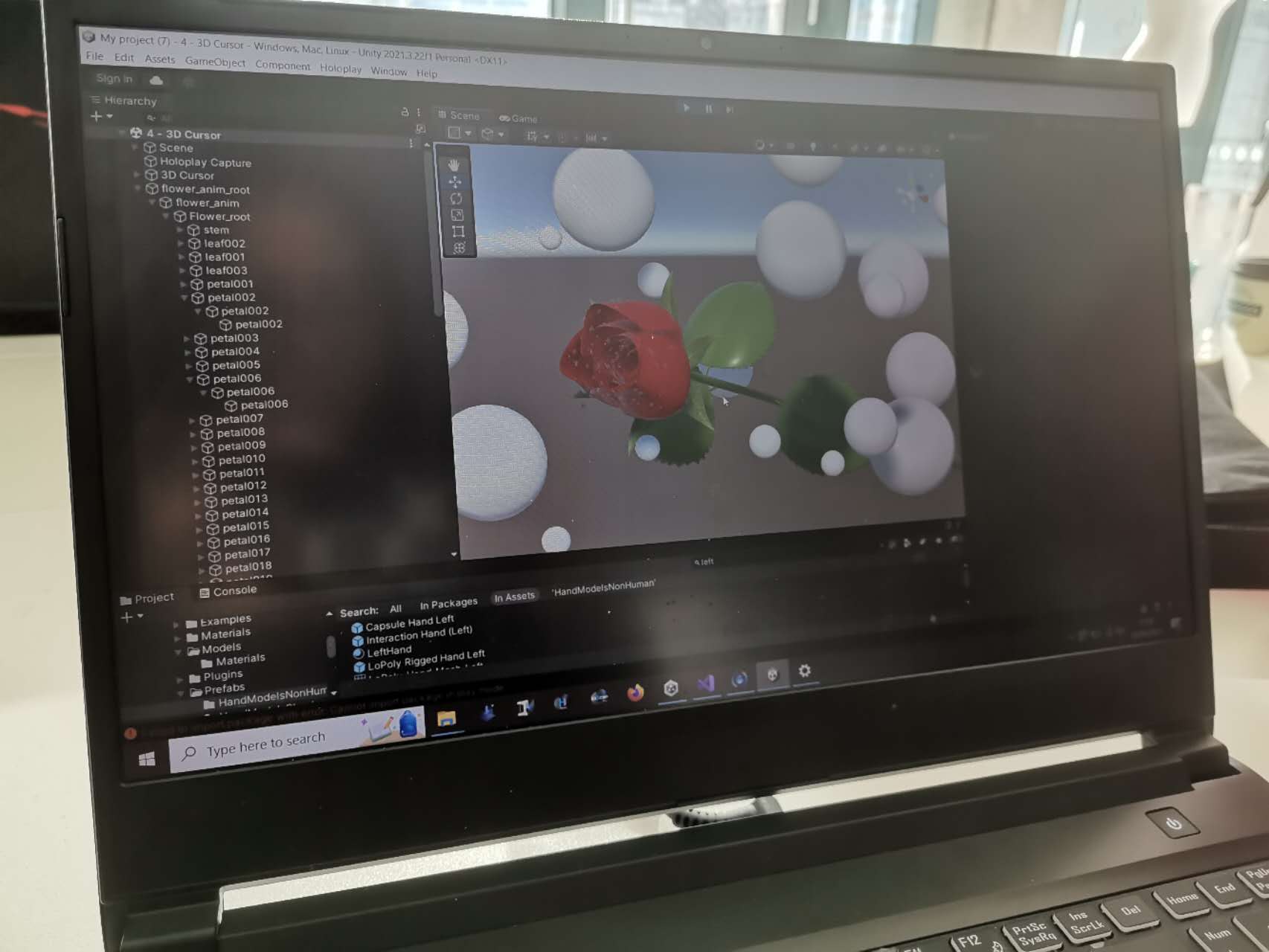

Devices: Looking Glass, Leap Motion Controller, performance laptop

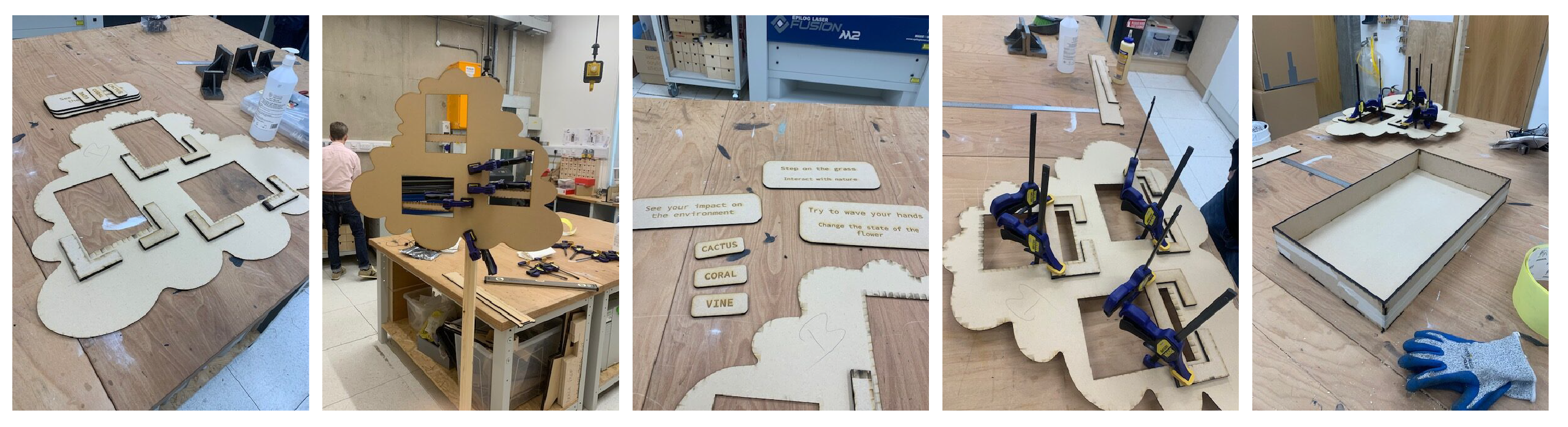

Materials and production: (Chen Boya/Wu Yingxin/Liang Jiaojiao/Li Weichen/Dominik)

Visualization (Yingxin Wu)

Max/MSP: I had taken a course on Max that gave me a basic understanding of how it works, and our professor for this course also taught Max/MSP, so I initially planned to use Max.

The visualization went through countless iterations. This report mainly covers the parts that were modified after the pre.

Then I realized that it is difficult to build a model purely through programming when the result is not visual enough, especially after our teacher suggested that we use a single shared element, such as a flower, to connect our logic and make the exhibition clearer.

So how to create a flower?

I referred to the following video:

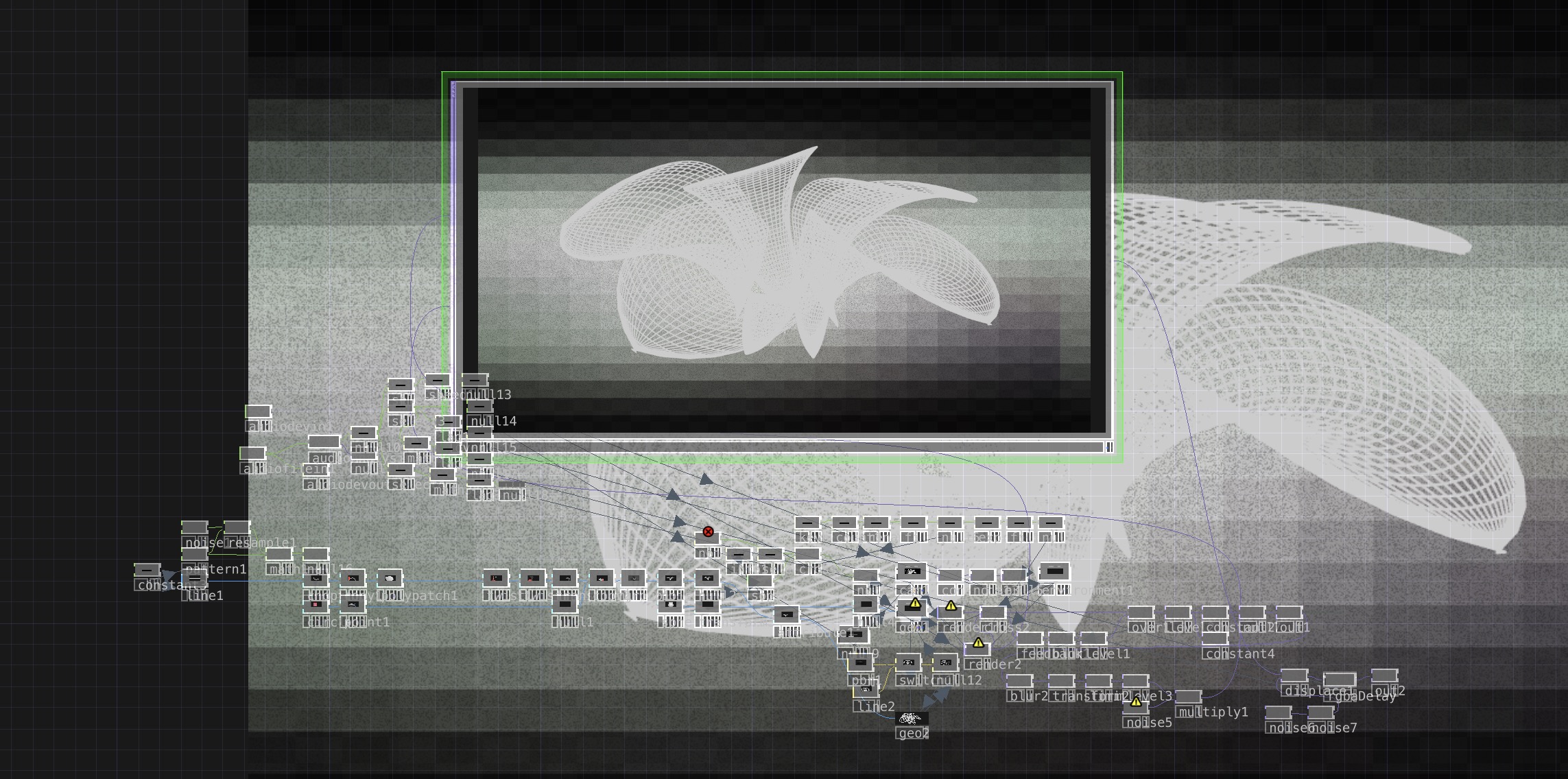

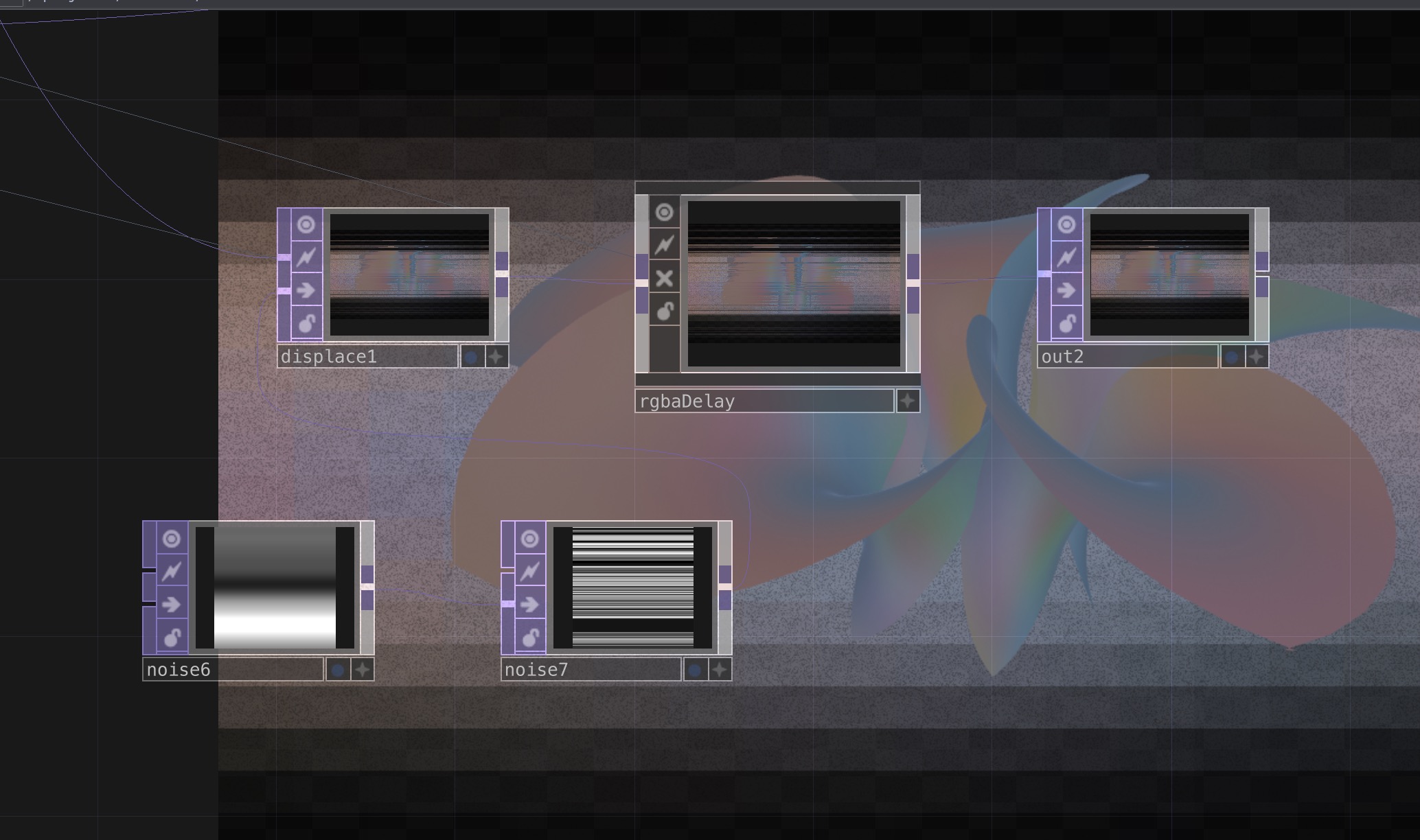

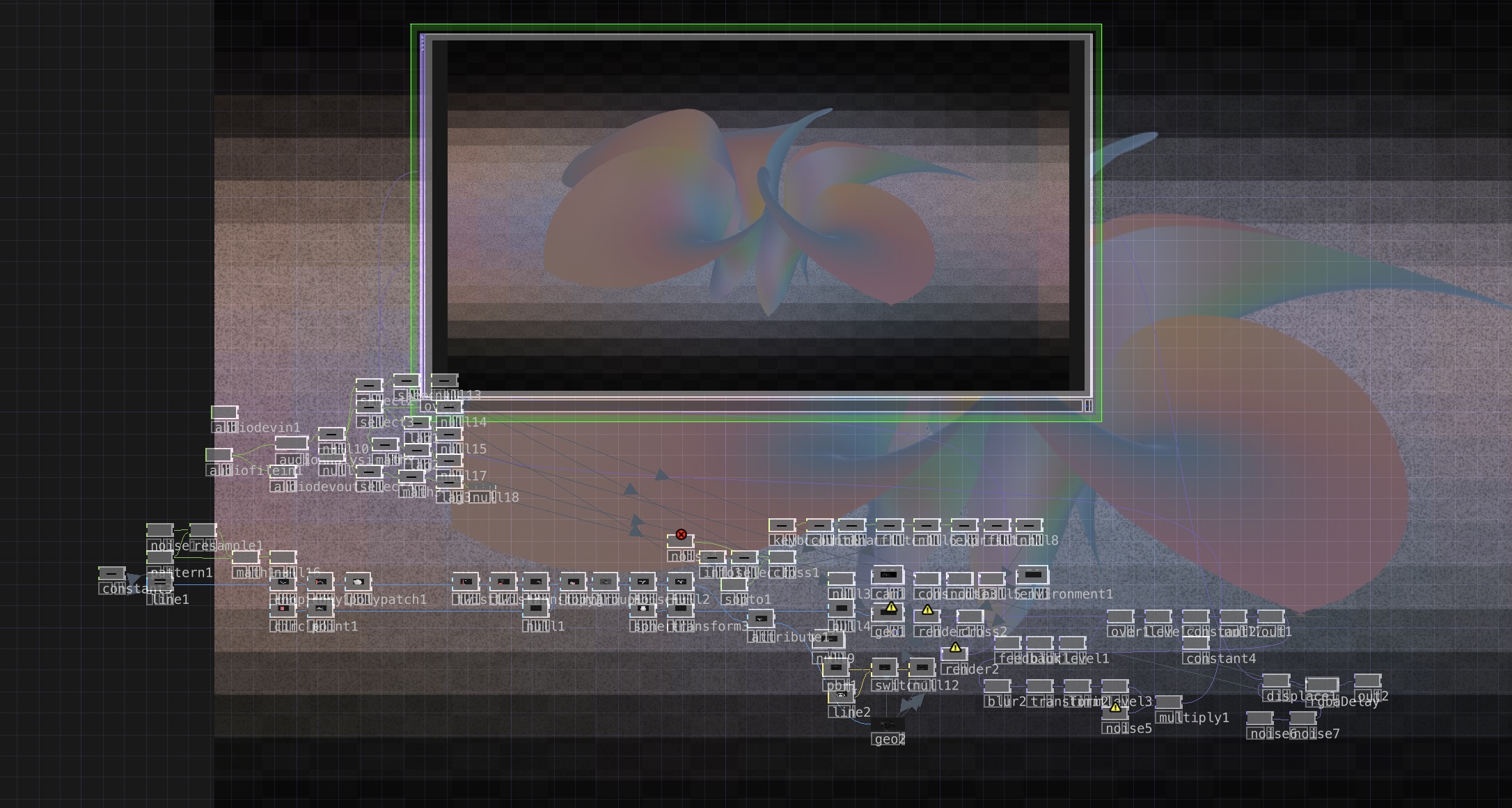

To be honest, I didn’t know anything about TouchDesigner before.

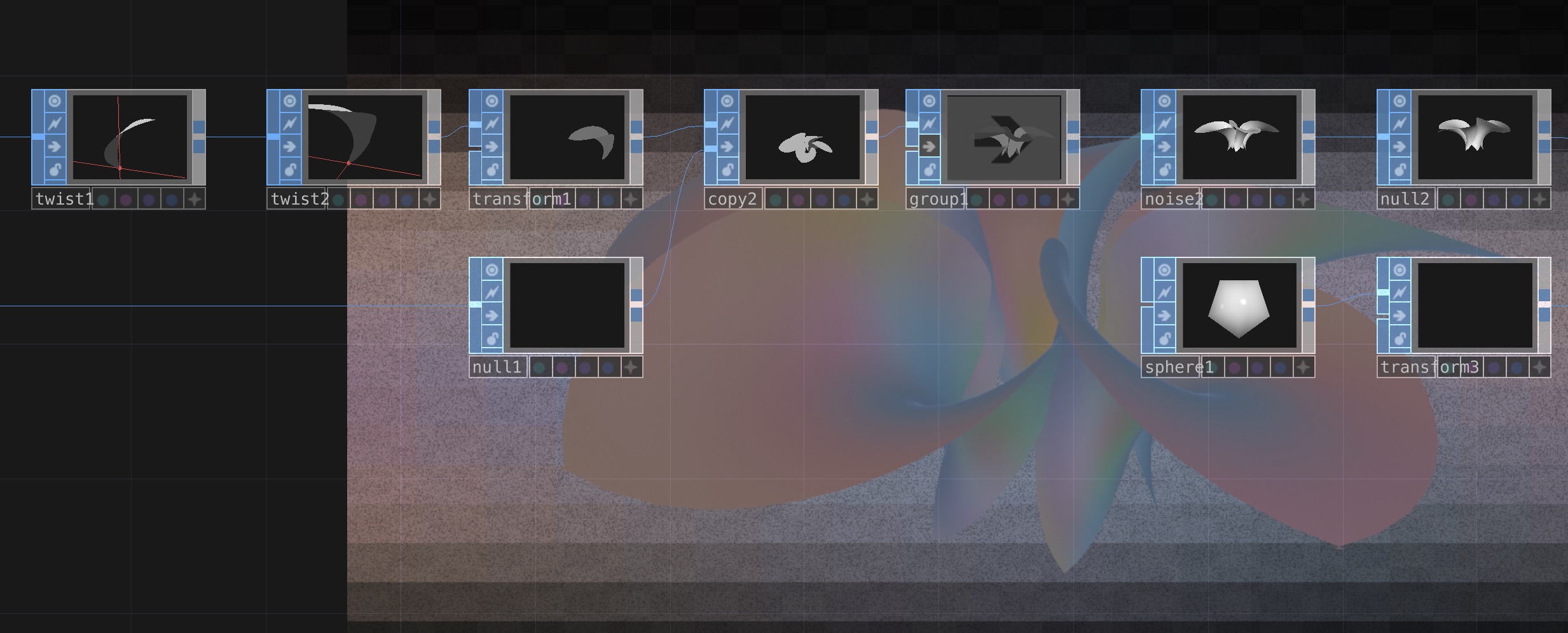

The first step in generating a flower is of course generating a petal. The shape of a petal is not complicated and is symmetrical, so it can be treated as a geometric patch. There are many ways to generate such a patch in TouchDesigner. Here, a spline is used to generate the patch outline, and then the poly algorithm is used to generate the patch.

This attempt at procedural modeling in TouchDesigner succeeded. Copy SOP, CHOP to SOP, Point SOP, and Group SOP are commonly used components for procedural modeling. Of course, there are other components that are not involved in this case.
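The petal-then-copy idea can be illustrated outside TouchDesigner with a minimal Python sketch. This is not the TouchDesigner network used in the project: it swaps the spline for a simple sine profile, and the function names `petal_outline`, `rotate`, and `flower`, as well as all default sizes, are assumptions made for illustration. It builds one mirror-symmetric petal outline and then copies it around the center, which is roughly the role a Copy SOP plays.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def petal_outline(length: float = 1.0, width: float = 0.4,
                  samples: int = 16) -> List[Point]:
    """Closed, mirror-symmetric outline with its base at the origin, pointing along +y."""
    right: List[Point] = []
    for i in range(samples + 1):
        t = i / samples                                   # 0 at the base, 1 at the tip
        half_width = 0.5 * width * math.sin(math.pi * t)  # narrows to zero at both ends
        right.append((half_width, length * t))
    # Mirror the right edge to get the left edge, skipping the shared base and tip.
    left = [(-x, y) for x, y in reversed(right[1:-1])]
    return right + left


def rotate(points: List[Point], angle: float) -> List[Point]:
    """Rotate 2D points counter-clockwise around the origin by angle (radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return [(x * c - y * s, x * s + y * c) for x, y in points]


def flower(petal_count: int = 6, length: float = 1.0, width: float = 0.4,
           samples: int = 16) -> List[List[Point]]:
    """Copy one petal outline evenly around the center."""
    outline = petal_outline(length, width, samples)
    step = 2 * math.pi / petal_count
    return [rotate(outline, i * step) for i in range(petal_count)]
```

Calling `flower(5)` returns five outlines, each a list of 2D points that could be handed to any drawing or meshing routine. Changing `width` or `petal_count` over time is one simple way to suggest a flower opening.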

Back to the flower itself, the growth of the plant and the opening of the flower are extremely delicate and wonderful, and the geometric structure of the plant is also very fascinating.

For the visualization, I used a more practical component, adapted from someone else's work with their authorization.

https://blogs.ed.ac.uk/dmsp-process23/2023/04/26/interactive/

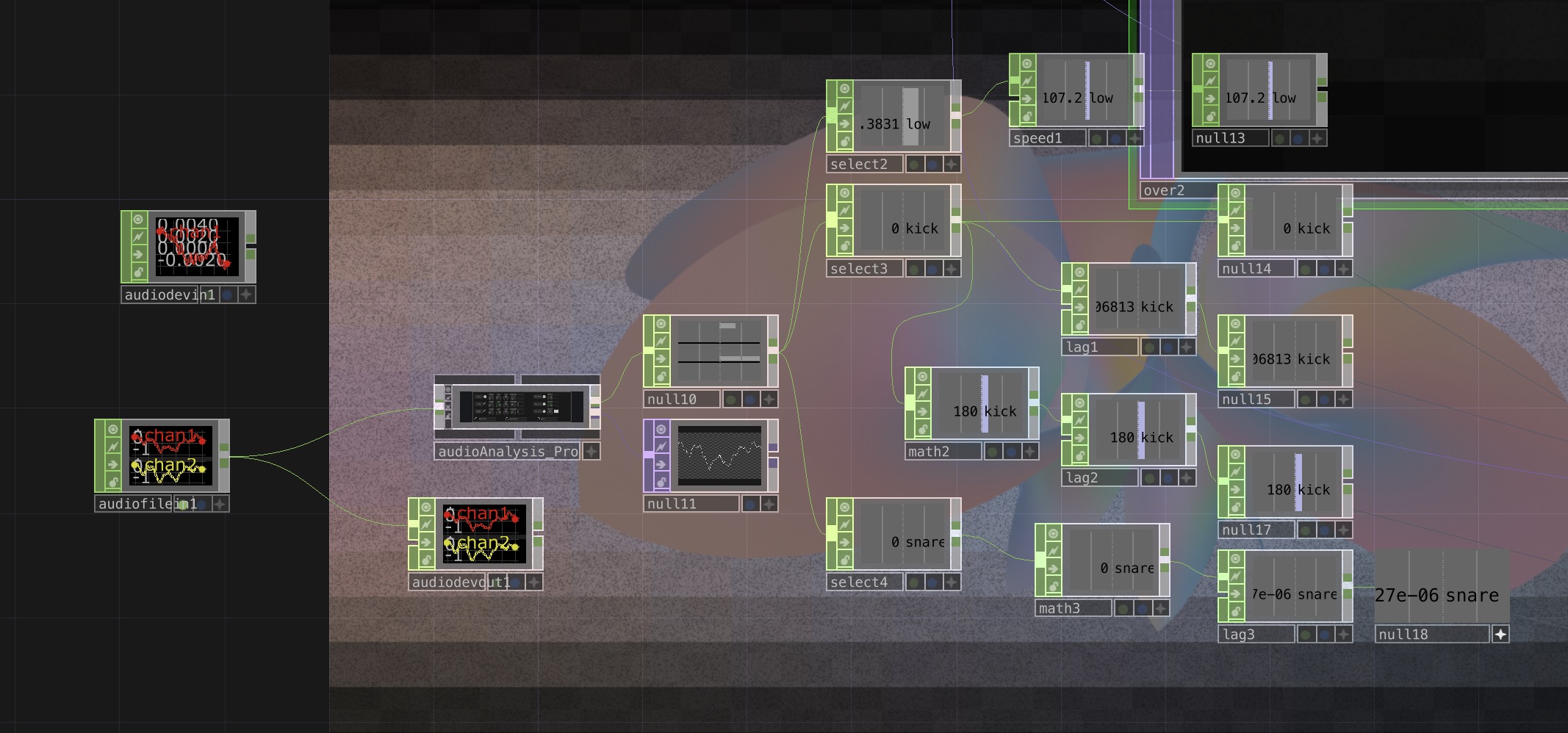

Other Sounds (Yingxin Wu)

Devices: Microphone Stand (Low), Schoeps - MK4 + CMC1L, H6

Music (Yingxin Wu)

The music this time was also a very bold attempt. It was made not with a traditional DAW but with Max/MSP, which I had never used for this purpose before, because at the beginning I wanted a single Max patch to control every process. As we had more and more ideas, however, a single Max patch could no longer meet our needs.

Switching software from a DAW to Max brought many difficulties at first. For example, shaping the timbre took a lot of time. When the sounds were combined, the result became more abstract because of the non-linear way of working.

Pre for Sound (Yingxin Wu)

Devices:

- Microphone Stand (Low)

- Schoeps - MK4 + CMC1L

- USB 2.0 - A to B

- XLR Male to XLR Female * 8

- Genelec - 1031A - Active Monitor * 2

- Genelec - 8030A - Speaker * 2

- K&M - Mic Stand

- Mackie - ProFX8

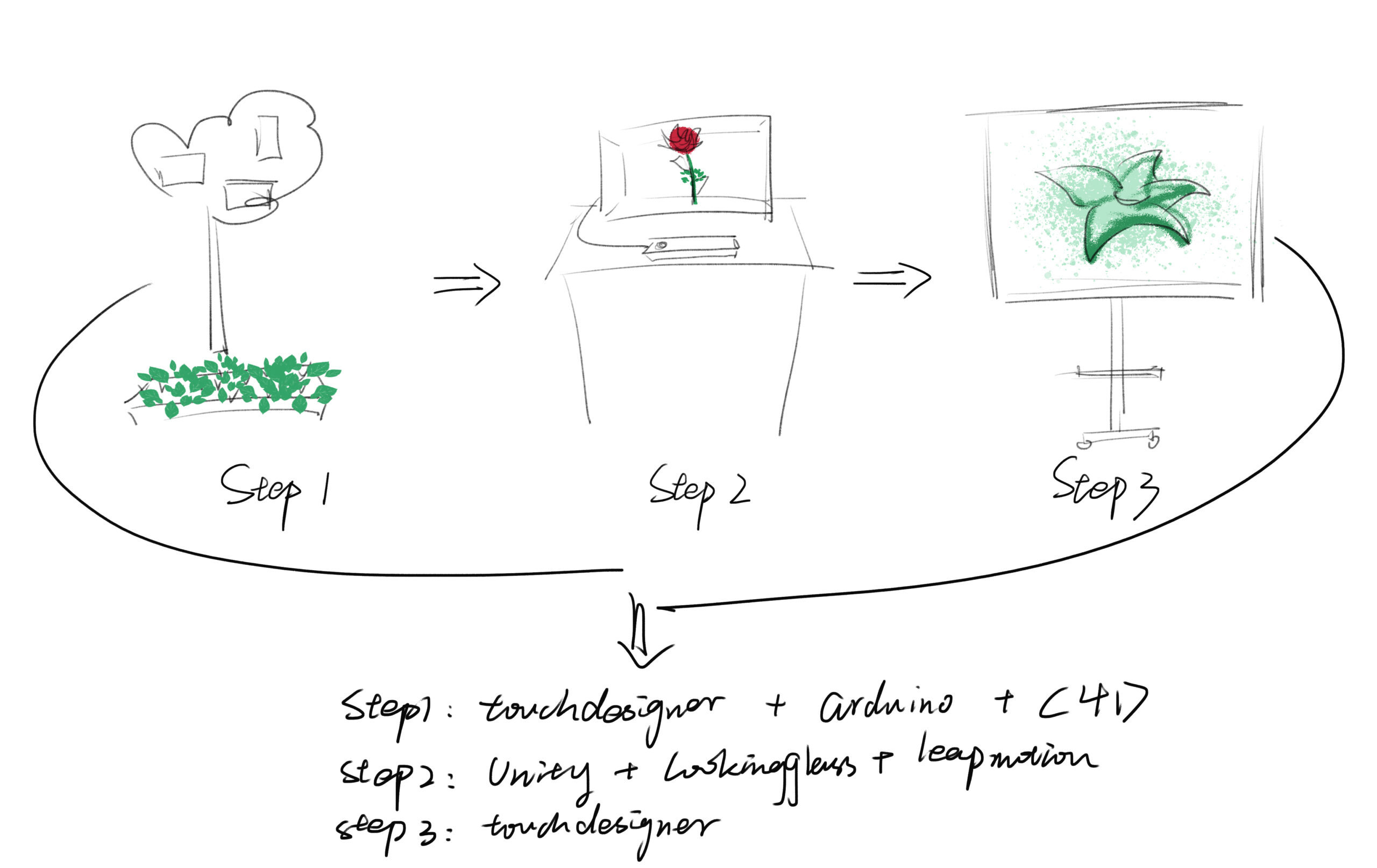

Ideation: sketch

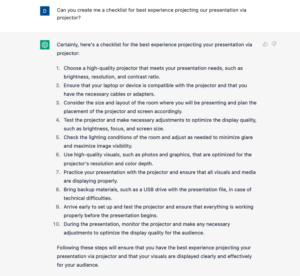

Steps to visit the exhibition

1. Step on the lawn and observe the plant growth process

When people walk into the venue and put their feet on the lawn, the video on the device in front of them will be triggered and played simultaneously.

- Meaning: Think about the connection between human activities and plants

2. Try to wave your hands

Use gestures to control different materials and states of flowers. Open hands: flowering; clapping hands: changing flower material; tilting hands: watering.

- Meaning: Different human activities have different impacts on the environment.

3. Feel the Electronic Flower change with the drums

When people walk into the venue, their eyes are drawn to this visualized electronic flower. The flower dissipates along with the sound or music. The changes include coil, blur, rotation speed, zoom, and color.

- Meaning: This part represents the influence of sound: different sounds bring about different changes in the image. The effect of sound on plant growth has long been an interesting subject. For years, scientists have been exploring how to use sound to make plants grow better or produce more. Watching the electronic flower also gives visitors a way to understand sound as it moves from abstract to concrete.
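One way to picture how a sound level could drive the flower's visual changes is the minimal Python sketch below. It is an illustration only, not the patch used in the exhibition: the function names `rms` and `visual_parameters`, the parameter names, and every numeric range are assumptions. It measures the loudness of a block of audio samples and maps it onto a few of the visual changes listed above.

```python
import math
from typing import Dict, Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square level of a block of audio samples in the range -1.0 to 1.0."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def visual_parameters(level: float) -> Dict[str, float]:
    """Map a loudness level (clamped to 0.0-1.0) onto hypothetical visual controls."""
    level = max(0.0, min(1.0, level))
    return {
        "rotation_speed": 0.1 + 0.9 * level,  # louder sound spins the flower faster
        "blur": 1.0 - level,                  # quiet passages look soft, loud ones sharp
        "zoom": 1.0 + 0.5 * level,            # loud hits push the flower toward the viewer
    }
```

For example, `visual_parameters(rms(block))` could be evaluated once per audio block and the resulting values sent to whichever tool renders the flower.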

Improvements

After the exhibition, we improved the previous design, focusing on the visitor's route through the exhibition, richer interactive functions, and overall coherence.

- STEP1: Sort out the logic, remake the plant model video, and optimize the experience process.

- STEP2: Add flower interaction methods to enrich user experience.

- STEP3: Rebuild the visuals in different software: change the model from the earth to a flower, add parameter settings, and create or modify colors, lights, and materials.

1. Vision (sketch/video/Unity)

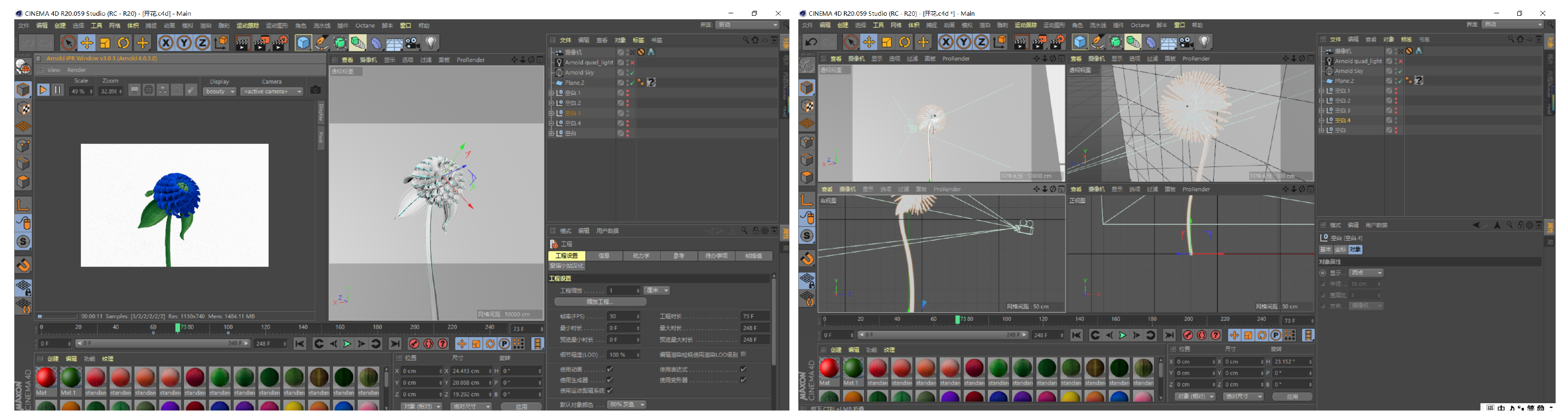

Visual design plays a vital role in exhibition design, as it not only catches the viewer’s eye but also conveys information and emotion. Our design concept is to create an environment full of life and vitality by using C4D flower animation and Unity animation to guide the audience to start their thinking and exploration in the exhibition space.

We chose to use flowers as the theme of visual elements. By using C4D flower animation, we can present three different types, colours and shapes of flowers, so that the audience can feel the richness and diversity in nature. We can also express the flow and change of life through the dynamic movement and change of flowers.

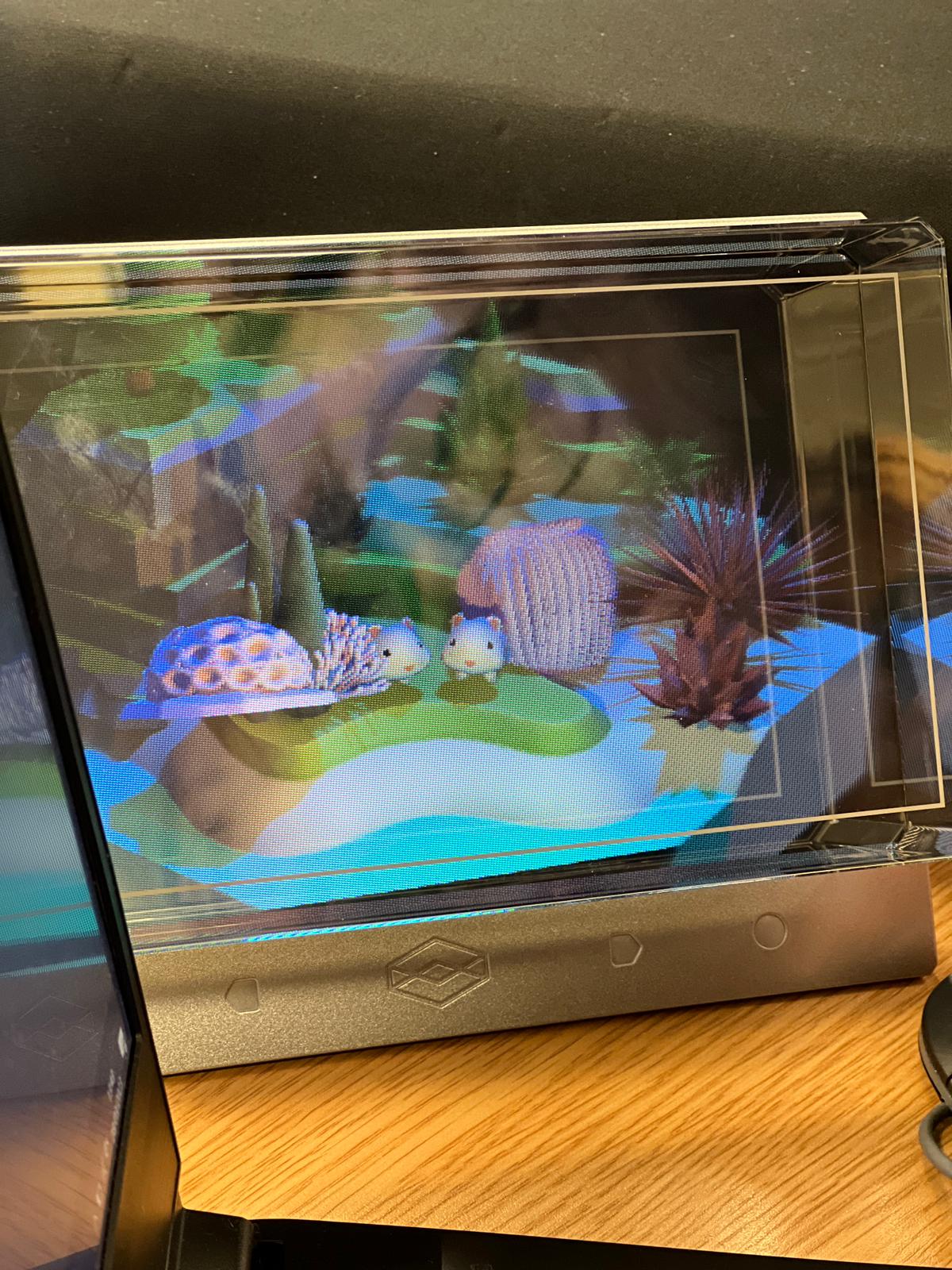

In the exhibition space, we also use Unity animations to create an environment that echoes the flower theme. We placed the flowers in the Looking Glass, where visitors can try different gestures that correspond to different changes in the flower. These design elements are strongly interactive in order to capture the attention and interest of the audience.

Group1—plant animation production 1.0(C4D)

Group1—plant animation production 2.0(C4D)

Group1—plant animation production 3.0(C4D)

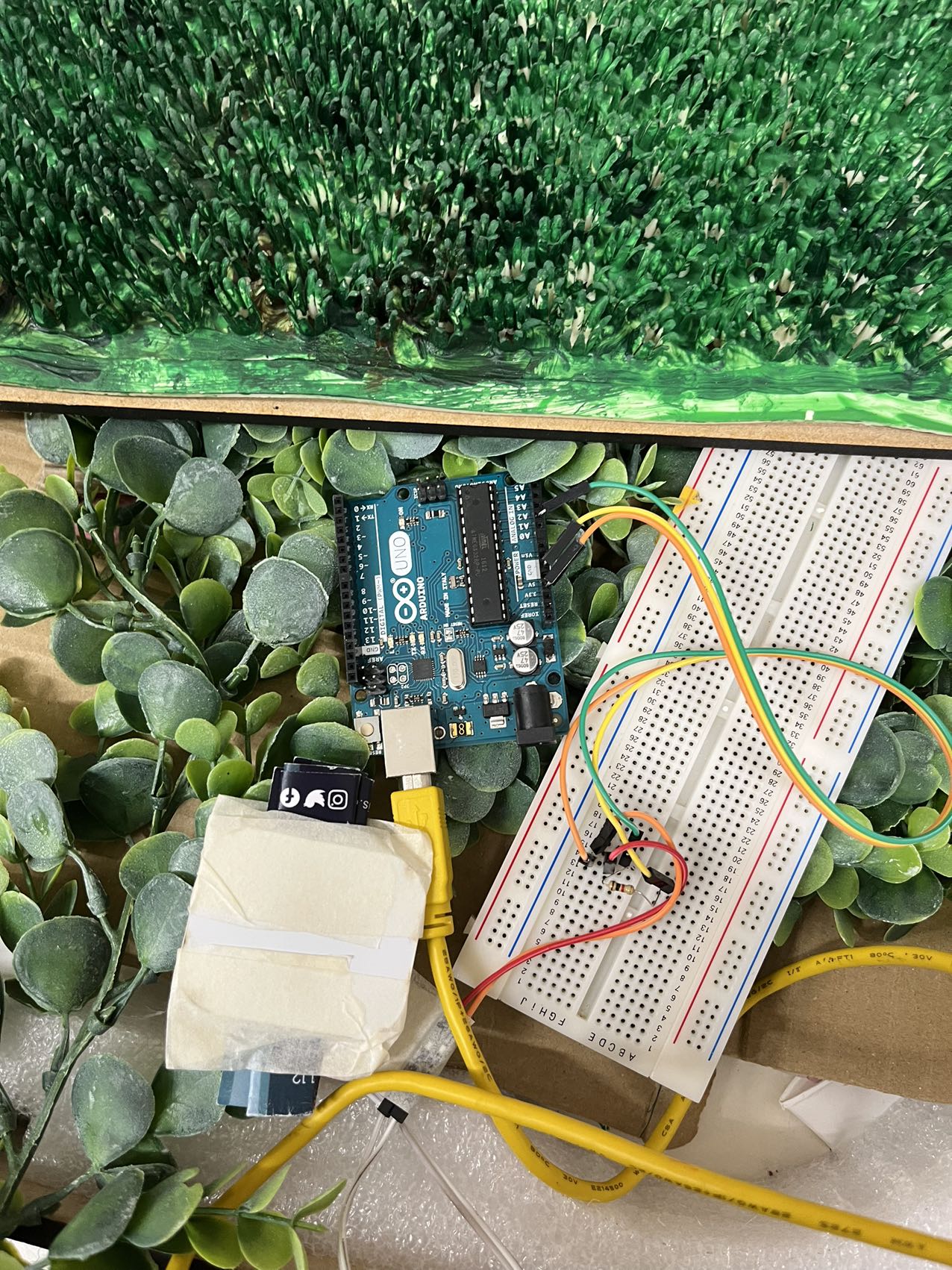

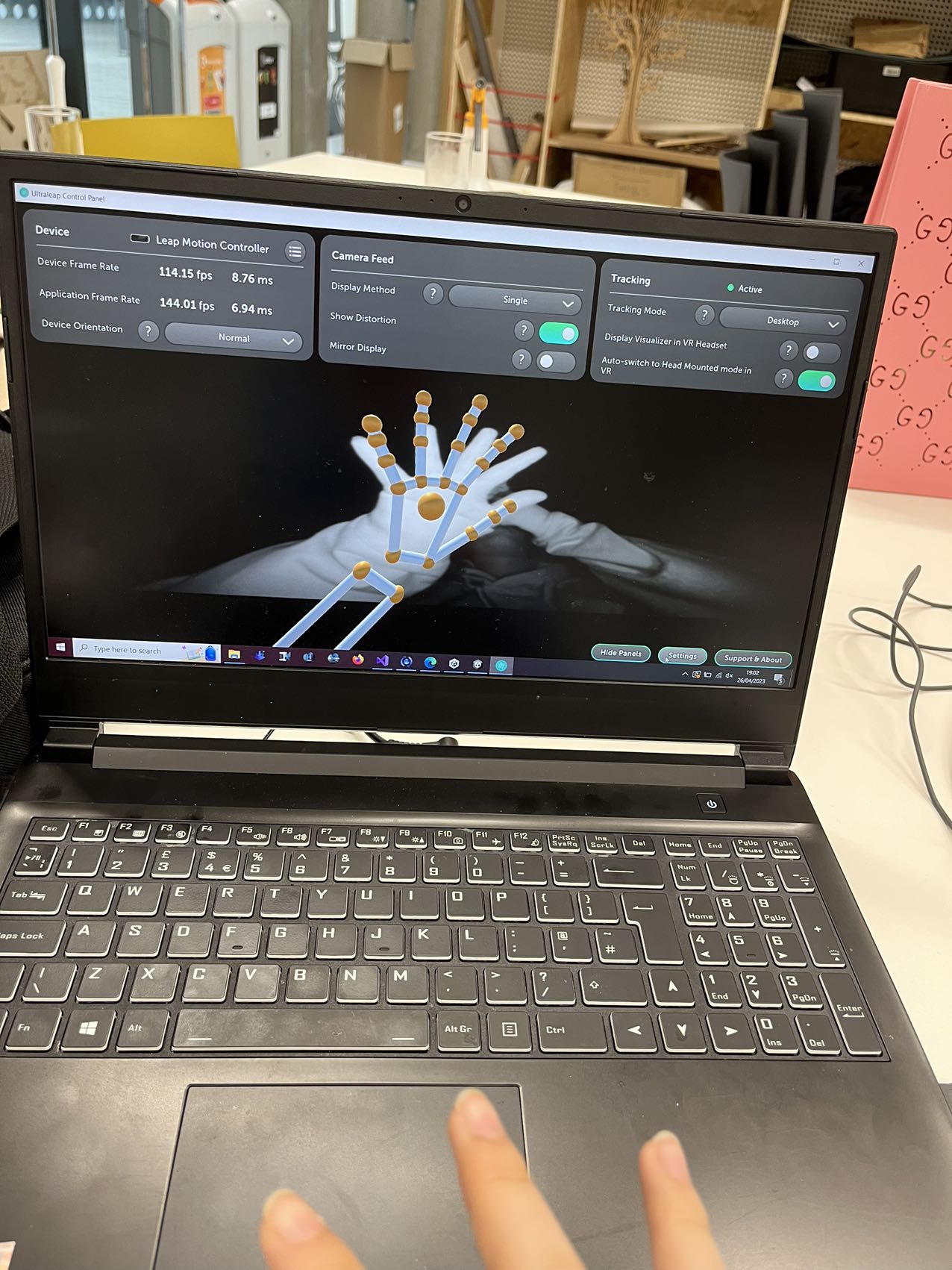

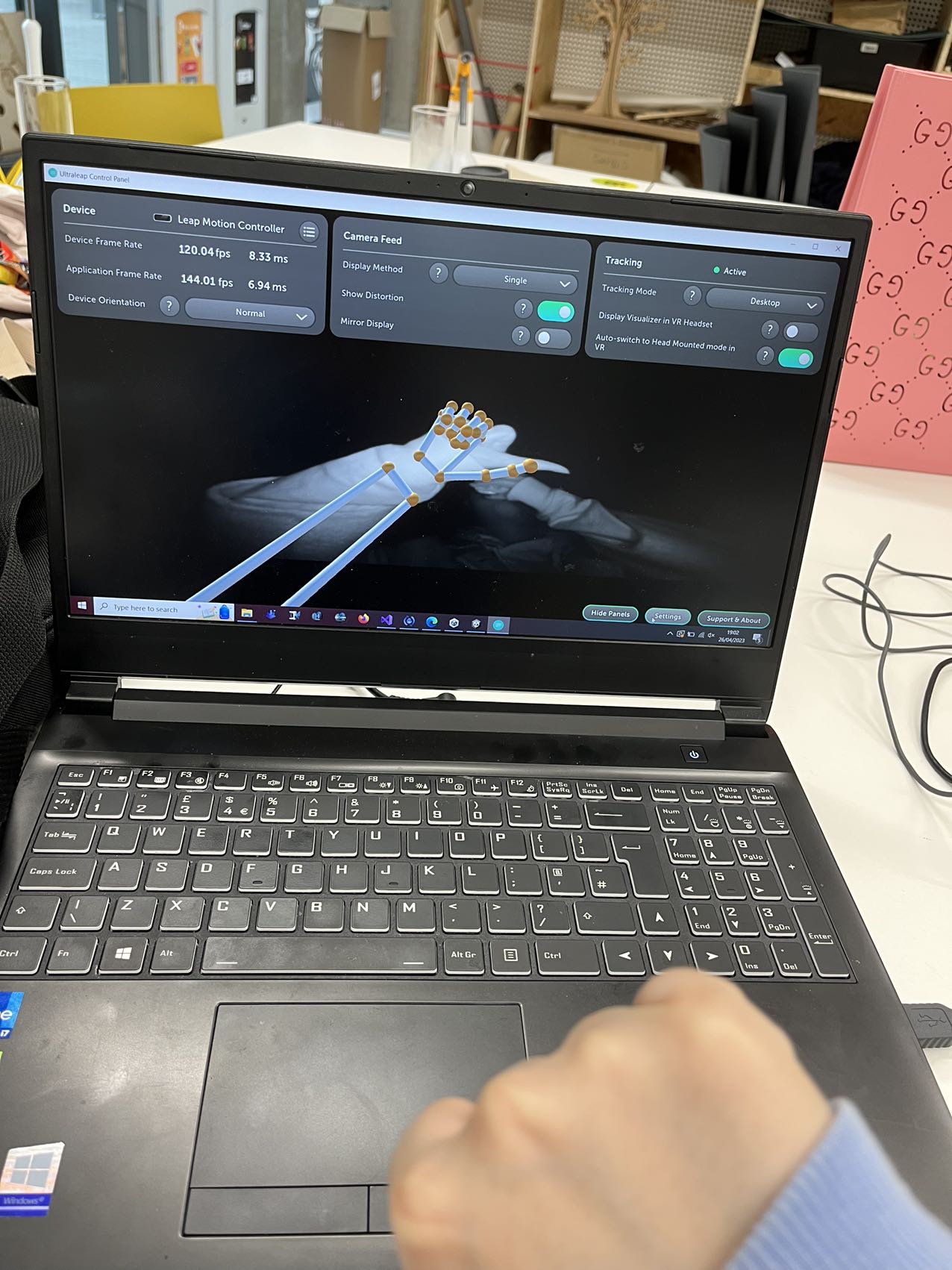

2. Interaction (Arduino/Looking Glass/Leap Motion) (Boya Chen)

In the choice and design of interaction methods, we wanted to create interaction methods that gave real-time feedback and were relevant to the theme of the design, rather than just simple audio and video control.

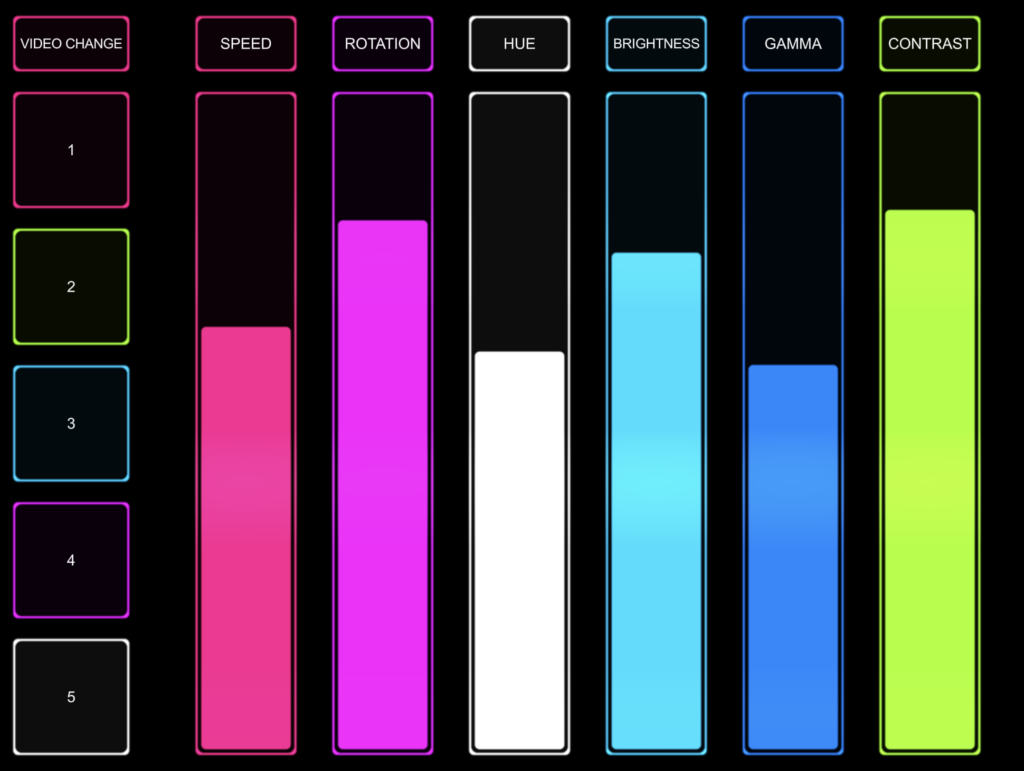

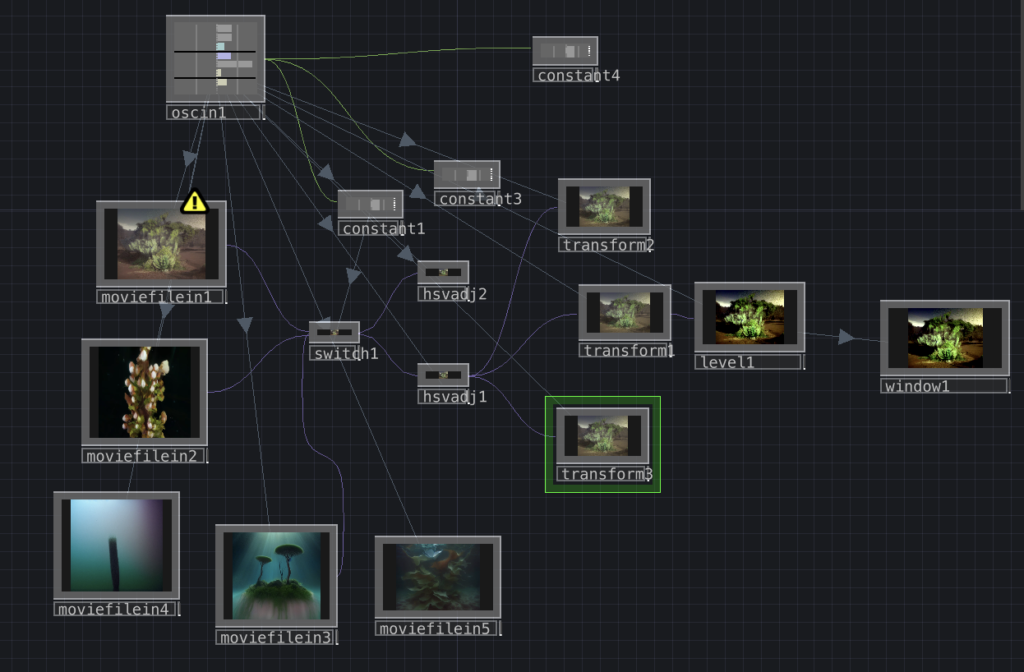

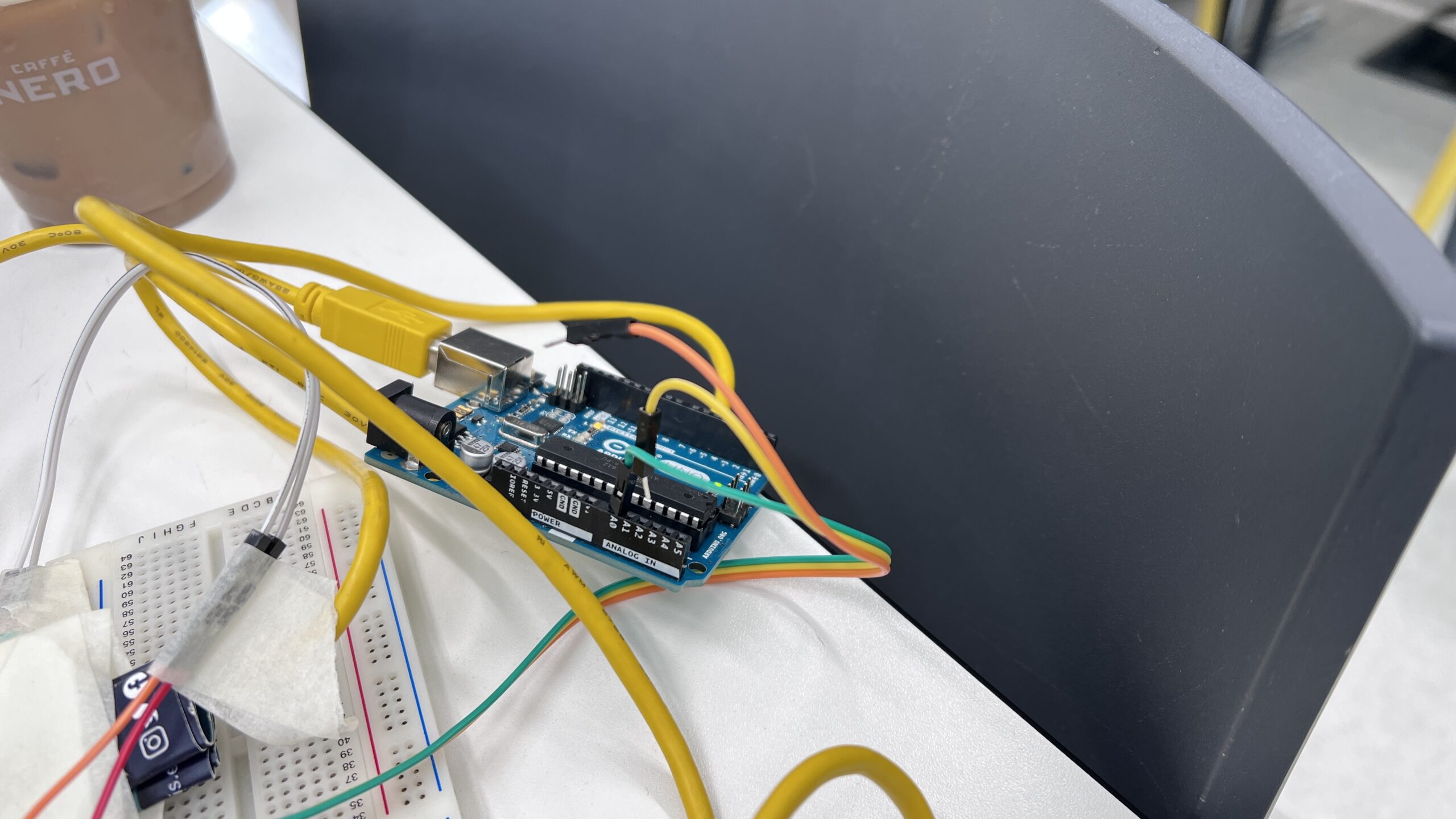

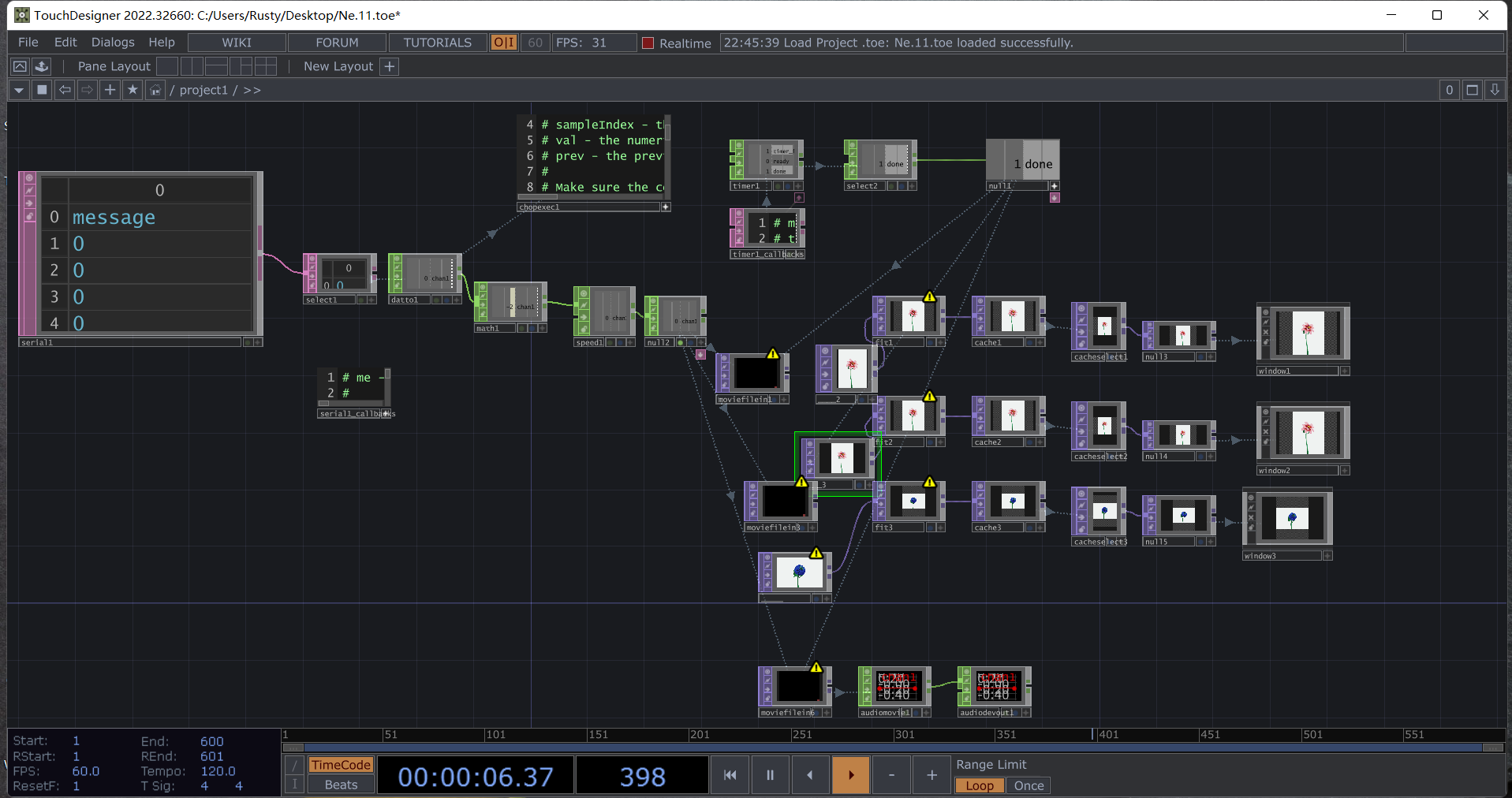

- For the first part of the exhibition, we wanted to combine the sounds previously captured outdoors with the animations we generated to create a playful interactive installation. As our theme is related to plants and the environment, we wanted to simulate the natural environment during the user experience, with the user's actions driving the animation and sound effects. Ultimately we used TouchDesigner to connect the interaction process. An Arduino pressure sensor was connected to TouchDesigner to control the rhythm of the video and audio playback. In the final exhibition we placed the pressure sensor underneath the lawn model, so that the rhythm of the user stepping on the lawn controlled the natural variation of video and sound. In this way we wanted the user to feel the impact of their actions on the environment.

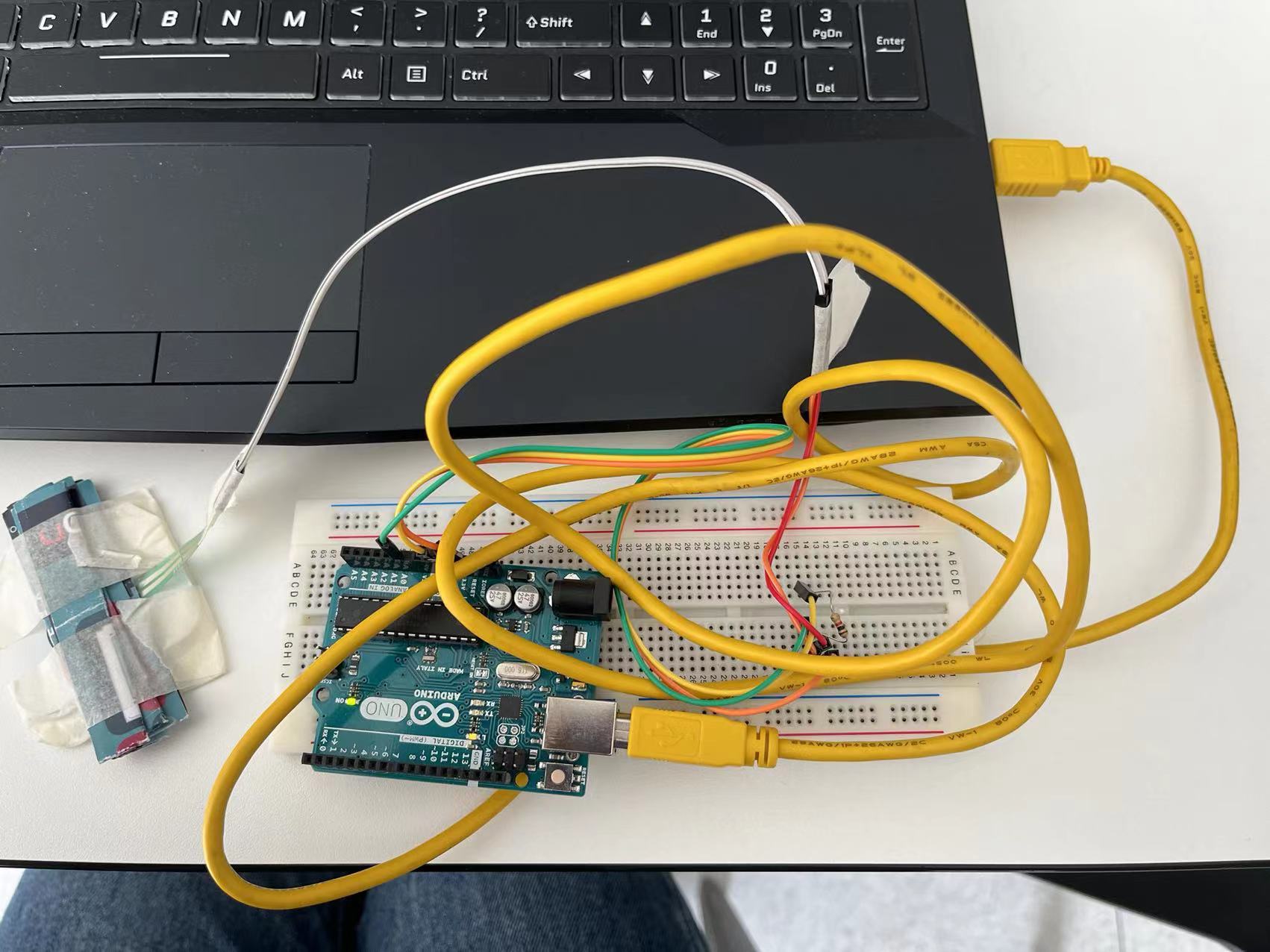

Steps

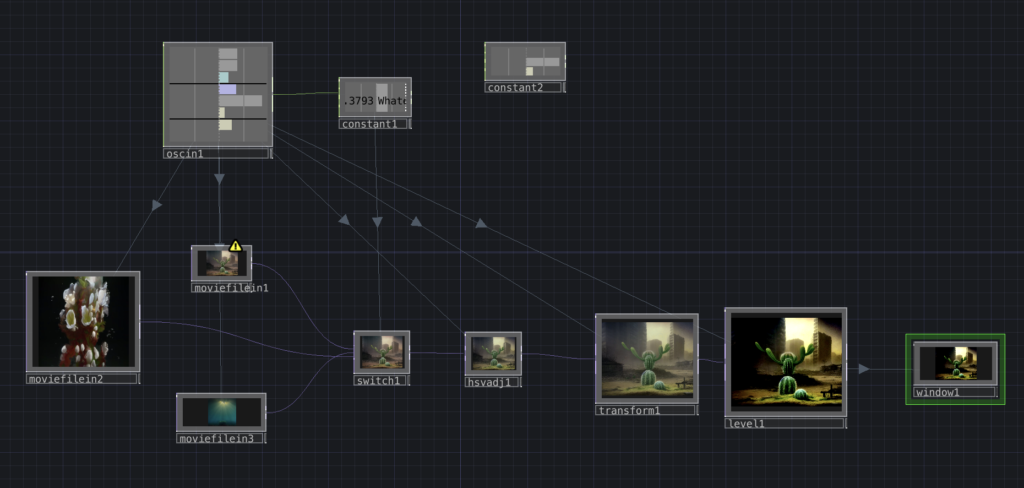

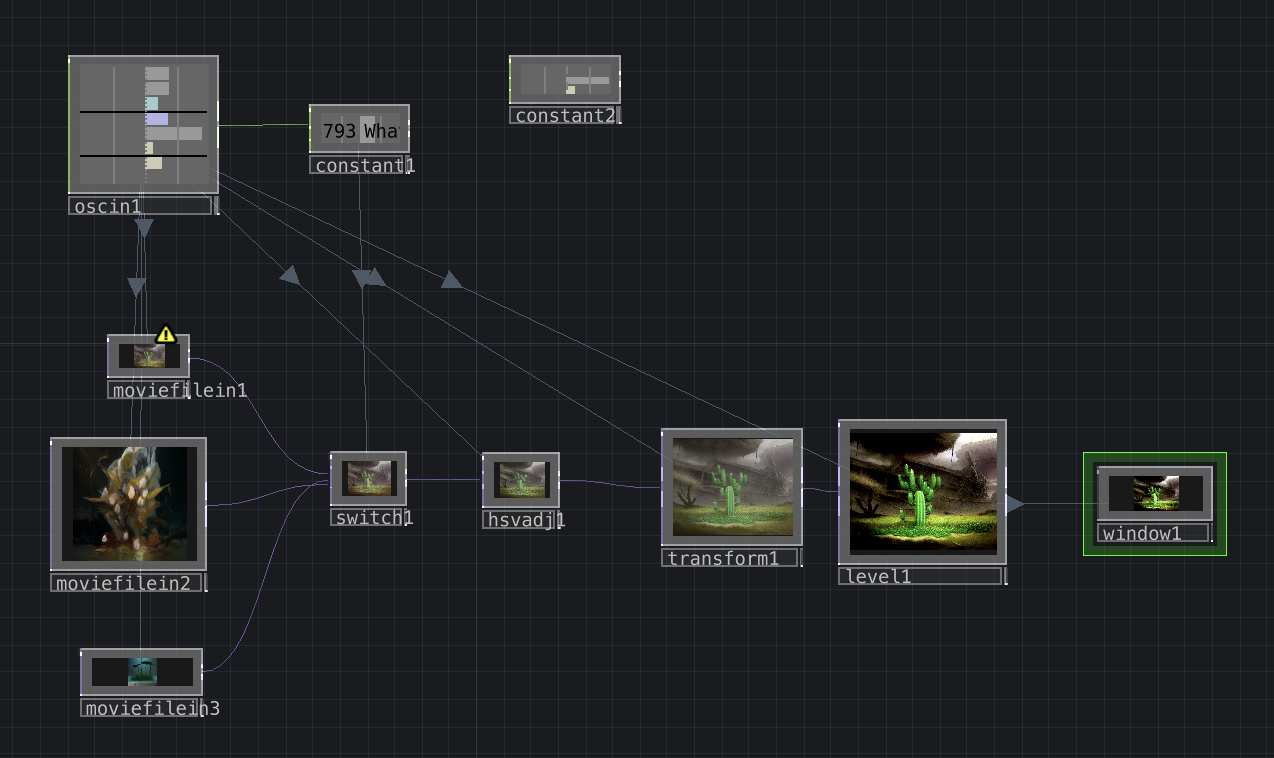

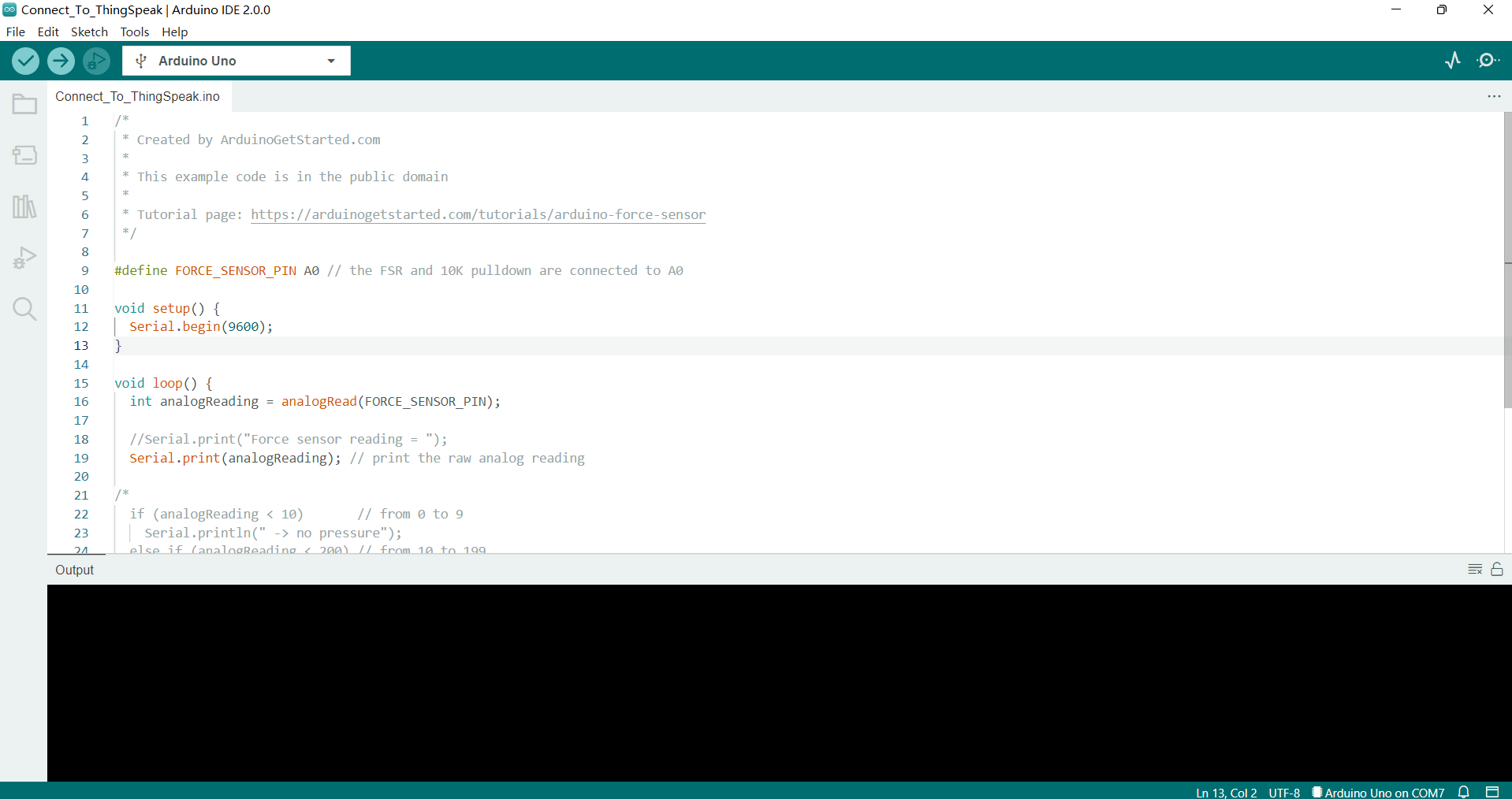

1. Connect the Arduino board and pressure sensor: Connect the pressure sensor to the Arduino board, and then connect the Arduino board to the computer.

2. Write an Arduino program: Use the Arduino development tool to write a program that reads data from the pressure sensor and transmits the data to the computer.

3. Add a Serial DAT: In TouchDesigner, add a Serial DAT operator, which can receive data sent by the Arduino board.

4. Configure the Serial DAT: In TouchDesigner, configure the Serial DAT to communicate with the Arduino board. The serial port and baud rate parameters need to be specified.

5. Create a trigger: Use CHOP operators in TouchDesigner to create a trigger that receives the data from the Serial DAT and converts it into a signal that can be used to control the speed of video playback.

6. Control the speed of video playback: Use the Movie File In TOP in TouchDesigner to import a video file, and use the trigger to control the speed of video playback based on the data received from the pressure sensor.
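The data path in the steps above, from a raw sensor reading to a playback speed, can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the project's actual TouchDesigner network: it assumes the Arduino prints one integer reading per line in the 0 to 1023 range of a 10-bit analog input, and the names `parse_reading`, `reading_to_speed`, and `Smoother`, together with the threshold and speed limits, are hypothetical.

```python
from typing import Optional

# Assumed values for illustration; the original post does not state them.
ADC_MAX = 1023    # top of the 10-bit analog range on common Arduino boards
MIN_SPEED = 0.0   # playback pauses when nobody is standing on the lawn
MAX_SPEED = 2.0   # hypothetical upper bound for playback speed


def parse_reading(line: str) -> Optional[int]:
    """Parse one serial line into a clamped integer reading, or None if malformed."""
    text = line.strip()
    if not text.isdigit():
        return None
    return min(int(text), ADC_MAX)


def reading_to_speed(reading: int, threshold: int = 50) -> float:
    """Map a raw pressure reading linearly onto a playback speed."""
    if reading < threshold:
        return MIN_SPEED  # ignore sensor noise below the threshold
    span = ADC_MAX - threshold
    return MIN_SPEED + (reading - threshold) / span * (MAX_SPEED - MIN_SPEED)


class Smoother:
    """Exponential moving average that softens jumps between readings."""

    def __init__(self, alpha: float = 0.3) -> None:
        self.alpha = alpha
        self.value = 0.0

    def update(self, sample: float) -> float:
        self.value += self.alpha * (sample - self.value)
        return self.value
```

Each incoming line would pass through `parse_reading`, then `reading_to_speed`, then `Smoother.update`, and the result would be applied to the video's speed control. A larger `alpha` reacts faster to a footstep but passes on more jitter, so it is a trade-off worth tuning if the response feels slow.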

- In the second part of the exhibition, to let the user better experience the process of plant change, we placed the first stage of the 3D model into a holographic Looking Glass and used Leap Motion gesture recognition to control changes to the model. In addition to simple functions such as changing the perspective of the model, we also designed gestures and signs to help the user interact with this part: for example, a watering gesture makes the flower start to grow, and a clapping gesture changes the external shape of the flower. Leap Motion and Looking Glass not only make the visuals more intuitive, but also create a more natural and flexible way of interacting with the design content.

Steps

- Connect the Leap Motion and the Looking Glass

- Install the Leap Motion SDK: Download and install the Leap Motion SDK, which will provide useful libraries and sample code.

- Install the Looking Glass Unity SDK: Download and install the Looking Glass Unity SDK, which includes the necessary tools and assets for developing Looking Glass applications in Unity.

- Set up the scene in Unity: Create a new scene in Unity and add a Looking Glass plane to the scene. Import the 3D model into the scene and place it in front of the Looking Glass plane.

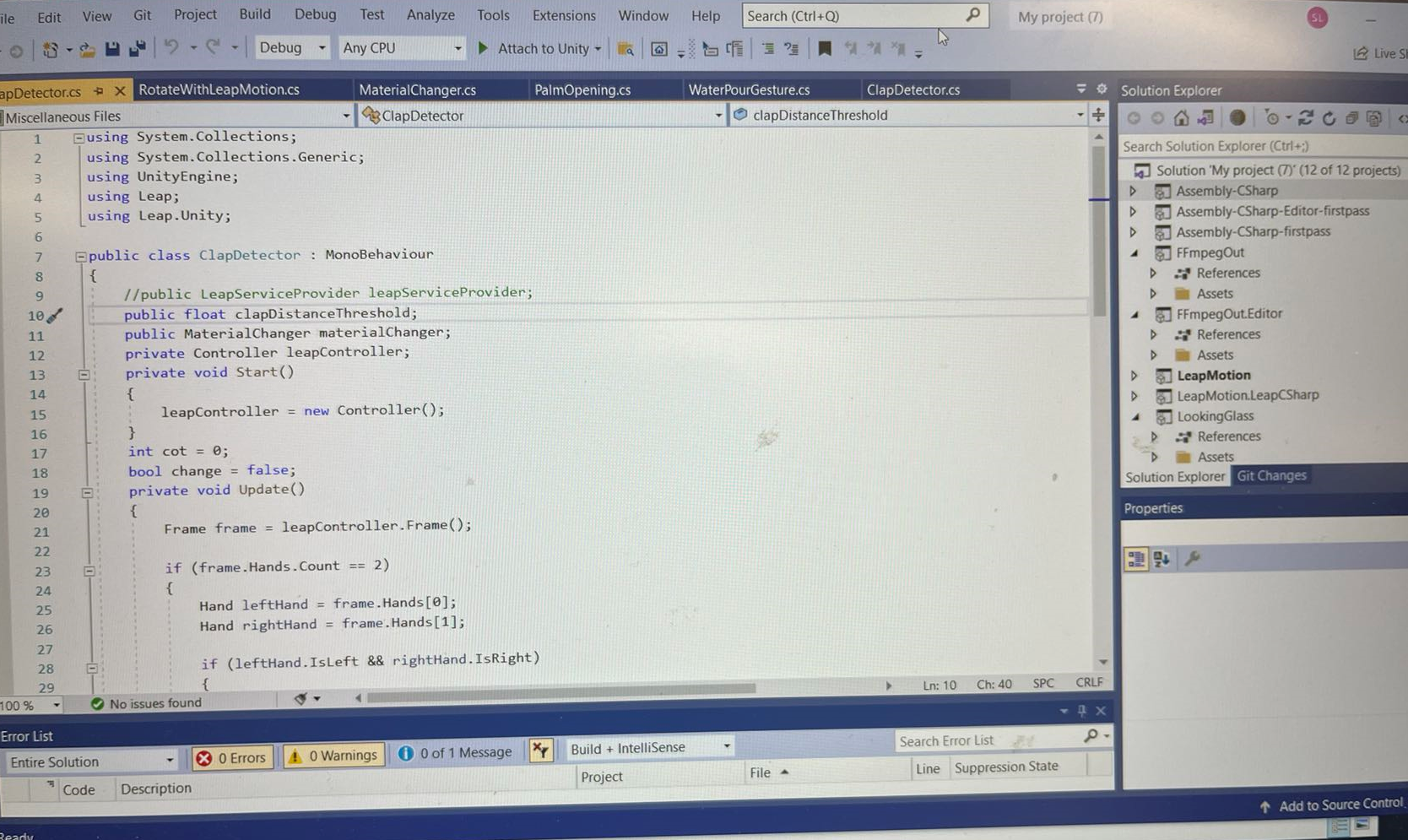

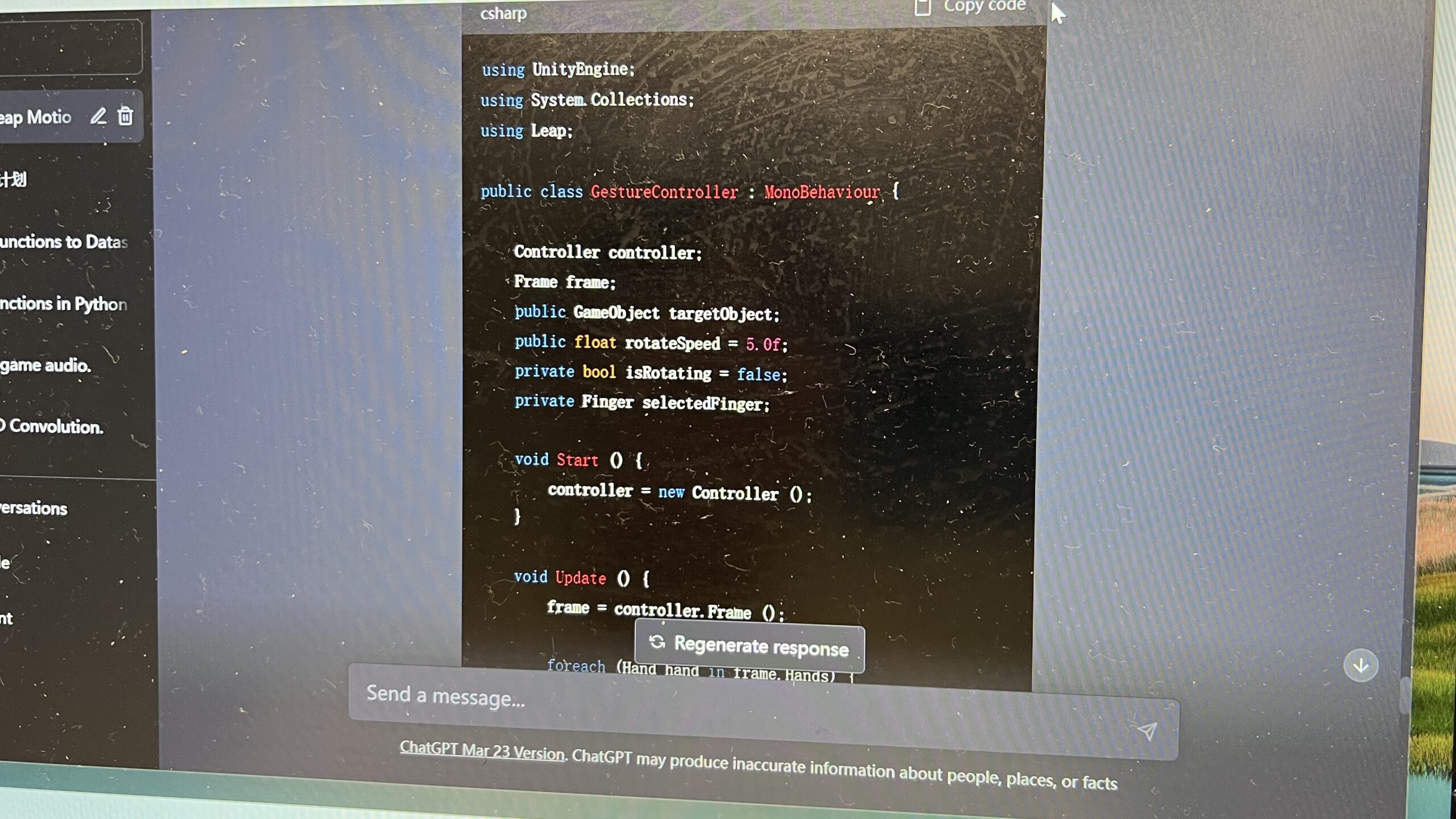

- Write the Leap Motion control code: Use the Leap Motion SDK to write code that reads the Leap Motion hand gesture data and converts it into a control signal for the 3D model in Unity.

- Map the hand gestures to model controls: Map the Leap Motion hand gestures to control the 3D model’s rotation, translation, and scaling in Unity.

- References for the interactive section are listed in the References section at the end of this post.
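The gesture mapping described in the steps above (open hand for flowering, clap for changing the material, tilt for watering) can be sketched as a small classifier. The project itself runs in Unity with the Leap Motion SDK. This Python sketch only illustrates the decision logic. The `Hand` fields are a simplified, hypothetical stand-in for tracked hand data, and the thresholds and action names are assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Hand:
    """Simplified stand-in for one tracked hand (hypothetical fields)."""
    grab_strength: float  # 0.0 = fully open hand, 1.0 = closed fist
    roll_degrees: float   # how far the palm is tilted sideways
    palm_x_mm: float      # horizontal palm position in millimetres


# Assumed thresholds for illustration only.
CLAP_DISTANCE_MM = 60.0
TILT_DEGREES = 45.0
OPEN_GRAB_MAX = 0.2


def classify(hands: List[Hand]) -> Optional[str]:
    """Return the flower action for the current frame, or None if no gesture matches."""
    # Two palms close together count as a clap.
    if len(hands) == 2 and abs(hands[0].palm_x_mm - hands[1].palm_x_mm) < CLAP_DISTANCE_MM:
        return "change_material"
    if not hands:
        return None
    hand = hands[0]
    # A strongly tilted palm is read as pouring water.
    if abs(hand.roll_degrees) > TILT_DEGREES:
        return "water"
    # A flat, open hand makes the flower bloom.
    if hand.grab_strength < OPEN_GRAB_MAX:
        return "bloom"
    return None
```

Checking the clap first means two open hands brought together change the material instead of triggering the bloom, which keeps the three gestures from overlapping.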

Collection of user experience feedback

1. “This is the first time I have participated in a plant-themed exhibition, and this exhibition has given me a deeper understanding of plants. I had never thought that there could be so many connections between people and plants. Now I am beginning to realize that there is also a close relationship between plants and humans. I also found the interactive parts of the exhibition very interesting, which was a great experience!”

2. “I find this exhibition very inspiring. It shows us the importance of plants in our lives and invites us to reflect on the relationship between man and nature. I like the interactive experience in the exhibition, such as using the Looking Glass and using different gestures to make the flowers achieve different effects. It’s really interesting. I’m glad I participated in this exhibition; it gave me a deeper understanding of the relationship between nature and human beings.”

3. “I think the theme of this exhibition is very good. However, I still encountered some problems during the experience. For example, when I stepped on the lawn, the interactive device was not triggered in time and the response was a bit slow, so I think the experience process could be further optimized.”

4. “The visual effects and sound effects of this exhibition are very good. I feel that I am really in a plant world, and the various sounds in the exhibition make me feel that I am in a real environment. It gave me a better understanding of the relationship between plants and nature, and also made me feel that I need to pay more attention to our natural environment. Overall, it was a very memorable experience.”

Conclusion

This innovative exploration of potential plant mutations and future transformations serves as a testament to the power of interdisciplinary collaboration and the limitless potential of human-AI partnerships. By harnessing the capabilities of advanced AI tools and working in synergy, we have successfully created an engaging and thought-provoking exhibition that invites audiences to ponder the fascinating intricacies of the natural world.

References

https://github.com/ultraleap/UnityPlugin/releases/tag/com.ultraleap.tracking/6.6.0

https://docs.lookingglassfactory.com/developer-tools/unity

https://docs.lookingglassfactory.com/developer-tools/unity/prefabs#holoplay-capture

https://wiki.seeedstudio.com/Grove-Touch_Sensor/

https://developer.leapmotion.com/unity

https://www.nts.org.uk/stories/the-thistle-scotlands-national-flower

https://1.share.photo.xuite.net/ngcallra/1148daa/16615727/894216827_m.jpg

https://www.bilibili.com/video/BV1he4y1B79Z

https://cowtransfer.com/s/725c23cf2d7f47

https://www.picturethisai.com/image-handle/website_cmsname/image/1080/154238468984143882.jpeg?x-oss-process=image/format,webp/resize,s_422&v=1.0

https://www.bilibili.com/video/BV1rr4y1Q714

https://www.haohua.com/upload/image/2019-08/04/27b56_41fd.jpg