https://blogs.ed.ac.uk/dmsp-process23/2023/04/26/group1-exploring-and-using-the-touch-designer/

https://blogs.ed.ac.uk/dmsp-process23/2023/04/26/group1-leapmotion-and-looking-glass/

Limited computing power constrained our ability to render concept images and to draw the large number of frames needed to animate between mutated plants.

In fact, there are many solutions for running the program in a remote environment, such as Google Colab. I followed some tutorials to set up Stable Diffusion on a remote machine, but the online instance's storage hit an error when installing models from my Google Drive. I eventually ran Stable Diffusion on a rented machine with a powerful graphics card and successfully used it to upscale the frame sequence of one completed version of the video. Rendering 1800 frames from 512px to 1024px resolution and drawing extra details on each frame takes a great deal of computing power, because the process redraws every frame and makes everything more intricate.
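The cost of the upscale can be put in rough numbers. The short Python sketch below assumes square frames (the text only gives "512px" and "1024 px") and counts pixels only, ignoring the model's per-pixel work; it shows that doubling the resolution quadruples the pixels in each of the 1800 frames.

```python
def pixels(width, height, frames):
    """Total number of pixels across a sequence of frames."""
    return width * height * frames

frames = 1800
before = pixels(512, 512, frames)    # assumed square 512 x 512 frames
after = pixels(1024, 1024, frames)   # assumed square 1024 x 1024 frames

print(f"512px sequence:  {before:,} pixels")
print(f"1024px sequence: {after:,} pixels")
print(f"ratio: {after / before:.0f}x")
```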

These practices have informed our work in many ways.

Using reinforcement learning (RL) for Procedural Content Generation is a very recent proposition which is just beginning to be explored. The generation task is transformed into a Markov decision process (MDP), where a model is trained to iteratively select the action that would maximize expected future content quality.
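To make the MDP framing concrete, here is a toy Python sketch: the state is a short strip of plant segments, an action replaces one segment, and the reward is the change in a quality score. The segment vocabulary and the quality function are hypothetical, and a greedy one-step policy stands in for a trained RL model, so this illustrates the framing rather than reinforcement learning itself.

```python
from itertools import product

TILES = ("stem", "leaf", "root")  # hypothetical content vocabulary

def quality(state):
    # Hypothetical quality measure: reward variety between neighbouring segments.
    return sum(1 for a, b in zip(state, state[1:]) if a != b)

def actions(state):
    # An action replaces the segment at one position with a different segment.
    return [(i, t) for i, t in product(range(len(state)), TILES) if state[i] != t]

def step(state, action):
    # MDP transition: apply the edit and return (next_state, reward).
    i, t = action
    nxt = state[:i] + (t,) + state[i + 1:]
    return nxt, quality(nxt) - quality(state)

def greedy_episode(state, max_steps=10):
    # Stand-in for a trained policy: pick the action with the best immediate reward.
    for _ in range(max_steps):
        best = max(actions(state), key=lambda a: step(state, a)[1])
        nxt, reward = step(state, best)
        if reward <= 0:
            break  # no single edit improves the content any further
        state = nxt
    return state

start = ("stem",) * 5
result = greedy_episode(start)
print(result, quality(result))
```

A trained agent would instead learn which edits lead to higher quality several steps ahead, not just one.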

Most style transfer methods and generative models for image, music and sound [6] can be applied to generate game content… Liapis et al. (2013) generated game maps based on the terrain sketches, and Serpa and Rodrigues (2019) generated art sprites from sketches drawn by human.

Engaging with a range of AI tools and applications gave us inspiration and key references for developing our concept. These tools also helped us find visual elements for the design when we had little knowledge of the basic science of plants: even knowing nothing about plant biology, we could quickly generate hundreds of images (and variants) of cacti, vines and underwater plants.

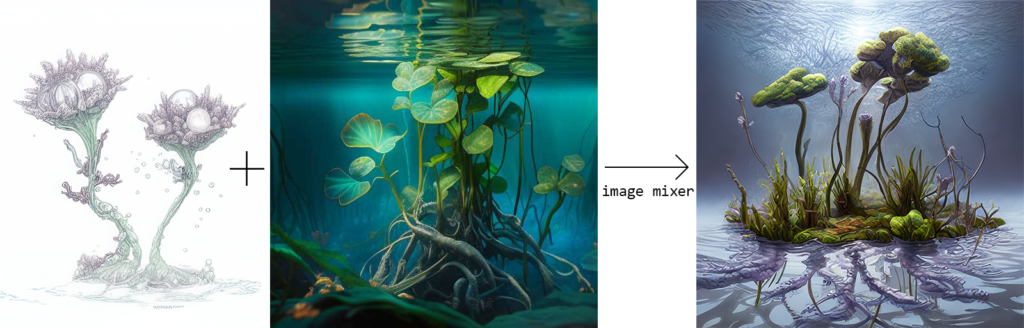

Using our imagination, we can blend together variants of plants that do not exist, guided by the basic biological knowledge the AI provides. For example, a new plant is created by adopting the basic attributes of an aquatic plant, fusing the characteristics of a coral colony with those of a tropical water plant, and adding some traits that respond to environmental change.

Using generators and techniques including Perlin noise, neural style transfer (NST) and feature pyramid transformer (FPT), we can quickly appropriate elements from different images and combine them. For example, even though I am not a botanist, I can imagine a giant tree-like plant and transform the organisation of its leaves, trunk and roots:

Change the leafy parts into twisting veins and vines like mycorrhizae.

Transfer the woody texture of the trunk onto parts of another plant, making this plant a mixture of multiple creations.

Then, place it on contaminated land.
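Perlin noise, one of the generators mentioned above, produces smooth pseudo-random values that suit organic variation such as twisting vines. Below is a generic 2D Perlin noise sketch in Python following the classic recipe (hashed lattice gradients, a quintic fade curve and bilinear interpolation), as explained in tutorials such as Adrian's. It is not the code of the tools we used, and the four diagonal gradients and the seed are simplifying assumptions.

```python
import math
import random

def fade(t):
    # Perlin's quintic smoothstep: 6t^5 - 15t^4 + 10t^3
    return t * t * t * (t * (t * 6 - 15) + 10)

def lerp(a, b, t):
    return a + t * (b - a)

def make_permutation(seed=0):
    rng = random.Random(seed)
    p = list(range(256))
    rng.shuffle(p)
    return p + p  # doubled so lookups like perm[perm[x] + y + 1] never overflow

def grad(h, x, y):
    # Use the hash to pick one of four diagonal gradients (+-1, +-1).
    h &= 3
    u = x if h & 1 == 0 else -x
    v = y if h & 2 == 0 else -y
    return u + v

def perlin2d(x, y, perm):
    xi, yi = int(math.floor(x)) & 255, int(math.floor(y)) & 255
    xf, yf = x - math.floor(x), y - math.floor(y)
    u, v = fade(xf), fade(yf)
    aa = perm[perm[xi] + yi]
    ab = perm[perm[xi] + yi + 1]
    ba = perm[perm[xi + 1] + yi]
    bb = perm[perm[xi + 1] + yi + 1]
    x1 = lerp(grad(aa, xf, yf), grad(ba, xf - 1, yf), u)
    x2 = lerp(grad(ab, xf, yf - 1), grad(bb, xf - 1, yf - 1), u)
    return lerp(x1, x2, v)

perm = make_permutation(seed=42)
# Sample a small 4 x 8 patch; smooth values like these could jitter vine or leaf positions.
for row in range(4):
    print(" ".join(f"{perlin2d(col * 0.3, row * 0.3, perm):+.2f}" for col in range(8)))
```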

After subjective selection and ordering, the plant images were matched to particular contexts, and we created a process of variation between the plants' different stages.

AI models are engaged in generating ideas, forming concepts and drawing design prototypes, with different degrees of input and output. I used a variety of tools in the design process, and selecting materials was a time-consuming, active process that required human involvement and modulation. I also had to control many parameters while generating the material to achieve the desired effect.

In the future, if an interactive installation allowed audiences to input their ideas to an AI and generate animations in real time through a reactive interface, a complete interactive system would need to be built, and such a generative, video-based program would require a great deal of computing power.

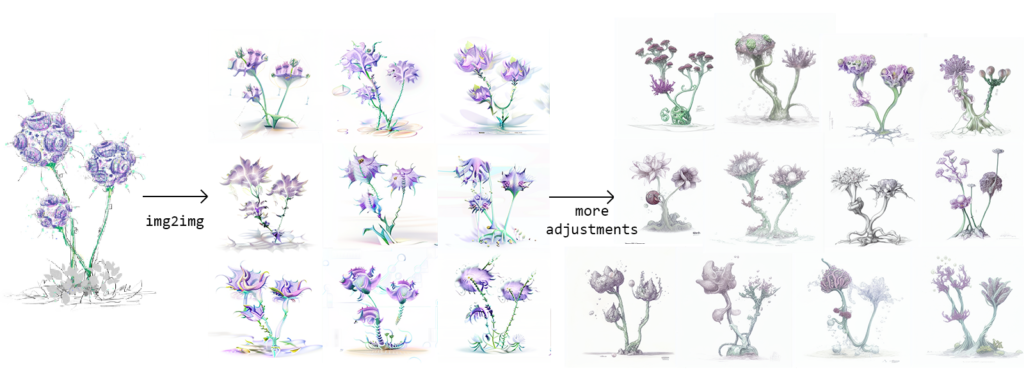

Many problems can arise in txt2img and img2img generation: the outcomes vary dramatically and lack proper correlation with one another, which suggests that the image model needs more detailed adjustment.

When an audience member stands in front of an AI model and tells it, “I want a mutant plant in a particular environment”, the model may not be able to understand such a vague description. Generating and continuously animating our conceptual mutant plants in real time would probably require not only a computer powerful enough to draw hundreds of frames in seconds but also a model trained accurately on plant morphology and able to originate new forms by itself.

In online Stable Diffusion forums and communities, many LoRA (Low-Rank Adaptation) models are shared. These are small add-on models that fine-tune a base model, most commonly for generating specific characters and human figures, and they have been adapted and retrained over many generations across different categories. In the future, there may be models trained on a variety of subjects and objects that could have important applications in the design and art fields.
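LoRA models are small because they store only a low-rank update to a base model's weights: a weight matrix W (d × k) is adapted as W' = W + (α/r)·B·A, with B of size d × r and A of size r × k for a small rank r. The Python sketch below uses plain lists and an illustrative 768 × 768 layer with rank 4 (assumed sizes, not taken from any particular model) to show the merge and the parameter savings.

```python
def matmul(a, b):
    # Plain nested-list matrix product: (n x m) @ (m x p) -> (n x p).
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def lora_merge(w, b, a, alpha):
    # W' = W + (alpha / r) * B @ A, where B is d x r and A is r x k.
    r = len(a)
    delta = matmul(b, a)
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]

def param_counts(d, k, r):
    # Numbers to store: the full matrix W vs. the two low-rank factors B and A.
    return d * k, r * (d + k)

full, low_rank = param_counts(768, 768, 4)  # illustrative layer size and rank
print(full, low_rank)

w = [[1.0, 0.0], [0.0, 1.0]]
b = [[1.0], [2.0]]      # d x r with r = 1
a = [[3.0, 4.0]]        # r x k
print(lora_merge(w, b, a, alpha=1.0))
```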

reference:

Adrian’s soapbox (no date) Understanding Perlin Noise. Available at: https://adrianb.io/2014/08/09/perlinnoise.html (Accessed: April 27, 2023).

Liu, J. et al. (2020) Deep Learning for Procedural Content Generation, arXiv.org. Available at: https://arxiv.org/abs/2010.04548 (Accessed: April 27, 2023).

Liu, J. et al. (2020) “Deep Learning for Procedural Content Generation,” Neural Computing and Applications, 33(1), pp. 19–37. Available at: https://doi.org/10.1007/s00521-020-05383-8.

Yan, X. (2023) “Multidimensional graphic art design method based on visual analysis technology in intelligent environment,” Journal of Electronic Imaging, 32(06). Available at: https://doi.org/10.1117/1.jei.32.6.062507.

Hello and welcome to my personal report.

Intro:

As a sound designer, I have contributed to more than just the sound component of our project. From the initial concept and exhibition framework to the final presentation, I have been involved in every step of the process. As a result, I believe that my contributions have been integral to the success of the project.

In this report, I will be discussing my contributions to four different aspects of the project: the visual and interactive electronic flower, the project’s recording and sound effects, the project’s music, and my involvement in other parts of the project. Each section will detail my specific contributions and how they contributed to the overall success of the project.

I created an electronic flower with sound interaction because I realized the importance of sound. In addition to designing sound, I also incorporated visual elements into the flower, making it a beautiful and interactive creation. I am proud of my idea and believe that others will appreciate this aesthetically pleasing flower. By highlighting the impact of sound in this way, I hope to raise awareness and appreciation for this often-overlooked aspect of our environment.

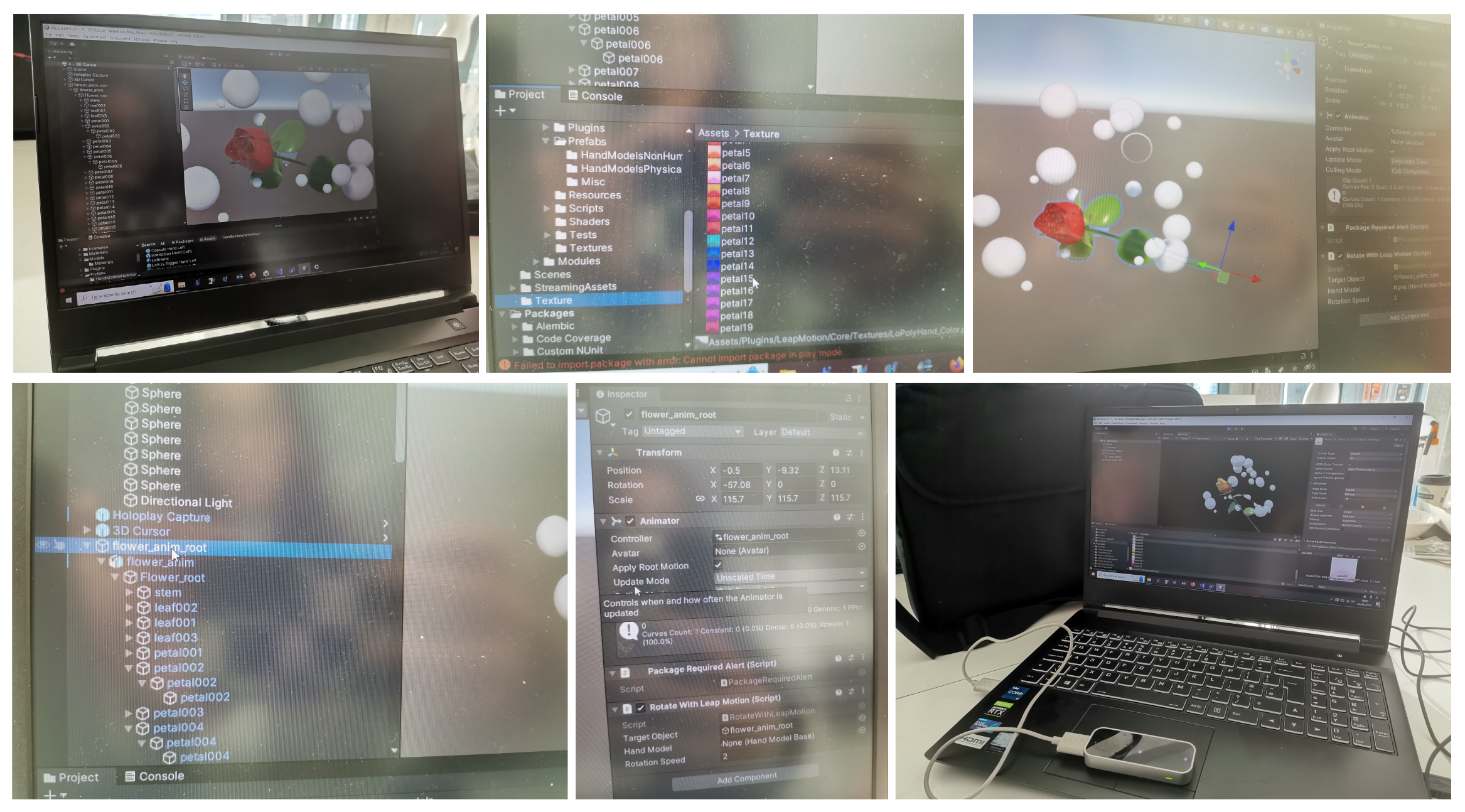

Part 1: The Electronic Flower

The visual and interactive electronic flower was the final piece of our group project. As the audience approached the end of the exhibition, they were greeted by the beautiful electronic flower. It was designed to be displayed on a large holographic projection to enhance its three-dimensional nature. The visual and interactive electronic flower not only provides a stunning visual display, but it also serves as a powerful demonstration of how sound can affect our environment. By reacting to different beats of the music, the flower creates a dynamic and engaging experience that shows how sound can alter our perception of space and enhance our sensory experience. This highlights the importance of sound in shaping our environment and underscores the need for thoughtful sound design to enhance our experiences.

Part 2:

Max and TouchDesigner are both powerful tools for audiovisual performance and interactive installations. In my project, Max was used to create the music and sound effects, while TouchDesigner was used to create visualizations that respond to the audio input. The two tools were connected using the Audio Device In CHOP in TouchDesigner, which allowed the real-time microphone input to be sent from Max to TouchDesigner for visualization. The project also lets the audience take part in the performance by adding their own sound effects, such as clapping. This adds a level of interactivity and engagement, making the experience more immersive and enjoyable for everyone involved. Overall, the project shows the potential of combining music and technology to create striking visual and audio experiences.
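The beat-reactive behaviour can be sketched as an audio-to-visual mapping: measure the loudness of each block of samples, smooth it with an envelope follower, and map the result to a visual parameter. The Python below is a hypothetical illustration with a synthetic signal and an assumed mapping to the flower's scale. It is not our TouchDesigner network, where this role is typically played by CHOPs such as Analyze and Lag.

```python
import math

def rms(block):
    # Root-mean-square level of one block of audio samples in [-1, 1].
    return math.sqrt(sum(s * s for s in block) / len(block))

def envelope(levels, attack=0.5, release=0.1):
    # One-pole follower: rise quickly on loud blocks, fall slowly afterwards.
    env, out = 0.0, []
    for level in levels:
        coeff = attack if level > env else release
        env += coeff * (level - env)
        out.append(env)
    return out

def to_scale(env_value, base=1.0, depth=0.5):
    # Hypothetical mapping from loudness to the flower's size.
    return base + depth * min(env_value, 1.0)

# Synthetic input: a 110 Hz tone that is loud for 4 blocks, then quiet for 4.
block_size, sample_rate = 512, 44100
blocks = []
for b in range(8):
    amp = 0.8 if b < 4 else 0.05
    start = b * block_size
    blocks.append([amp * math.sin(2 * math.pi * 110 * (start + n) / sample_rate)
                   for n in range(block_size)])

levels = [rms(b) for b in blocks]
scales = [to_scale(e) for e in envelope(levels)]
print([round(s, 3) for s in scales])
```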

Part 3:

Sound effects are a crucial component of this project. The challenge lies in creating sounds that not only match the delicate nature of the flowers but also complement the music. Below is my summary of the sound effects used in this project.

Part 4: Others

As I mentioned before, my contribution to our group is not just limited to the audio part.

Firstly, my visualization work goes beyond audio, and what I visualized is very much in line with our theme.

Secondly, I made a significant contribution to the overall concept. During our first meeting, I introduced the concept of “evolution”, and it was precisely because of my suggestion that we were able to create an AI video using machine learning. Furthermore, I taught this method to one of our group members; I learned it from a well-known talk at the Central Academy of Fine Arts in China, where they made fish using the same method. At first, I used the “Runway” software, but later switched to another tool because Runway is paid. I also mentioned several times during our meetings that this machine learning could be run in the cloud, and later taught that to another group member.

As for why we did not all make AI videos, it was because we really wanted to present more things to the audience, including more of ourselves. I have good relationships with classmates in other groups, and we always communicate with each other. I know that everyone has many ideas, and this peer competition motivated me and my group members to work hard and learn as much as possible. If we were to compare workloads, I believe I have done the most. My part never depended on or troubled my group members, and I made efforts to help them in many areas, including taking photos, testing links and so on, which demonstrates my sense of responsibility. I also wrote some C# code and took part in testing, which cannot be fully documented. Because of all these responsibilities, I had to postpone completing my personal report.

Because my group members had never used Unity before, I taught them a lot in the second part, and many of the features they wanted were things I taught them. At first, my group members thought all animations in Unity were written in code and wanted me to write all the animation code. Of course, this is not to criticize them; it is great that everyone grew by the end, and completing the whole project was not easy. There were also many things I didn't understand. At first, I knew nothing about TouchDesigner, but my group members wanted me to learn it, so I learned it immediately and made the visualization work well. My undergraduate major is directing, so I may be more sensitive to visuals. I don't know much about music, so I am working hard to learn. I know that maybe I'm not doing very well, and I was almost on the verge of collapse when I found out I was the only sound designer. But I got through it and tried very hard to solve problems myself instead of passing them on to my group members.

Our team was already very small, with only five people, and I feel really upset that communication with some of my team members was not always smooth. For several days, I felt really depressed because of language barriers, and one team member had no idea of my workload. I have already done a lot, but in his eyes, I am not as good as him, even though he just used an app someone else wrote. We had ten meetings, but he only showed up four times. I have further evidence for what I say, and medical records from my hospital visits, if needed. The pressure has been too much. I actually don't want to complain in my personal statement; thanks to everyone.

Music

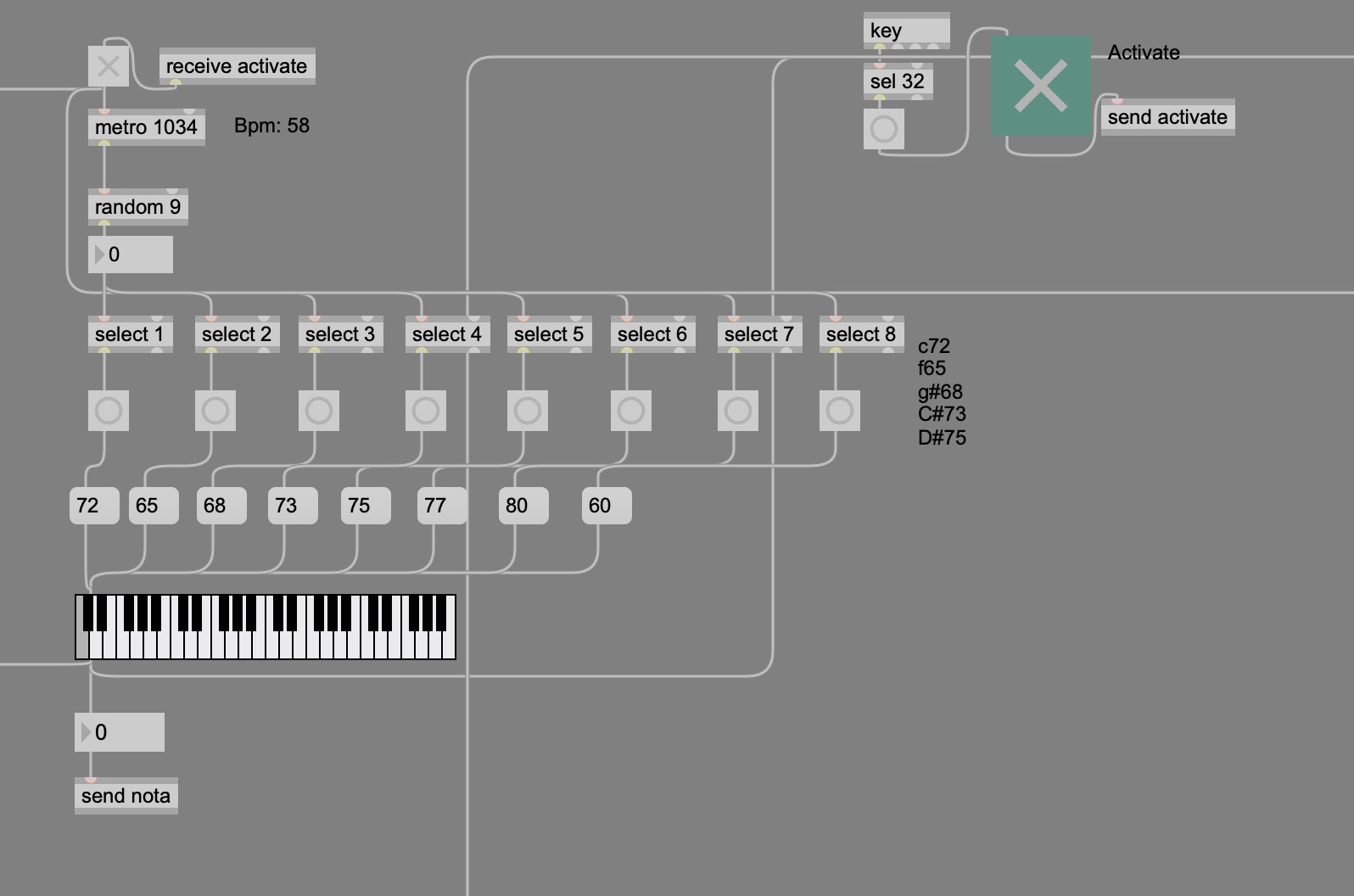

C 72, F 65, G# 68, C# 73, D# 75 (note names with their MIDI note numbers)

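The chords formed by these notes sound harmonious together. C, F and G# (A♭) form an F minor triad, whose notes all belong to the C minor scale, while C# and D# belong to the C# major scale. In fact, all five notes fit within C# major, where F is spelled E# and C is spelled B#, which may help explain why they blend so well.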
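The note analysis can be checked with a short Python sketch that converts the MIDI numbers listed above to note names (assuming the common convention MIDI 60 = C4) and tests their pitch classes against scales built from interval patterns.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]
NATURAL_MINOR_STEPS = [2, 1, 2, 2, 1, 2, 2]

def midi_to_name(n):
    # Pitch class from n % 12, octave from n // 12 (MIDI 60 = C4).
    return f"{NOTE_NAMES[n % 12]}{n // 12 - 1}"

def scale_pitch_classes(root_pc, steps):
    # Walk the interval pattern from the root; the last step returns to the octave.
    pcs, pc = {root_pc}, root_pc
    for s in steps[:-1]:
        pc = (pc + s) % 12
        pcs.add(pc)
    return pcs

notes = [72, 65, 68, 73, 75]
pcs = {n % 12 for n in notes}
print([midi_to_name(n) for n in notes])
print("all in C# major:", pcs <= scale_pitch_classes(1, MAJOR_STEPS))
print("all in C minor:", pcs <= scale_pitch_classes(0, NATURAL_MINOR_STEPS))
print("C, F, G# = F minor triad:", {n % 12 for n in (65, 68, 72)} == {5, 8, 0})
```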

C and F are typically considered natural, calm and warm, suitable for creating relaxing music. G# and C# are considered mysterious and enigmatic, and C# and D# are often used to create a mysterious or fantastical ambiance; these notes add some vitality and dynamism to my music. Together, the notes create a mysterious atmosphere, and they are also chosen to complement the project's main visual element, the plants.

Overall, they can convey emotional colors and atmospheres related to the theme of interaction between plants and humans in my music.

Drum

Drums are often used in music to create a rhythmic foundation and drive the energy of a piece. In the context of my project, the drum is used to simulate the beating of a plant’s “heart” and to represent the pulsing energy of nature.

I have added a lot of resonance to the drum section, which can help create a more vibrant and dynamic sound, and also add a sense of spaciousness and depth to the overall mix.

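I wrote the following expression in a Max `if` object to adjust the rhythm of the drums:

```
if $i1 == 1 then $i1 else out2 $i1
```

When the incoming integer is 1, it is passed out of the left outlet; any other value is sent out of the right outlet (`out2`), so the two streams can be processed separately.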
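For readers unfamiliar with Max, the routing of this expression can be restated in Python: a value of 1 leaves the left outlet, and `out2` sends every other value to the right outlet. The input stream below is hypothetical.

```python
def route(values):
    # Mirrors `if $i1 == 1 then $i1 else out2 $i1`:
    # 1 goes to the left outlet, anything else to the right outlet.
    left, right = [], []
    for v in values:
        if v == 1:
            left.append(v)
        else:
            right.append(v)
    return left, right

steps = [1, 0, 2, 1, 3, 1]  # hypothetical stream of step values
print(route(steps))
```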

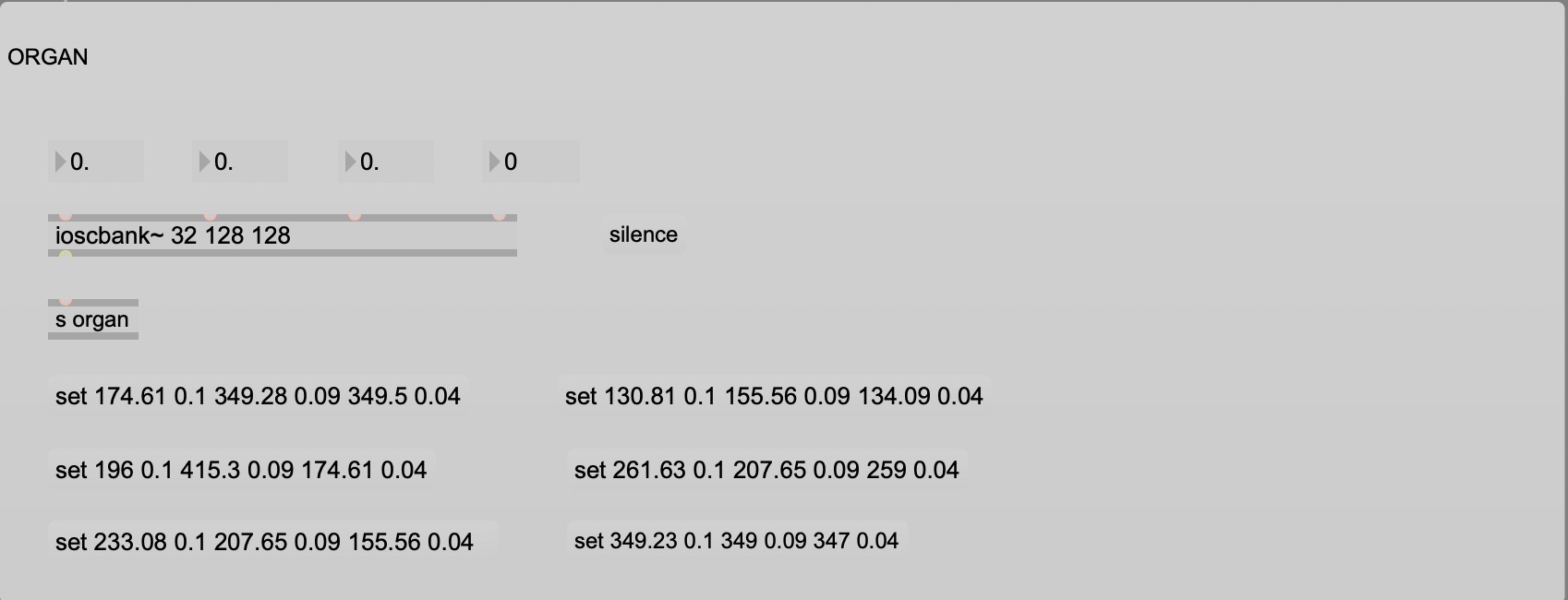

Organ

Organs are known for their rich and powerful sound, which can evoke a sense of majesty or grandeur. The reason for using the organ is to create a mysterious and ethereal atmosphere in the music and to create a sense of wonder in the listener.

I used the ioscbank~ object (an interpolating oscillator bank) in Max to layer several sine partials in the organ section and achieve a multi-timbral effect. This makes the organ sound more diverse and colorful and gives it more expressiveness.
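An oscillator bank builds a tone by summing sine partials, which is additive synthesis. The Python sketch below illustrates the idea for one organ note. The harmonic numbers and amplitudes are assumptions loosely modelled on organ drawbars, not the values in my patch, and the sketch does not reproduce ioscbank~'s interpolation.

```python
import math

def midi_to_hz(note):
    # Equal temperament, A4 (MIDI 69) = 440 Hz.
    return 440.0 * 2 ** ((note - 69) / 12)

def organ_tone(note, seconds=0.5, sample_rate=44100,
               partials=((1, 1.0), (2, 0.5), (3, 0.3), (4, 0.25), (8, 0.1))):
    # Additive synthesis: sum sine partials given as (harmonic number, amplitude).
    f0 = midi_to_hz(note)
    total_amp = sum(a for _, a in partials)
    out = []
    for n in range(int(seconds * sample_rate)):
        t = n / sample_rate
        s = sum(a * math.sin(2 * math.pi * h * f0 * t) for h, a in partials)
        out.append(s / total_amp)  # normalise so the peak stays within [-1, 1]
    return out

samples = organ_tone(72)  # C5, one of the notes in the chord
print(len(samples), round(midi_to_hz(72), 2))
```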

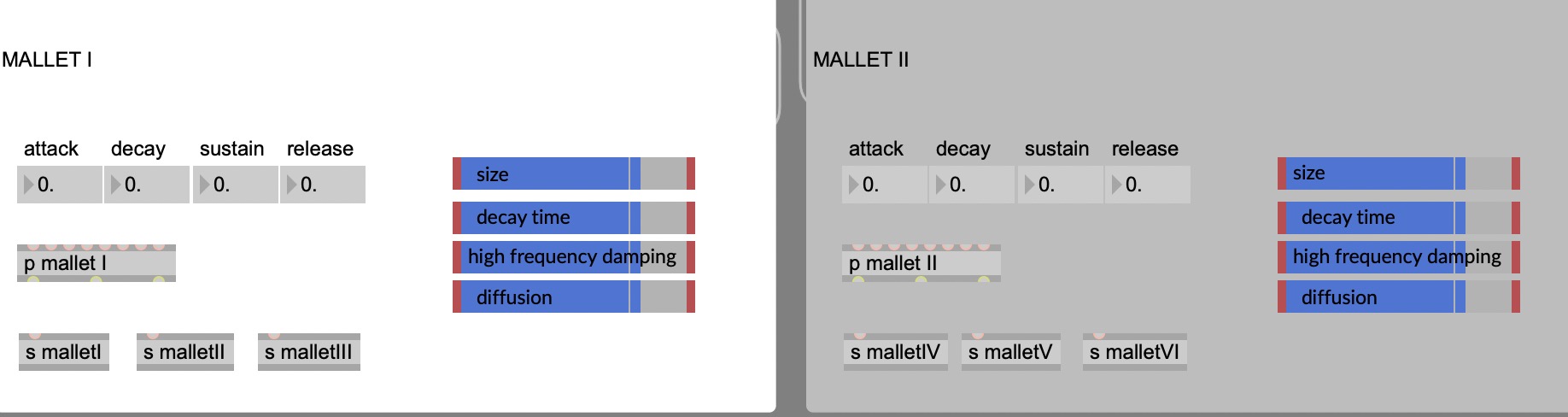

Mallet

Mallet instruments have a bright and sparkling sound that can add a sense of playfulness or whimsy to music. In my project, the mallet could be used to represent the delicate and intricate beauty of plants and flowers and to create a sense of joy.

Adjusting the ADSR in real-time during performance can allow me to follow any irregular changes in the plant’s behavior, which can enhance the overall musical experience and make it more dynamic and responsive.
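A linear ADSR (attack, decay, sustain, release) envelope can be written as a small function whose parameters are ordinary arguments, so they can be changed from one note to the next. The Python sketch below is a hypothetical stand-in for Max's own envelope objects; the default times and sustain level are arbitrary choices.

```python
def held_level(t, attack, decay, sustain):
    # Level while the note is held: linear attack to 1, linear decay to sustain.
    if t < attack:
        return t / attack
    if t < attack + decay:
        return 1.0 - (1.0 - sustain) * (t - attack) / decay
    return sustain

def adsr(t, gate_time, attack=0.01, decay=0.1, sustain=0.6, release=0.3):
    # Envelope level (0..1) at time t seconds for a note held for gate_time seconds.
    if t < 0:
        return 0.0
    if t < gate_time:
        return held_level(t, attack, decay, sustain)
    # Release: fade linearly from whatever level the note had reached at note-off.
    start = held_level(gate_time, attack, decay, sustain)
    return max(0.0, start * (1.0 - (t - gate_time) / release))

# Parameters can differ per note, e.g. a shorter release for a more abrupt change.
soft = [round(adsr(t / 10, gate_time=1.0), 3) for t in range(15)]
sharp = [round(adsr(t / 10, gate_time=1.0, release=0.1), 3) for t in range(15)]
print(soft)
print(sharp)
```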

I used six mallets to create a fuller sound with richer overtones, set up in a way that could reduce the computational load.

Noise

I also added some noise, which represents the unpredictable and uncontrollable aspects of nature.

SET UP

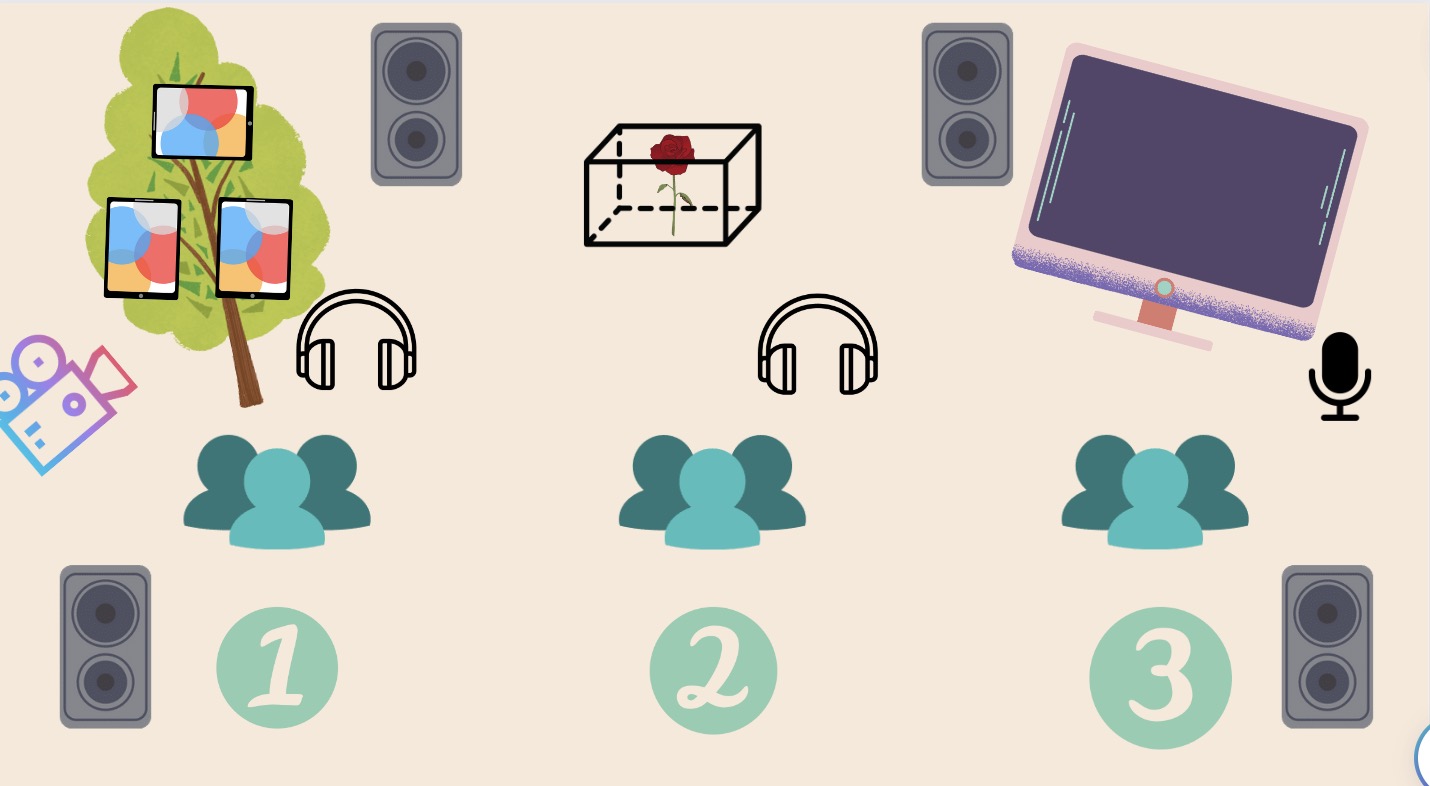

As shown in the diagram, the audio for the first part (the patchbay) and the second part (the Looking Glass display) can be heard through the headphones next to each device. Both are Bluetooth headphones connected to a computer hidden behind the stand. The audio from the first part is routed directly to TouchDesigner, and the audio from the second part to Wwise. The third part has a microphone connected to TouchDesigner for visualization, so the effect changes based on the audience's input.

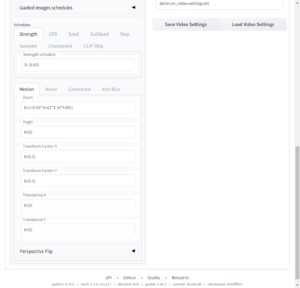

While researching how to create morphological variation between our plant concept images, I found the Deforum extension, which might achieve the desired effect of bringing the mutated plants to life. It lets us morph between text prompts and images using Stable Diffusion.

There are many parameters in Deforum.

I tried different approaches while making our plant mutation footage. At the start, I used prompts only. The results suggest that prompt words alone leave the video failing to draw the plants correctly at points where they should appear. Both demos use a camera zoom setting, yet the plants sometimes disappear from the picture. These attempts demonstrated the limitations of using prompts alone to generate animation and showed how uncontrollable diffusion-based video generation can be.

In Stable Diffusion, the CFG (classifier-free guidance) scale controls how closely the image generation follows the text prompt (sometimes it creates unwanted elements from the prompts). The same setting applies in Deforum's video generation workflow; in addition, there are Init, Guided Images and Hybrid Video settings that I needed to work out in order to morph between images and prompts.
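Classifier-free guidance combines two predictions at each denoising step, one without the prompt and one with it, and extrapolates by the CFG scale s: ε̂ = ε_uncond + s·(ε_cond − ε_uncond). The Python sketch below applies this to toy vectors standing in for the model's noise predictions. Larger scales push further along the prompt direction, which can exaggerate prompt elements.

```python
def cfg_combine(uncond, cond, scale):
    # Classifier-free guidance: start from the unconditional prediction and move
    # `scale` times along the direction towards the prompt-conditioned one.
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]

uncond = [0.0, 0.0]  # toy stand-ins for the model's noise predictions
cond = [1.0, 2.0]
for scale in (1.0, 4.0, 7.5):
    print(scale, cfg_combine(uncond, cond, scale))
```

A scale of 1 returns the conditioned prediction unchanged; a scale of 0 ignores the prompt entirely.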

Under the ‘Motion’ tab, there are settings for camera movement. I applied them in many attempts at generating videos, but in the end I did not use them in our video.

An early version of the video, generated from text prompts

Example of incorrect settings that resulted in tiled pictures

In this sample, the output is slightly better when using both prompts and guided images as keyframes. The process managed to draw the plant right in the centre of the picture. However, there are still some misrepresentations in the generated video. For example, instead of morphing smoothly between the stages of the plant's transformation, it switches from one picture to the next like a slideshow. Moreover, when I typed prompts like “razor-sharp leaves” and “glowing branches”, the image was drawn incorrectly: artificial razor blades came out of the plant's leaves.

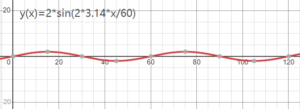

This parameter is specified in the form `0:(x)`, where x can be a constant or a function of the frame number t. If x equals `1`, the camera stays still. Otherwise, x sets the speed of the camera movement: when it is greater than 1, the camera zooms in, and when it is less than 1, the camera zooms out.

The Zoom setting here is `0: (1+2*sin(2*3.14*t/60))`. In the output video, the camera zooms in from frame 0 to frame 30 and zooms out from frame 30 to frame 60 (the movement speed drops to 0 at frames 30 and 60), and this movement repeats every 60 frames. The sample video below uses the same type of function, but its movement goes: zoom in, stop, zoom in again, stop again.
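The schedule can be checked by evaluating it per frame. In the Python sketch below, t is the frame number, as in the expression above. It also shows a caveat: with an amplitude of 2, the value drops below 0 between roughly frames 35 and 55, so an amplitude below 1 would keep the zoom factor positive throughout.

```python
import math

def zoom(t):
    # Zoom schedule `0: (1+2*sin(2*3.14*t/60))`, with t as the frame number.
    return 1 + 2 * math.sin(2 * 3.14 * t / 60)

for frame in (0, 15, 30, 45, 60):
    print(frame, round(zoom(frame), 3))

# Frames where the expression goes below 0 (a negative scale factor,
# rather than a simple zoom out).
negative = [t for t in range(61) if zoom(t) < 0]
print(negative[0], negative[-1])
```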

To create the intended effect of morphing between two things, I employed another type of function. Below is the prompt I entered in its settings.

```json
{
  "0": "(cat:`where(cos(6.28*t/60)>0, 1.8*cos(6.28*t/60), 0.001)`), (dog:`where(cos(6.28*t/60)<0, -1.8*cos(6.28*t/60), 0.001)`) --neg (cat:`where(cos(6.28*t/60)<0, 1.8*cos(6.28*t/60), 0.001)`), (dog:`where(cos(6.28*t/60)>0, -1.8*cos(6.28*t/60), 0.001)`)"
}
```

The prompt function is intended to show a cat at the beginning of the video. As the video plays, the cat morphs into something else: by frame 30 it has become a dog, and by frame 60 it has changed back into a cat, with the cycle repeating every 60 frames.
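The weights can be evaluated frame by frame in Python, reading Deforum's `where(cond, a, b)` as "a if cond else b" and using `math.cos` for the expression. This sketch confirms that the cat dominates at frame 0 and the dog at frame 30. It also shows that in the `--neg` part as recorded, the active weights come out negative (for example, about -1.8 for the cat at frame 30); mirroring the positive part's signs would make them positive, if that was the intent.

```python
import math

def positive_weights(t, period=60, peak=1.8, floor=0.001):
    # Mirrors the positive part of the prompt: where(cond, value, 0.001).
    c = math.cos(6.28 * t / period)
    cat = peak * c if c > 0 else floor
    dog = -peak * c if c < 0 else floor
    return cat, dog

def negative_weights_as_recorded(t, period=60, peak=1.8, floor=0.001):
    # Mirrors the --neg part exactly as written in the prompt.
    c = math.cos(6.28 * t / period)
    cat = peak * c if c < 0 else floor
    dog = -peak * c if c > 0 else floor
    return cat, dog

for frame in (0, 15, 30, 45, 60):
    cat, dog = positive_weights(frame)
    print(frame, round(cat, 3), round(dog, 3))
```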

Example of prompt and guided-image input in a later video generation

Inserted keyframe images and prompts should be spaced equally from their previous and next keyframes, e.g.:

```json
{
  "0": "prompt A, prompt B, prompt C",
  "30": "prompt D, prompt E, prompt F",
  "60": "prompt G, prompt H, prompt I"
}
```
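Evenly spaced keyframes can also be generated rather than typed by hand. The Python helper below is a hypothetical sketch that assigns each prompt to frame 0, interval, 2 × interval and so on, and serialises the result as JSON; the interval of 30 frames matches the example above.

```python
import json

def prompt_schedule(prompts, interval=30):
    # Place each prompt on an evenly spaced keyframe: 0, interval, 2*interval, ...
    return {str(i * interval): p for i, p in enumerate(prompts)}

schedule = prompt_schedule([
    "prompt A, prompt B, prompt C",
    "prompt D, prompt E, prompt F",
    "prompt G, prompt H, prompt I",
])
print(json.dumps(schedule, indent=2))
```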

reference:

https://github.com/nateraw/stable-diffusion-videos

https://github.com/deforum-art/deforum-for-automatic1111-webui

At the beginning, I did not have a good image model for drawing plants. Midjourney runs its AI model through a tool that is very friendly to beginners. I generate images just by inputting a set of prompts, including phrases describing certain plants, ecological settings, backgrounds, etc. I get a set of 4 sample images and can generate another set of variants if the images are not satisfactory.

I used this tool to get some visualisations and inspiration for our initial concept of the plants, drawing on Midjourney's powerful model trained on enormous amounts of data. Most importantly, a well-developed model is essential to the outcome, because how precisely the model understands the input determines how closely the generated images match what was intended.

I used Midjourney at the beginning because it provides a relatively consistent style and tone across results generated from the same prompts. Midjourney provided me with a set of nice concept images in the early stages. However, due to licensing limitations, we could not directly use Midjourney's images in our finished presentation.

Later, I set up Stable Diffusion and started looking into how to run it locally to create concept images.

To gain visual references from concept art illustrations and designs, I also appropriated elements for the plants' appearance from a variety of image websites such as Pinterest.

Drawing on sources about plant studies, plant development and ecological evolution, I drew up a series of prompts as additional input beyond the ChatGPT phrases, to achieve the ideal output from the text-to-image process. Since many ideas of the plants' ‘bio-morphosis’ are non-specific and sometimes fantastical within an environmentalist narrative, the making process is driven largely by imagination. Even human concept artists would have a hard time representing the plants and their environments, because the subject we are creating does not exist in the real world. I therefore modified every prompt source by specifying the setting and contextual information, making the prompts easier for image AI tools to interpret.

At the very beginning, sample images generated by a default SD model were unsatisfactory, so I adjusted the prompts to improve the images' quality. Simply inputting a few prompts into txt2img to create a plant does not work.

A plant's composition is complex: it has stalks, leaves, a root system, buds, etc., and there is no quick way to generate all of these parts in good detail at the same time. Therefore, I used long strings of prompts to generate a single plant in each picture. The number of generated concept illustrations was huge, and only a few high-quality ones could be selected for the next stage of the process.

After exploring, I found that to make a generated picture look more like a concept design, I need to add more specific styling descriptions and, at some point, mention ‘by’ a particular illustrator or artist. In the prompt field, phrases placed earlier carry more weight in the generation than phrases placed later, so putting a prompt before the others gives it a greater impact on the result. This also applies to negative prompts.
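The ordering rule can be captured in a small helper. The Python sketch below is hypothetical: it sorts phrases by a priority number so the most important come first, and uses the AUTOMATIC1111 WebUI's `(phrase:weight)` emphasis syntax for explicit weights. The example phrases are illustrative only.

```python
def build_prompt(phrases):
    """Join (text, priority, weight) tuples into one prompt string.

    Higher-priority phrases are placed first, since earlier phrases tend to
    carry more influence; weights other than 1.0 use the (text:weight) syntax.
    """
    ordered = sorted(phrases, key=lambda p: p[1], reverse=True)
    parts = []
    for text, _, weight in ordered:
        parts.append(text if weight == 1.0 else f"({text}:{weight})")
    return ", ".join(parts)

positive = build_prompt([
    ("wasteland background", 1, 1.0),
    ("concept art of a mutated cactus", 3, 1.0),
    ("glowing spines", 2, 1.2),
])
print(positive)
```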

I put one of Jiaojiao's sketches into Stable Diffusion's img2img to convert it into new sketches, entering different prompts at different steps of the process.

When creating the cactus illustrations, I used Photoshop to remove the background from multiple images, took one or more pieces from different plants and patched them together into a new image. These samples, stitched together from the parts and tissues of different plants, were then sent to img2img and redrawn to generate more variants.

In addition, I generated a series of images depicting wasteland and post-apocalyptic landscapes to serve as backgrounds for the mutating cactus. I then removed the background of the cactus concept images, placed the cactus in the middle of each scene, and redrew them. In the last round of redrawing, I turned down the CFG scale and refined the prompt so that the basic composition and tone of the image would stay consistent.

Tools used:

https://docs.midjourney.com/docs/midjourney-discord

https://github.com/deforum-art/deforum-for-automatic1111-webui

https://huggingface.co/lambdalabs/image-mixer

https://huggingface.co/spaces/fffiloni/CLIP-Interrogator-2

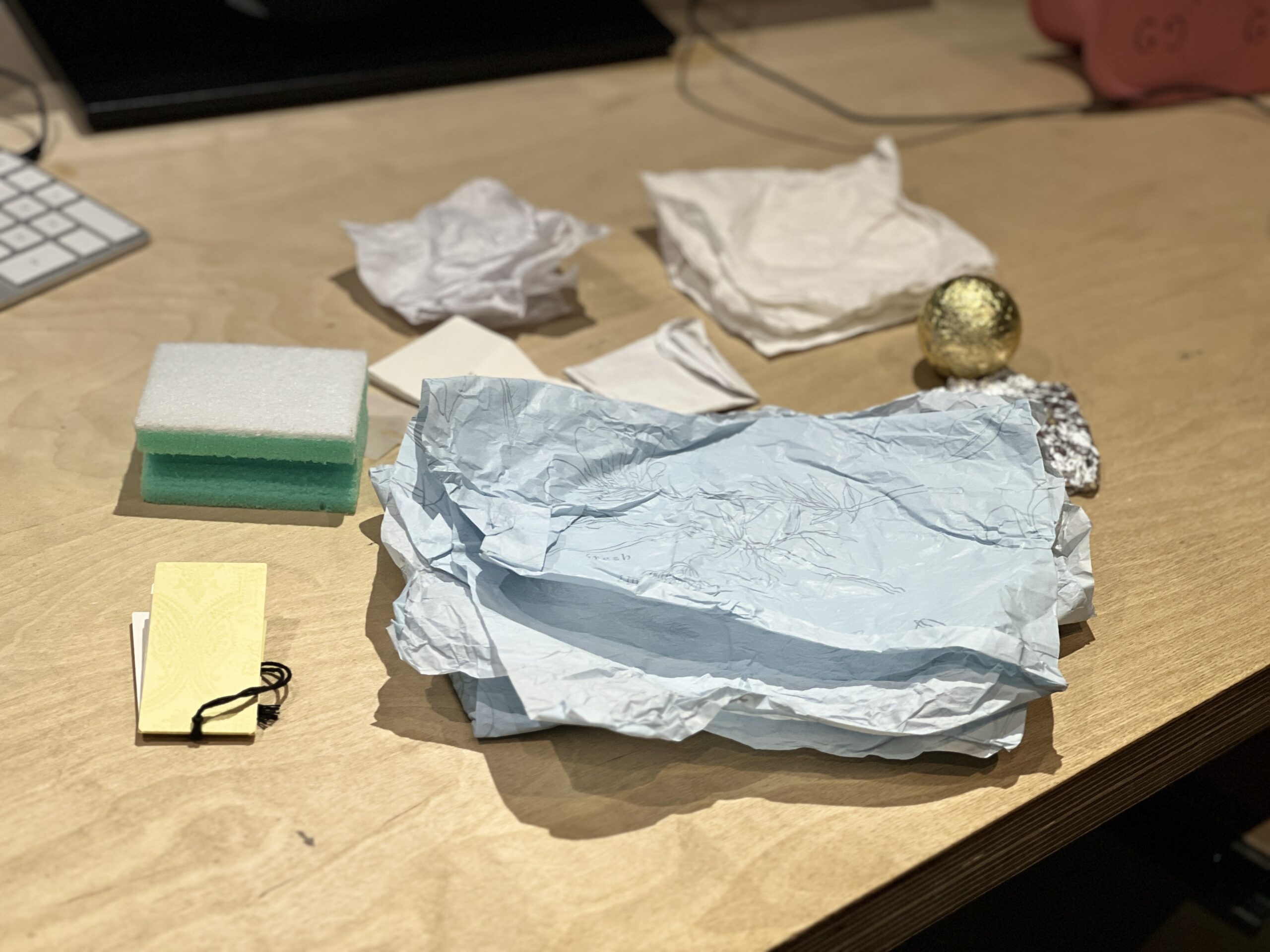

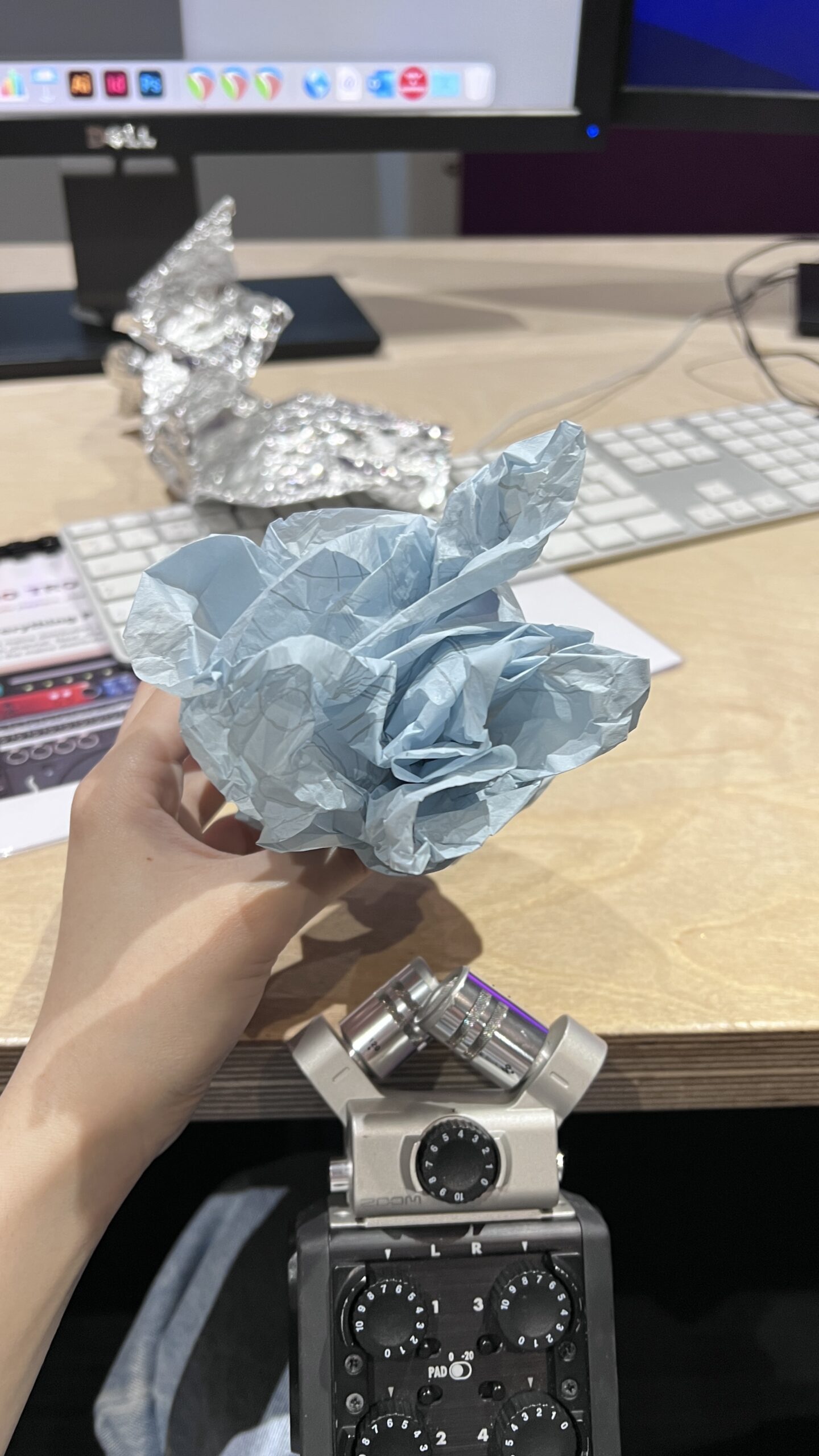

In the initial stages of my project, I consulted my teacher on how to simulate the sound of a flower opening. My teacher suggested using objects such as unfolded paper to create the sound. However, I found that a full sheet of tissue paper produced too much noise, whereas cutting the tissue paper into small pieces, roughly one square centimeter in size, resulted in a purer sound. I then used these small pieces and mimicked the motion of gently stroking flower petals to create a more delicate sound. Throughout the process, I kept in mind the need to convey a sense of gentleness and delicacy in the sound.

Another approach I took was to use a porous sponge cloth to create a sound effect for one of the flowers. This worked well as the texture of the sponge matched the porous texture of the flower petals. During playback, the sound was transmitted via Bluetooth headphones connected to a nearby tree-shaped audio station. This allowed visitors to experience the delicate sounds of the flowers without interfering with the music playing throughout the exhibition space.

My tasks in the group were design, modelling, animation and making the hand model. I also took part in collecting sounds. My team members come from various disciplines, so I imagined that our team could offer visitors a series of interesting interactive experiences through an interdisciplinary approach.

Throughout the project, I gained a lot of insight and improved my abilities. First of all, I made great progress in design. I learned how to design models in two different programs, C4D and Unity, how to design according to user needs and usage scenarios, and how to use a variety of tools for prototyping, design and testing.

Second, I learned how to communicate and collaborate better. In the group, we need to constantly exchange ideas and progress to ensure that the project can be completed on time. Through constant discussion and feedback, I have learned to express myself better and accept suggestions from others. At the same time, I also learned more about the importance and efficiency of teamwork.

In addition, we encountered many challenges and difficulties during the project. By discussing problems as a group and finding appropriate solutions together, we eventually completed the project. This communication process made me more aware of the importance of teamwork: in the face of difficulties, teamwork can improve efficiency and reduce individual burdens, and it also allows us to learn and grow from each other through communication and cooperation.

Overall, I have benefited a lot from participating in the interactive installation group project. It not only improved my design ability but also gave me a deeper understanding of the importance and value of teamwork. I believe that these experiences and insights will be of great help to my future development and career.

At the same time, I also built the model of the flower in C4D, and made the material map in Unity.

There are science videos and documentation on how plants mutate in different environments, from which we can see that a plant's traits can be inherited from its family and mutate in various ways in different ecological environments. Plants and the environment in which they grow and reproduce also affect each other.

Biology in Focus Ch 26 The Colonization of Land by Plants

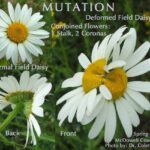

For example, some science videos mention a flower that is pink when grown in acidic soil and purple when grown in neutral or alkaline soil. Some materials have shown that daisies affected by nuclear wastewater grew misshapen and split open.

Mutated daisies near the Fukushima nuclear power plant

I used ChatGPT to gain inspiration for our project's context by asking it about “possible characteristics of mutant plants”, “how plants would evolve under the impact of environmental changes”, “ideas of plants' morphosis and changes in a post-apocalyptic world”, etc., and I collected ideas and inspiration from the descriptions in its responses.

Examples from ChatGPT’s answers:

| Context/imaginary landscapes I used as prompts to the narrative | Ideas provided by ChatGPT |
| --- | --- |
| Mutant plants in underwater habitat | ‘Vines grew to massive lengths’; ‘leaves were covered in tiny, hair-like structures’; ‘Leaves turned to face the sun like solar panels’; ‘Grew wild and untamed, roots delving deep into the rubble’ |
| On wasteland with extreme climate | ‘Leaves grew thicker to retain more water’; ‘leaves become broader, thicker, and more robust, with a strange iridescence’; ‘rough, scaly, or slimy texture’; ‘spines’ |
| On lands under the contamination of nuclear radiation | ‘stems were able to twist and turn in the direction of the light’; ‘Grew tall and twisted, leaves shimmering with an otherworldly energy’; ‘grew with giant, fleshy bulbs’ |

| Questions | Answers |
| --- | --- |
| Imagine how nuclear contamination affects aquatic plant habitats | Possible negative effects nuclear contamination may have on aquatic plant habitats: radiation exposure; soil and sediment contamination; disruption of food chains; toxic chemical exposure; changes in water chemistry; reduction in biodiversity; changes in plant community structure… |
| Imagine the appearance/physical characteristics of underwater plants in a contaminated environment | Possible characteristics include: dark or unusual coloring (novel pigments); abnormal growth patterns (altered morphology); unusual size; abnormal texture; hybridization; enhanced photosynthesis; enhanced reproductive strategies… |

In addition, inspired by these sources, including ideas collated from ChatGPT and plant studies, I listed some phrases to describe the mutated plants. Pairing them with descriptions of environmental change, which were used both to match background images and as input variables, I categorised the conditions and environmental variations into the following areas, adjusted according to the concepts in the plant-evolution flowchart.

Negative environment variables:

1. Waste (e.g. emissions of rubbish and industrial garbage): the plant grows thinner, synapse-like structures arise, and mycorrhiza-like growth proliferates

2. Contamination: the ends of the plant's buds and leaves darken, and the structure becomes deformed

3. Nuclear radiation: deformity, decay of the plant's peduncles and stems, mutation

Positive environment variables:

Plants grow abundantly, faster, taller and stronger, with leaves turning green and thriving…

A constant value:

Throughout the plant model's own animation, the plant keeps growing bigger and taller
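The variables above can be treated as a lookup table that turns conditions into prompt phrases. The Python sketch below is hypothetical: the trait wording condenses the list of environment variables, and the growth-stage size words stand in for the constant growth value.

```python
# Hypothetical mapping from environment variables to descriptive prompt phrases.
ENVIRONMENT_TRAITS = {
    "waste": "thinner growth, synapse-like tendrils, mycorrhizal proliferation",
    "contamination": "darkened bud and leaf tips, deformed structure",
    "nuclear radiation": "deformed, decaying peduncles and stems, mutated form",
    "positive": "abundant, fast, tall and strong growth, thriving green leaves",
}

def plant_prompt(base_plant, conditions, growth_stage):
    # growth_stage stands in for the constant value: the plant keeps getting bigger.
    traits = [ENVIRONMENT_TRAITS[c] for c in conditions]
    size = ("small", "medium", "large", "giant")[min(growth_stage, 3)]
    return ", ".join([f"{size} {base_plant}"] + traits)

print(plant_prompt("cactus", ["waste", "nuclear radiation"], 2))
```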
