As a final part of our project, we decided to divide up the documentation and write a separate section about each of the project components. It can all be found in the following PDF:

We have also created a video documentary:

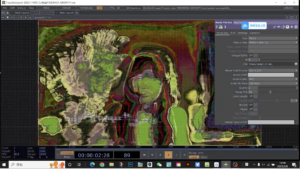

Just one day before the presentation, we had a very intense debugging session in Studio 4. Originally, I was scheduled to finalize the Wwise mix with Evan, but instead, we had to reconfigure the entire monitor setup for quad output and, most importantly, investigate why Unity was not allowing the sound to play in the required format.

We were all thinking very hard about the problem:

Eventually, Jules and Leo discovered that this was a known bug that had affected all Mac computers for over ten years. The final solution was to create a Windows build and run it from Leo’s laptop. The test we ran before the presentation was successful, confirming that all technical components were ready for use.

Up until Week 9, our plan included buttons and other sensors triggering one-shot sound effects. However, after shifting to four reactive ambiences instead, many fully prepared sound effect containers in Wwise remained unused. Personally, I did not want to lose the time and effort invested in creating them, and I believed the final soundscape would be much richer if these sounds were included. Therefore, I decided to integrate them into the scenes where they were originally intended to be played.

All sound effect containers—whether random, sequence, or blend—were set to continuous loop playback rather than step trigger, allowing them to play consistently throughout each scene.
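Continuous playback with a transition delay between items can keep several containers from lining up, especially when the delay is slightly randomized. The following minimal Python simulation illustrates this. It is not Wwise’s internal scheduler, and the container names, delays and jitter values are hypothetical.

```python
import random


def trigger_times(base_delay, jitter, duration, seed=None):
    """Simulate when a continuously looping container fires its next item.

    base_delay: nominal transition delay between items, in seconds (hypothetical)
    jitter: maximum random deviation applied to each delay, in seconds
    duration: length of the scene, in seconds
    """
    if jitter >= base_delay:
        raise ValueError("jitter must be smaller than base_delay")
    rng = random.Random(seed)
    times = []
    t = 0.0
    while True:
        # Each gap is the base delay plus a uniform random offset in [-jitter, +jitter]
        t += base_delay + rng.uniform(-jitter, jitter)
        if t > duration:
            return times
        times.append(t)


# Hypothetical settings: different base delays give each container its own rate
CONTAINERS = {
    "Breath_Female": (7.0, 1.0),
    "Breath_Male": (11.0, 1.5),
    "Breath_Reversed": (17.0, 2.0),
}

if __name__ == "__main__":
    for name, (delay, jitter) in CONTAINERS.items():
        times = trigger_times(delay, jitter, duration=60.0, seed=1)
        print(name, [round(t, 1) for t in times])
```

The base delays here (7, 11 and 17 seconds) have no simple ratio between them, so the three layers rarely coincide. The jitter also stops any single layer from sounding mechanical.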

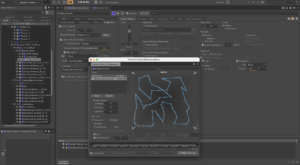

For example, the breath random containers (Female, Male, Reversed) were assigned different transition delay settings (with slight randomization), so that each container would trigger at a distinct periodic rate. Additionally, I implemented complex panning automation to move the sounds across all speaker directions, using varied transition timings and timeline configurations.

By contrast, the human voice sounds in Depression received more, shorter panning paths with longer timelines:

The first technique works better for large, fast-changing movements, while the second gave me more control over the panning direction.
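The panning automation itself lives in Wwise. As a rough illustration of what moving a sound around the quad system means for each speaker, here is a constant-power pairwise panning sketch in Python. It is not Wwise’s panning algorithm, and the speaker angles assume a square quad layout.

```python
import math

# Assumed square quad layout: azimuths in degrees, clockwise from straight ahead
SPEAKERS = [("FL", -45.0), ("FR", 45.0), ("BR", 135.0), ("BL", 225.0)]


def quad_gains(azimuth_deg):
    """Constant-power pairwise panning of one source across four speakers."""
    # Rotate so FL sits at 0 degrees, then wrap into [0, 360)
    a = (azimuth_deg + 45.0) % 360.0
    segment = int(a // 90.0)               # 90-degree sector between two speakers
    frac = (a - segment * 90.0) / 90.0     # position inside that sector, 0..1
    index = segment % 4                    # guards against a == 360.0 after rounding
    gains = {name: 0.0 for name, _ in SPEAKERS}
    first = SPEAKERS[index][0]
    second = SPEAKERS[(index + 1) % 4][0]
    # cos/sin law keeps the summed power (sum of squared gains) at 1
    gains[first] = math.cos(frac * math.pi / 2)
    gains[second] = math.sin(frac * math.pi / 2)
    return gains


if __name__ == "__main__":
    # A slow full circle in 45-degree steps
    for az in range(-45, 316, 45):
        g = quad_gains(az)
        print(az, {k: round(v, 3) for k, v in g.items()})
```

You can sweep `azimuth_deg` over time in two ways. Large, fast jumps resemble the first technique, while short, slow steps resemble the second.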

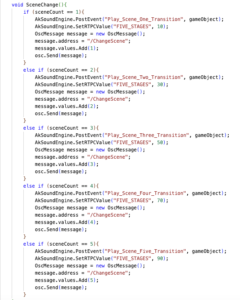

I also added five one-shot transition events, called from Unity when the scene changes:

We had enough time to test all components prior to the presentation, and I was also able to make last-minute mix adjustments in the Atrium, which greatly contributed to the overall balance of the soundscape.

The RTPC values controlling the distance–loudness relationship for the ambience proximity sensors were also modified to fade out at approximately 65 (on a scale of 0–100), instead of the previously used value of 30. This change allowed for a smoother fade over a longer distance range.
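Moving the fade end from 30 to about 65 spreads the fade out, and a small Python sketch of the proximity RTPC shows why. It assumes a straight-line curve in dB, from 0 dB at a sensor value of 0 down to -192 dB at the fade end. The actual curve shape in our Wwise project may differ.

```python
MIN_DB = -192.0  # floor of the proximity RTPC curve


def proximity_gain_db(sensor_value, fade_end=65.0):
    """Volume offset (dB) for a proximity ambience, assuming a linear-in-dB curve."""
    v = min(max(sensor_value, 0.0), fade_end)  # values past the fade end stay at the floor
    return MIN_DB * v / fade_end


def db_to_amplitude(db):
    """Convert a dB offset to a linear amplitude factor."""
    return 10 ** (db / 20)


if __name__ == "__main__":
    for value in (5, 10, 20, 30):
        old = proximity_gain_db(value, fade_end=30.0)
        new = proximity_gain_db(value, fade_end=65.0)
        print(f"sensor {value:>2}: {old:7.1f} dB (end 30)   {new:7.1f} dB (end 65)")
```

Under this assumption, the old setting leaves the ambience already 64 dB down at a sensor value of 10. Nearly all of the audible change therefore happens in the first few units. With the fade end at 65, the same value gives about -29.5 dB, which leaves a much wider usable range.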

I felt incredibly proud and happy when the lights, sound, and visuals finally came together. With more people on the sound team than on visuals, I felt a certain pressure for the audio to stand out. Even just hours before the presentation, I worried it wouldn’t feel rich enough or convey the intended emotions. But when everything was played together, I thought it turned out pretty amazing!! I’m quite pleased with the final mix, and adding those last-minute sound effects was definitely worth it – they added so much more meaning to the scenes. I particularly appreciated the use of ‘human’ sounds, like footsteps and breaths. Given more time, I would have expanded on those elements further.

Personally, one area I would still refine is the harmony between visuals and sound. We received the initial visual samples before beginning the audio design, but some were later changed, which led to slight mismatches – particularly noticeable in the third stage, Bargaining.

It was wonderful to see so many people attend and interact with the installation. Understandably, everyone I spoke to needed a brief introduction to the theme, as our interpretation of grief was quite abstract. Still, the feedback was overwhelmingly positive, and many were impressed by the interactive relationship between audio and visuals.

I learned a lot about teamwork during these months, especially how crucial it is to find everyone’s personal strengths. Despite starting out a bit slow with assigning roles, I think by the last 2–3 weeks we all really felt focused on what we should do and how to contribute with maximum efficiency, and this clearly showed in the success of the presentation day:))

I am also grateful to have learned so much about Unity and Wwise. In particular, I found it fascinating to explore Wwise in a context so different from its typical use in game sound design!! Furthermore, seeing Kyra and Isha’s incredible work in TouchDesigner definitely inspired me to learn to use the software to create audio-visual content in the future.

Firstly, Evan’s computer, which was intended to run the bounced Unity project and the Max patch, was not compatible with the local Fireface sound card. As a result, we had to transfer all projects to my computer, install the RME software, and sync the Wwise session to the installed quad system. This significantly delayed our hands-on testing of the sound settings. Fortunately, we were able to book the room for the following day as well.

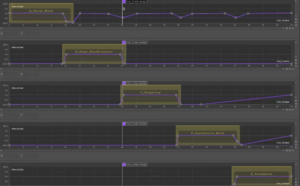

Once everything was finally set up and functioning, I was able to begin creating a more refined mix of the five ambiences in each stage, along with a “master mix” to ensure coherence among all five.

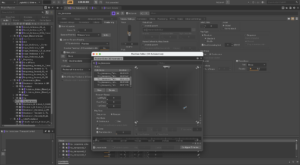

I utilized Wwise’s Soundcaster function, which greatly facilitated testing the maximum possible loudness (i.e., all ambiences at maximum volume per stage) and the dynamics between the consistent base ambience and the variable ones.
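The Soundcaster test is the real reference. Still, you can work out in dB a quick estimate of how much louder a stage gets when all five ambiences play at full volume. This Python sketch shows the two textbook bounds: uncorrelated signals add in power, while identical in-phase signals add in amplitude. The -12 dB levels in the example are hypothetical.

```python
import math


def power_sum_db(levels_db):
    """Combined level of uncorrelated sources: add powers, convert back to dB."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))


def coherent_sum_db(levels_db):
    """Worst case for identical, in-phase sources: add amplitudes, convert back to dB."""
    return 20 * math.log10(sum(10 ** (level / 20) for level in levels_db))


if __name__ == "__main__":
    stage = [-12.0] * 5  # hypothetical: Center atmo plus four directional atmos, all at -12 dB
    print(f"uncorrelated: {power_sum_db(stage):.1f} dB")
    print(f"coherent:     {coherent_sum_db(stage):.1f} dB")
```

For five equal layers, this gives roughly -5.0 dB and +2.0 dB respectively. Different ambience recordings are largely uncorrelated, so the first figure is usually the closer guide. The second shows the headroom the worst case would need.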

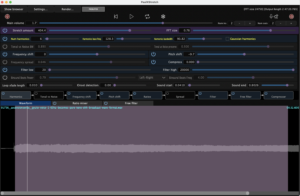

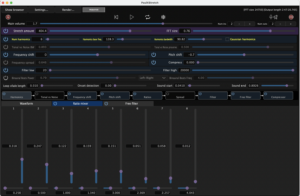

Below are several screenshots of the Soundcasters:

While the others were working in TouchDesigner to visualise the fades between stages, we set up the sensors alongside and successfully achieved:

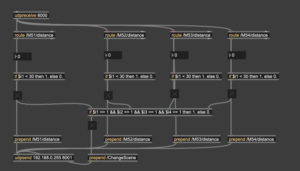

Here’s the Max patch for receiving OSC data from the four sensors:

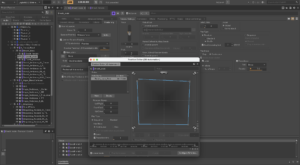

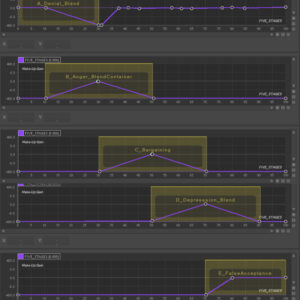
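For reference, here is what each OSC packet from a sensor contains before Max unpacks it. The following Python sketch parses a single OSC message using only the standard library. The address `/sensor/1` and port 9000 are hypothetical. Only float (`f`) and int (`i`) arguments are handled, and OSC bundles are not supported.

```python
import socket
import struct


def _read_padded_string(data, offset):
    """Read a null-terminated OSC string padded to a multiple of 4 bytes."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # Skip the terminator and the padding up to the next 4-byte boundary
    return text, (end + 4) & ~3


def parse_osc_message(data):
    """Return (address, [arguments]) for a single OSC message (no bundles)."""
    address, offset = _read_padded_string(data, 0)
    tags, offset = _read_padded_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in tags[1:]:
        if tag == "f":
            (value,) = struct.unpack(">f", data[offset:offset + 4])  # big-endian float32
        elif tag == "i":
            (value,) = struct.unpack(">i", data[offset:offset + 4])  # big-endian int32
        else:
            raise ValueError(f"unsupported OSC type tag: {tag}")
        args.append(value)
        offset += 4
    return address, args


def build_osc_message(address, *floats):
    """Build an OSC message with float arguments (handy for testing the parser)."""
    def pad(text):
        raw = text.encode("ascii") + b"\x00"
        return raw + b"\x00" * (-len(raw) % 4)
    payload = b"".join(struct.pack(">f", value) for value in floats)
    return pad(address) + pad("," + "f" * len(floats)) + payload


def listen(port=9000):
    """Print every OSC message arriving on a UDP port (hypothetical port number)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("0.0.0.0", port))
    while True:
        packet, _sender = sock.recvfrom(1024)
        print(*parse_osc_message(packet))


if __name__ == "__main__":
    print(parse_osc_message(build_osc_message("/sensor/1", 0.5)))
```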

Since the data that controls which state is playing does not change very smoothly, I adjusted the blend container fade lengths and shapes slightly for slower, smoother transitions between the states.

Before:

After:

The individual Proxy RTPCs are currently configured to be at 0 dB when set to 0 (on a scale from 0–100), and are faded to -192 dB (the minimum value available in Wwise) at 30. This configuration is due to the sensor data remaining relatively stable within the 0–30 range; beyond that, it becomes increasingly erratic. This may result in a sudden “on-off” transition for the ambiences rather than a smooth fade-in. However, further testing is required to determine the optimal maximum value. In real-world terms, a sensor value of 30 corresponds to a distance of approximately 0.4 meters from the device.
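Lengthening the blend fades in Wwise is one fix. A complementary option is to smooth the sensor values before they reach the RTPC, so that erratic readings are flattened out. This Python sketch shows an exponential moving average, which is a one-pole low-pass on the control data. The `alpha` value is a hypothetical starting point that would need tuning by ear.

```python
class ExponentialSmoother:
    """Exponential moving average for a jittery control value."""

    def __init__(self, alpha):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha  # smaller alpha = smoother but slower response
        self.value = None

    def update(self, sample):
        if self.value is None:
            self.value = float(sample)  # the first reading initialises the state
        else:
            # Move a fraction alpha of the way towards the new reading
            self.value += self.alpha * (sample - self.value)
        return self.value


if __name__ == "__main__":
    smoother = ExponentialSmoother(alpha=0.2)
    noisy = [12, 14, 41, 13, 15, 38, 14, 16]  # made-up readings with spikes
    print([round(smoother.update(x), 1) for x in noisy])
```

The trade-off is latency. A smaller `alpha` flattens the spikes more, but the ambience also reacts more slowly to a visitor stepping closer.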

The girls successfully resolved the issue with TouchDesigner, allowing us to test all four sensors, along with the audio and visuals, together for the first time! And luckily, it was a huge success!! The ambiences were triggered and controlled as intended, the sensors influenced the visuals, and both sound and visuals transitioned smoothly between scenes when required.

Here are a couple of pictures of me working super hard:))

During the test, the audio was running directly from the Wwise session; however, when we built the Unity project, the sound was played only in stereo. All output settings were correctly configured in both Wwise and Unity—the latter even indicated that the sound was being played in quad—but in practice, it was not.

As Alison House was closing, we had to conclude the test. Despite the bug we ran into at the end, I am extremely happy that all the other technical components worked!!

We have one full week to resolve the Unity issue, during which I also need to produce a final, improved sound mix and potentially add additional RTPC effects. As we are heading towards the final product, I’m very excited to see how it will all turn out!

Work on the audio components has progressed: Evan and I combined our ambiences and built the system for the interactive environment changes.

A master blend container holds the individual stage blend containers on a scale of 0–100, with each stage (emotion) occupying a range of 20. The controlling RTPC is a Game Sync Parameter called FIVE_STAGES.
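Here is a rough Python model of how a single FIVE_STAGES value could select and blend the stages. It assumes the stages sit in order (Denial 0–20, Anger 20–40, Bargaining 40–60, Depression 60–80, Acceptance 80–100). It also uses a linear crossfade between the centres of neighbouring stages. The real fade lengths and curve shapes are set on the blend tracks in Wwise.

```python
STAGES = ["Denial", "Anger", "Bargaining", "Depression", "Acceptance"]
STAGE_WIDTH = 20.0  # each stage occupies 20 units of the 0-100 RTPC


def stage_for(value):
    """Stage whose 20-unit range contains the RTPC value."""
    v = min(max(value, 0.0), 100.0)
    return STAGES[min(int(v // STAGE_WIDTH), len(STAGES) - 1)]


def blend_weights(value):
    """Hypothetical linear crossfade between the centres of adjacent stages."""
    v = min(max(value, 0.0), 100.0)
    centres = [STAGE_WIDTH * i + STAGE_WIDTH / 2 for i in range(len(STAGES))]  # 10, 30, ..., 90
    weights = {name: 0.0 for name in STAGES}
    if v <= centres[0]:
        weights[STAGES[0]] = 1.0
    elif v >= centres[-1]:
        weights[STAGES[-1]] = 1.0
    else:
        i = int((v - centres[0]) // STAGE_WIDTH)  # index of the lower neighbour
        frac = (v - centres[i]) / STAGE_WIDTH     # 0 at its centre, 1 at the next centre
        weights[STAGES[i]] = 1.0 - frac
        weights[STAGES[i + 1]] = frac
    return weights


if __name__ == "__main__":
    for value in (5, 20, 30, 55, 95):
        print(value, stage_for(value), blend_weights(value))
```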

Screen recording of the FIVE_STAGES RTPC in action:

https://media.ed.ac.uk/media/DMSP%20week%209%205stages%20RTPC/1_jrzrfuxs

Up until now, we had designed at most one or two ambiences for each stage. However, Leo suggested we could have an ambience for each speaker (each equipped with a proximity sensor), so the audience could mix the atmosphere themselves.

This required us to build a new logic system in Wwise and create three to four further ambiences for each scene. Because of the speaker setup we will be using in the Atrium (four-speaker surround), each stage will have a ‘Center’ non-directional atmo playing from all speakers, plus four directional atmos panned Front-left, Front-right, Back-left and Back-right.

Once again, I shared the work with Evan: I agreed to do Denial, Anger, and half of Depression.

In Wwise, we created a Game Sync Parameter for each speaker’s proximity sensor, to which we will assign the corresponding ambience individually in each stage.
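The routing idea can be summarised in a few lines of Python: there is one parameter per speaker sensor, and a different ambience is bound to it in each stage. All parameter and ambience names here are hypothetical placeholders for whatever the Wwise project actually uses.

```python
# Hypothetical names; the real ones are defined in the Wwise project
SPEAKER_PARAMETERS = {"FL": "PROXY_FL", "FR": "PROXY_FR", "BL": "PROXY_BL", "BR": "PROXY_BR"}


def ambience_for(stage, speaker):
    """Directional ambience driven by a speaker's sensor in a given stage."""
    return f"{stage}_{speaker}"  # e.g. "Anger_FL"


def rtpc_updates(readings):
    """Turn raw readings {speaker: value} into {parameter: value}, clamped to 0-100."""
    updates = {}
    for speaker, value in readings.items():
        if speaker not in SPEAKER_PARAMETERS:
            raise KeyError(f"no proximity parameter for speaker {speaker!r}")
        updates[SPEAKER_PARAMETERS[speaker]] = min(max(float(value), 0.0), 100.0)
    return updates


if __name__ == "__main__":
    print(rtpc_updates({"FL": 12, "BR": 140}))
    print(ambience_for("Denial", "FL"), ambience_for("Anger", "FL"))
```

In this design, the parameter a sensor feeds never changes. Only the ambience listening to it changes from stage to stage.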

We had a successful session connecting one sensor to a Wwise ambience, with the distance controlling a high-pass filter RTPC. Next Tuesday, we are planning to test more sensors at the same time:))
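For a filter sweep driven by distance, a logarithmic mapping usually feels more even than a linear one, because we hear filter cutoffs roughly in ratios rather than in hertz. Here is a minimal Python sketch. The 0–30 sensor range, the 20 Hz to 2 kHz cutoff range and the direction (further away = thinner sound) are all assumptions. In Wwise, the same shaping would be done with the RTPC curve on the high-pass property.

```python
def log_map(value, in_min, in_max, out_min, out_max):
    """Map value from [in_min, in_max] onto [out_min, out_max] on a logarithmic scale."""
    if out_min <= 0 or out_max <= 0:
        raise ValueError("logarithmic output range must be positive")
    clamped = min(max(value, in_min), in_max)
    t = (clamped - in_min) / (in_max - in_min)  # 0..1 position along the input
    return out_min * (out_max / out_min) ** t


if __name__ == "__main__":
    # Hypothetical: sensor 0 (close) -> 20 Hz, sensor 30 (far) -> 2000 Hz high-pass cutoff
    for reading in (0, 7.5, 15, 22.5, 30):
        print(reading, round(log_map(reading, 0, 30, 20.0, 2000.0)), "Hz")
```

With these numbers, every 15 sensor units multiply the cutoff by ten. Equal movements by a visitor therefore produce equal-sounding changes.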

Here’s a short video of us testing:


(More about this in our project blog)

On Wednesday (26th March), we will have a big test session in the Atrium to rehearse how the sound moves between the stages!!

As planned, during these weeks I focused on sound implementation in Wwise and on creating new sound effects.

Our sound team’s progress has been slower than planned because we had to resolve some communication issues about the soundscapes’ concept. However, Evan kindly offered to help with building the Wwise session, which sped up the process drastically.

I agreed to do the first and fourth stages (Denial and Depression), and I will do the sound effects for Acceptance.

Since I already had some sound effects crafted for this stage, I grouped them into folders. Next, I had to build a logic system in Wwise:

Link for the Wwise Denial Soundcaster:

https://media.ed.ac.uk/media/t/1_kod3nzx7

For Depression, I wanted to create a rather dark atmosphere as a base layer and use lots of human voices to evoke memories of shared moments with friends and family, and of social times.

Since the provided visual design sample looked like a person behind a wall that separates them from the present, I wanted to replicate this in the audio by filtering out high frequencies:

The base atmo layer therefore gets heavy high-cut filtering after the trigger starts (applied in Ableton before importing the audio into Wwise). A second layer of filtered storm ambience is occasionally triggered to add weight and a “clouded” emotion to the scene’s soundscape.
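The muffled, “behind a wall” effect comes from removing high frequencies. The filtering itself was done in Ableton, but as an illustration of the principle, here is a first-order low-pass (high-cut) filter in plain Python. Ableton’s filters are steeper than this one, and cascading several stages would give a heavier cut. The cutoff and sample rate are hypothetical.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate=48000.0):
    """First-order low-pass ("high cut") filter, discretised from an RC circuit."""
    if not 0 < cutoff_hz < sample_rate / 2:
        raise ValueError("cutoff must be between 0 and Nyquist")
    dt = 1.0 / sample_rate
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)  # smoothing factor between 0 and 1
    out = []
    y = 0.0
    for x in samples:
        y += alpha * (x - y)  # each output moves part of the way towards the input
        out.append(y)
    return out


if __name__ == "__main__":
    rate = 48000.0
    # One second each of a low (100 Hz) and a high (8 kHz) sine, filtered at 500 Hz
    for freq in (100.0, 8000.0):
        tone = [math.sin(2 * math.pi * freq * n / rate) for n in range(int(rate))]
        filtered = one_pole_lowpass(tone, cutoff_hz=500.0, sample_rate=rate)
        print(f"{freq:6.0f} Hz peak after filtering: {max(abs(v) for v in filtered[-4800:]):.2f}")
```

The 100 Hz tone passes almost untouched, while the 8 kHz tone comes out at a small fraction of its original level.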

Apart from the unmodified “Vox_x” files (which only have reverb, to place them in the distance), an additional random container of transposed voices is used to enhance the dark sense of passing time and bittersweet memories.

For me personally, the footsteps represent a sort of hallucination, as if someone else were still around us, watching from close by.

Link for the Wwise Depression Soundcaster:

https://media.ed.ac.uk/media/t/1_8el8h85o

We created a Unity project and successfully received OSC data from Lydia’s proximity sensor.

Next week, we aim to crossfade and move between the five stages, triggered by the sensor data. However, we are still having difficulties with how to approach the switch between stages, and how to scale and limit the data to get smooth transitions.
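One common way to stop a noisy value from flickering between two stages is hysteresis: the stage only switches once the value has moved clearly past the boundary. Here is a minimal Python sketch, assuming five equal stages on a 0–100 control value. The 3-unit margin is a hypothetical starting point.

```python
class HysteresisStageSelector:
    """Pick a stage index from a noisy 0-100 value, switching only past a margin."""

    def __init__(self, num_stages=5, margin=3.0):
        self.num_stages = num_stages
        self.width = 100.0 / num_stages
        self.margin = margin
        self.stage = 0

    def update(self, value):
        v = min(max(value, 0.0), 100.0)
        lower = self.stage * self.width        # boundary with the previous stage
        upper = (self.stage + 1) * self.width  # boundary with the next stage
        # Only move when the value is clearly outside the current stage's band
        if v >= upper + self.margin or v < lower - self.margin:
            self.stage = min(int(v // self.width), self.num_stages - 1)
        return self.stage


if __name__ == "__main__":
    selector = HysteresisStageSelector()
    readings = [18, 21, 19, 22, 24, 19, 18, 16]  # made-up values hovering around 20
    print([selector.update(r) for r in readings])
```

The margin creates a small dead zone around each boundary. Readings that hover around 20 stay in whichever stage they were already in.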

After the first assignment, which was mostly about pre-planning, conceptualization and research, in week 5 we moved to phase two, the technical realization.

In the next couple of weeks, my primary role will be to plan the sound engine logic, which means building the Wwise session and implementing Xiaole’s and Evan’s sounds. Furthermore, I will build the Unity project with the help of Leo and other team members: it will handle our phone sensors and trigger the sound from Wwise.

We have about four weeks until we present the installation, which is an extremely tight deadline considering how many assets we need to build, but if we follow the plan we agreed on, it should all be manageable! :))

Until 18th March:

Until 25th March:

Until 1st April:

To have some material to put into Wwise next week, I created some ambience tracks that will serve as a base ‘music’ layer, setting the emotional tone of the room.

As recommended by sound designer Andrew Lackey, I used PaulXStretch to create infinite ambient loops. The software allows users to control the harmonic components and ratios, so while recording, I constantly adjusted them to create an ever-changing yet still atmosphere.

Then, following Xiaole’s research on the recommended elements, I used breath sounds to represent emotions such as inner suffering, choking on air, and feeling empty while suffering from loss. The soundtrack of the dark Japanese anime Devilman Crybaby, by Kensuke Ushio, was a great inspiration for using traditional Eastern percussion and voices as sound effects to enrich the ambience with horror and darkness. (A great example is “The Genesis”: https://www.youtube.com/watch?v=s2tk1gzE8eo&list=PLRW80bBvVD3VzTdVNE_pjMC4S-PJefbxb)

I used recordings I made with Evan this week:

Occasional hits add weight and a sense of darkness to the setting, while a ticking clock represents the passing of time in this dark, empty state.

Of course, this is a short, structured piece. In the project, all of these sounds, and many others, will be separate events that we can trigger in Wwise, resulting in a more random sonic outcome.

I have also created sketch ambiences for stage 2:

Personally, I think Anger is a much more intense and energetic scene from the grieving person’s perspective. The aggressive, harsh rhythmic ambience I made helps evoke a sort of irritation (and, naturally, anger) in the listener. (Additional layers will be provided by others.)

Next week, I will start implementing these in a Wwise session and figuring out the events and trigger layout system:))

Lydia – Arduino and TouchDesigner integration, sensor programming

Isha – User interaction flow, storyboards, visual concept

Kyra – Visual design, TouchDesigner

Xiaole – Audio aesthetic research

Evan – Sound design

Lidia – Conceptualisation, note taking

The project is based on two core concepts: using the five stages of grief as a theme and creating an interactive audio-visual installation. Integrating these ideas into a cohesive and meaningful concept was not a straightforward journey, but we have now established a strong foundation:

Expressing the profound impact of social interactions on individuals experiencing grief and how these interactions, in turn, shape our own self-reflection.

But how did we get here?

In the first week, we focused on defining what the word ‘presence’ means to us: it is not only being physically present in a space but being consciously aware of being there, at that moment. Being present requires us to open our senses and pay attention to everything that surrounds us: every change of breeze in the air, every sound, every movement of light, and every change of sight. Moreover, it means actively concentrating on, and paying attention to, the other beings around us.

As the Buddhist monk Thích Nhất Hạnh once wrote, “The greatest gift we can make to others is our true presence” (Hạnh, 2023).

Coping with the loss of someone or something one loves is probably the biggest challenge in everyone’s life (Smith, 2018). It is a highly individual experience; however, what may connect all such experiences is the need for others who can offer support and comfort. A survey-based Swedish study reported that participants needed emotional support during such difficult times. Although this support is mostly “provided by family and friends”, kind gestures and a positive attitude from a stranger can equally make a significant difference (Benkel et al., 2024).

The project seeks to raise awareness of this topic, which led us to create an audio-visual, multisensory installation portraying “The five stages of grief”. The installation will be ‘an exploration of being present in grief’, primarily from the perspective of an imaginary character’s inner mental world. Secondarily, it poses a rhetorical question back to the visitors: do they see themselves in it, and are there perhaps any feelings they have been hiding from themselves?

First introduced as “The Five Stages of Death” by Swiss-American psychiatrist Elisabeth Kübler-Ross in 1969 (Kübler-Ross, 2014), the model states that those who experience sudden grief will most likely go through five stages and emotions: denial, anger, bargaining, depression, and acceptance. However, these are very strict categorizations of the complex range of human emotions. The lines between these feelings are blurred (Grief is Not Linear: Navigating the Loss of a Loved One, 2023). Therefore, in our project we aim to present the audience with a general impression of grief as we imagine it from a creative point of view.

The weekly progress that led us to this concept can be found in our “Progress of Meetings” blog post:

https://blogs.ed.ac.uk/dmsp-presence25/2025/02/10/progress-of-meetings/

The installation will be a truly sense-stimulating phenomenon, with powerful sound ambiences, low humming sounds and whispers, and an abstract visual design of distorted faces, muted screams, and chaotic shapes and forms, created by mixing real-time processed video footage with digital designs.

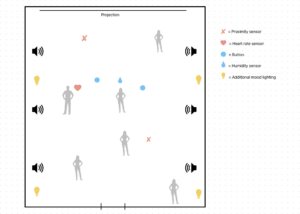

The installation will be shown in a closed space such as Alison House’s Atrium, to create the intimate, dark atmosphere of one’s innermost mind. An array of 6–8 speakers placed around the walls of the room will provide a surround sound environment, while a projector at the front will display the designed visuals.

There will be multiple (at least five) sensors, such as a heartbeat sensor, buttons, knobs, light sensors, and proximity sensors, placed around the centre of the room for visitors to interact with. The sensors will be built with Arduino, which will feed the data into TouchDesigner to control the main audio ambience, additional SFX and the visual design.

Rather than going through the five emotional stages in order, it will be a non-linear journey influenced by the visitors’ activity. The starting scenario is as follows: with no visitors, or only a few, our imaginary grieving person (abstractly represented in the projection) will be in the worst mental state (the early stages of the five). It will be in chaos, darkness and pure depression. As the number of visitors and interactions with the sensors grows, the system will gradually become calmer and eventually evolve towards the last stage: acceptance.

However, if the interactions come to a halt, the negative emotions will come back once again.
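This behaviour, where interaction gradually calms the system and a lack of it lets the negative emotions return, can be sketched as a leaky accumulator. This Python model only illustrates the concept. The decay and gain constants are hypothetical, and its 0–100 output could, for example, drive a stage parameter.

```python
class GriefState:
    """Hypothetical leaky accumulator: 0 = worst state (chaos), 100 = acceptance."""

    def __init__(self, decay_per_second=0.02, gain_per_interaction=5.0):
        self.level = 0.0
        self.decay = decay_per_second       # fraction lost per idle second
        self.gain = gain_per_interaction    # how much one interaction helps

    def step(self, interactions, dt=1.0):
        """Advance the model by dt seconds with a given number of sensor interactions."""
        # Without input the level leaks back towards 0 (the negative emotions return)
        self.level *= (1.0 - self.decay) ** dt
        # Interactions push the level up, capped at 100
        self.level = min(100.0, self.level + self.gain * interactions)
        return self.level


if __name__ == "__main__":
    state = GriefState()
    busy = [round(state.step(3), 1) for _ in range(10)]  # a few touches per second
    idle = [round(state.step(0), 1) for _ in range(5)]   # the room goes quiet
    print(busy, idle)
```

With these numbers, three interactions per second fill the level within about ten seconds. A completely idle room then decays back below 1 in roughly four minutes.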

Humidity sensor: as the crowd grows and shrinks, the resulting change in air humidity will provide a smooth, slow change over time. The sensor will most likely affect the sound ambience and the amount of distortive processing on the visuals.

Heartbeat sensor: when visitors scan their heartbeat, as a symbol of supporting others with care and love, it will be represented by a corresponding heartbeat sound and visuals.

Buttons/knobs, proximity sensors, light sensors: will trigger SFX and VFX, or change a set of real-time processing parameters.

The knobs and buttons will not have a fixed direction, such as turning left for sadness and right for an improvement in mood; instead, their effect will be completely random. The goal is to get the audience involved, reflecting how randomly emotions can come and go. Of course, if the input changes significantly, the grief gradually becomes more controllable.

A more detailed description of the technical concept and user interface layout can be found here:

https://blogs.ed.ac.uk/dmsp-presence25/2025/02/10/conceptual-foundations-and-user-interaction-flow/

In order to create relevant sound design aesthetics for the project, Xiaole did thorough research on audio design techniques related to the theme of grief, as well as on related art projects:

https://blogs.ed.ac.uk/dmsp-presence25/2025/02/10/dmps-presence-25-research-for-audio/

Then, Evan created the first short sound design samples, one for each of the five stages:

https://blogs.ed.ac.uk/dmsp-presence25/2025/02/09/sound-design/

In the next couple of weeks, we will aim to create more ambiences, effects, and effect parameter changes.

The audio system will be built in Wwise, which gives us the freedom to trigger and process multiple events, sound effects and ambiences in real time, all at once. This will be integrated into Unity, which will use the incoming sensor data to trigger the events. Now that we have a strong conceptual foundation, experimenting with and building these systems will be our main goal for the next weeks.

The visuals will be designed in TouchDesigner, where the various data sources will trigger different layers and effects.

First, Isha created a moodboard to summarize our ideas for textures, colors, saturation and figures:

Then, Kyra developed the first sketches of one of the emotions: ‘anger’.

After the workshop in week 4, Lydia took on the role of technical design. The sensors will be built using Arduino, which will send the data both to TouchDesigner for the visuals and to Unity for the audio. Next week, the workload will be shared between her and Isha.

The technical research and progress can be found here:

https://blogs.ed.ac.uk/dmsp-presence25/2025/02/10/arduino-integration-with-touch-designer/

Benkel, I. et al. (2024) ‘Understanding the needs for support and coping strategies in grief following the loss of a significant other: insights from a cross-sectional survey in Sweden’, Palliative Care and Social Practice, 18. Available at: https://doi.org/10.1177/26323524241275699.

Cohen, S. (2004) APA PsycNet, psycnet.apa.org. Available at: https://psycnet.apa.org/buy/2004-20395-002.

Grief is Not Linear: Navigating the Loss of a Loved One (2023) Veritas Psychotherapy. Available at: https://veritaspsychotherapy.ca/blog/grief-is-not-linear/.

Hạnh, T.N. (2023) Dharma Talk: True Presence – The Mindfulness Bell, Parallax Press. Available at: https://www.parallax.org/mindfulnessbell/article/dharma-talk-true-presence-2/ (Accessed: 9 February 2025).

Heaney, C.A. and Israel, B.A. (2008) Health Behaviour and Health Education. Jossey-Bass, pp. 190–193. Available at: https://www.medsab.ac.ir/uploads/HB_&_HE-_Glanz_Book_16089.pdf#page=227.

Kübler-Ross, E. (2014) On Death and Dying, www.simonandschuster.com. Scribner. Available at: https://www.simonandschuster.com/books/On-Death-and-Dying/Elisabeth-Kubler-Ross/9781476775548 (Accessed: 9 February 2025).

Smith, M. (2018) Coping with Grief and Loss: Stages of Grief and How to Heal, HelpGuide.org. Available at: https://www.helpguide.org/mental-health/grief/coping-with-grief-and-loss.

Young, S.N. (2008) ‘The neurobiology of human social behaviour: an important but neglected topic’, Journal of Psychiatry & Neuroscience : JPN, 33(5), p. 391. Available at: https://pmc.ncbi.nlm.nih.gov/articles/PMC2527715/.