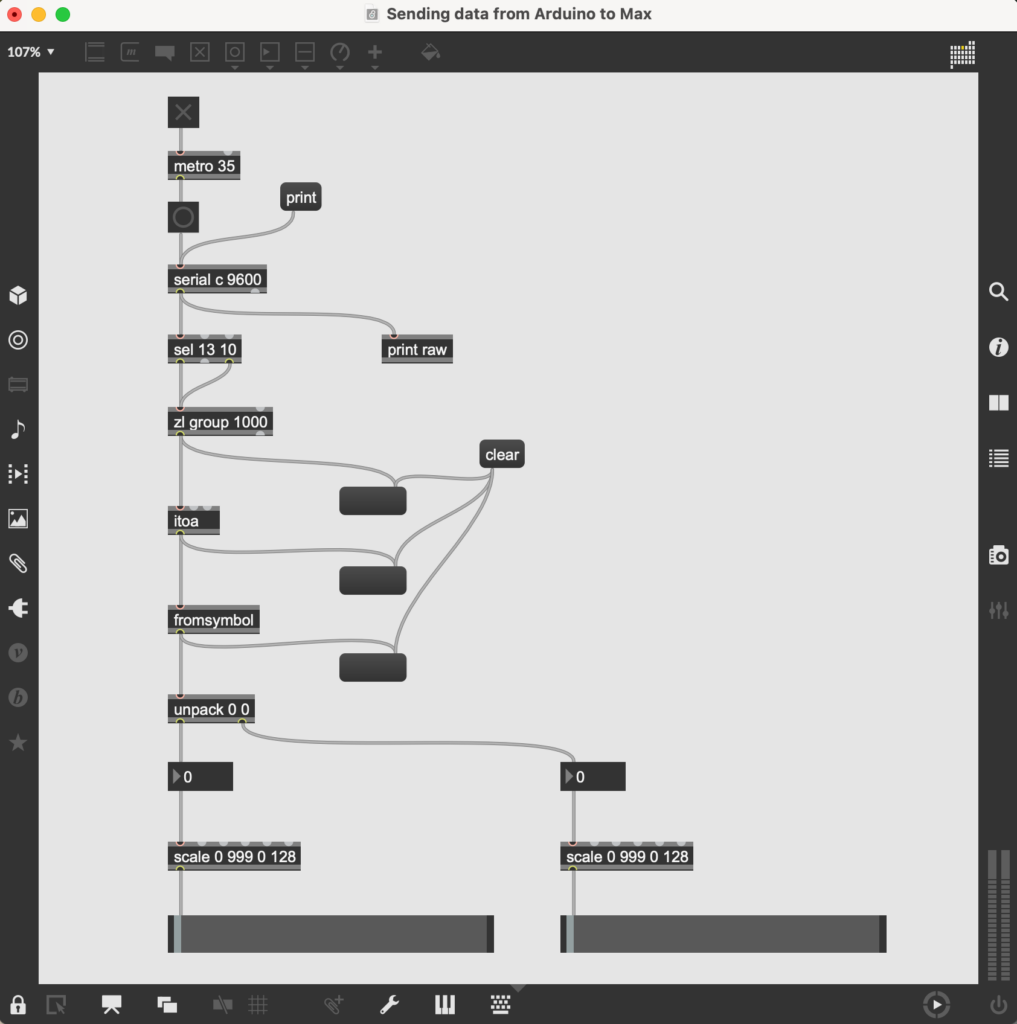

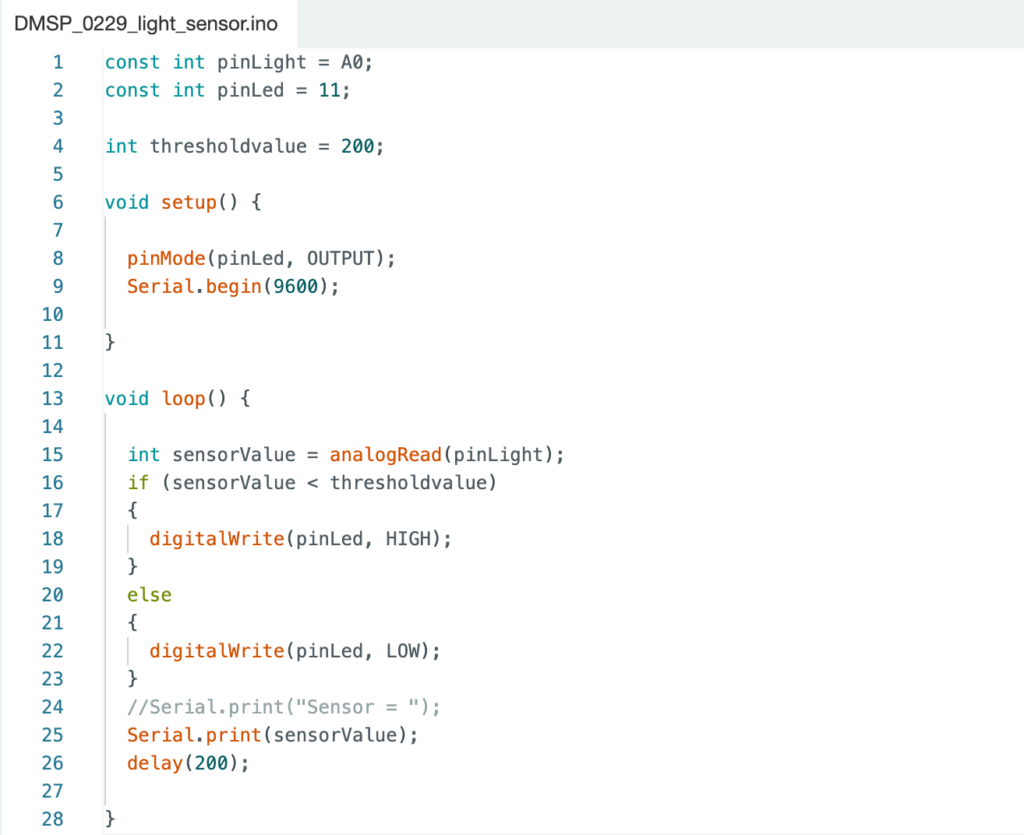

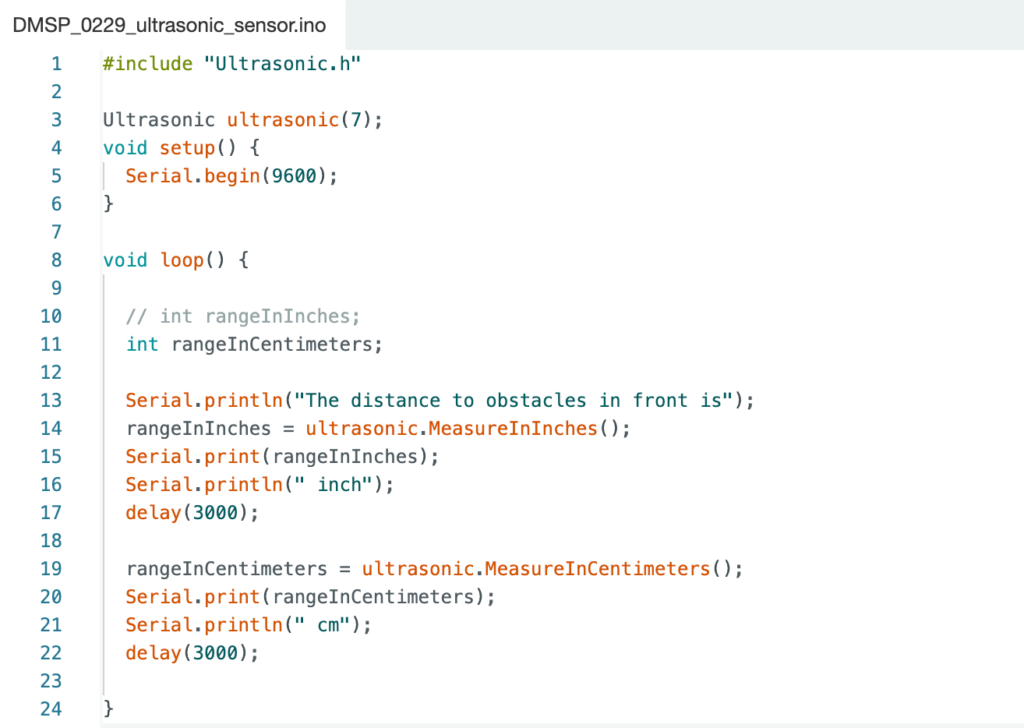

On Tuesday, we asked Joe to help us solve the problems we ran into in the code when connecting Max to the sensor. We need to adjust some of the code to make sure Max behaves correctly.

On Wednesday and Thursday, we had meetings with Leo to solve some problems with Max.

The main technical problems we worked on were the following:

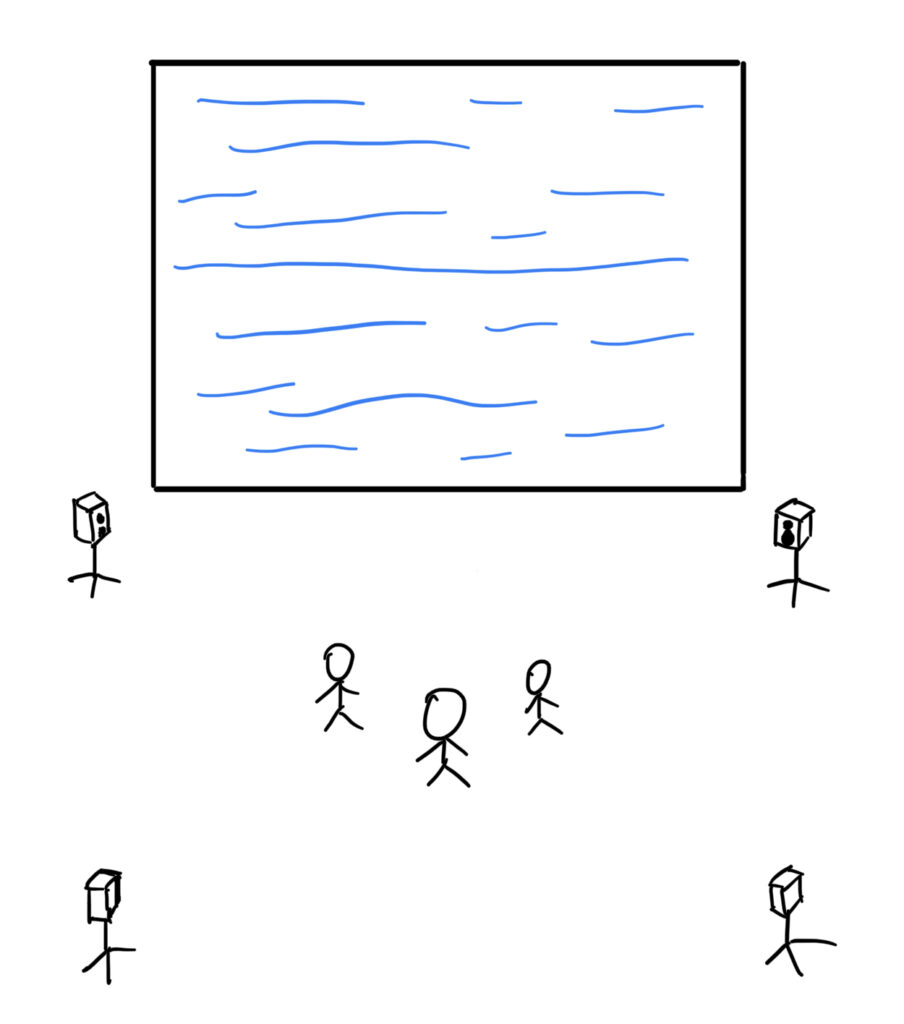

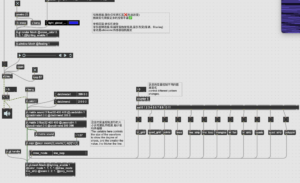

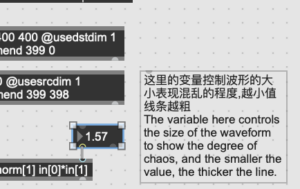

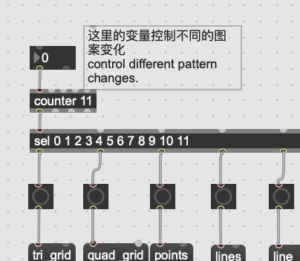

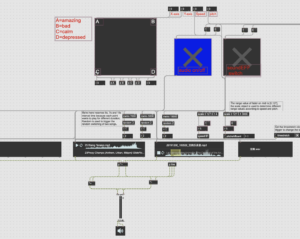

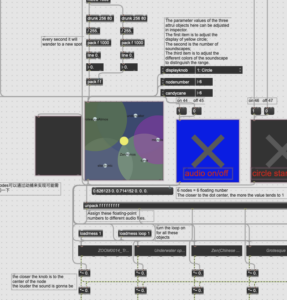

On Wednesday, my patch covered only the sound effects. Our sound effects have four levels. I had sorted them into these levels, but I didn't know how to transition from the first level to the second. I thought of using the preset object, but I ran into some problems with it. Leo suggested that I use a buffer to store all the sound effects so that we could change levels at any time. In the end, though, we used the pattrstorage object: it stores the level meter data for each level and uses it to control when each level appears and how it changes.

Another technical problem concerns the on-site presentation in Max. Because we use multiple speakers, we need to build something in Max that can send different sound effects to different speakers. We haven't yet decided whether we want a surround sound effect or a multi-channel effect. Leo suggested that I use the ICST Ambisonics tools to output surround sound.
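To make the idea of moving between stored levels more concrete, here is a minimal Python sketch of preset storage with a gradual transition. It is not Max code and not how pattrstorage is implemented internally; it only assumes that each level is a snapshot of the same named parameters and that a transition is a linear blend between two snapshots. The parameter names (`level_meter`, `reverb_mix`, `density`) and their values are hypothetical.

```python
# A minimal sketch of preset storage and interpolation, loosely modelled on
# the idea of storing and recalling parameter snapshots (as pattrstorage does
# in Max). All parameter names and values here are hypothetical.

from typing import Dict, List

Preset = Dict[str, float]

# Four stored "levels", each a snapshot of the same named parameters.
LEVELS: List[Preset] = [
    {"level_meter": 0.2, "reverb_mix": 0.1, "density": 1.0},
    {"level_meter": 0.4, "reverb_mix": 0.3, "density": 2.0},
    {"level_meter": 0.7, "reverb_mix": 0.5, "density": 4.0},
    {"level_meter": 1.0, "reverb_mix": 0.8, "density": 8.0},
]


def interpolate(a: Preset, b: Preset, t: float) -> Preset:
    """Blend two presets linearly: t=0 gives a, t=1 gives b."""
    t = min(max(t, 0.0), 1.0)  # clamp so out-of-range input cannot overshoot
    return {name: (1.0 - t) * a[name] + t * b[name] for name in a}


def transition(src: int, dst: int, steps: int) -> List[Preset]:
    """Return the intermediate states for a gradual move between two levels."""
    a, b = LEVELS[src], LEVELS[dst]
    return [interpolate(a, b, i / steps) for i in range(steps + 1)]


if __name__ == "__main__":
    # Move from the first level (index 0) to the second (index 1) in 4 steps.
    for state in transition(0, 1, 4):
        print({k: round(v, 3) for k, v in state.items()})
```

Stepping `t` from 0 to 1 over time (for example, driven by a timer or by sensor input) gives a smooth change from one level to the next instead of an abrupt jump.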

On Thursday, we had class, so we asked Jules a lot of questions, and he showed us how to use the panner.

Next, I need to work out how to integrate the panner into our patch.
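As a starting point for thinking about the panner, the Python sketch below shows one common way to place a single sound among several speakers: pairwise equal-power amplitude panning. It is an assumption about what a panner does in general, not a description of the specific panner Jules showed us, and it is a simpler technique than the ICST Ambisonics approach Leo suggested. The speaker layout (evenly spaced on a ring, speaker `k` at `k * 360 / n` degrees) is hypothetical.

```python
import math
from typing import List


def pan_gains(azimuth_deg: float, n_speakers: int) -> List[float]:
    """Equal-power pairwise panning over a ring of evenly spaced speakers.

    Assumes speaker k sits at k * 360 / n_speakers degrees.
    Returns one gain per speaker; the squared gains always sum to 1.
    """
    if n_speakers < 2:
        raise ValueError("need at least two speakers")
    spacing = 360.0 / n_speakers
    pos = (azimuth_deg % 360.0) / spacing  # position measured in speaker units
    left = int(pos) % n_speakers           # speaker at or just before the source
    right = (left + 1) % n_speakers        # next speaker around the ring
    frac = pos - int(pos)                  # 0 at 'left', approaching 1 at 'right'
    gains = [0.0] * n_speakers
    gains[left] = math.cos(frac * math.pi / 2)
    gains[right] = math.sin(frac * math.pi / 2)
    return gains


if __name__ == "__main__":
    # A source halfway between speaker 0 (0 degrees) and speaker 1 (90 degrees).
    print(pan_gains(45.0, 4))
```

Using cosine and sine gains keeps the total power constant, so the sound should not get noticeably louder or quieter as it moves between two speakers; only the two speakers nearest the source are active at any moment.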

Here are the three different parts of our project!