Preliminary conception

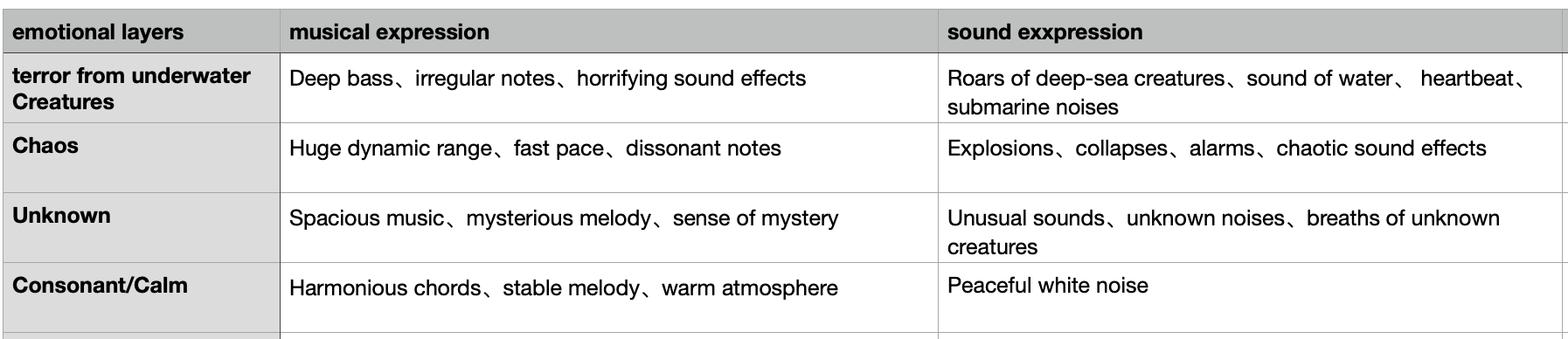

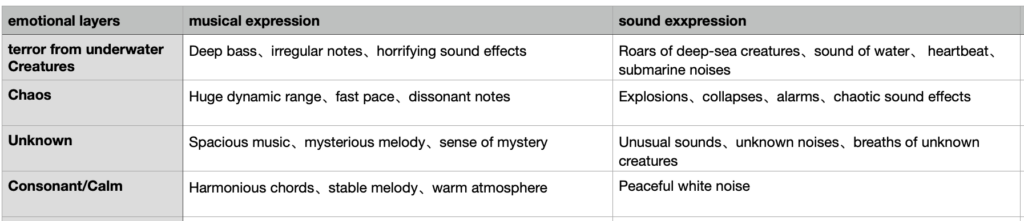

Sound dominates this project. We rely on the expressive force of sound to create atmosphere, and I am more confident using Max for sound than for visuals. The following table shows my ideas for the sound effects and music.

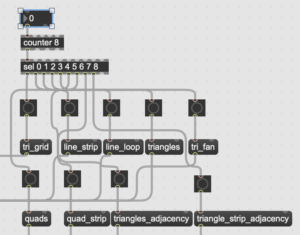

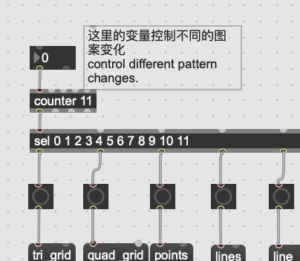

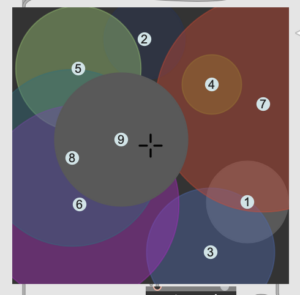

We decided to express four emotional levels. The first thing that came to my mind was a square control object in the patch, which could be either pictslider or nodes. So at first I put both of them in my test patch and experimented to decide which one was more suitable for our project. This was also one of the questions we asked Jules at the first presentation.

pictslider & nodes

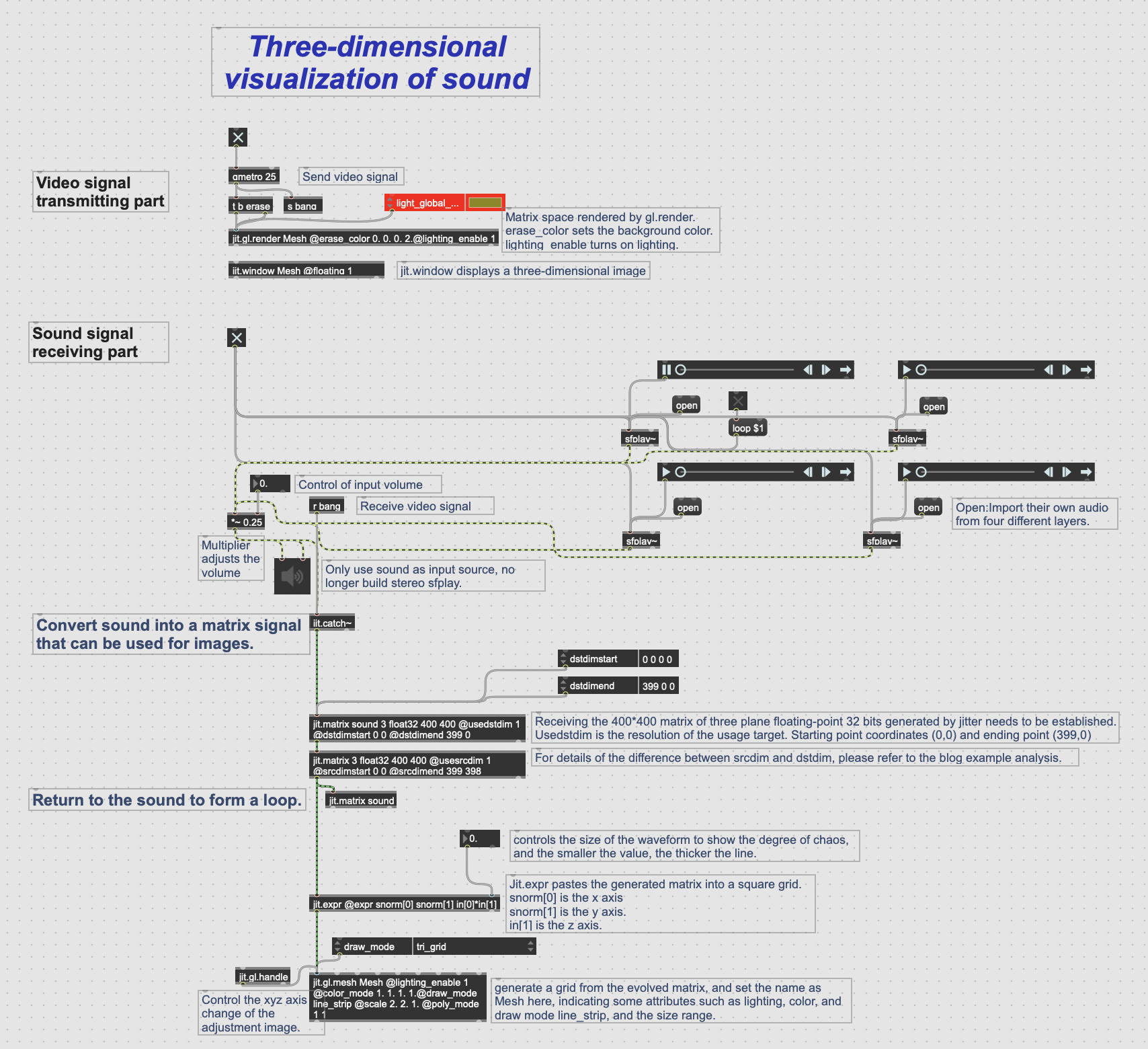

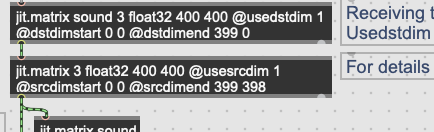

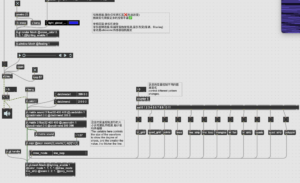

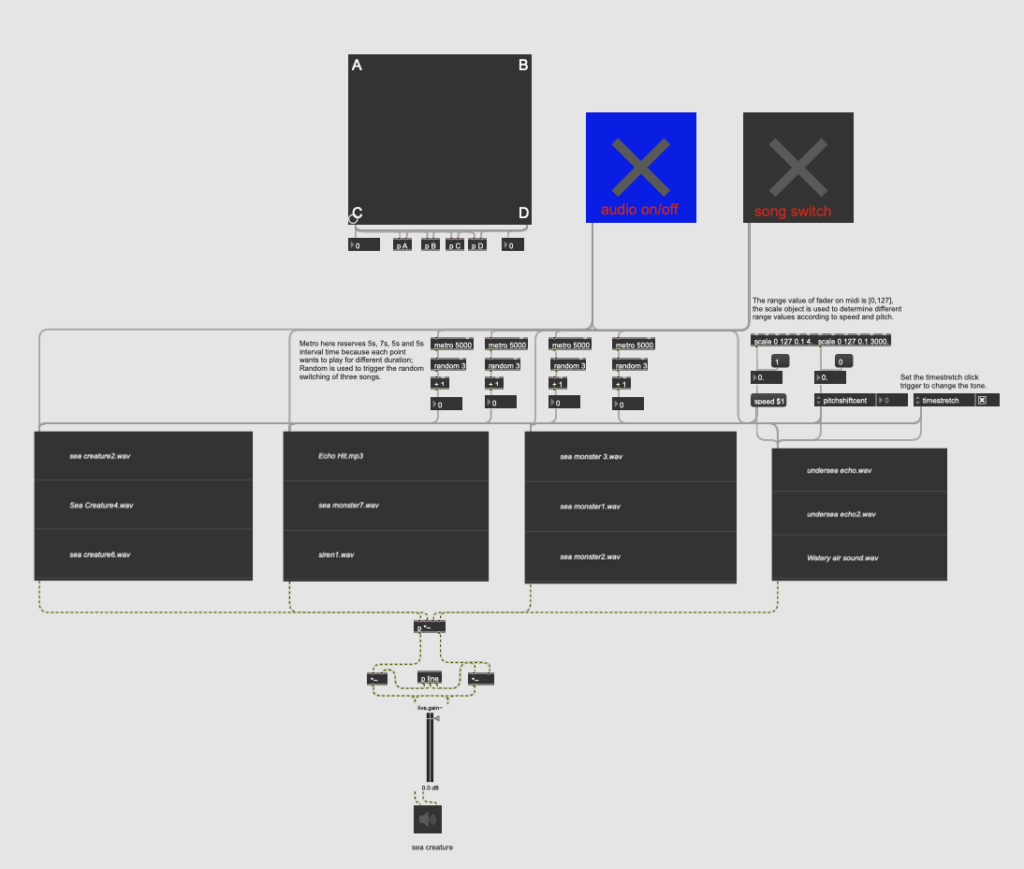

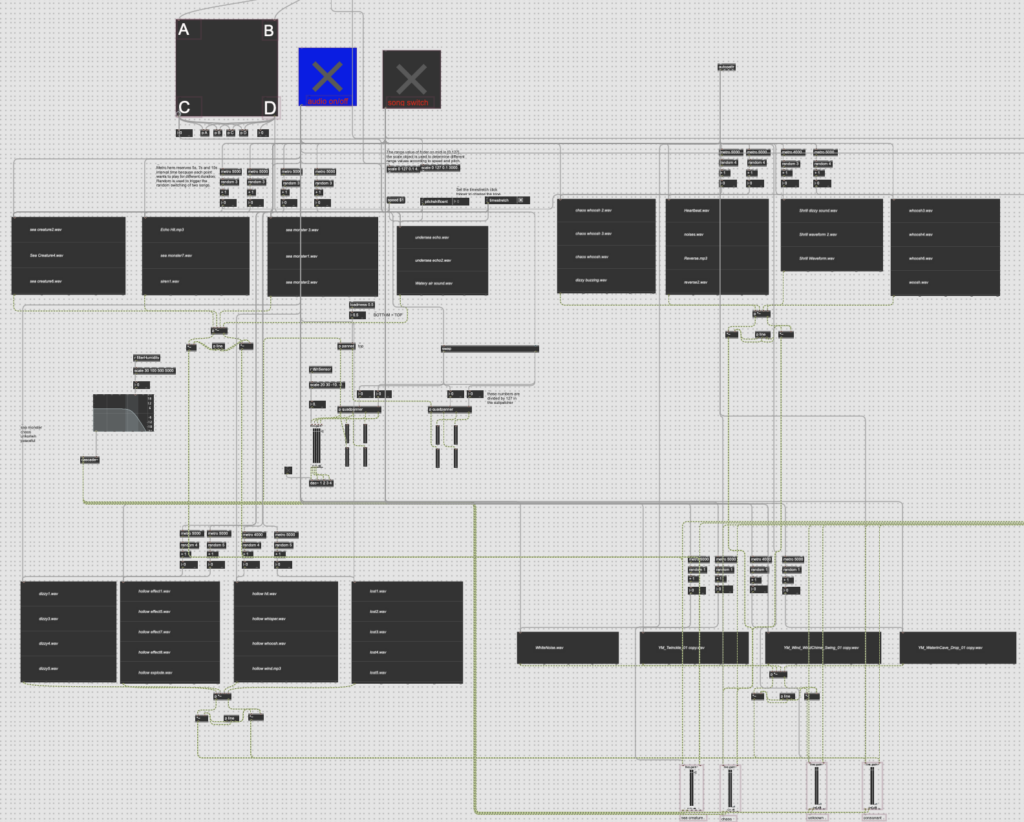

Deconstruction of sound part patch

As the project progressed, the concept gradually became clearer and my patch became more refined.

Construction of basic layer (patch link)

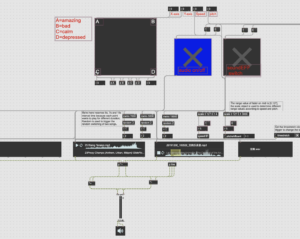

As shown in the figure, I set the four corners of the pictslider as points A, B, C and D, and the circles in them can be controlled. The four playlists below correspond to points A, B, C and D respectively. The first level shows the sea monster scene, so its sound effects are all sea monsters and underwater creatures. The scenes of the other three layers are distributed evenly across these four corners in the same way.
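To make the corner idea concrete, here is a minimal Python sketch of how a position inside a square can be turned into four mixing weights, one per corner. It is not taken from the patch: the corner layout (A top-left, B top-right, C bottom-left, D bottom-right), the 0 to 1 coordinate range and the function name `corner_weights` are all assumptions made for illustration.

```python
def corner_weights(x, y):
    """Bilinear weights for a point (x, y) inside the unit square.

    Assumed layout (not stated in the original patch): A top-left,
    B top-right, C bottom-left, D bottom-right, with y = 1 at the top.
    """
    # Clamp the position so it always stays inside the square.
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)
    return {
        "A": (1.0 - x) * y,
        "B": x * y,
        "C": (1.0 - x) * (1.0 - y),
        "D": x * (1.0 - y),
    }


# In the centre of the square, all four corners contribute equally.
centre = corner_weights(0.5, 0.5)
```

The four weights always add up to 1, so moving the circle changes the balance between the corners without changing the overall level.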

Because the variables to be regulated by the sensor had not been determined at that time, I set up a number of manually controllable elements, such as speed, pitchshift and timestretch, as dynamic quantities that change the audio and can later be controlled by the sensor. Detailed comments are marked in the picture.

In terms of artistic presentation, I made a lot of cuts to the sound effects. We produced many sound effects for each layer, but in an actual performance many people will not have the patience to listen to so many similar sounds, and they would become aesthetically tiring. In the end, each emotional layer of the project uses 12-18 evenly distributed sound effects, while the last layer uses only four sound effects as a finishing touch to express the sense of calm.

Here is the sound effect part of the patch:

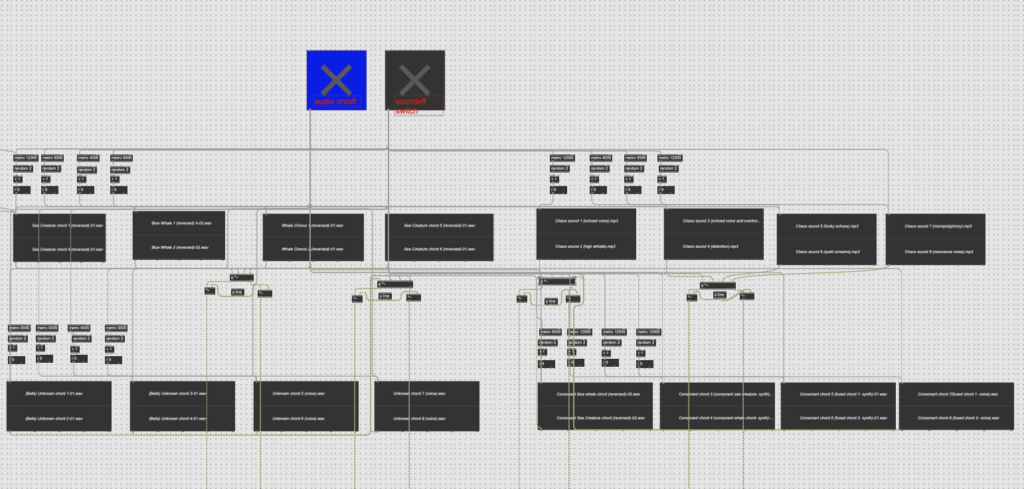

For the music part, I did the same thing. The chords for each emotional level are more concise: each level has exactly eight chords, so two are assigned to each of the four corners.

Here is the music chord part of the patch:

This is the patch link of our whole project.

Use of new objects

I ran into some new problems while improving the patch, which also taught me new things about Max. The problems were as follows:

- For the audience’s listening comfort, how can the four layers of emotion cross-fade and switch naturally?

- For the sake of immersion, how can the sound be output to multiple speakers so that the sound image changes?

- To allow more varied control of the sound, how can the sound itself be made to change more?

The following three parts of the Max patch answer these three questions.

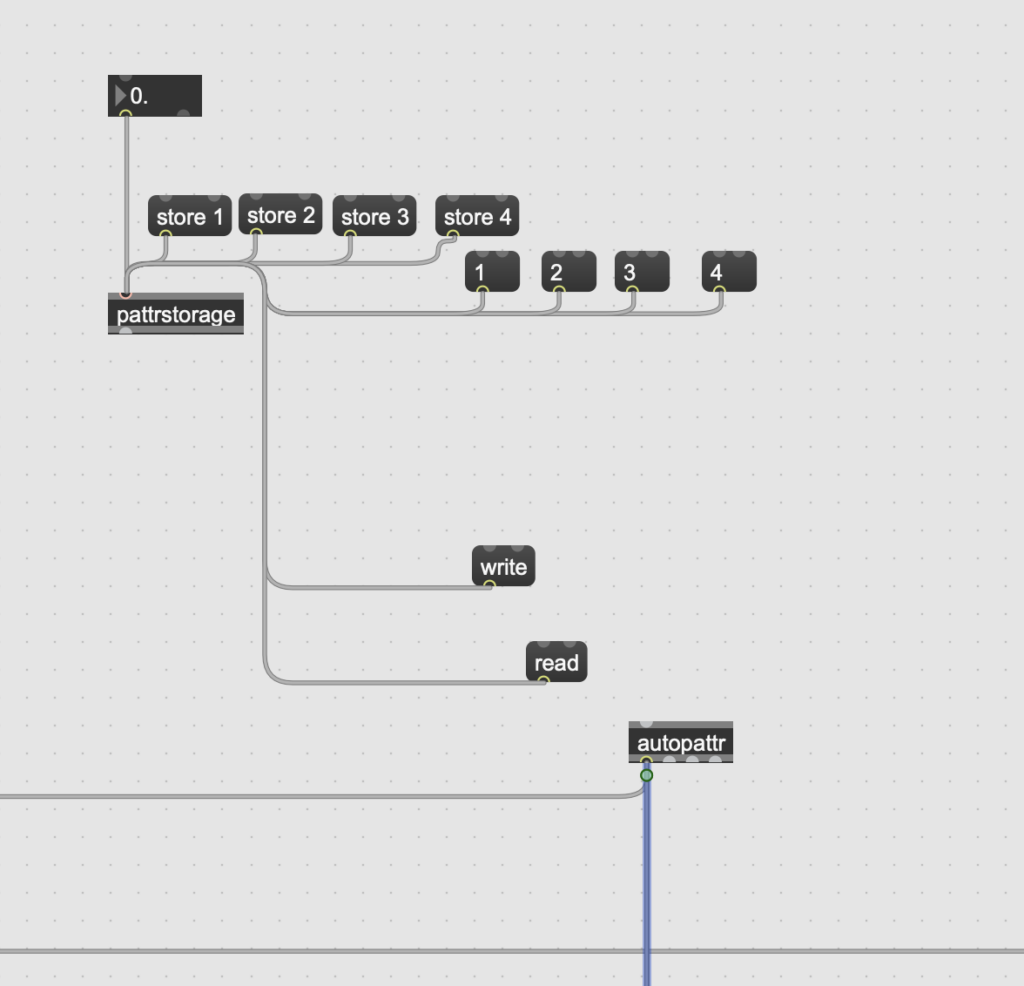

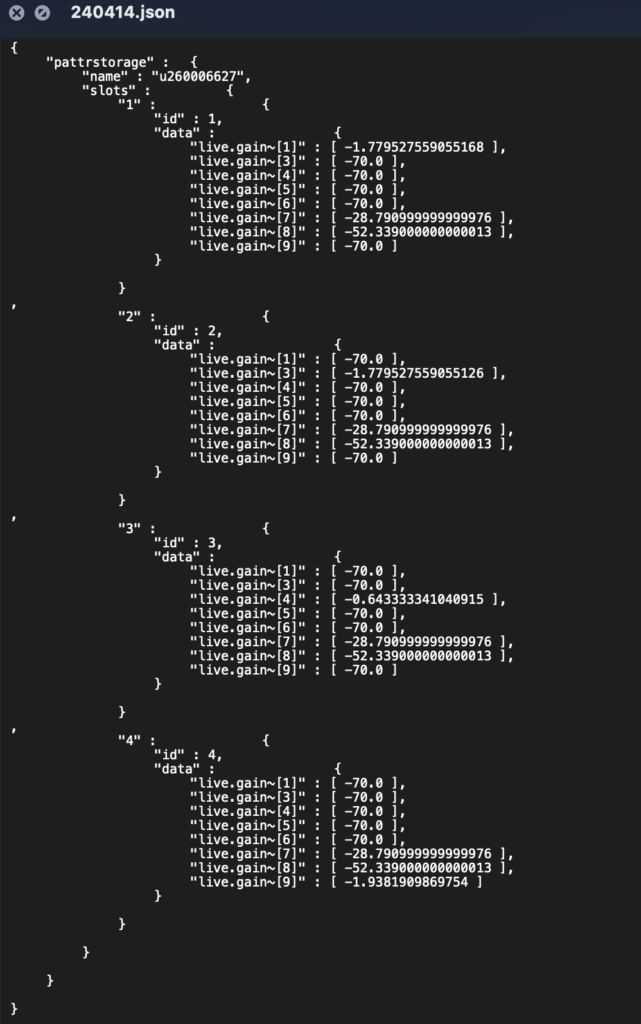

autopattr & pattrstorage

For the first question, Leo gave me this solution during one of our meetings. I use the pattrstorage object to store presets and step the level up and down, so that the transition between layers is smooth. I connect a MIDI controller to it to control the switching. Leo referred me to the instructional video at this link: https://youtu.be/5-4YZwbKB-k?si=1NeUJhZddplc0xT_

The video shows the specific functions:

As shown in the figure, the file read in this operation is a code file with the .json suffix:

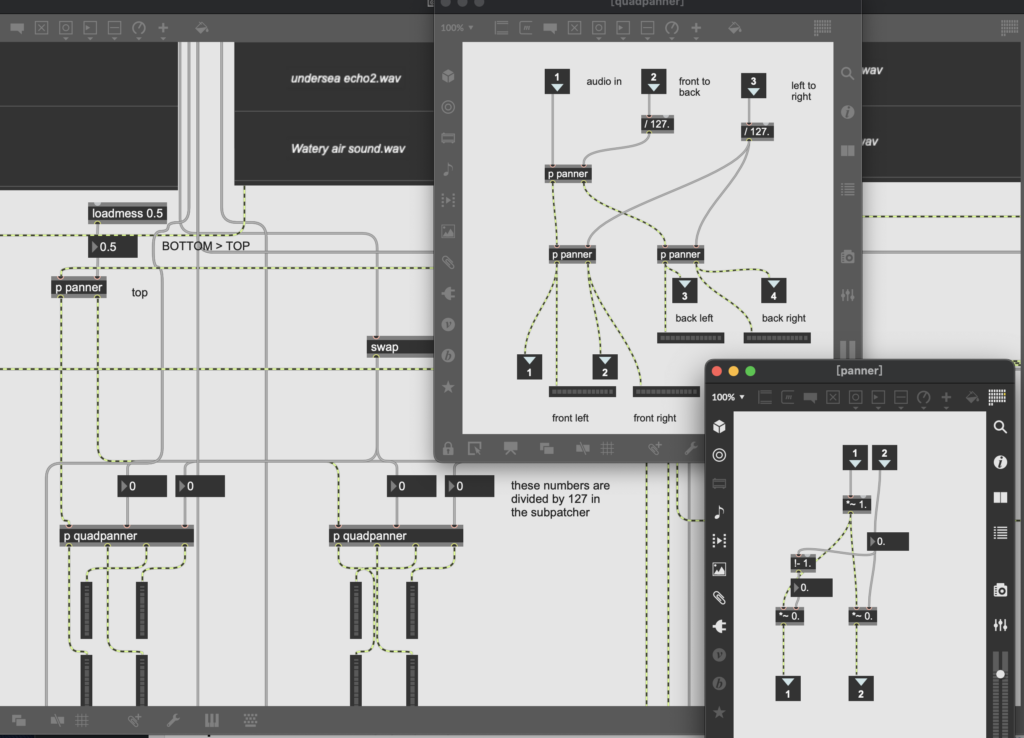
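The idea behind a smooth transition between stored levels can be sketched outside Max as a blend between two sets of parameter values. The Python below is only an illustration of that idea, not the way pattrstorage is implemented; the function name `interpolate_presets` and the parameter names and values are hypothetical.

```python
def interpolate_presets(preset_a, preset_b, fade):
    """Linearly blend two presets.

    fade = 0.0 returns the values of preset_a, fade = 1.0 those of preset_b.
    Only parameters present in both presets are blended.
    """
    fade = min(max(fade, 0.0), 1.0)
    return {
        name: (1.0 - fade) * preset_a[name] + fade * preset_b[name]
        for name in preset_a
        if name in preset_b
    }


# Hypothetical parameter names and values, for illustration only.
level_1 = {"sfx_gain": 1.0, "music_gain": 0.2}
level_2 = {"sfx_gain": 0.4, "music_gain": 0.8}
halfway = interpolate_presets(level_1, level_2, 0.5)
```

Sweeping `fade` gradually from 0 to 1, for example from a MIDI controller, moves every parameter from one level to the next at the same time instead of jumping.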

panner

For the second question, we asked Jules for a lot of help. I wanted to create the fear of being surrounded by sea monsters from all directions, so I needed sound image processing. At the same time, I also needed points A, B, C and D to correspond to different speakers in the speaker setup.

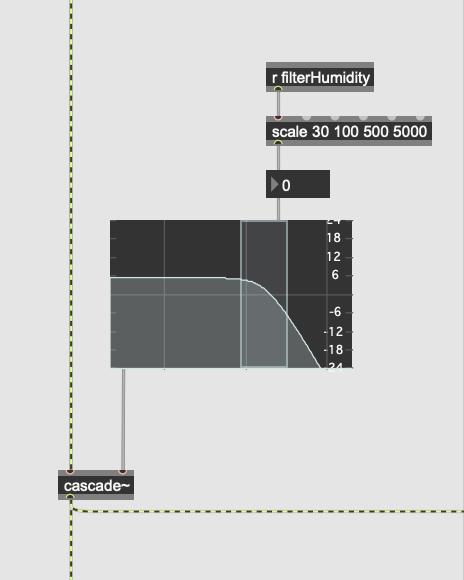
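One common way to place a sound among four speakers is equal-power panning, sketched below in Python. This is an assumption about the general technique, not a description of the panner in our patch: the speaker names, the 0 to 1 coordinate range and the function name `quad_gains` are chosen only for illustration.

```python
import math


def quad_gains(x, y):
    """Equal-power gains for four speakers from a position in the unit square.

    Assumed layout: x = 0 is left, x = 1 is right, y = 0 is rear, y = 1 is front.
    """
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)
    # Cosine/sine pairs keep left^2 + right^2 = 1 and rear^2 + front^2 = 1.
    left, right = math.cos(x * math.pi / 2), math.sin(x * math.pi / 2)
    rear, front = math.cos(y * math.pi / 2), math.sin(y * math.pi / 2)
    return {
        "front_left": left * front,
        "front_right": right * front,
        "rear_left": left * rear,
        "rear_right": right * rear,
    }
```

Because the squared gains always sum to 1, the total acoustic power stays roughly constant while the sound moves around the listener.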

filter setup

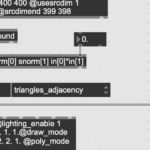

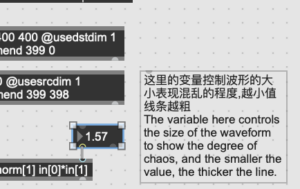

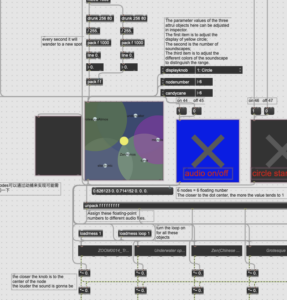

In the final presentation, Leo suggested that we needed more changes to make our project sound less monotonous. So we use cascade~ to filter an input signal through a series of biquad filters, and filtergraph~ to generate the filter coefficients.
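For readers who want to see what a series of biquad filters does, here is a minimal Python sketch. It assumes a low-pass response with the widely used Audio EQ Cookbook formulas, a 44.1 kHz sample rate and two identical stages; none of these choices come from the patch, and the function names are hypothetical.

```python
import math


def lowpass_biquad(cutoff_hz, q=0.7071, sample_rate=44100.0):
    """Return normalised low-pass coefficients as ([b0, b1, b2], [a1, a2])."""
    w0 = 2.0 * math.pi * cutoff_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha
    b = [(1.0 - cos_w0) / 2.0 / a0, (1.0 - cos_w0) / a0, (1.0 - cos_w0) / 2.0 / a0]
    a = [-2.0 * cos_w0 / a0, (1.0 - alpha) / a0]
    return b, a


def run_cascade(samples, sections):
    """Pass the samples through each biquad section in turn."""
    out = list(samples)
    for b, a in sections:
        x1 = x2 = y1 = y2 = 0.0  # previous inputs and outputs of this stage
        stage = []
        for x in out:
            y = b[0] * x + b[1] * x1 + b[2] * x2 - a[0] * y1 - a[1] * y2
            x2, x1 = x1, x
            y2, y1 = y1, y
            stage.append(y)
        out = stage
    return out


# Two identical 1 kHz low-pass stages in series (an assumed configuration).
sections = [lowpass_biquad(1000.0), lowpass_biquad(1000.0)]
filtered = run_cascade([1.0] * 5000, sections)
```

Each extra stage in the series makes the roll-off above the cutoff steeper, which is why several biquads are chained rather than using one.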

As shown in the figure, the cutoff frequency is controlled over a range of 500-5000 Hz, and (30, 100) is the range given by the humidity sensor.
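The mapping from sensor range to cutoff range can be written as a simple scaling function. The Python sketch below assumes the mapping is linear and that out-of-range readings are clamped; the original does not state either, and the function names are hypothetical.

```python
def scale(value, in_low, in_high, out_low, out_high):
    """Linearly map value from [in_low, in_high] to [out_low, out_high]."""
    # Clamping is a choice made for this sketch so the cutoff never
    # leaves the intended range.
    value = min(max(value, in_low), in_high)
    t = (value - in_low) / (in_high - in_low)
    return out_low + t * (out_high - out_low)


def humidity_to_cutoff(humidity):
    """Map a humidity reading in (30, 100) to a cutoff in 500-5000 Hz."""
    return scale(humidity, 30.0, 100.0, 500.0, 5000.0)
```

For example, a reading of 65, halfway through the sensor range, gives a cutoff of 2750 Hz, halfway through the frequency range.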

Improvement suggestions

The sound part received valuable feedback from many teachers:

- Set up more speaker controls for a better sound experience.

- Add more frequency layers to the music or sound effects, such as high frequencies, to make them sound richer.

- Adding more channels coming from above would emphasise the feeling that the characters are immersed on the seabed.

Personal reflection

Building the sound part of the Max patch took the longest of all the tasks. New problems kept being discovered, new tasks kept appearing because of the requirements of the project presentation, and we were constantly asking the teachers for help to solve them.

In the third rehearsal, I was still trying to work out why the signal sent by the panner could not be transmitted to the four speakers. Although the sound signal was successfully transmitted in the end, the final presentation did not use more speakers to convey the immersion.

We each had our own responsibilities throughout the development of the project, but I took part in every part of our group's work except the music. Because of the company of my team members and the support of the teachers, I was not very anxious from beginning to end. This early-stage project gave me the courage to continue exploring audio-visual interactive software.

Thank you again to Leo for his dedicated guidance and support, to Jules and Joe for their technical support, to all the teachers, classmates and friends who came to watch, and to all the members of the DMSP_presence group.