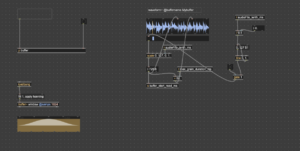

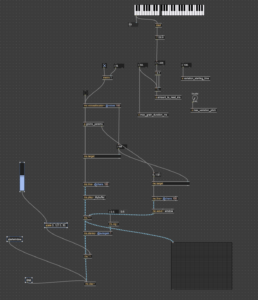

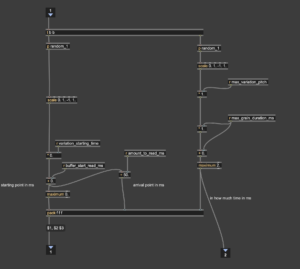

Over the past few weeks, I have been developing sound processing tools in Max/MSP for my project. The main component completed so far is a sound particle effect processor, which manipulates audio granularly, that is, by working with short fragments (grains) of a recording. This effect will play a significant role in shaping the sonic textures of the final installation, deconstructing and reconstructing the recorded industrial sounds dynamically.
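To illustrate the general idea of granular processing outside Max/MSP, here is a minimal Python sketch. It is not the actual patch used in this project: the function names (`hann`, `granulate`), the parameters (`grain_size`, `hop`, `num_grains`, `seed`), and the sine-wave test source are all assumptions made for illustration. It cuts short grains from random positions in a source signal, fades each one with a Hann window, and overlap-adds them into a new texture.

```python
import math
import random


def hann(n):
    """Hann window of length n; tapers each grain so its edges do not click."""
    if n == 1:
        return [1.0]
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def granulate(samples, grain_size=256, hop=128, num_grains=20, seed=0):
    """Rebuild a signal from windowed grains read at random source positions.

    Hypothetical helper for illustration only; parameter names are assumptions.
    """
    if len(samples) < grain_size:
        raise ValueError("source must be at least one grain long")
    rng = random.Random(seed)  # seeded so the result is repeatable
    window = hann(grain_size)
    out = [0.0] * (hop * (num_grains - 1) + grain_size)
    for g in range(num_grains):
        # Pick where this grain is read from (both bounds inclusive).
        start = rng.randint(0, len(samples) - grain_size)
        offset = g * hop  # where the grain is written in the output
        for i in range(grain_size):
            out[offset + i] += samples[start + i] * window[i]
    return out


# Usage: a one-second 220 Hz sine stands in for a field recording.
sample_rate = 8000
source = [math.sin(2 * math.pi * 220 * t / sample_rate) for t in range(sample_rate)]
texture = granulate(source)
```

In this sketch, a smaller `grain_size` tends to blur the source into a noisier texture, while a larger one keeps more of the original character; a hop of half the grain size keeps the overlapping windows at a roughly constant level.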

At this stage, I have not yet decided on the interaction methods for the final installation. Because the nature of audience interaction will depend heavily on the collected sound material and the spatial design of the installation, I will settle these aspects after the preliminary sound collection and 3D modeling phases are complete. This keeps the interaction methods integrated into the overall experience rather than developed in isolation.

My next step is to continue building additional sound processing tools in Max/MSP, refining effects that will contribute to the immersive auditory landscape of the project. These may include spatialized reverberation, frequency modulation, and real-time sound transformations that respond to audience engagement.

By structuring the development process this way, I aim to first establish a versatile set of sound manipulation tools, then tailor the interaction mechanics based on the specifics of the sound environment and installation setup. This approach will allow for greater flexibility and ensure that the interactive elements feel natural and cohesive within the final experience.

I will provide further updates once I begin the field recording phase and initial modeling work, which will be crucial in shaping the interactive framework of the project.

Written by Jingxian Li (s2706245) & Tianhua Yang (s2700229)