Sound Design Feedback from Jules

Speaker Setup:

Jules suggested that the speaker arrangement could be more “irregular” to match the innovative nature of the exhibition. We can reorient the four speakers so that they “pair” differently with West Court’s built-in stereo system. For instance, we could angle them so that the field recordings are heard predominantly from the right and the data sonifications from the left. Changing the direction of the speakers would also let the audience hear both the direct sound and its reflections off the venue’s surfaces, adding another dynamic layer of auditory variation.

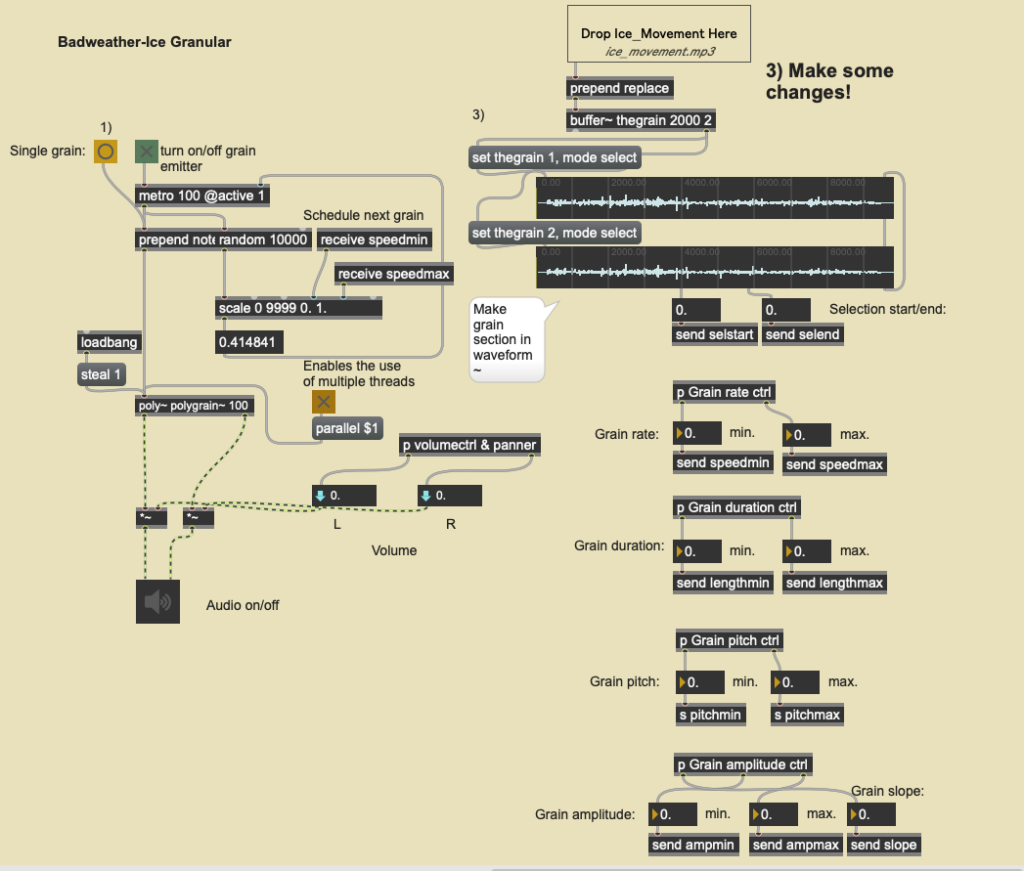

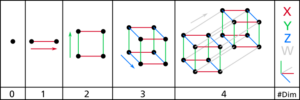

Spatial Mixing:

Jules also recommended spatial mixing to better evoke the LiDAR scanning process. Our data sonifications are currently output in stereo. Extending this setup so that the RGB sounds emanate from different heights, or so that the high-tech LiDAR sounds orbit around the listener, could considerably enrich the listening experience. Such adjustments would both convey the three-dimensional nature of LiDAR scanning and create a more immersive, engaging environment for the audience.
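
As a rough illustration of the orbiting idea, the sketch below pans a mono LiDAR sound around four speakers using pairwise constant-power panning. This is a standard amplitude-panning technique used here only for illustration, not the project's actual mixing setup. The ring layout (speakers at 45°, 135°, 225° and 315°), the 8-second orbit period and the function names `quad_gains`, `orbit_gains` and `render_orbit` are all hypothetical. Height (the RGB idea) is not modelled, since that would need elevated speakers.

```python
import math

# Hypothetical layout: four speakers on a ring around the listener,
# given as azimuths in degrees (0 = front, increasing clockwise).
SPEAKER_AZIMUTHS = [45.0, 135.0, 225.0, 315.0]


def quad_gains(azimuth_deg):
    """Pairwise constant-power gains for a source at azimuth_deg.

    Only the two speakers either side of the source receive signal,
    and the squared gains always sum to 1.
    """
    n = len(SPEAKER_AZIMUTHS)
    spacing = 360.0 / n
    # Angle measured from the first speaker, wrapped into [0, 360).
    rel = (azimuth_deg - SPEAKER_AZIMUTHS[0]) % 360.0
    # Speaker just before the source; min() guards against a float wrap to 360.0.
    i = min(int(rel // spacing), n - 1)
    j = (i + 1) % n  # next speaker around the ring
    frac = (rel - i * spacing) / spacing  # 0 at speaker i, 1 at speaker j
    gains = [0.0] * n
    gains[i] = math.cos(frac * math.pi / 2)
    gains[j] = math.sin(frac * math.pi / 2)
    return gains


def orbit_gains(t_seconds, period_seconds=8.0):
    """Gains for a sound completing one full orbit every period_seconds."""
    return quad_gains(360.0 * t_seconds / period_seconds)


def render_orbit(mono, sample_rate, period_seconds=8.0):
    """Spread a mono signal (list of floats) across the four speakers."""
    out = [[0.0] * len(mono) for _ in SPEAKER_AZIMUTHS]
    for k, x in enumerate(mono):
        gains = orbit_gains(k / sample_rate, period_seconds)
        for ch, g in enumerate(gains):
            out[ch][k] = x * g
    return out


# Example: a constant tone sampled 4 times per second, orbiting every 4 s.
channels = render_orbit([1.0] * 16, sample_rate=4, period_seconds=4.0)
```

At any moment only the two speakers either side of the source carry signal. Because their gains follow a cosine and sine pair, the squared gains always sum to 1, so perceived loudness stays roughly steady as the sound travels round the room.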

Visual Feedback

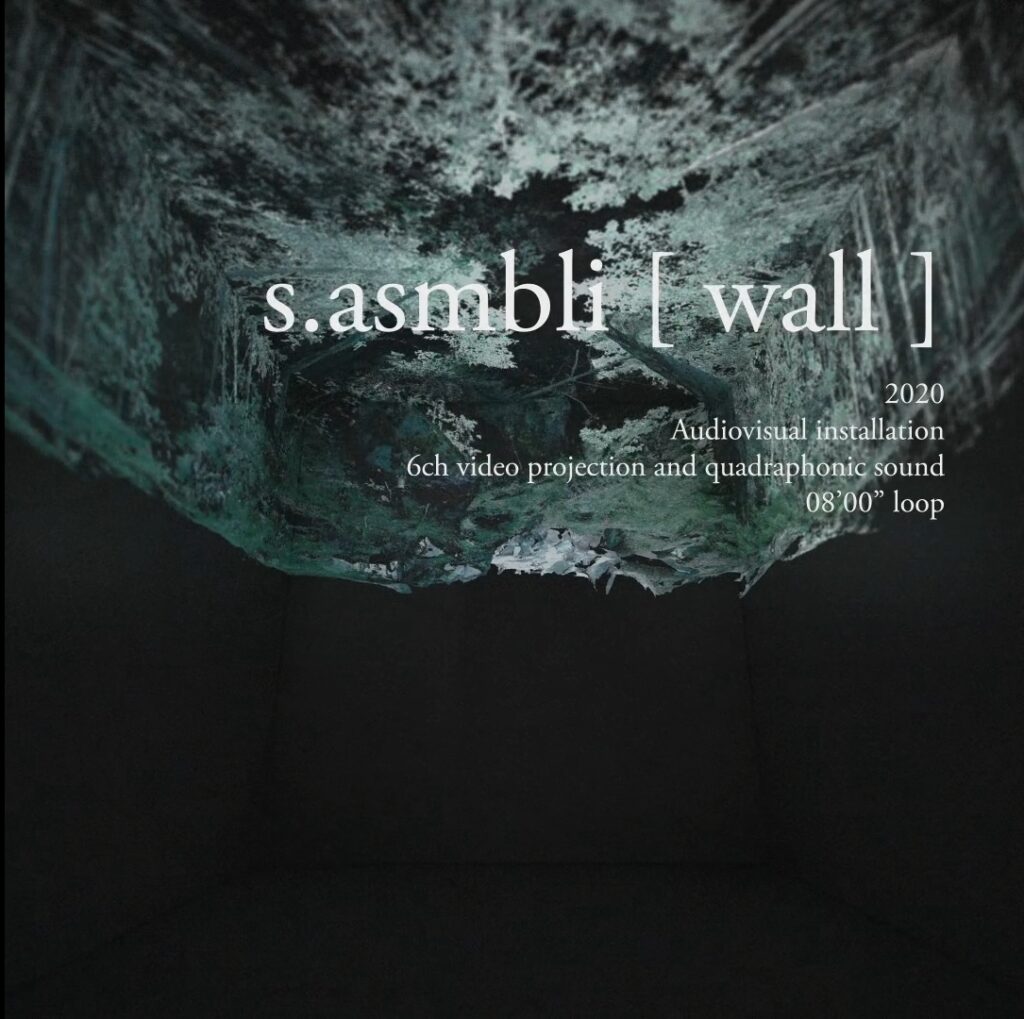

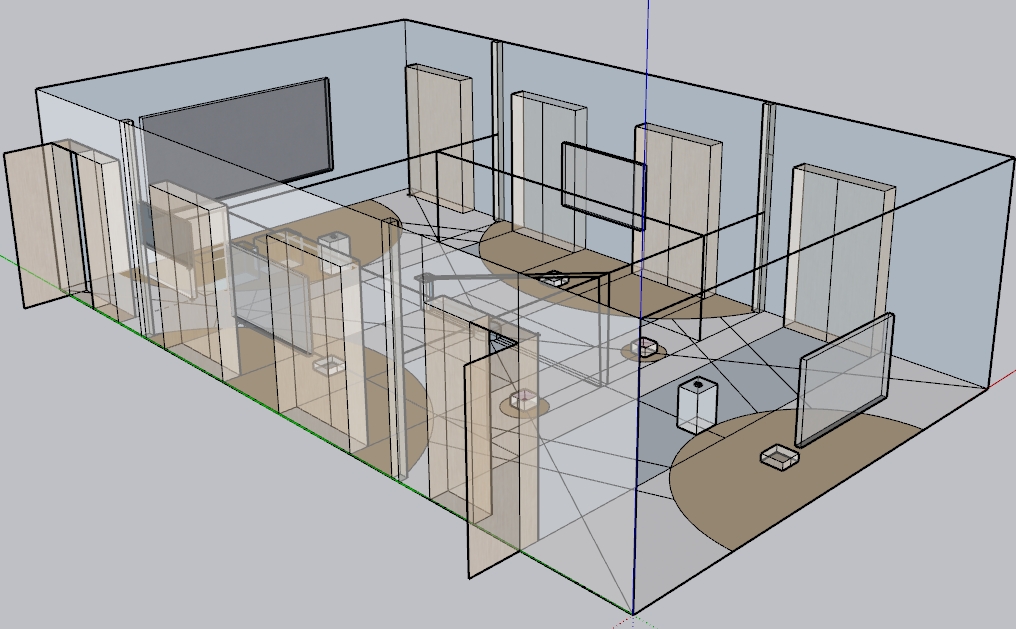

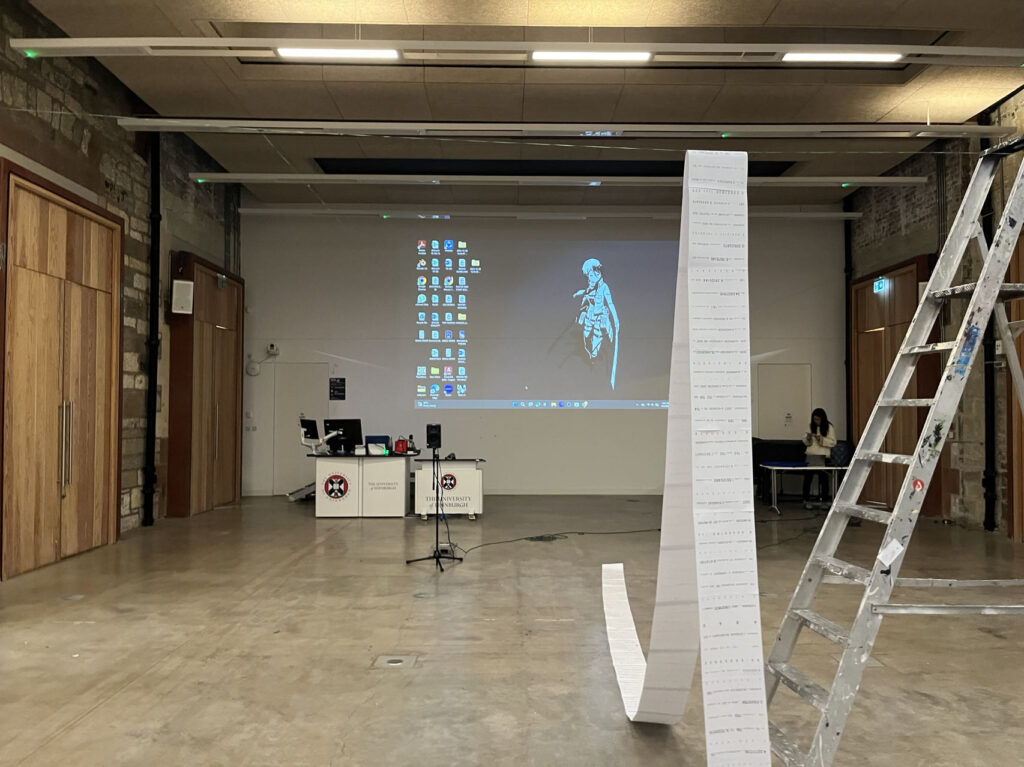

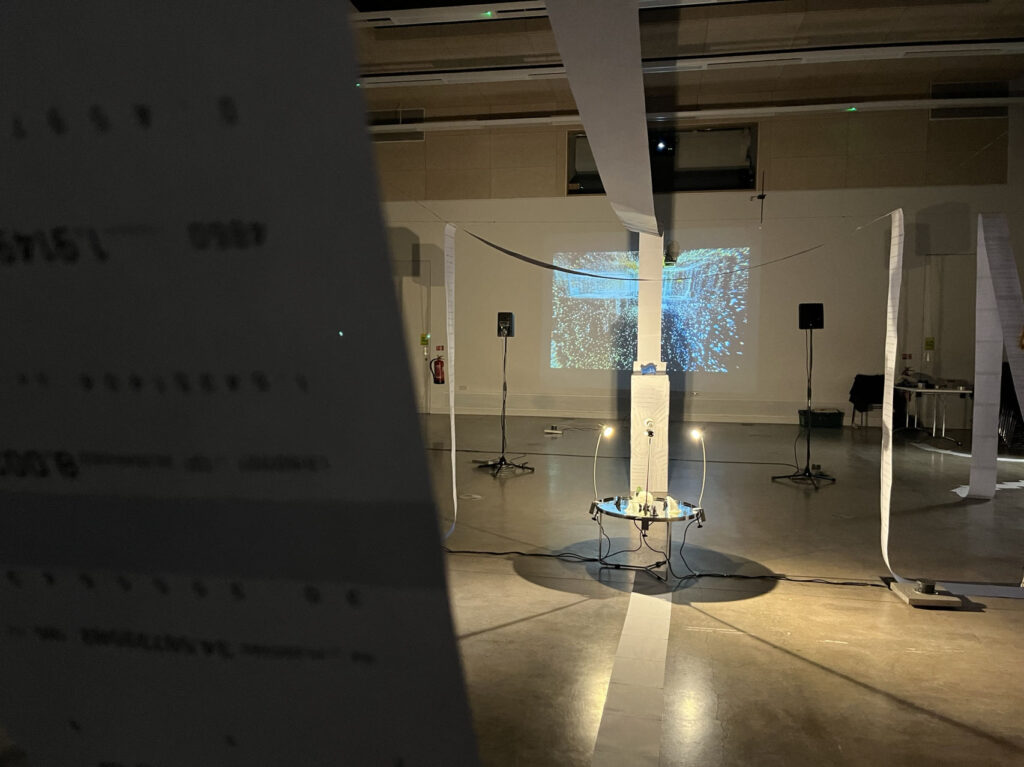

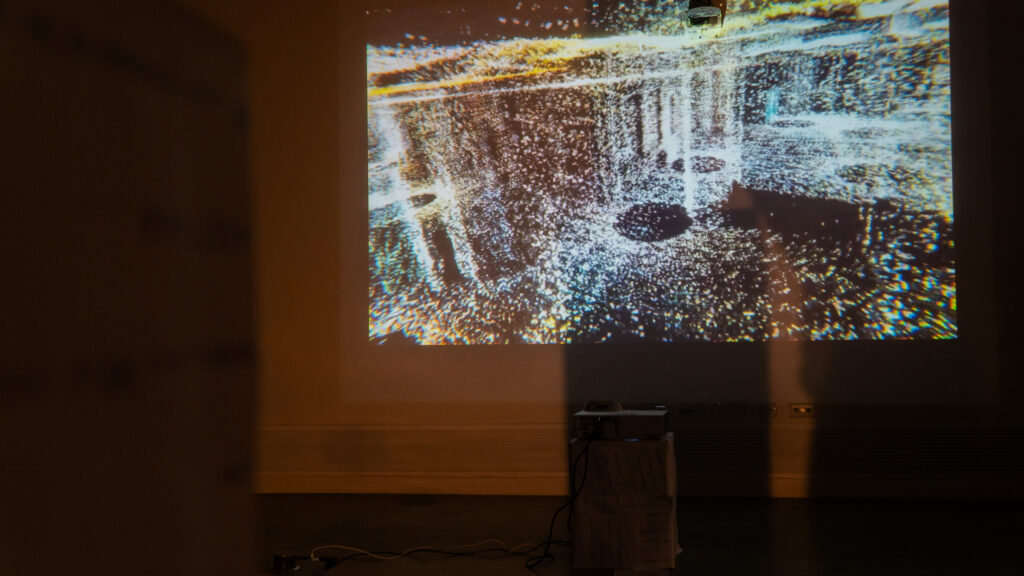

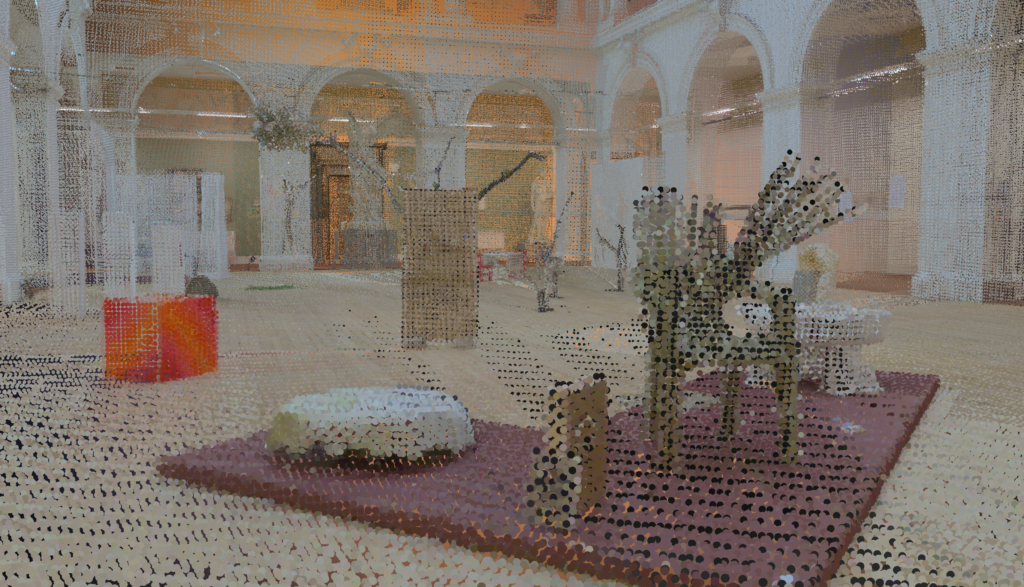

Visitors praised the exhibition’s use of space and the clear arrangement of its different elements. The central installation of suspended white paper was particularly effective: it drew attention and gave the space a sense of cohesion and intrigue. Interactive projects, such as those featuring Edinburgh College of Art (ECA) and Dean Village, stood out to visitors and were widely appreciated for their engaging, immersive qualities.

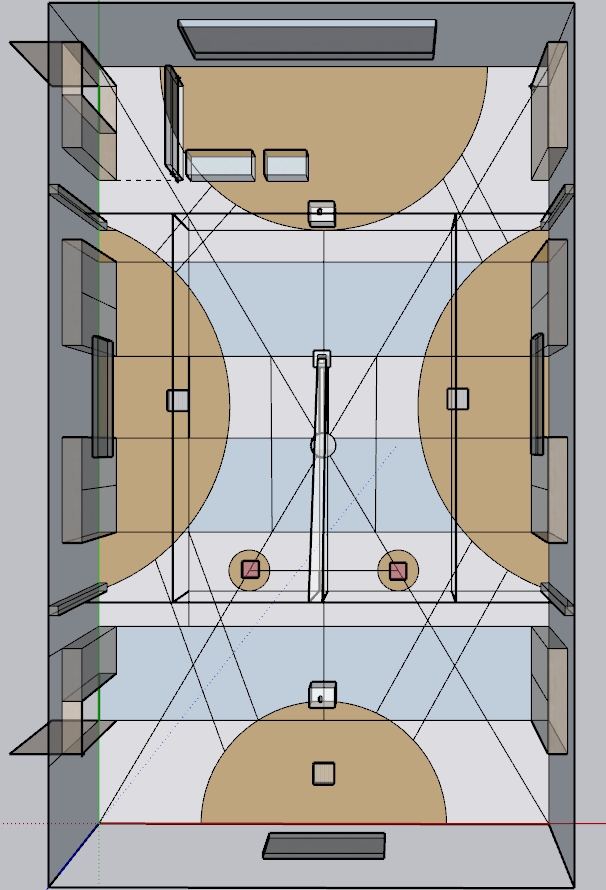

Jules also pointed out that the projects could be more closely connected to the exhibition venue itself. Specifically, Jules suggested aligning the layout of the venue with that of the ECA projects, so that the two integrate seamlessly and the audience feels more immersed. If the exhibition space mirrored the configuration and atmosphere of the projects, visitors would experience the work rather than merely observe it, encouraging deeper engagement with and appreciation of the content on show.

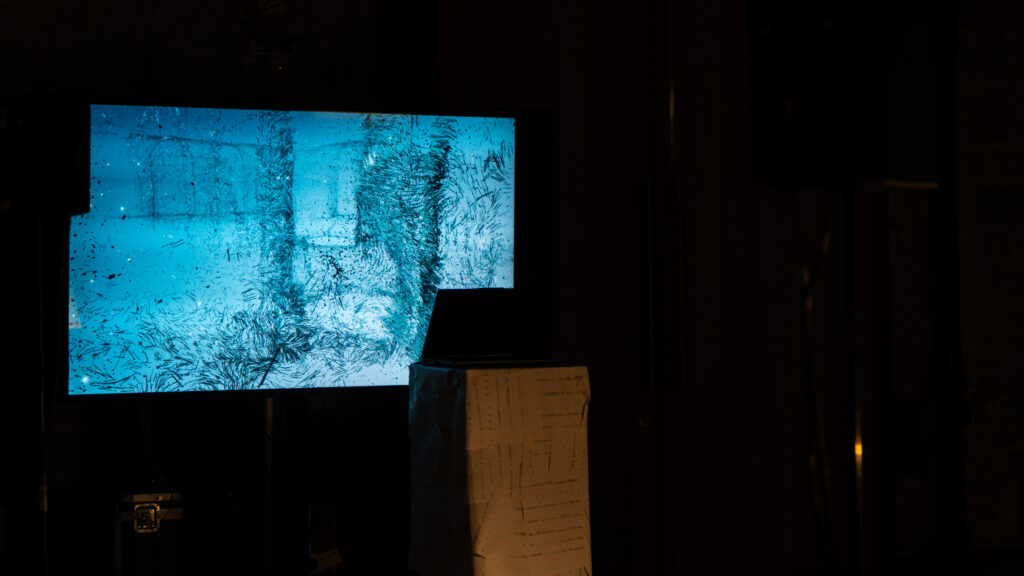

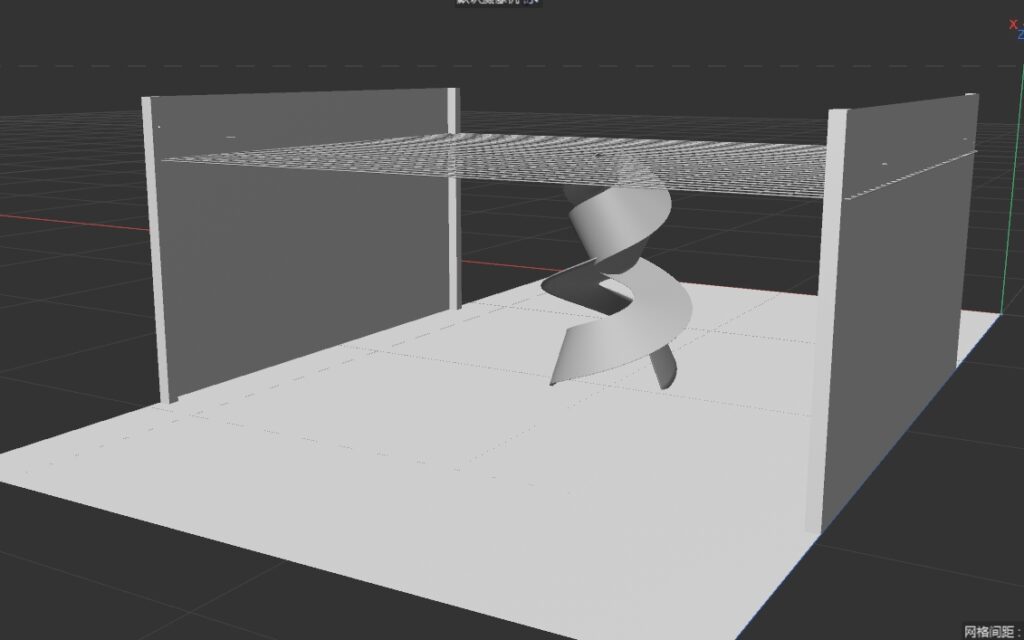

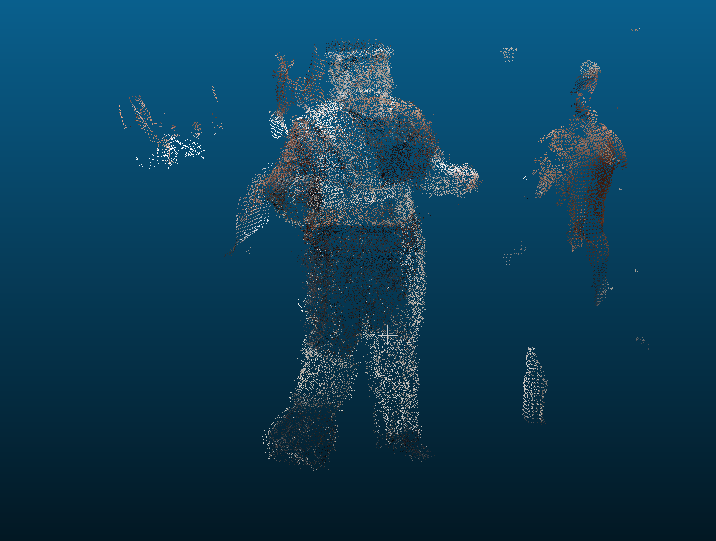

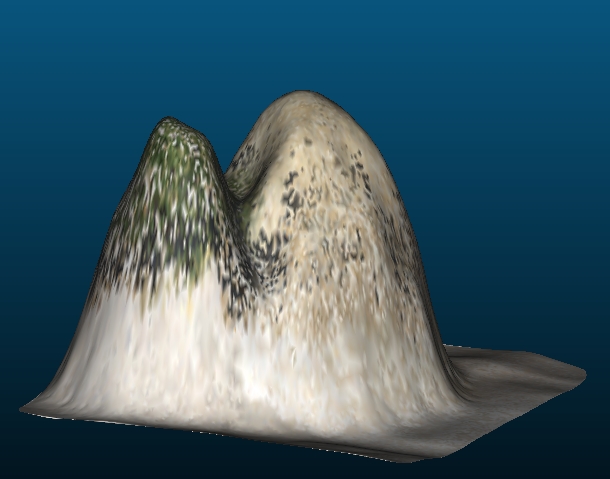

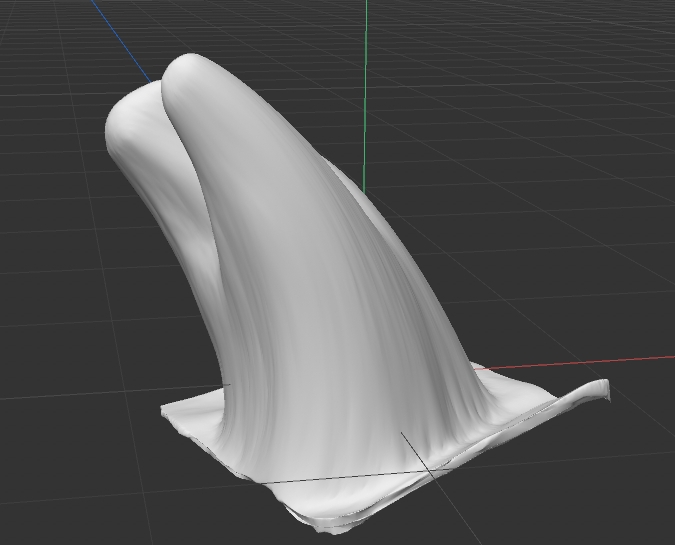

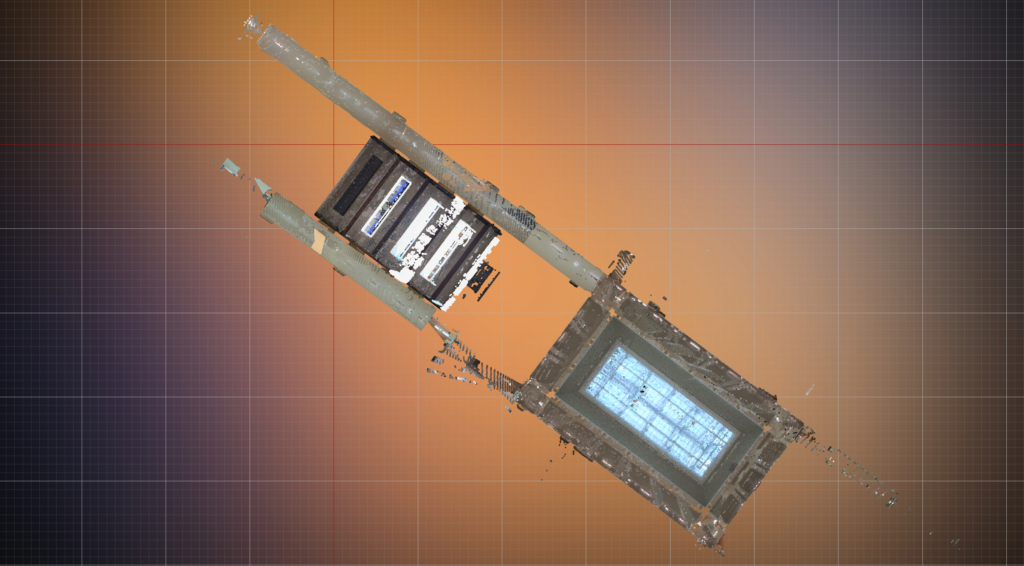

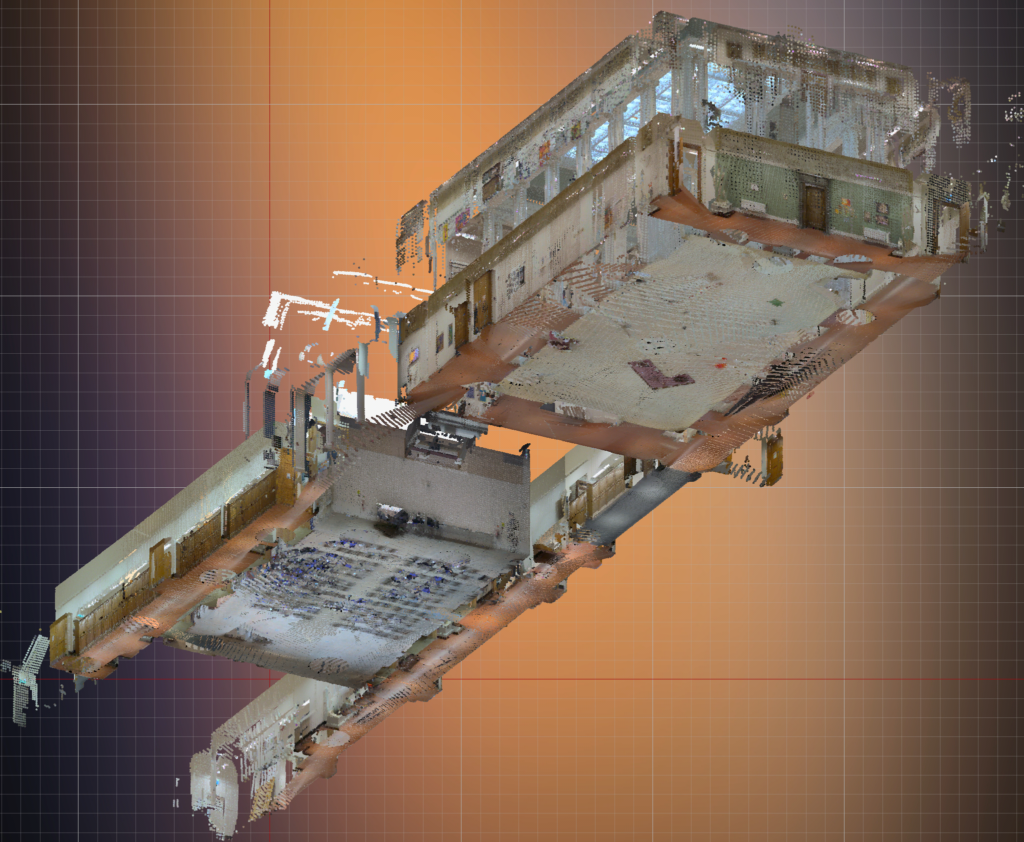

Figure 2: Rendering of the main object

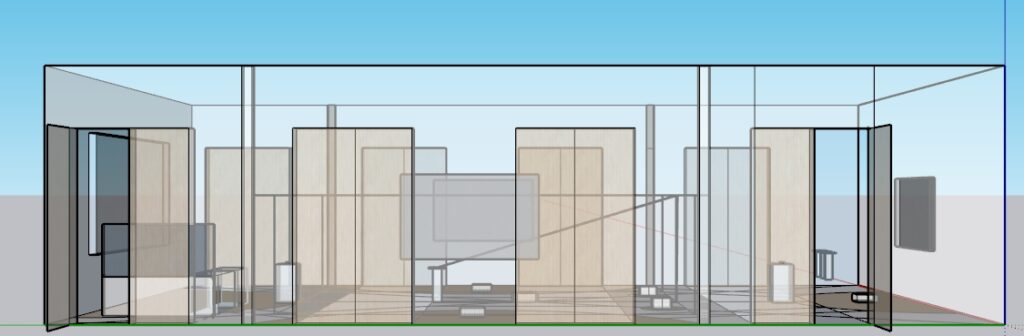

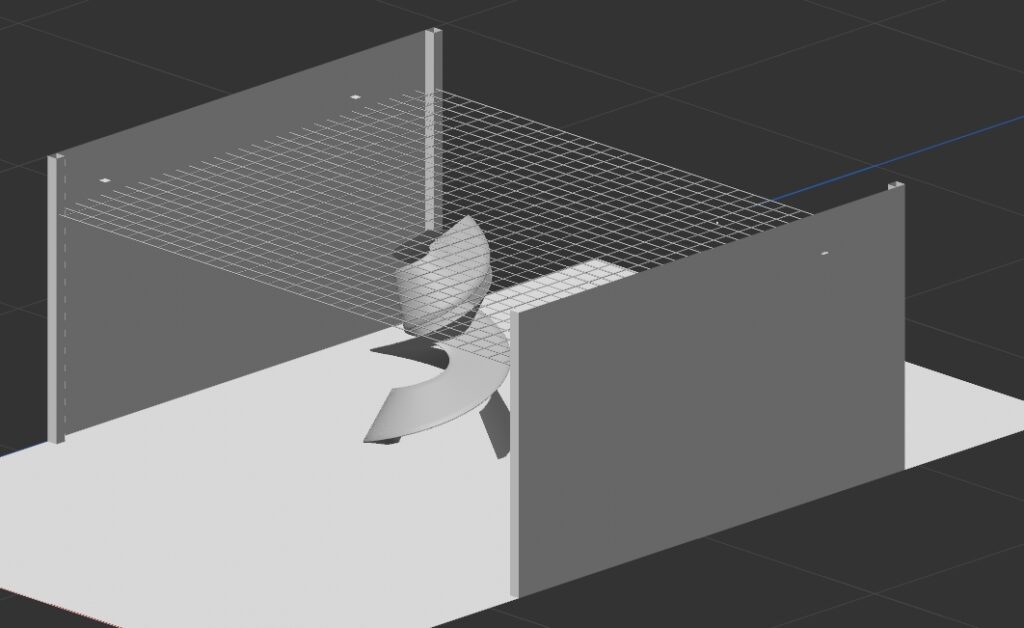

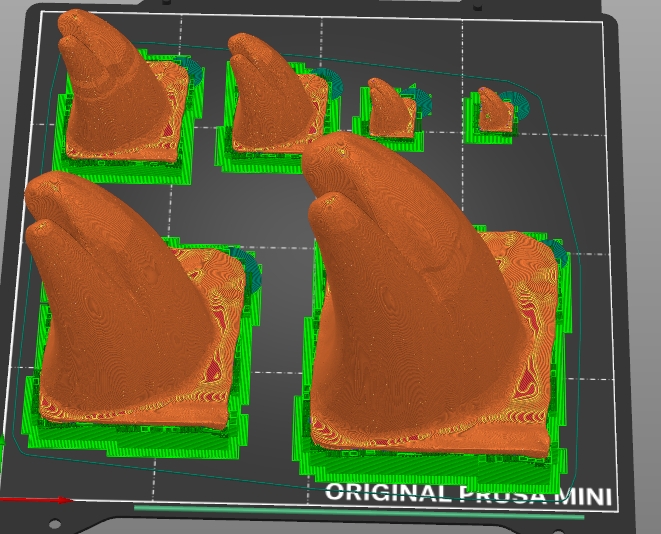

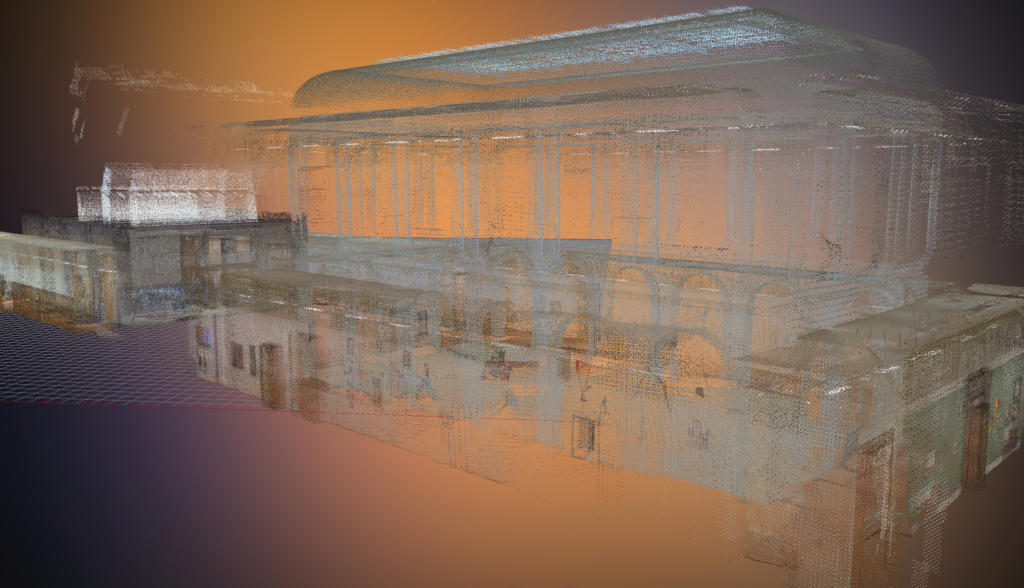

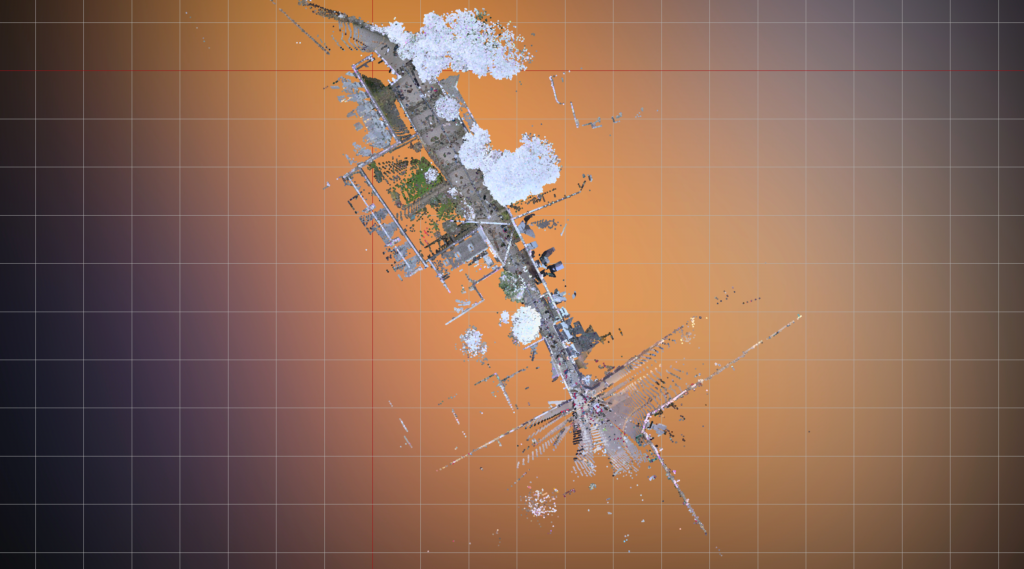

Figure 8: South corridor by West Court

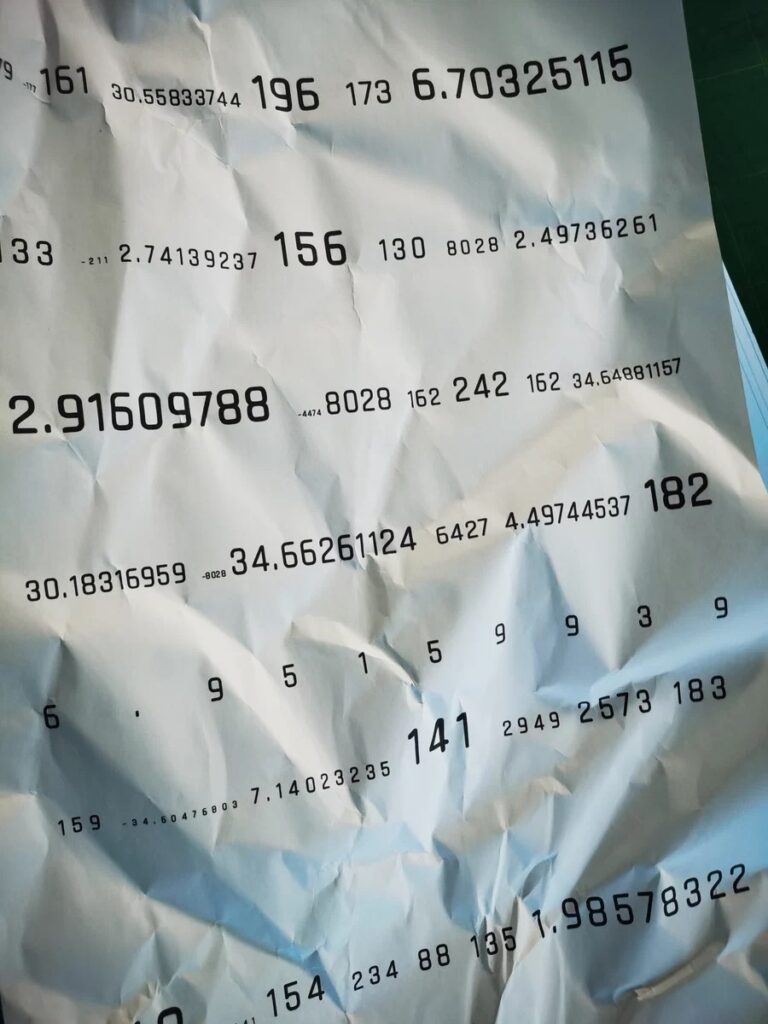

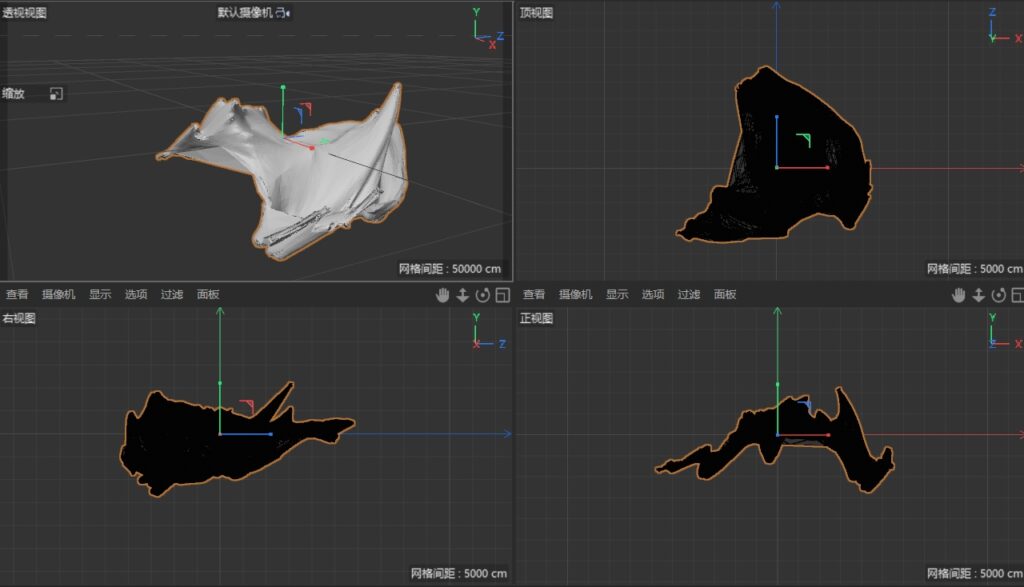

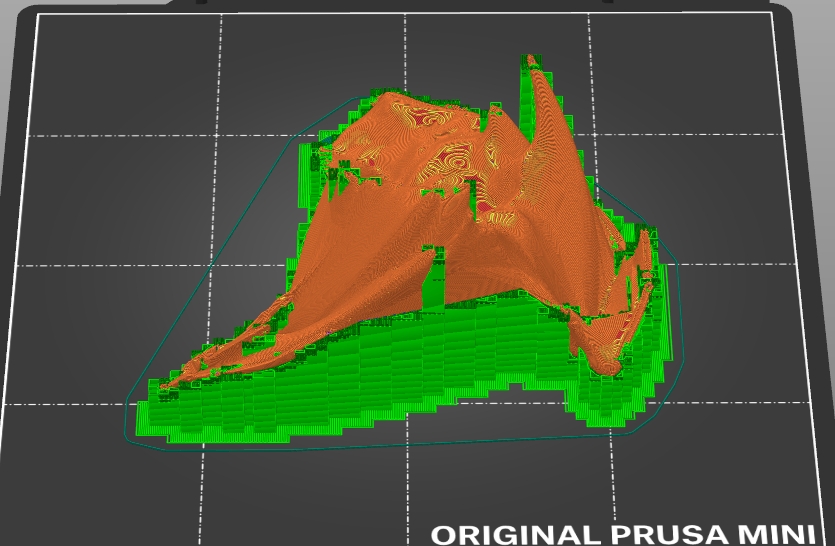

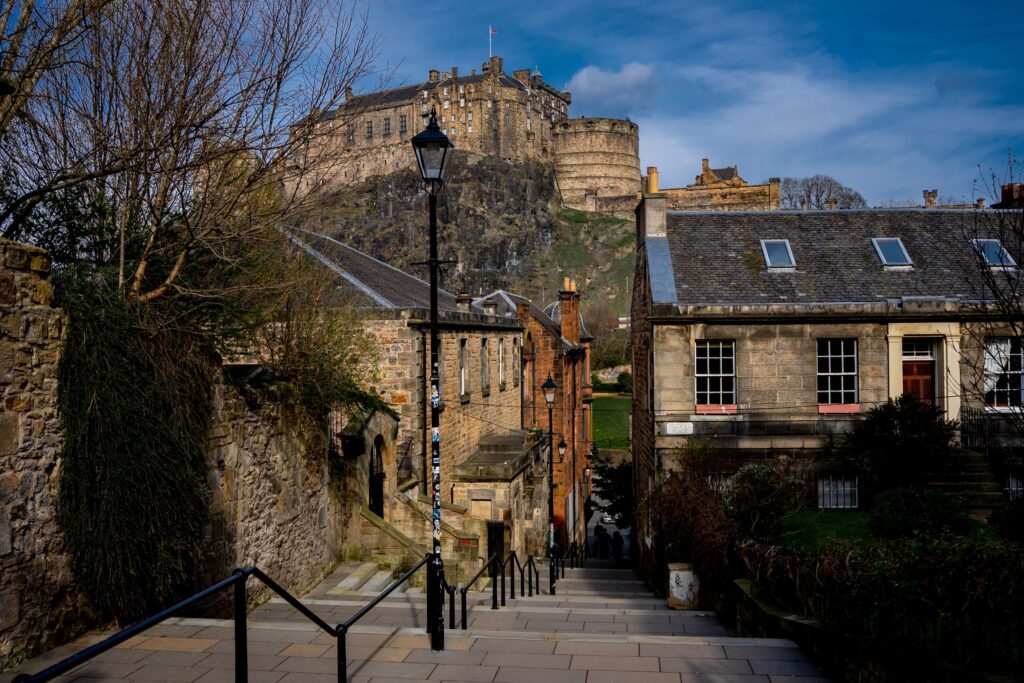

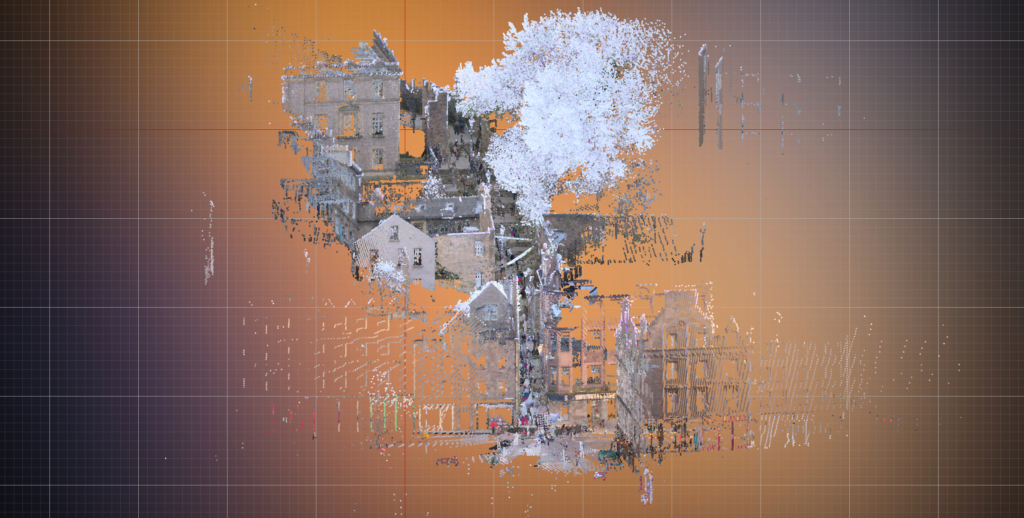

Figure 14: Vennel Steps, top view