We scanned eight subjects in total. Of these, four large scenes and two special objects received additional processing in other software during post-production.

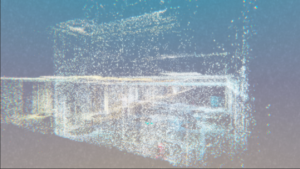

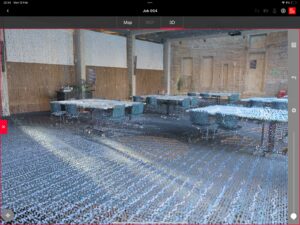

We chose EFI as the test site for our first scan and recorded the scanning process in detail so that it could serve as a reference for future scans. We chose EFI because the building is spacious, its rooms are laid out in a regular pattern, and its low foot traffic makes data collection more convenient.

To improve data collection, we used a two-phase approach. In the first phase, we scanned the classrooms, covering every corner to delineate the spatial extent of the building, and then examined the interior of each room in detail to capture finer features. The second phase concentrated on the building's internal corridors, working from the inside outward to gather comprehensive spatial data. This approach captured positional, color, and intensity data, which allowed us to assemble the point cloud carefully and to identify the best capture angles for detailed analysis.
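As a rough illustration of the point cloud data described above, the following minimal sketch shows one way that positional, color, and intensity values could be stored per point, and how points from the two scanning phases might be combined. The names `ScanPoint`, `merge_phases`, and `bounding_box` are hypothetical, and the field layout (RGB in 0-255, intensity normalised to 0..1) is an assumption; this is not the format produced by our scanner or software.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ScanPoint:
    """One sample in a point cloud (hypothetical layout, for illustration only)."""
    x: float
    y: float
    z: float
    rgb: Tuple[int, int, int]  # color, assumed 0-255 per channel
    intensity: float           # return strength, assumed normalised to 0..1


def merge_phases(*phases: List[ScanPoint]) -> List[ScanPoint]:
    """Concatenate the point lists from each scanning phase into one cloud."""
    merged: List[ScanPoint] = []
    for phase in phases:
        merged.extend(phase)
    return merged


def bounding_box(cloud: List[ScanPoint]):
    """Return ((min_x, min_y, min_z), (max_x, max_y, max_z)) for the cloud."""
    if not cloud:
        raise ValueError("cannot compute the extent of an empty cloud")
    xs = [p.x for p in cloud]
    ys = [p.y for p in cloud]
    zs = [p.z for p in cloud]
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))
```

Calling `merge_phases(room_points, corridor_points)` and then `bounding_box(...)` on the result would give the overall spatial extent covered by both phases, which is one simple way to check that the corridor scan actually extends the area covered by the room scans.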

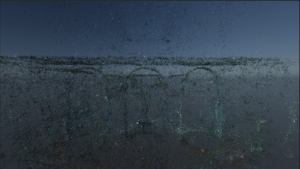

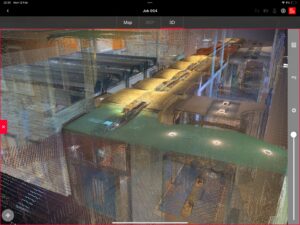

For our first main scan we chose the Edinburgh College of Art (ECA). Since we were still new to radar scanning technology at this point, we opted for an environment we knew well: the ECA Main Building. Specifically, we focused on the West Court and the Sculpture Court, along with the corridor that connects them. These areas host many campus activities and see considerable pedestrian traffic, which gave us rich and varied data. Because they are also regular venues for our coursework and final events, it felt especially meaningful to capture these spaces as data points.

Next, we moved beyond the campus to an off-campus site, the Vennel Steps. This site links Lauriston Place and West Port and offers a panoramic view of Edinburgh Castle. Carrying part of the city's collective memory, the Vennel Steps are more than a practical route: they act as a symbolic bridge between different parts of the city. From a data perspective, the site has a distinctive topography of staggered elevations, punctuated by the surrounding residential buildings. These features produce a point cloud that looks markedly different from those of our other locations.

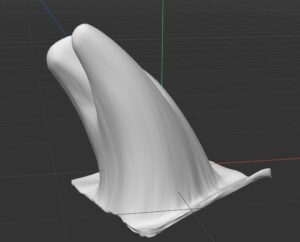

After that, our data collection moved to outdoor environments, focusing on Dean Village, a historic area in the north-west of Edinburgh. We began at the Water of Leith Walkway, where we scanned a wooden bridge along the path. Set in quiet surroundings, the bridge conveyed solidity, connection, and continuity, and its physical presence gave our journey a tangible starting point. We then turned to a nearby waterfall, chosen for its dynamic character in contrast to the static features of our previous scanning locations. This was our first attempt at capturing data from a large, constantly moving subject, and it broadened both our technical skills and our experience.

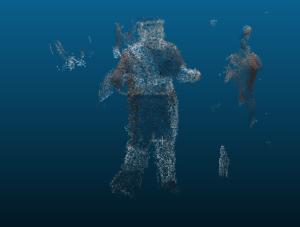

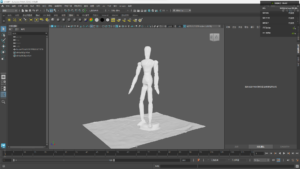

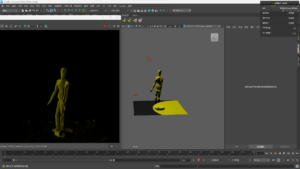

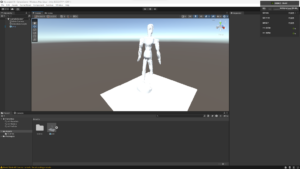

In addition to the large scenes, we also scanned a number of other subjects, including people and some small objects.