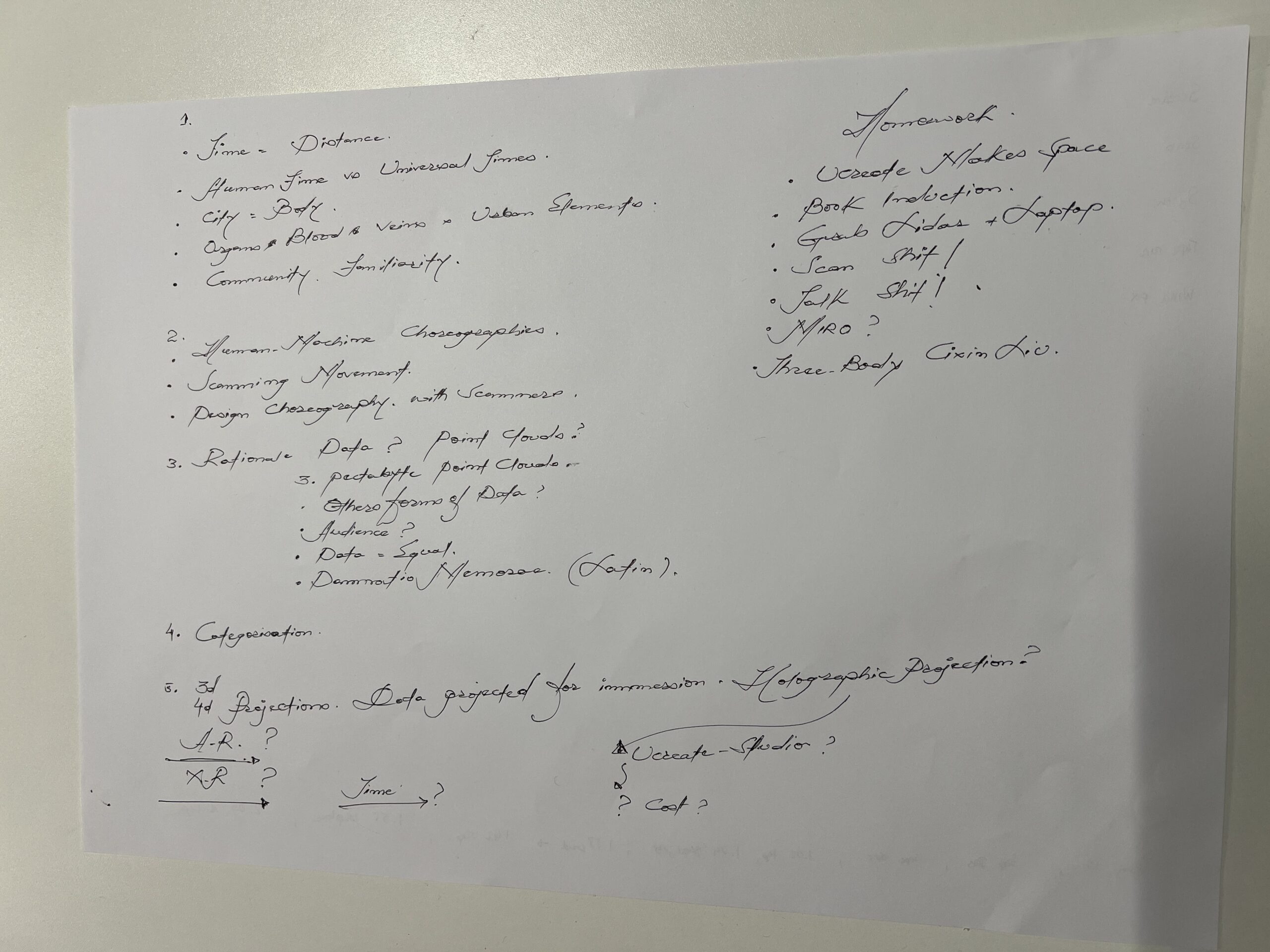

The combination of visuals and sound in film creates an immersive experience, and sound plays a crucial role in shaping that immersive space. In “Audio-Vision: Sound on Screen”, Michel Chion divides film sound into two categories: diegetic sound, which belongs to the narrative space, and nondiegetic sound, which lies outside it. The interplay and movement between these two types of sound produce the audience’s sensory immersion. Meanwhile, Ben Winters notes in “The Non-diegetic Fallacy: Film, Music, and Narrative Space” that nondiegetic sound partly signals the fictional status of the world created on screen. Can we, then, apply the theories of Michel Chion and Ben Winters to art installations? Could sound become an indispensable part of sensory immersion and work with the visuals to create a field within the “Place” of the installation?

The sound in this installation is divided into two parts. The diegetic part consists of field recordings, and the nondiegetic part consists of LiDAR data sonification. In the diegetic component, field recordings reveal unnoticed details of the real world. They give the audience a sense of familiar unfamiliarity and shorten the distance between the audience and the space of the installation. In the nondiegetic component, we primarily use synthesizer sounds, which are highly distinctive and quickly capture the audience’s attention. By combining diegetic and nondiegetic sound, the installation places its entire field at the intersection of reality and virtuality, making it both real and beyond reality.

The sound design of this project is strategically divided into two principal components: diegetic field recordings and nondiegetic data sonification.

Diegetic Component: This segment includes field recordings from iconic locations across Edinburgh: the historic Royal Mile, bustling Princes Street, tranquil Dean Village, busy Waverley Station, and relaxing Portobello Beach. Built from meticulous field recordings, this component captures the essence of Edinburgh’s diverse auditory environments. It weaves sounds such as the distant chatter of passersby and the rapid flow of water into a rich tapestry that embodies the city’s unique atmosphere. This approach lets exhibition visitors immerse themselves in Edinburgh’s complex soundscapes and experience the city as a cohesive hyperobject within a single exhibition space.

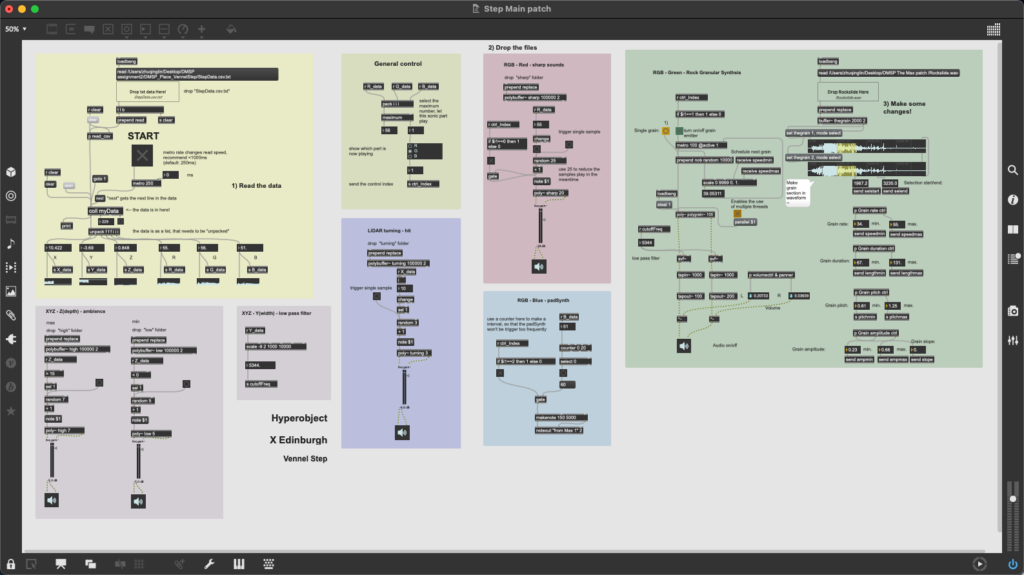

Nondiegetic Component: This component focuses on the sonification of LiDAR scan data, using Max/MSP to transform the point cloud into sound. Specifically, this data-driven sound design translates the spatial (XYZ) and color (RGB) data of Edinburgh’s Vennel Step and Dean Bridge into a captivating auditory experience. The sonification does not merely represent these places but reimagines them, creating a soundscape where technology meets artistry.

1. DIEGETIC FIELD RECORDING

The field recordings are edited into several one-minute segments, joined by ambient music transitions, to form a continuous 12-minute auditory journey. Each segment opens with a voiceover in a Scottish accent that introduces the featured location, grounding each auditory snapshot in its geographical and cultural context.

Figure 1: The edit session of the 12-minute field recording

The ambient music transitions give the audience a moment to refresh their ears before the next soundscape. They also reflect the high-dimensional nature of hyperobjects. The transitions use pads to establish a sonic zone conducive to mind-wandering, complemented by reversed bell sounds that symbolize the free flow of time. Together, these elements enrich the listening experience and simulate the sensation of moving through time and space within a multi-dimensional framework.

Figure 2: The arrangement session of ambient music transitions

This auditory experience transforms everyday noises into a profound exploration of space and memory, guiding you through an unseen Edinburgh where every sound tells a story. It extends an invitation to experience Edinburgh as never before, where the essence of each location is not only heard but deeply felt, creating a vivid and lasting impression of the city’s atmospheric diversity.

2. NONDIEGETIC DATA SONIFICATION

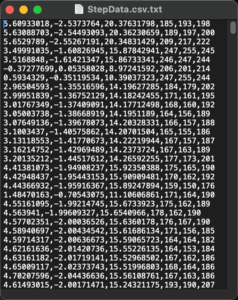

LiDAR scanning captures an environment as point cloud data, offering a distinctive way to uncover the hidden characteristics of a place through sound. With CloudCompare, the point cloud data from the LiDAR scans can be exported as CSV files that Max/MSP can read, so that the data can drive the audio directly.
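The export step can be illustrated with a small offline sketch. The text does not give the exact CSV layout or say which Max object reads it. This sketch therefore assumes a column order of X, Y, Z, R, G, B, with an optional header line such as `//X,Y,Z,R,G,B`. It also assumes the data is loaded into a Max `[coll]`, whose text format is one `index, values;` entry per line. `read_point_cloud` and `to_coll_lines` are hypothetical helper names.

```python
import csv
import io
from typing import List, NamedTuple


class Point(NamedTuple):
    x: float
    y: float
    z: float
    r: int
    g: int
    b: int


def read_point_cloud(text: str) -> List[Point]:
    """Parse an ASCII point cloud assumed to have columns X,Y,Z,R,G,B."""
    points = []
    for row in csv.reader(io.StringIO(text)):
        # Skip blank rows and header/comment rows such as "//X,Y,Z,R,G,B".
        if not row or row[0].lstrip().startswith(("//", "#")) or row[0].strip().upper() == "X":
            continue
        x, y, z = (float(v) for v in row[:3])
        r, g, b = (int(float(v)) for v in row[3:6])
        points.append(Point(x, y, z, r, g, b))
    return points


def to_coll_lines(points: List[Point]) -> List[str]:
    """Format points as [coll]-style entries: 'index, x y z r g b;'."""
    return [
        f"{i}, {p.x} {p.y} {p.z} {p.r} {p.g} {p.b};"
        for i, p in enumerate(points, start=1)
    ]


sample = "//X,Y,Z,R,G,B\n1.0,2.0,0.5,200,40,30\n1.1,2.0,0.6,30,180,60\n"
pts = read_point_cloud(sample)
print(to_coll_lines(pts)[0])  # 1, 1.0 2.0 0.5 200 40 30;
```

Keeping one point per indexed entry preserves the scan order, which later steps such as row-change detection rely on.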

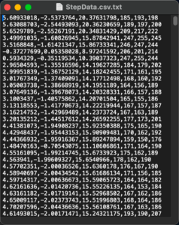

Figure 3: The raw point cloud data of Vennel Step

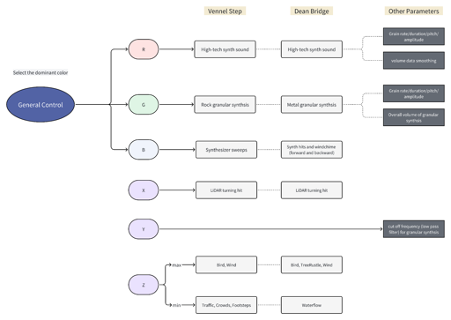

Within the Max patch, RGB-driven sounds are the primary sonic elements. A general control mechanism compares the R, G, and B values to identify the dominant color, which then triggers playback of a corresponding sound. This mapping rests on subjective color associations: red with sharp, intense sounds; green with natural sounds; and blue with harmonious sounds. The method audibly illustrates the visual spectrum and invites listeners to explore the emotional resonance of colors.
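The dominant-color logic can be sketched in a few lines. This assumes "dominant" simply means the largest of the three channel values, with ties going to red, then green. The sample file names are hypothetical placeholders, since the actual audio assets are not listed.

```python
from typing import Dict, Tuple

# Hypothetical sample names standing in for the project's actual audio assets.
COLOR_TO_SOUND: Dict[str, str] = {
    "red": "sharp_intense.wav",
    "green": "natural_texture.wav",
    "blue": "harmonious_pad.wav",
}


def dominant_color(r: int, g: int, b: int) -> str:
    """Return the name of the largest channel; ties favour red, then green."""
    channels: Tuple[Tuple[str, int], ...] = (("red", r), ("green", g), ("blue", b))
    # max() returns the first maximal item, so earlier channels win ties.
    return max(channels, key=lambda c: c[1])[0]


def sound_for_point(r: int, g: int, b: int) -> str:
    """Pick the sample to trigger for one point's colour."""
    return COLOR_TO_SOUND[dominant_color(r, g, b)]


print(sound_for_point(30, 180, 60))  # natural_texture.wav
```

In Max, the same comparison could be built from basic comparison objects feeding a router to three sample players. The Python version only makes the decision rule explicit.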

Depth is articulated through the Z data, introducing a layer of complexity to the soundscape. By mapping the vertical extremities of each site to distinct sounds, the installation captures the topographical variance of Edinburgh. This approach not only highlights the physical contours of the locations but also envelops the listener in a multi-dimensional auditory space.
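One way to realize "mapping the vertical extremities to distinct sounds" is sketched below. Z is normalized against each site's lowest and highest points, and the low-altitude and high-altitude layers are blended with an equal-power crossfade. The text does not say how the two layers are blended, so the crossfade is an assumption. The function names are hypothetical.

```python
import math
from typing import Tuple


def normalize_z(z: float, z_min: float, z_max: float) -> float:
    """Scale z into [0, 1] using the site's vertical extremes, clamping outliers."""
    if z_max <= z_min:
        raise ValueError("z_max must be greater than z_min")
    t = (z - z_min) / (z_max - z_min)
    return min(1.0, max(0.0, t))


def crossfade_gains(t: float) -> Tuple[float, float]:
    """Equal-power crossfade: (low-layer gain, high-layer gain) for t in [0, 1]."""
    angle = t * math.pi / 2
    return math.cos(angle), math.sin(angle)


low_gain, high_gain = crossfade_gains(normalize_z(12.0, 0.0, 20.0))
```

An equal-power curve keeps the combined loudness roughly constant as a point moves between the extremes. A linear fade would dip in the middle.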

Furthermore, significant shifts between adjacent rows of LiDAR data, which mark the completion of an angular scan, are signaled by a distinctive “hit” sound that audibly tracks the progress of the scanning process. Other data types that are not directly converted into sound serve as control signals, adjusting sound parameters such as cut-off frequency and volume in real time and adding a dynamic layer to the auditory experience.
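The two mechanisms above can be sketched as follows, under stated assumptions. A "significant shift" is taken to be a jump in 3D distance between consecutive points that exceeds a threshold. A control value is mapped onto a filter cut-off on an exponential scale. The threshold, the 200 to 8000 Hz range, and the choice of which data column drives the filter are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def scan_hits(points: Sequence[Vec3], threshold: float) -> List[int]:
    """Indices where the jump from the previous point exceeds `threshold`."""
    hits = []
    for i in range(1, len(points)):
        if math.dist(points[i - 1], points[i]) > threshold:
            hits.append(i)  # trigger the "hit" sound at this point
    return hits


def to_cutoff_hz(value: float, lo: float, hi: float,
                 f_min: float = 200.0, f_max: float = 8000.0) -> float:
    """Map a data value onto a cut-off frequency on an exponential scale."""
    t = min(1.0, max(0.0, (value - lo) / (hi - lo)))
    return f_min * (f_max / f_min) ** t


row_ends = scan_hits([(0, 0, 0), (0.1, 0, 0), (0.2, 0, 0), (5, 0, 0), (5.1, 0, 0)], 1.0)
```

An exponential mapping is used because equal steps in the data then sound like equal musical intervals in the filter sweep.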

Figure 4: The diagram of the Max patch

While the Max patches for both locations share a basic logic, modifications have been made in audio sample selection and data mapping to reflect the unique characteristics of each site.

Red: Shared Elements Across Sites

Both locations employ the same audio assets for the color red, which represents the high-tech texture of LiDAR. This uniformity emphasizes the technological underpinnings central to both sites, thereby reinforcing their conceptual linkage.

Green: Site-Specific Sound Design

For the color green, we have implemented granular synthesis to reflect the granularity of point cloud data typical of LiDAR scans. By dynamically adjusting parameters such as grain rate, duration, pitch, and amplitude, we transform the data’s variance into an auditory experience. Specifically, the base materials differ: Vennel Step incorporates sounds derived from rock, while Dean Bridge uses metal sounds, reflecting their respective construction materials.
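A hypothetical version of the variance-to-grain mapping is sketched here. It assumes the population standard deviation of a sliding window of data values is scaled against a chosen maximum. Higher variance then produces denser, shorter, higher and louder grains. The parameter ranges and the direction of each mapping are illustrative assumptions, not the patch's actual settings.

```python
import statistics
from dataclasses import dataclass
from typing import Sequence


@dataclass
class GrainParams:
    rate_hz: float      # grains per second
    duration_ms: float  # length of each grain
    pitch: float        # playback-rate multiplier (1.0 = original pitch)
    amplitude: float    # linear gain, 0..1


def grain_params(window: Sequence[float], max_std: float) -> GrainParams:
    """Map the spread of a window of data values onto granular settings."""
    spread = statistics.pstdev(window) if len(window) > 1 else 0.0
    t = min(1.0, spread / max_std) if max_std > 0 else 0.0
    return GrainParams(
        rate_hz=5.0 + 45.0 * t,
        duration_ms=200.0 - 170.0 * t,
        pitch=2.0 ** (t - 0.5),  # within half an octave of the source pitch
        amplitude=0.3 + 0.6 * t,
    )


smooth = grain_params([1.0, 1.0, 1.0], max_std=2.0)
rough = grain_params([0.0, 4.0], max_std=2.0)
```

The same mapping would apply to either source material, the rock recordings at Vennel Step or the metal recordings at Dean Bridge. Only the buffer being granulated changes.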

Blue: Interpreting the Color and the Site Feature

At Vennel Step, the color blue is conveyed through MIDI outputs that trigger harmonious synthesizer sweeps. These sweeps metaphorically depict the significant elevation changes of the steps, likening the vertical variation to the changing rate of an LFO (Low Frequency Oscillator) in a synthesizer. This auditory translation invites listeners to experience the physicality of the steps through sound.
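The elevation-to-LFO idea might be sketched like this, as one possible reading of the analogy. The absolute height change between consecutive points sets an LFO rate, which is then scaled onto a 7-bit MIDI control-change value (0 to 127) to send to the synthesizer. The step size, the 0.1 to 8 Hz range, and the use of a CC message are assumptions, since the text does not specify the MIDI mapping.

```python
from typing import List, Sequence


def lfo_rate_from_elevation(z_values: Sequence[float], max_step: float,
                            min_hz: float = 0.1, max_hz: float = 8.0) -> List[float]:
    """Steeper elevation change between consecutive points -> faster LFO (Hz)."""
    rates = []
    for prev, cur in zip(z_values, z_values[1:]):
        t = min(1.0, abs(cur - prev) / max_step)
        rates.append(min_hz + (max_hz - min_hz) * t)
    return rates


def hz_to_midi_cc(rate_hz: float, min_hz: float = 0.1, max_hz: float = 8.0) -> int:
    """Scale an LFO rate onto a 7-bit MIDI control-change value (0-127)."""
    t = (rate_hz - min_hz) / (max_hz - min_hz)
    return round(127 * min(1.0, max(0.0, t)))


rates = lfo_rate_from_elevation([0.0, 0.0, 0.5, 2.0], max_step=1.0)
cc_values = [hz_to_midi_cc(r) for r in rates]
```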

At Dean Bridge, blue is represented by a specific audio sample, made of harmonious synth hits and wind chimes, that is played both forwards and backwards. This technique symbolically reverses time, reflecting the bridge’s long-standing presence and its role as a temporal conduit linking the past, present, and future. Through these sounds, we encourage the audience to perceive the bridge across different temporal dimensions.
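Forward-then-backward playback can be sketched on a plain list of samples. The sketch assumes a simple palindrome loop; how the patch actually schedules the reversal, for example via a playback object with a negative rate, is not stated. `forward_then_reverse` is a hypothetical helper.

```python
from typing import List, Sequence


def forward_then_reverse(samples: Sequence[float], skip_turn: bool = True) -> List[float]:
    """Play a buffer forwards, then backwards (a palindrome loop).

    With skip_turn=True the last sample is not repeated at the turnaround,
    which avoids a one-sample stall where the direction flips.
    """
    forward = list(samples)
    backward = forward[-2::-1] if skip_turn else forward[::-1]
    return forward + backward


print(forward_then_reverse([1.0, 2.0, 3.0]))  # [1.0, 2.0, 3.0, 2.0, 1.0]
```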

Z Depth: Reflecting the Surroundings of the Site

Vennel Step’s low end is adjacent to a bustling road. When Z is in its lowest range, the sounds of traffic, conversing crowds, and pedestrian footsteps dominate, capturing the urban vibrancy of the area. As Z rises to its highest range, the ambience shifts dramatically. Chirping birds and rustling wind become audible; lower down, the city noise masks them, and they can be heard only at height.

At Dean Bridge, the extremely low range of Z captures the sound of water flowing under the bridge. At the highest range, the auditory scene shifts to include birds, wind, and the rustling of trees. This reflects the natural environment surrounding the bridge, embraced by venerable trees.

Figure 5: The Max patch of Vennel Step

This data sonification offers more than just a novel way to perceive data; it invites participants to immerse themselves in the digital heartbeat of Edinburgh, where every data point sings a piece of the city’s story, crafting an immersive narrative that bridges the gap between the digital and the sensory.

The overall sound design for this project is an endeavor to merge environmental sound and data sonification into a single immersive auditory experience. By capturing the essence of Edinburgh through field recordings and interpreting physical data through sonification, we offer a dual narrative of the city. This approach showcases the potential of sound as a medium for interpreting and representing LiDAR data. It also invites audiences to engage with the environment and the data in a deeply immersive, sensory way.

(Pic 2: Using AR functionality to take a photo of the scanned model alongside the original)

(Pic 2: Using AR functionality to take a photo of the scanned model alongside the original)