Projects

Main project

Our project investigated different ways of disseminating lidar data. We explored four media:

1. Paper: Printed raw lidar data offered a tactile form of engagement, letting visitors handle the information physically. Presenting the data as a tangible object was intended to support comprehension and a more immersive understanding.

2. 3D Printed Objects: Using 3D printing, we turned lidar-scanned data into physical objects, such as a representation of a sheep’s hat. Reinterpreting the data in tangible form offered a new perspective, turning abstract data into palpable objects and enriching the experiential dimension of the project.

3. Visual Objects: We presented processed lidar data in digital formats, applying a range of visual effects and manipulations. These visualization techniques offered different perspectives on the scanned environment, letting users explore the data through different visual lenses.

4. Sound Objects: The project incorporated both diegetic sound (field recordings) and nondiegetic sound (derived from the lidar-scanned data) from the scanned environment. These sounds were paired with the visual objects. By intertwining soundscapes with visual representations, we aimed to give users a holistic, immersive sensory experience that deepened their understanding of the scanned environment.

In summary, the project took a multisensory approach to disseminating lidar data, spanning tactile, visual, and auditory modalities. Through these media we aimed to offer users a comprehensive, immersive exploration of the scanned environment, moving beyond traditional methods of data dissemination toward new modes of engagement and understanding.

Paper:

The exhibition uses paper as a fundamental medium, representing the unprocessed lidar data obtained from the scans. Placed at key points throughout the space, these paper displays give visitors direct access to the raw data on which the whole curated experience is built. Their arrangement also serves a second purpose: it guides visitors through the space and conceals unwanted negative space, helping to create a cohesive and immersive exhibition narrative.

3D Printed Sheep’s Hat:

Using 3D printing, the project explores the transformation of traditional objects, such as the Edinburgh black face sheep’s hat. Through scanning and subsequent changes to its size and color, the hat undergoes a perceptual metamorphosis that takes it beyond its conventional identity. This intervention prompts viewers to reconsider their preconceived notions of form and function, deepening their engagement with the exhibited objects.

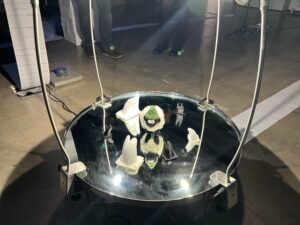

3D Printed Human Object:

Ming, a member of the group, was the subject of the 3D printed human object. Ming was scanned surrounded by black dustbin bags and foil; these materials return little usable signal to the scanner, creating data voids in the scan. The resulting 3D print captures Ming’s movements and highlights the interplay between presence and absence. Beyond recording Ming’s physical form, the piece shows how material choices during scanning can become an expressive tool.

Vennel Staircase Visual Object:

The Vennel Staircase, a well-known photographic landmark in Edinburgh, is reinterpreted through lidar scanning and digital manipulation. The staircase is reimagined as cascading downwards like a waterfall, an effect reinforced by accompanying sound. This intervention reframes a familiar space and invites viewers to consider the interplay between physical reality and digital representation, encouraging a renewed appreciation of the urban landscape.
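The report does not say which software produced the waterfall effect. As a rough illustration only, the following Python sketch shows one way a point cloud could be made to “fall”: each point drifts downward at its own speed and wraps back to the top of the scan. The `Point` class, the `cascade` function, its parameters, and the assumption that z is the vertical axis are all hypothetical, not the project’s actual pipeline.

```python
import random
from dataclasses import dataclass


@dataclass
class Point:
    x: float
    y: float
    z: float  # assumed to be the vertical axis


def cascade(points, t, fall_speed=1.5, margin=1.0, seed=0):
    """Displace a point cloud downward at time t (seconds) so it reads as falling water.

    Hypothetical sketch: each point falls at its own speed (a random jitter
    around `fall_speed`). Heights wrap within a vertical period of
    (scan height + `margin`), so a point that drops below the lowest scanned
    point re-enters just above the top of the scan.
    """
    rng = random.Random(seed)  # fixed seed keeps per-point speeds stable across frames
    z_min = min(p.z for p in points)
    z_max = max(p.z for p in points)
    period = (z_max - z_min) + margin
    out = []
    for p in points:
        speed = fall_speed * rng.uniform(0.5, 1.5)
        # Python's % returns a non-negative result for a positive divisor,
        # so the wrapped height stays in [z_min, z_min + period).
        z = z_min + ((p.z - z_min - speed * t) % period)
        out.append(Point(p.x, p.y, z))
    return out
```

Calling `cascade` once per frame with an increasing `t` animates the flow; because the seed is fixed, each point keeps the same speed from frame to frame, so the motion stays continuous rather than jittering.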

Dean’s Village Visual Object:

Dean’s Village, chosen because it is one of Edinburgh’s best-known tourist destinations, serves as a canvas for digital manipulation and reinterpretation. After lidar scanning and visual manipulation, Dean’s Village is depicted spiraling into an alternate dimension, which draws viewers in and invites personal engagement. This intervention offers a new perspective on a familiar place and underscores the potential of digital technologies to reshape our understanding of urban environments.
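The spiral effect is likewise described only in visual terms. A minimal sketch of one possible approach, assuming the cloud is a list of `(x, y, z)` tuples with z vertical, is a vortex: points rotate around the cloud’s vertical centre line, inner points turn faster, and all radii shrink over time. The `spiral` function and its `twist` and `pull` parameters are hypothetical.

```python
import math


def spiral(points, t, twist=2.0, pull=0.3):
    """Swirl an (x, y, z) point cloud into a vortex around its vertical centre line.

    Hypothetical sketch: each point rotates about the vertical axis through the
    cloud's horizontal centroid. Points nearer the axis turn faster
    (extra angle = twist * t / (1 + r)), and every radius shrinks by
    exp(-pull * t), so the scene appears to be drawn into a whirlpool.
    Heights (z) are left unchanged.
    """
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    shrink = math.exp(-pull * t)
    out = []
    for x, y, z in points:
        dx, dy = x - cx, y - cy
        r = math.hypot(dx, dy)
        theta = math.atan2(dy, dx) + twist * t / (1.0 + r)
        r_new = r * shrink
        out.append((cx + r_new * math.cos(theta), cy + r_new * math.sin(theta), z))
    return out
```

Making the rotation speed depend on distance from the axis is what turns a plain rotation into a spiral: the inner part of the village wraps around faster than the outer part.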

ECA Visual Object:

The guiding principle behind the design of the campus point cloud data was the reinterpretation of familiar views. The first piece was the Edinburgh College of Art (ECA) visual object, built around the theme of “natural growth”. It combined several scanned areas: the West Court of the ECA main building, the interior architecture of the Sculpture Court, and the open expanse of the ECA’s central courtyard. The point cloud model was processed so that each data point took on qualities suggestive of living vegetation, animated to change continuously like a growing plant. As a result, the ECA buildings took on the appearance of a post-apocalyptic structure overgrown with lush vegetation.

A third-person controller lets players move through and explore this environment. This interaction offers participants a break from their everyday experience of the campus and a transformed encounter with the built environment.
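The “natural growth” effect could be approximated in many ways, and the report does not specify the technique used. One hypothetical sketch, assuming z is the vertical axis and colors are RGB values from 0 to 1: a growth front rises from the ground, and each point the front has passed eases in to full size while its color blends toward green, so vegetation appears to climb the buildings. The function names, `rate`, `ramp`, and `LEAF_GREEN` are illustrative assumptions.

```python
def smoothstep(s):
    """Clamp s to [0, 1] and apply the standard smoothstep easing curve."""
    s = max(0.0, min(1.0, s))
    return s * s * (3.0 - 2.0 * s)


def growth_scale(z, t, z_min, rate=0.5, ramp=1.0):
    """Return a 0..1 growth factor for a point at height z at time t (seconds).

    Hypothetical: a growth front rises from the lowest scanned point at `rate`
    units per second; points the front has passed ease in from 0 to full size
    over a band `ramp` units deep behind the front.
    """
    front = z_min + rate * t
    return smoothstep((front - z) / ramp)


LEAF_GREEN = (0.25, 0.6, 0.2)  # hypothetical target color


def grow_color(rgb, g):
    """Linearly blend a point's scanned RGB color toward LEAF_GREEN by factor g."""
    return tuple(c + (leaf - c) * g for c, leaf in zip(rgb, LEAF_GREEN))
```

The growth factor can drive both point size and color each frame; using a smooth easing curve rather than a hard cutoff avoids points popping into view abruptly.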

EFI Visual Object:

Next, we processed the point cloud model of the EFI building. The Edinburgh Futures Institute (EFI), housed in the Old Royal Infirmary and part of the University of Edinburgh, is a hub for innovative, forward-looking scholarship. Reflecting this future-oriented identity, its design was built around the theme of “fantasy and technology”. The EFI particle representation therefore uses a distinctly technological palette dominated by vibrant blues and yellows. Each data point is processed quite differently from the ECA version: here, every point takes the form of a fluttering piece of paper, symbolizing the transient nature of how information is disseminated and evolves. Alongside the dominant blues and yellows, the particles take on a wider range of colors, including azure, emerald, amethyst, and citrine. The constantly shifting positions of the points give the whole ensemble an ethereal, dreamlike quality. Finally, a first-person camera immerses users in the reimagined EFI building, offering a journey akin to moving through a dream.

Sound Object:

The combination of visuals and sound in film creates an immersive experience, and sound plays a crucial role in shaping immersive spaces. In “Audio-Vision: Sound on Screen”, Michel Chion divides film sound into two categories: diegetic sound, which belongs to the narrative space, and nondiegetic sound, which lies outside it. The interplay and transformation between these two types of sound create sensory immersion for the audience. Meanwhile, Ben Winters notes in “The Non-diegetic Fallacy: Film, Music, and Narrative Space” that nondiegetic sound is partly a sign of the fictional status of the world created on screen. Can we therefore apply the theories of Michel Chion and Ben Winters to art installations, making sound an indispensable part of sensory immersion and letting sound work with visuals to create a field within the “Place” of this installation?

The installation’s sound has two components, diegetic and nondiegetic. The diegetic component consists of field recordings, while the nondiegetic component comes from sonifying the lidar data. The field recordings reveal unnoticed details of the real world, giving the audience a sense of familiar unfamiliarity; this kind of sound narrows the distance between the audience and the space of the installation. The nondiegetic component relies mainly on synthesizer sounds, which are highly distinctive and quickly capture the audience’s attention. By combining diegetic and nondiegetic sound, the installation places its whole field at the intersection of reality and virtuality, making it feel both real and beyond reality.
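The report does not describe how the lidar data was sonified. A common technique is parameter-mapping sonification, in which data attributes are mapped to sound parameters. The sketch below assumes a simple mapping (horizontal position to note onset, height to pitch, return intensity to loudness) and produces note events that could drive a synthesizer. The `LidarPoint` fields, the MIDI note range 48 to 84, and the 10-second default duration are hypothetical choices, not the project’s settings.

```python
from dataclasses import dataclass


@dataclass
class LidarPoint:
    x: float          # horizontal position along the scan
    z: float          # height, assumed to be the vertical axis
    intensity: float  # return intensity, assumed normalised to 0..1


def midi_to_hz(m):
    """Equal-tempered frequency for MIDI note m (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((m - 69) / 12.0)


def height_to_midi(z, z_min, z_max, low=48, high=84):
    """Map height linearly onto the MIDI range [low, high], rounded to a semitone."""
    frac = (z - z_min) / (z_max - z_min) if z_max > z_min else 0.0
    return round(low + frac * (high - low))


def sonify(points, duration=10.0):
    """Turn a point cloud into (onset_seconds, frequency_hz, amplitude) note events.

    Hypothetical mapping: the scan is 'read' left to right over `duration`
    seconds; x sets when a note starts, height sets pitch, and return
    intensity (clamped to 0..1) sets loudness.
    """
    xs = [p.x for p in points]
    zs = [p.z for p in points]
    x_min, x_max = min(xs), max(xs)
    z_min, z_max = min(zs), max(zs)
    x_span = (x_max - x_min) or 1.0
    events = []
    for p in sorted(points, key=lambda q: q.x):
        onset = (p.x - x_min) / x_span * duration
        freq = midi_to_hz(height_to_midi(p.z, z_min, z_max))
        amp = max(0.0, min(1.0, p.intensity))
        events.append((onset, freq, amp))
    return events
```

Rounding pitches to whole semitones keeps the output musical rather than a continuous glissando; a real installation would likely thin the cloud first, since a full scan contains far more points than can be heard as distinct notes.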