Here is the PDF of Submission 1.

Please read the following blog posts in order.

dmsp-place24

Context and Objectives

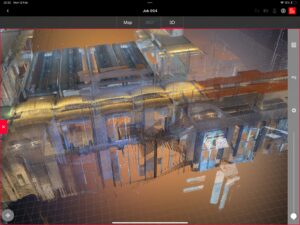

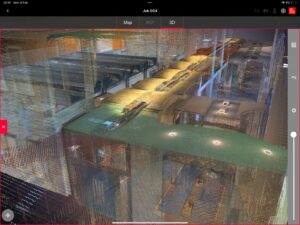

The Old Medical School, one of the oldest medical schools and a building of substantial height, was selected as the primary site for practising the LiDAR scanning techniques learned in uCreate training.

Technology Selection

The BLK360 G1, with its extensive range capabilities, was chosen for its ability to capture the full scope of the building’s façade, which was critical for the comprehensive data collection required for this historic structure.

Scanning Methodology Adjustment

Initial plans to conduct a central scanning operation were altered upon discovery that the extremities, specifically the corners, were not being captured effectively. A strategic decision was made to scan from point 2, facilitating a seamless integration with point 3’s scan for complete data stitching.

Adaptive Scanning Strategy

Faced with the challenge of scanning the entire site in one session, the team adapted their approach by employing multiple scanning points and conducting repeat scans to ensure accuracy. This underscored the importance of pre-planning scanning operations according to the unique characteristics of each site to achieve complete data acquisition.

Selection Rationale

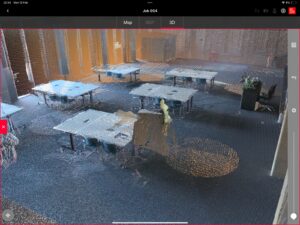

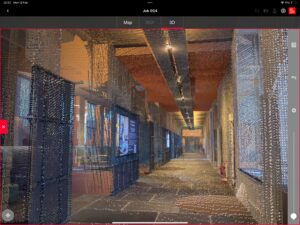

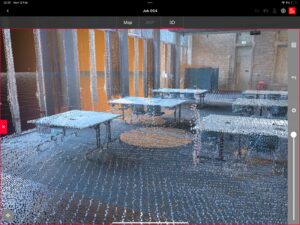

The Edinburgh Futures Institute was selected for the second case study due to its historic significance within the University of Edinburgh and its embodiment of modern challenges and educational methods in its architecture, providing a rich comparison to the Old Medical School.

Advanced Scanning with BLK360 G2

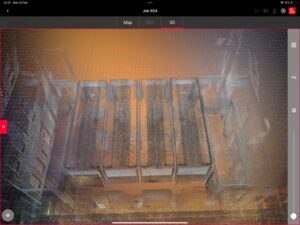

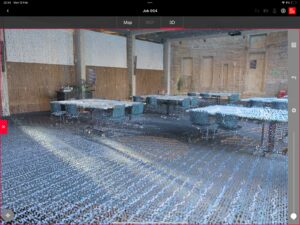

For this second scan, the advanced BLK360 G2 was utilized for its enhanced scanning speed and high-precision range. The process was divided into two distinct phases: classroom scans to establish building dimensions and detailed corridor scans to capture the finer architectural elements.

Classroom Scanning

The first phase involved scanning from each classroom corner, capturing detailed measurements to establish the building’s spatial dimensions. This provided a foundational layout from which further details could be extrapolated.

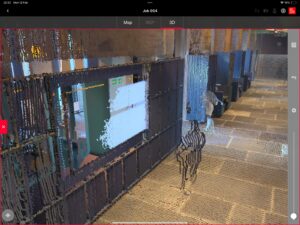

Corridor Detail Scanning

The second phase focused on the internal corridors, using an inside-out scanning approach. This comprehensive method yielded not only spatial data but also detailed color, intensity, and textural data, which would be vital for future design considerations.

Data Processing and Workflow

The data processing stage was crucial in understanding the storage and manipulation of point cloud data. The team gained expertise in selecting and filtering data, as well as in mastering the software workflow necessary for processing LiDAR data, which included:

Initial data capture with the LiDAR scanner.

Data synchronization via Cyclone FIELD 360 on an iPad.

Point linking and exportation in a specified format.

Importation into Cyclone REGISTER 360 for editing.

Selection of specific data attributes for final export, detailing key characteristics like distance, color, and material properties.

Projecting an Image onto Water, Windows, and Other Challenging Surfaces – GoboSource

Based on Ming’s idea of creating four dimensions, we came across a project called Cube Infinite. It uses six mirror surfaces assembled into a cube. The makers explained that looking into it through an opening gives a vertigo-like sensation. This made me wonder whether a four-dimensional feel could be achieved by projecting inside such a cube, creating an infinite realm within the scanned buildings or one built from scanned images of outdoor places.

When Infinity Comes To Life (youtube.com)

This gave us an idea of how different materials come together in the same place and give us different visual experiences. In this project, they use a specific kind of mirror that makes things appear concave as you come closer and convex as you move further away. At an optimum distance, it appears to be flat. How would this surface change the visual experience if the scanned projections were cast onto it?

This gave rise to another thought about surfaces that interact with human movement or touch. Exploring not only the movement but also enhancing it with projections and sounds that correspond would be something interesting to work on.

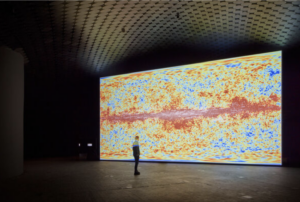

The art of Japanese composer and artist Ryoji Ikeda (1966) consists of precise soundscapes and moving image. The artist approaches his materials in the manner of a composer. Whether a pixel, sound wave, space or data, Ikeda sees them all as part of the composition process.

Ikeda’s exhibition at Amos Rex draws inspiration from the museum’s extraordinary architecture. On view are five installations, which explore the invisible dimensions of the universe and push the limits of perception.

Data translation: The work translates various data inputs into audio-visual outputs that reorder how we see and hear the world around us. This speaks to Ikeda’s broader artistic aim of revealing the hidden structures and patterns that underlie our reality.

Immersive environment: The 2,200 square meter domed underground space, combined with surround sound and large-scale projections, creates an immersive experience that envelops the audience. The industrial hum and vibrations add to this effect.

Hypnotic, meditative visuals: Works like “mass” feature repetitive, pulsing geometric animations that have a hypnotic, trance-like quality. They act as visual mantras that captivate attention and inspire imagination.

Perceptual phenomena: Many of the works leverage illusory effects, afterimages, and optical tricks to engage the viewer’s perception. For example, “spin” uses a rotating laser projection to create a flickering 3D/2D effect.

Ryoji Ikeda adds to his universe of data in Helsinki – The Japan Times

The proposed installation aims to redefine perceptions of Edinburgh by presenting its overlooked architectural elements through an amplified data-informed lens. Utilizing Lidar scanning technology, the project captures intricate details of iconic sites, dissecting them into abstract components. These elements are then projected onto unconventional materials, sourced from waste produced by the University of Edinburgh. By juxtaposing familiar landmarks with discarded materials, the installation challenges viewers to reconsider their preconceptions and engage with the city in a new light. Through this innovative approach, the project seeks to foster a deeper connection between the audience and the urban landscape, encouraging reflection on sustainability, consumption, and the inherent beauty of everyday spaces.

The proposed installation seeks to offer viewers a pulverised perspective on Edinburgh’s architectural landscape, challenging conventional notions of beauty and significance. Often, iconic sites in the city are revered for their historical or cultural importance, yet the everyday elements that comprise these landmarks are overlooked. We aim to shift this narrative by leveraging advanced Lidar scanning technology to capture the intricate details and nuances of these structures, dissecting them into their fundamental elements.

In a collaborative effort with the University of Edinburgh, the installation addresses waste management by repurposing discarded materials collected from the university premises. This approach not only promotes sustainability by reducing waste but also adds layers of meaning and context to the project, emphasizing the symbiotic relationship between architecture and environmental stewardship.

At its core, the installation employs an innovative projection mapping technique. Rather than projecting conventional images onto screens or walls, abstract representations of the scanned architectural elements are projected onto the collected waste materials. This juxtaposition challenges viewers’ perceptions and prompts contemplation on themes of consumption, waste, and environmental responsibility. By visually intertwining Edinburgh’s architectural heritage with waste materials, the installation encourages audiences to critically examine the balance between preservation and progress.

The selection of materials for the installation is deliberate, aiming to create contrast and tension. On one hand, there is the timeless elegance of Edinburgh’s architecture, abstractly represented through projections. On the other hand, there is the tangible reality of waste materials, symbolizing the transient nature of human consumption and its environmental impact. This contrast encourages viewers to engage with the installation and reflect on the environmental implications of modern living.

Additionally, the interactive nature of the installation invites viewers to actively participate. As they navigate through the exhibit, they are prompted to consider the relationship between the projected images and the physical materials on which they are displayed. This experiential approach fosters a deeper connection between the audience and the urban landscape, prompting reflection on the intrinsic beauty of everyday spaces and the importance of sustainability in preserving them for future generations.

In conclusion, the proposed installation represents a novel exploration of Edinburgh’s architectural identity, blending technology, art, and sustainability in a thought-provoking manner. By recontextualizing familiar landmarks and repurposing discarded materials, the project challenges viewers to reconsider their perceptions of the city and its built environment. Ultimately, it advocates for a more conscious and responsible approach to urban living, inviting audiences to envision a sustainable future for cities worldwide.

Conceptually, LiDAR is a laser technology that captures objects and three-dimensional surfaces in space, and a scan yields a data model. However, the raw scanned model generally has a very high face count and its UVs are not continuous. In the model I obtained, each face is a separate UV island that corresponds to one patch of colour on the texture map. This may make UVs and texture maps easier to generate automatically during scanning, but the automatic process can produce many fragmented, scattered textures, each assigned to its own material. A low-poly version of the scanned model can be generated with mesh decimation software, but unless the UVs and textures of that low-poly model are merged and reprocessed, it will carry too many materials and textures, along with too many UV seams and overlapping vertices, which makes normal baking difficult. So we probably won’t do much with the model’s materials, and will probably continue to use a point model rather than a face model at this stage. The treatment of the point model can be summarised in three steps:

a. Importing point cloud data:

First, import the point cloud data scanned by the LiDAR into the software of your choice. Many 3D tools support importing point cloud data, such as MeshLab and CloudCompare.

b. Cleaning and filtering:

LiDAR-scanned point cloud data may contain noisy or irrelevant points that need to be cleaned and filtered. This can be done by removing outlier points, performing smoothing operations, or applying other filters.

c. Point Cloud Reconstruction:

After cleaning and filtering, you can use point cloud reconstruction algorithms to convert the point cloud data into a surface mesh, which means that the points in the point cloud are connected to form a surface. This can be done with algorithms such as Poisson reconstruction or Marching Cubes.
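As a rough illustration of the cleaning step (b), the sketch below shows one common way of removing outlier points: a statistical filter that drops any point whose average distance to its nearest neighbours is unusually large. It is written in plain Python for readability and is only practical for small clouds; the function name `remove_outliers` and the parameters `k` and `std_ratio` are assumptions made for this example, not settings taken from our scanning software.

```python
import math

def remove_outliers(points, k=4, std_ratio=1.0):
    """Drop points whose mean distance to their k nearest neighbours
    is more than std_ratio standard deviations above the cloud average."""
    mean_dists = []
    for i, p in enumerate(points):
        # Brute-force neighbour search: fine for a demo, slow for real scans.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)

    mu = sum(mean_dists) / len(mean_dists)
    sigma = math.sqrt(sum((d - mu) ** 2 for d in mean_dists) / len(mean_dists))
    threshold = mu + std_ratio * sigma

    return [p for p, d in zip(points, mean_dists) if d <= threshold]
```

For example, passing a small regular grid of points plus one stray point far away from it should return the grid with the stray point removed. Tools such as CloudCompare offer their own, much faster filters of this kind.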

All of the above steps can be carried out in the software that accompanies the LiDAR system. Once they are complete, we need to import the model into Maya or Blender to set up the track animation. The tentative approach is to use a first-person camera as the subject. In Maya, the first step is to create the path curve along which the object will move; this can be drawn freely using the “EP Curve Tool” in the “Create” menu. Next, set up the path animation: select the camera and choose “Animate” -> “Motion Paths” -> “Attach to Motion Path” in the menu bar. In the options window that pops up, select the path curve and adjust settings such as Offset, Start Frame and End Frame. Finally, adjust the animation parameters and add any additional animation effects; these will be refined further once we get down to the details.
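To make the idea of the motion path concrete, here is a minimal sketch of what attaching a camera to a path amounts to: for each frame between the start and end frame, the camera is placed at a proportional distance along the curve. This is plain Python rather than Maya or Blender scripting, the curve is simplified to straight segments between points, and the name `point_on_path` is made up for this example.

```python
import math

def point_on_path(path, frame, start_frame, end_frame):
    """Return the (x, y, z) position on a polyline path for a given frame,
    moving at constant speed from the first vertex to the last."""
    # Fraction of the animation completed, clamped to the range [0, 1].
    t = (frame - start_frame) / (end_frame - start_frame)
    t = min(max(t, 0.0), 1.0)

    segments = list(zip(path, path[1:]))
    lengths = [math.dist(a, b) for a, b in segments]
    target = t * sum(lengths)  # distance to travel along the path

    for (a, b), length in zip(segments, lengths):
        if length > 0 and target <= length:
            u = target / length  # position within this segment
            return tuple(pa + u * (pb - pa) for pa, pb in zip(a, b))
        target -= length
    return tuple(float(c) for c in path[-1])
```

With a path of `[(0, 0, 0), (10, 0, 0), (10, 5, 0)]` animated over frames 1 to 31, the camera starts at the first point, is three quarters of the way along the first segment at frame 16, and reaches the last point at frame 31.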

The combination of visuals and sound in films brings us an immersive experience, where sound plays a crucial role in shaping immersive spaces. Michel Chion categorizes film sound into two parts in “Audio-Vision: Sound on Screen”: Diegetic, which is sound within the narrative space, and Non-Diegetic, which is sound outside the narrative space. The interplay and transformation between these two types of sound create a sensory immersion for the audience. Meanwhile, Ben Winters mentions in “The Non-diegetic Fallacy: Film, Music, and Narrative Space” that Non-Diegetic sound is partly a sign of the fictional state of the world created on screen. Can we therefore apply the theories of Michel Chion and Ben Winters to art installations, making sound an indispensable part of sensory immersion and allowing sound to work with visuals to create a field within the “Place” of this installation?

Sound is divided into two parts: Diegetic and Non-Diegetic. Diegetic refers to Place Sonification, while Non-Diegetic refers to LiDAR Sonification. In Diegetic sound, we use sounds that are closer to the real world, which can give the audience a sense of familiar unfamiliarity. This type of sound can shorten the distance between the audience and the space of the installation. In Non-Diegetic sound, we primarily use sounds from synthesizers, which are very distinctive and can quickly capture the audience’s attention. Through the combination of Diegetic and Non-Diegetic sounds, the entire installation’s field is placed at the intersection of reality and virtuality, making it both real and beyond reality.

For our simulated Edinburgh immersive experience, it’s essential to employ strategic spatial audio techniques. To achieve this, our recording plan incorporates the Ambisonic format for mixing, with the aim of utilizing a 5.1 (or 5.0) surround sound playback format to immerse the audience.
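As a simplified illustration of how an Ambisonic mix can end up on a 5.0 speaker layout, the sketch below decodes the horizontal part of a first-order signal by pointing a virtual cardioid microphone at each loudspeaker. It assumes AmbiX-style (SN3D) channels in which a source at full level gives W = 1, it ignores the height channel Z and the LFE channel, and the speaker angles are nominal values chosen for this example. A real decoder plugin would be more refined, so this is only a sketch of the principle.

```python
import math

# Nominal 5.0 loudspeaker azimuths in degrees, counter-clockwise positive,
# so the left-hand speakers have positive angles (assumed layout).
SPEAKERS_5_0 = {"L": 30.0, "R": -30.0, "C": 0.0, "Ls": 110.0, "Rs": -110.0}

def encode_source(sample, az_deg):
    """Encode a mono sample arriving from az_deg into horizontal W, X, Y."""
    az = math.radians(az_deg)
    return sample, sample * math.cos(az), sample * math.sin(az)

def decode_first_order(w, x, y, speakers=SPEAKERS_5_0):
    """Decode one W, X, Y sample to speaker feeds using a virtual
    cardioid microphone aimed at each speaker position."""
    feeds = {}
    for name, az_deg in speakers.items():
        az = math.radians(az_deg)
        feeds[name] = 0.5 * (w + x * math.cos(az) + y * math.sin(az))
    return feeds
```

Encoding a sound at 30 degrees to the left with `encode_source(1.0, 30.0)` and decoding it gives the largest feed on the left front speaker, with progressively less on the centre, right and surround speakers.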

1. Microphone

Sennheiser AMBEO VR mic

https://bookit.eca.ed.ac.uk/av/wizard/resourcedetail.aspx?id=4149

Schoeps – MK4 + CMC1L * 2

2. Portable Field Recorder

Sound Devices – MixPre-6 II

https://bookit.eca.ed.ac.uk/av/wizard/resourcedetail.aspx?id=9483

Zoom – F8 (backup)

https://bookit.eca.ed.ac.uk/av/wizard/resourcedetail.aspx?id=4175

3. Accessories

Toca – Slapstick

https://bookit.eca.ed.ac.uk/av/wizard/resourcedetail.aspx?id=7135

Rycote – Cyclone – Fits Schoeps MK4 Pair

https://bookit.eca.ed.ac.uk/av/wizard/resourcedetail.aspx?id=10052

Rycote – Cyclone – Fits Sennheiser AMBEO

https://bookit.eca.ed.ac.uk/av/wizard/resourcedetail.aspx?id=10049

K&M Mic Stand * 2

https://bookit.eca.ed.ac.uk/av/wizard/resourcedetail.aspx?id=8261

Sound Devices – Battery

https://bookit.eca.ed.ac.uk/av/wizard/resourcedetail.aspx?id=1509

AA Battery Charger

https://bookit.eca.ed.ac.uk/av/wizard/resourcedetail.aspx?id=10083

AA Rechargeable Batteries x 4 *2

https://bookit.eca.ed.ac.uk/av/wizard/resourcedetail.aspx?id=6024

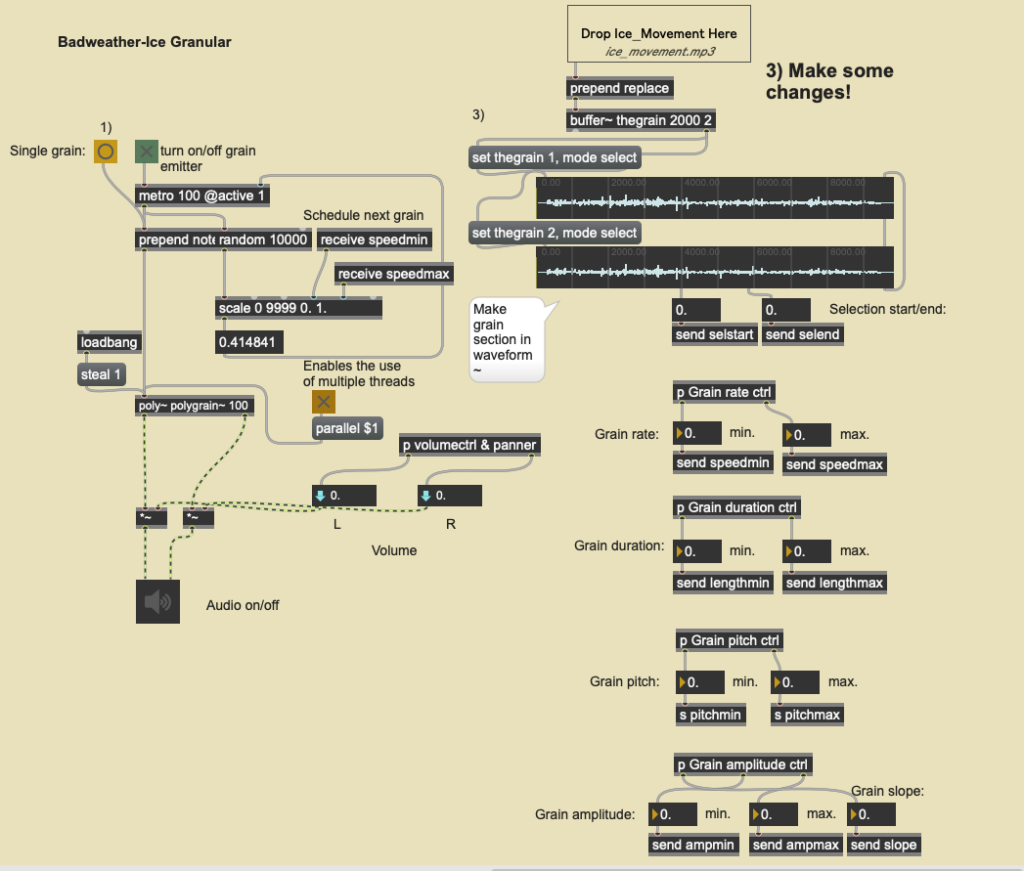

LiDAR technology, which captures environments to generate point cloud data, provides a distinctive avenue for uncovering the hidden characteristics of a location through sound. By selecting data points that hold particular relevance for auditory interpretation, these elements can be converted into sounds that are perceptible to an audience. The point cloud data obtained from LiDAR scans can be converted into a CSV format readable by Max/MSP using CloudCompare, facilitating the manipulation of audio based on data.

In this project, the intention is to use granular synthesis to represent the granularity of point cloud data. By controlling arguments such as grain rate, duration, pitch, and amplitude in real-time based on the data from the place, the variation within the point cloud data can be audibly demonstrated. Moreover, Max/MSP allows for further sonification through data input, such as using the data to control the parameters of processors and synthesizers, or triggering specific samples with extreme values. This approach enables real-time sound matching based on visual effects, bringing the scanned environment to life in a unique and engaging way.
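The mapping from point cloud data to grain parameters could look something like the sketch below, which reads CSV rows and scales two columns into pitch, amplitude and grain duration. The column names (`x`, `y`, `z`, `intensity`), the output ranges, and the choice of which column drives which parameter are all assumptions for illustration; in the installation this mapping would be built in Max/MSP, and the actual columns depend on how the file is exported from CloudCompare.

```python
import csv
import io

def scale(value, lo, hi, out_lo, out_hi):
    """Linearly map value from [lo, hi] to [out_lo, out_hi], clamped."""
    if hi == lo:
        return out_lo
    t = min(max((value - lo) / (hi - lo), 0.0), 1.0)
    return out_lo + t * (out_hi - out_lo)

def grains_from_csv(text):
    """Turn point cloud rows into one set of grain parameters per point."""
    rows = [{key: float(val) for key, val in row.items()}
            for row in csv.DictReader(io.StringIO(text))]
    z_lo, z_hi = min(r["z"] for r in rows), max(r["z"] for r in rows)
    i_lo = min(r["intensity"] for r in rows)
    i_hi = max(r["intensity"] for r in rows)

    grains = []
    for r in rows:
        grains.append({
            # Higher points produce higher-pitched grains (hypothetical choice).
            "pitch_hz": scale(r["z"], z_lo, z_hi, 110.0, 880.0),
            # Stronger laser returns produce louder, shorter grains.
            "amplitude": scale(r["intensity"], i_lo, i_hi, 0.1, 1.0),
            "duration_ms": scale(r["intensity"], i_lo, i_hi, 80.0, 20.0),
        })
    return grains
```

Each resulting dictionary could then be sent to the granular synthesizer as one grain, so that dense or bright regions of the scan become audibly different from sparse or dim ones.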

Audio 1: A granular synthesis for rocks (using weather data in Iceland).

LiDAR, as a contemporary high-tech innovation, has inspired audiovisual installations that often employ nearly sci-fi sonic textures to reflect their technological essence. These installations predominantly use synthesizers and processors to create sounds, aiming for an auditory experience that matches the visual in its capacity to transport audiences beyond the familiar. The sound design in these setups focuses on dynamic and timbral shifts that keep pace with visual transformations, emphasizing rhythm and atmosphere rather than conventional melody.

A notable example in this domain is Ryoichi Kurokawa’s “s.asmbli [ wall ],” where sound plays a pivotal role in creating the atmosphere and making the LiDAR scanning process audible. The installation capitalizes on the rhythmic aspects of the scanning to set its tempo, relying mainly on synthesized sounds without clear melodic content. It employs reverberation automation to reflect changes and progressions within the scene, enabling the audience to intuitively understand the creation and evolution of data. This immersive experience guides viewers through the construction, transformation, and dismantling of various places, driven by the combined narrative of sound and visuals.

The adoption of technologically derived timbres is intended to signal the processes underlying the visual display, employing nondiegetic sounds to hint at the artificial crafting of these places. This strategy not only deepens the immersive effect by linking technology with creativity but also prompts the audience to consider the interplay of human and technological efforts in depicting and altering environments. Installations like Kurokawa’s encourage exploration of the relationship between the organic and the technological, showcasing the artistic possibilities offered by LiDAR.

This section serves as a brief summary of several meetings held up to the time of Submission 1, and is used as an outline; details of each meeting can be found in other sections of the Blog.

January 25th, 2024

Theme of the Conference: Group members met after class to learn about each other’s professional backgrounds and the software we specialise in, and to set up a Miro board and a WhatsApp group for future communication. Additionally, we confirmed with Asad by email that meetings will take place every Friday.

January 26th, 2024

Theme of the Conference: The first formal meeting, in which we learnt from Asad about the relationship between data and Place and how we should go about applying that data. We also watched some videos made from data obtained from LiDAR scans, which helped us better understand the topic of the class. After the class, we each came up with our own ideas for the project we were going to work on.

February 1st, 2024

Theme of the Conference: We weren’t able to borrow LiDAR equipment, as our school couldn’t train us this week, but we still did some scanning with mobile phone apps.

In addition, we came up with some ideas for this assignment; the exact details can be found in the Blog.

February 2nd, 2024

Theme of the Conference: In this meeting we consulted Asad, then planned and summarised our previous ideas in detail and considered the feasibility of the individual plans.

As none of us is familiar with the results of LiDAR scanning, at Asad’s suggestion we will start by picking a few locations around the school to scan and see how it works. Once we have a detailed understanding of the LiDAR scanner, we will proceed to the next step.

February 5th, 2024

Theme of the Conference: We spent the day training on the LiDAR scanner, learning its basic operating principles and use, and were given a scanner to use for a week.

In addition, once the training was completed, we chose a suitable location on campus to conduct a field survey.

February 8th, 2024

Theme of the Conference: In this meeting, we consolidated the results of the previous meetings into a general draft of the programme, and decided to bring different experiences to the audience by projecting the data from the LiDAR scans onto different materials. In addition, we selected a video for reference.

February 9th, 2024

Theme of the Conference:

Following yesterday’s meeting, we held this online meeting mainly to assign everyone’s responsibilities in the project: Akshara is in charge of data collection, statistics and model presentation; YiFei is in charge of visual effects and project documentation; and MingDu and QingLin manage the sound design and the conversion between the data and the sound effects.

February 12th, 2024

Theme of the Conference: We asked Asad for advice on all the tasks we have completed at this stage, and raised some of the problems we had in completing Submission 1. We also learnt how to import the data we collected with the LiDAR scanner into the software to turn it into a model, apply some simple texturing and animation, and export the model to other formats.

The perception of the word “place” may vary from person to person. To me, a place is always connected to the emotions I experienced there, which is what makes it memorable.

During our last meeting with Asad Khan, we discussed various ideas for making an interesting immersive installation with the help of a LiDAR scanner.

As we are a group with different cultural backgrounds, we wanted to take advantage of that. As we talked, a thought I had had a few days earlier came back to me. A place can be used differently in different cultures, and the people, culture and emotions give the place a whole different meaning. For example, while I was walking through the Meadows, I imagined how the whole place would change if it were set in my home town in India. It would have a very different use and feel from what it has here. So the various cultural uses of places could be captured to show how the same place can evoke different feelings and emotions. As Ming Du suggested, adding user interaction could keep the audience engaged and interested in testing out different combinations, each of which would provide a different feel.

Another thought that occurred to me was whether LiDAR-scanned images could be perceived in three dimensions by stacking up various 2D images in the room, making us feel like we are walking into the actual place. The manipulation of shadows also caught my attention once three-dimensionality comes into play. Shadows give depth to what we perceive. But can they be manipulated to look different, or to be seen differently by different people? Capturing actual images and then mixing them with separately captured shadows could give a totally different feel. The combined view of an object and a manipulated shadow could cloud the image of the actual object in our mind. Could this be something we can play with and manipulate? Also, as QingLin suggested, we could not only use the scanned images but also mix in real-time objects, which would give a different feel to the whole experience.

Combining these aspects with various sound interventions could alter our perception of an object, which could be interesting as it is not something that can be captured by the naked eye. Image manipulation could be perceived in different ways by different people.