Time for a more studious post now:

After attending a lecture about Virtual Reality, where the concepts of immersion and presence were discussed, I started thinking. Is what we are achieving in this project immersive? How are we able to convey a sense of presence for the audience?

Let’s look at the definitions of both immersion and presence, and at how each is quantified and applied in this situation. The research that follows will focus on the terms in relation to technology and art exhibitions.

Immersion

Slater and Wilbur (1997) define immersion as a description of a technology: the extent to which computer displays are capable of delivering an inclusive, extensive, surrounding and vivid illusion of reality to the senses of a human participant. The immersive fallacy is the idea that the pleasure of a media experience lies in its ability to sensually transport the participant into an illusory, simulated reality (Salen and Zimmerman, 2003). VR is usually viewed as the most immersive form of technology. So how can an exhibition be immersive without it? And how would you quantify how immersive an experience is?

Given these definitions, immersion is better understood as a broad scale than as a yes-or-no property, so you cannot simply say whether an experience is immersive or not. However, it is possible to look at subcategories of immersion in the context of an experience. Similar to the idea of the immersive fallacy, an immersive escapism experience is one where a participant’s emotional state makes them feel as if their body has separated from themselves and no longer exists. This is often the main motivation for attending festivals and exhibitions (Manthiou et al., 2014; Sobitan and Vlachos, 2020; Guo et al., 2021). Another subcategory is an immersive educational experience, in which an engaging environment is presented that is highly interactive at both virtual and physical levels (Dennison and Oliver, 2013).

Presence

The sense of presence, as defined by Gruter and Myrach (2012), describes “the subjective experience of feeling present in a non-physical space”. This is an overarching definition of the term. When technology is added into the equation, the term becomes “telepresence”, which is usually shortened back to “presence”. The International Society for Presence Research defines the concept of presence as a psychological state in which a person fails to fully acknowledge the role of technology in their experience, even though they are aware that they are using it. Their perception of objects, events, entities, and environments is not fully influenced by the technology involved (Ispr.info, 2000).

Presence occurs during an encounter with technology and is a multidimensional concept. The Society adds that presence is greater when a technology user’s perceptions only partially acknowledge the actual role of the technology in the experience. Presence is a property of the individual and varies across people and time.

The concept of presence is needed for the connection between people and their virtual surroundings (Grassi, Giaggioli and Riva, 2008). According to Sastry and Boyd (1998), the sense of presence arises from the interaction of the user with the environment. For people to feel present, they need the chance to make choices and to move objects within the environment. It’s important for the technology to be invisible to the user, so that they can focus on the task instead of the medium: the better a medium supports the user’s actions, the greater the sense of presence.

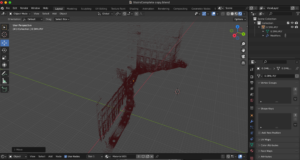

I believe that aspects of both the main definition of immersion and the two subcategories relate to how we want this exhibition to be an immersive experience. We are aiming to create a surrounding and vivid illusion of reality that, combined with audio sensory input and interaction through physical movement, will allow users to be immersed in the exhibition.

As for the sense of presence in this project, we are giving the user the option to control their experience through physical interaction. The technology of the Arduino sensors is mostly invisible to the user, as it is small and hidden in the shadows. The user’s attention is captured by the visual and sound response to their movements, so they focus more on the task and are more present during the experience.

To conclude, this project is both immersive and conveys a sense of presence for the user. Whilst perhaps not “fully immersive” in the sense that VR would be, this experience uses interactive, non-invasive technology in a space constructed to surround the user, creating a multidimensional and multi-sensory exhibition.

If you’re reading this in order, please proceed to the next post: ‘Place and Non-Place’.

Molly Munro

References

Carù, A., Carbonare, P., Ostillio, M. and Piancatelli, C. (2020) ‘The impact of technology on visitor immersion in art exhibitions’, in Massi, M., Vecco, M. and Lin, Y. (eds) Digital Transformation in the Cultural and Creative Industries: Production, Cons. London: Routledge, pp. 13–31.

Dennison, W. and Oliver, P. (2013) ‘Studying Nature In Situ: Immersive Education for Better Integrated Water Management’, Journal of Contemporary Water Research & Education, 150(1), pp. 26–33. Available at: https://doi.org/10.1111/j.1936-704X.2013.03139.x.

Grassi, A., Giaggioli, A. and Riva, G. (2008) ‘The influence of media content and media form in sense of presence: a preliminary study’, in Presence 2008: Proceedings of the 11th Annual International Workshop on Presence, pp. 258–259.

Guo, K. et al. (2021) ‘Immersive Digital Tourism: The Role of Multisensory Cues in Digital Museum Experiences’, Journal of Hospitality & Tourism Research, p. 109634802110303. Available at: https://doi.org/10.1177/10963480211030319.

Ispr.info (2000) Presence defined. Available at: https://ispr.info/about-presence-2/about-presence/.

Manthiou, A. et al. (2014) ‘The experience economy approach to festival marketing: vivid memory and attendee loyalty’, Journal of Services Marketing, 28(1), pp. 22–35. Available at: https://doi.org/10.1108/JSM-06-2012-0105.

Salen, K. and Zimmerman, E. (2003) Rules of play: game design fundamentals. Cambridge, Mass: MIT Press.

Sastry, L. and Boyd, D.R.S. (1998) ‘Virtual environments for engineering applications’, Virtual Reality, 3(4), pp. 235–244. Available at: https://doi.org/10.1007/BF01408704.

Slater, M. and Wilbur, S. (1997) ‘A Framework for Immersive Virtual Environments (FIVE): Speculations on the Role of Presence in Virtual Environments’, Presence: Teleoperators and Virtual Environments, 6(6), pp. 603–616. Available at: https://doi.org/10.1162/pres.1997.6.6.603.

Sobitan, A. and Vlachos, P. (2020) ‘Immersive event experience and attendee motivation: a quantitative analysis using sensory, localisation, and participatory factors’, Journal of Policy Research in Tourism, Leisure and Events, 12(3), pp. 437–456. Available at: https://doi.org/10.1080/19407963.2020.1721638.