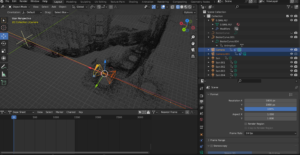

Working in the “Place” group has been a remarkable learning experience, marked by effective collaboration and communication among team members throughout the project’s development. While the exhibition received positive feedback, from a design team perspective there are aspects that could be improved in future iterations.

One challenge we faced was the accuracy of the ultrasonic sensor, which was affected by the material of visitors’ clothing. Soft fabrics such as cotton tend to absorb ultrasonic pulses rather than reflect them, so audience members wearing cotton often could not trigger the sensor reliably. To work around this during the exhibition, we provided an iron board as a prop for visitors to hold in front of their chests, which let them trigger the sensor. With more time and resources, an alternative could be laser-cut, wheel-shaped props that play on the concept of “driving through time and space.” Adding a scenario or narrative to the project would help justify the use of such props.

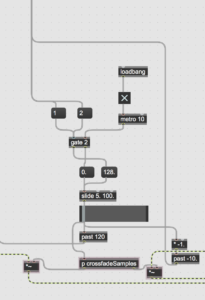

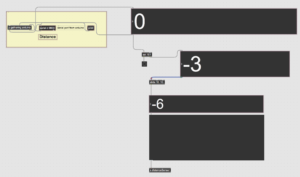

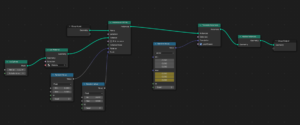

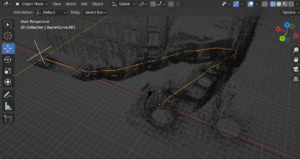

Another area of concern was the Kinect. As mentioned in a previous blog post, we disabled the Kinect function during the exhibition because it interfered with the ultrasonic sensor, our primary interaction method. It would be worth reintegrating the Kinect, given the time and effort spent developing its interaction features. One potential solution would be to change which Kinect data drives the visuals: for example, using y-axis (vertical) data from motion capture instead of the distance between the user’s hands (x-axis data). This approach could produce similar visual effects without disturbing the ultrasonic sensor’s data collection, while also opening up more possibilities for visual elements and giving the audience more freedom to interact.
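To make the proposed change concrete, here is a minimal Python sketch contrasting the two control signals. It is not our actual TouchDesigner network: the joint format (x, y, z tuples in metres), the coordinate ranges, and the function names `hand_distance_x` and `hand_height_y` are all hypothetical assumptions chosen for illustration. Each function turns raw joint positions into a 0 to 1 value that could drive a visual parameter.

```python
from typing import Tuple

# Hypothetical joint format: (x, y, z) position in metres from a skeleton tracker.
Joint = Tuple[float, float, float]


def clamp01(value: float) -> float:
    """Clamp a value into the 0..1 range used to drive visual parameters."""
    return max(0.0, min(1.0, value))


def hand_distance_x(left: Joint, right: Joint, max_span: float = 1.5) -> float:
    """Original-style control: horizontal distance between the hands, normalised.

    max_span is an assumed maximum arm span in metres.
    """
    return clamp01(abs(right[0] - left[0]) / max_span)


def hand_height_y(hand: Joint, low_y: float = -1.0, high_y: float = 1.0) -> float:
    """Proposed control: vertical position of one hand, normalised.

    low_y and high_y are assumed bounds of the tracked vertical range.
    """
    return clamp01((hand[1] - low_y) / (high_y - low_y))


if __name__ == "__main__":
    left_hand = (-0.5, 0.0, 2.0)
    right_hand = (0.25, 0.0, 2.0)
    print("x-axis hand distance:", hand_distance_x(left_hand, right_hand))
    print("y-axis hand height:", hand_height_y(right_hand))
```

Both functions output the same normalised range, so in principle the y-axis signal could be swapped in wherever the hand-distance value was used, keeping the visual mapping unchanged while changing the gesture the audience makes.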

Working within a large group, I learned the importance of time management and task organization, skills that are harder to put into practice than they appear. I am grateful to Molly and Dani, who set up the exhibition, handling the equipment displays, posters, postcards, guidebooks, 3D printing, and slideshows, and gave us a solid foundation for a successful show. David’s excellent leadership helped each team member find their tasks and make decisions, and kept us on the right track. Xiaoqing, Chenyu, and YG were consistently receptive to suggestions and supportive of others. Finally, I appreciate the help of Yijun and Yuxuan, with whom I collaborated on TouchDesigner, and who patiently answered my questions.

Thanks to all my teammates!

Allison Mu