To document the project journey and keep track of team meetings, practical sessions, and meetings with our tutor, Asad Khan, we used Notion: the two team members assigned to meeting notes and documentation (Molly and Daniela) were already familiar with this collaborative note-keeping software.

January 26th, 2023 – First (non-official) Meeting.

This was the first time all group members met one another. During Thursday's DMSP lecture, we shared our names and backgrounds and explained why we chose the topic of Places. After a general group discussion about the topic, we created a Google Form and sent it out to find out when everyone was free during the week, so that we could establish a standing weekly meeting.

January 27th, 2023 – First Contact with Asad.

This was the first meeting of the whole group with Asad. Since he had not been present at the first meeting, we covered introductions, why we chose this topic, what we envision for Places, and how we might portray the theme. We also explored ChatGPT with questions such as 'is a non-place the same as a liminal place?'

We agreed to keep posting in the Teams chat to maintain a constant record of events, ideas, stream-of-consciousness thoughts, etc. The focus at this stage is collecting data from scans.

January 30th, 2023 – Workshop with Asad.

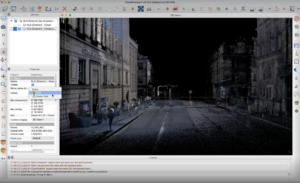

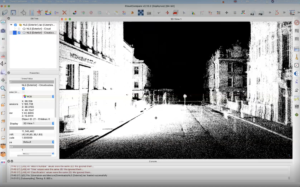

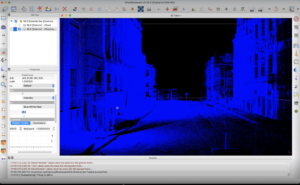

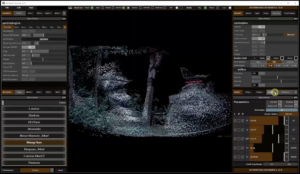

The first workshop was run by Asad. The team explored the data processing software CloudCompare and how it manages point cloud data. Some points learned:

- A merge between two LiDAR scans is possible, by defining the start state of the point cloud and the end state.

- We would need to import the scans into CloudCompare and subsample them before exporting to Unity, to reduce the number of points and avoid crashes.

- You could convert the scans into sound.

- Use a medium setting on the scanner; a scan will take 5 minutes.

- Define the places where you want to scan before you go to the site – scout these places.

- We can make 3D objects and then convert them into point clouds and place them into a scan.

- Microscan something in detail with a handheld scanner and make it into a hologram?
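To illustrate what subsampling does to a point cloud, here is a minimal Python sketch. It is not CloudCompare's own implementation: CloudCompare offers its own subsampling methods (random, spatial, and octree-based), whereas this sketch uses a simple voxel-grid approach that keeps only the first point falling into each cube of side `cell_size`. The point format (plain `(x, y, z)` tuples) and the helper name `grid_subsample` are hypothetical, chosen only for illustration.

```python
import math
from typing import Iterable

Point = tuple[float, float, float]


def grid_subsample(points: Iterable[Point], cell_size: float) -> list[Point]:
    """Hypothetical helper: keep at most one point per cubic cell of side cell_size."""
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    kept: dict[tuple[int, int, int], Point] = {}
    for x, y, z in points:
        # Integer index of the grid cell this point falls into.
        key = (
            math.floor(x / cell_size),
            math.floor(y / cell_size),
            math.floor(z / cell_size),
        )
        # Keep the first point seen in each cell; later points in that cell are dropped.
        kept.setdefault(key, (x, y, z))
    return list(kept.values())


if __name__ == "__main__":
    # Toy cloud (assumed data): three points close together and one far away.
    cloud = [(0.01, 0.02, 0.00), (0.03, 0.01, 0.02), (0.04, 0.04, 0.04), (1.5, 0.2, 0.3)]
    reduced = grid_subsample(cloud, cell_size=0.05)
    print(len(cloud), "->", len(reduced))
```

A larger `cell_size` removes more points; in practice the value would be tuned so the cloud keeps enough detail while staying light enough to handle in Unity.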

January 31st, 2023 – Team meeting.

For this meeting, we met in the common room in Alison House. After our last meeting with Asad, we wanted to discuss where to take the project next.

One thing we all agreed on was to develop the project with a focus on an important place in Edinburgh, so we used Miro to collect different places we could scan for the project. Some of the places that came up were: The Royal Mile, Mary King's Close, Innocent Railway Tunnel, Royal Botanic Garden, Armchair Bookshop, Banshee Labyrinth, and The News Steps.

Another topic that came up was how we could incorporate a physical aspect into the exhibition. We discussed 3D printing scaled-down models of the places we scan, and also creating a hologram effect from micro-LiDAR scanning.

The next meeting will be at ECA, as it will be our first time with the LiDAR scanner; we want to learn how to use it and start scanning our surroundings to explore the technology.

February 2nd, 2023 – First Scans.

In the ECA exhibition hall, the team took their first LiDAR scans. We discovered that scan positions link most accurately when each new scan is taken in line of sight of the previous one. Linking does technically work after moving up a level, but more manual alignment is required.

The day before, David had tested the scanner in his room at home. This is where we discovered the mirror phenomenon: the scanner treats a reflection as if it were a doorway. Read David's exploration blog post.

February 6th, 2023 – LiDAR Training at uCreate.

We had our induction training in the uCreate Studio, where we learned the safety protocols for working in the workshop. We were also introduced to the different machines available to us, such as 3D printers, laser cutters, CNC machines, and thermoforming machines.

Afterwards, we attended the LiDAR workshop, where we were shown the correct way to use the LiDAR scanner, the procedure for transferring data from the iPad to the computer, and the software needed to work with the data.

February 7th, 2023 – Individual project proposals and final decision.

Each member presented their own idea of how they envisioned the project. Since some members could not attend at the same time, those who were free for most of the day met with them first to hear their ideas and then presented them to the rest of the group. We discussed each idea, weighed the pros and cons, and eventually reached a decision we all agreed on. The biggest decision that still needed to be explored before we could be 100% certain was the location: The News Steps.

February 7th, 2023 – Scans of the News Steps.

Daniela, Molly, and David took LiDAR scans of The News Steps. We experimented at night with the scanner's flash enabled, and also tested how the scanner would align two scans taken on different levels of the stairs. The scans came out really well and gave us an idea of how we could keep developing the project. We were able to link both scans, even though they were taken at different heights on the stairs.

February 11th, 2023 – Team meeting with Asad.

This team meeting allowed the group to touch base with Asad prior to the first submission to verify that the project idea is realistic, achievable, and interesting.

February 13th, 2023 – Team meeting for Submission 1.

The team got together to work out the final details of the submission. We had a good record of our overall process but needed to create a clear workflow for our blog. We finished blog posts covering our previous meetings and our research and development, and we assigned each team member's role for the next submission.

February 23rd, 2023 – Sound meeting.

This was the first Sound department meeting, and all members of the sound team attended and took part. The session was structured around each sound task, as described in the latest sound post. Each team member had the opportunity to catch up and show their individual progress on their assigned task, and together we planned the next steps for each job.

The meeting played out in the following order:

- Soundscape Capture with Chenyu Li – Proposal and Planning;

- Place Sonification with David Ivo Galego – Data-Reading method demonstration and future Creative approaches;

- Sound Installation with Yuanguang Zhu – Proposal Review and further planning;

- Interactive sound with Xiaoqing Xu – Resources overview.

March 3rd, 2023 – Team meeting with Asad.

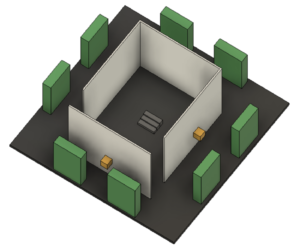

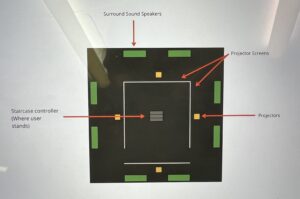

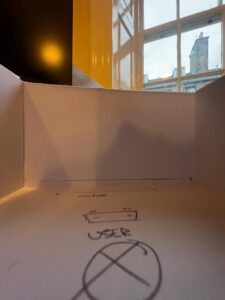

In this meeting we decided to book a short-throw projector to test how the projections would look. We also bought a shower curtain to project onto, but once we tried it, we realized the image was not bright enough. This helped us understand what kind of projectors we would need for the exhibition, and we noted that we needed to find projection screens that fit the space we will be using.

March 9th, 2023 – Team meeting with Jules.

In this meeting, we talked with Jules about our concept, but above all it was a technical discussion about how many projections we plan to use and what kind of sound equipment will be needed. Jules recommended that we have a test day, so we can make sure that what we choose is correct and works properly.

March 10th, 2023 – Team meeting with Asad.

For this meeting, we met online with Asad and had a really interesting discussion about how we can use ChatGPT in our work, as a collaborator and helper in developing our projects.

March 26th, 2023 – Team meeting with Asad.

During this meeting, we met in the Atrium to test the sound and projections in the room where the exhibition was going to take place. One important thing we discovered was that there is a switch in the Atrium that closes the blinds on the ceiling.

April 3rd, 2023 – Midnight Test.

On this day, Molly and I went to the Atrium at night to test how the projections looked with no light outside. We also moved things around and worked with the objects already in the Atrium to create an optimal setup for the exhibition. We made a video explaining where we planned to put the different elements of the exhibition, so we could use it as a guide on setup day. During this visit, we also found several large wooden boxes in a corner of the room, which we decided to use as stands and props in the space.

If you’re reading this in order, please proceed to the next post: ‘Design Methodology’.

Molly and Daniela

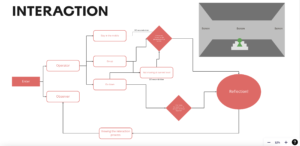

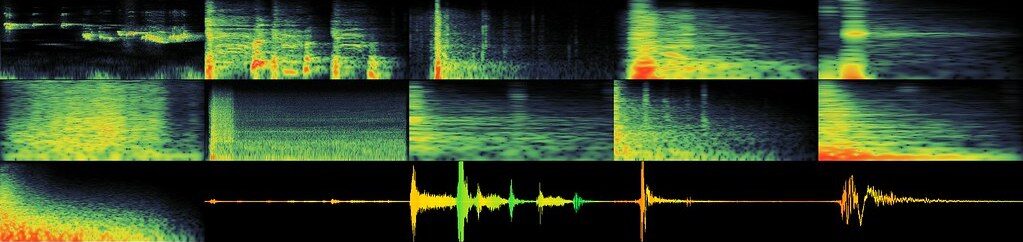

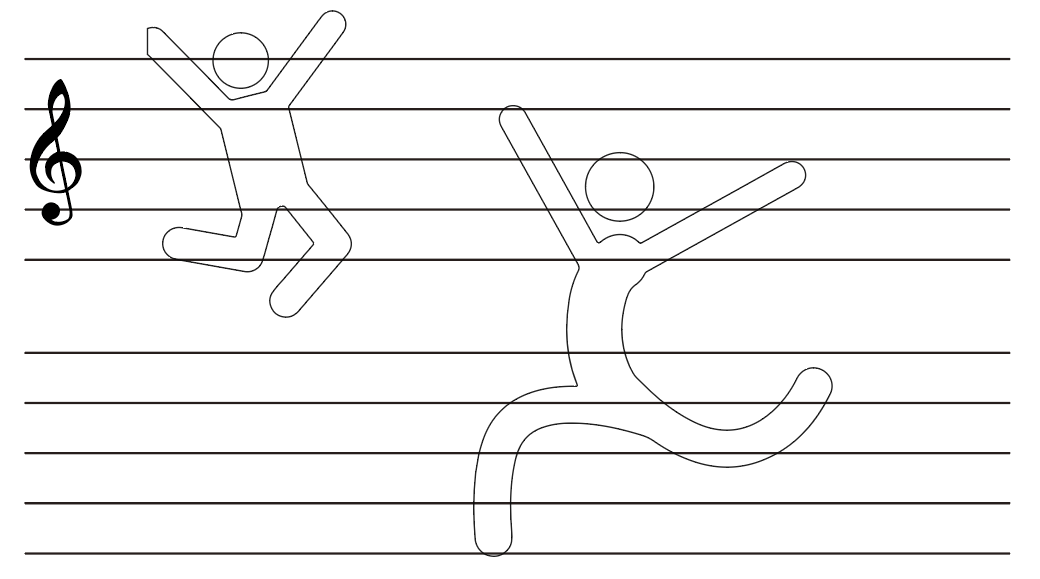

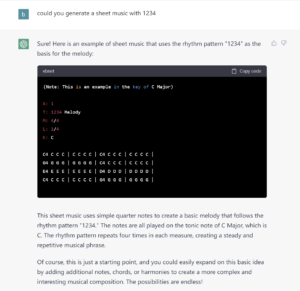

As shown in the diagram, we can map the scanned shape onto the score to get the exact track. I also tried giving ChatGPT a few simple numbers to generate a basic melody, and found that it worked.

I tried providing Chat gpt with a few simple numbers to generate a basic melody, and I found that it worked.
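To illustrate the general idea of turning numbers taken from a scanned shape into a melody, here is a minimal Python sketch. It is not the method used in the diagram or by ChatGPT: it simply assumes a list of values (for example, heights sampled along a scanned profile), rescales them linearly onto the degrees of a C major scale, and returns MIDI note numbers. The function name `values_to_melody`, the choice of scale, the starting note, and the two-octave range are all hypothetical.

```python
# Semitone offsets of the C major scale within one octave (assumed scale choice).
C_MAJOR = [0, 2, 4, 5, 7, 9, 11]


def values_to_melody(values, base_note=60, octaves=2):
    """Hypothetical helper: map numbers linearly onto C major scale degrees.

    Returns MIDI note numbers; base_note=60 is middle C in MIDI.
    """
    if not values:
        return []
    lo, hi = min(values), max(values)
    steps = len(C_MAJOR) * octaves  # number of available scale degrees
    melody = []
    for v in values:
        # Normalise to 0..1; a completely flat profile maps to the lowest note.
        t = 0.0 if hi == lo else (v - lo) / (hi - lo)
        # Clamp so the maximum value lands on the top degree instead of overflowing.
        degree = min(int(t * steps), steps - 1)
        octave, idx = divmod(degree, len(C_MAJOR))
        melody.append(base_note + 12 * octave + C_MAJOR[idx])
    return melody


if __name__ == "__main__":
    # Hypothetical heights (in metres) sampled along a staircase profile.
    heights = [0.0, 0.18, 0.36, 0.54, 0.72]
    print(values_to_melody(heights))
```

Quantizing to a scale rather than using raw pitches keeps the result sounding like a melody; any other scale or range could be swapped in by changing `C_MAJOR`, `base_note`, or `octaves`.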