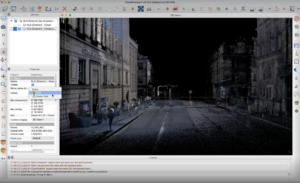

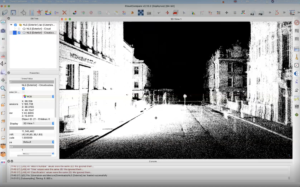

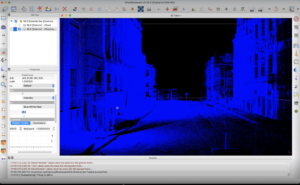

I feel very fortunate to have taken the DMSP course and worked on the Place theme this semester; it has been an unforgettable journey for me. Not only have I learned how to use LiDAR and TouchDesigner, but I have also worked with exceptional teammates to create an impressive project.

Although the final exhibition achieved certain results, there are still many areas that could be improved. First, the venue could be made more immersive, for example with a curved screen that covers not only the space around the audience but also the areas above and below them. Second, edgeless LED screens or other display technologies could enhance the visuals, and a larger sound system could improve the audio experience. Finally, to address the conflict between the ultrasonic sensor and the Kinect during the exhibition, we could consider physical buttons or other devices as an alternative means of interaction.

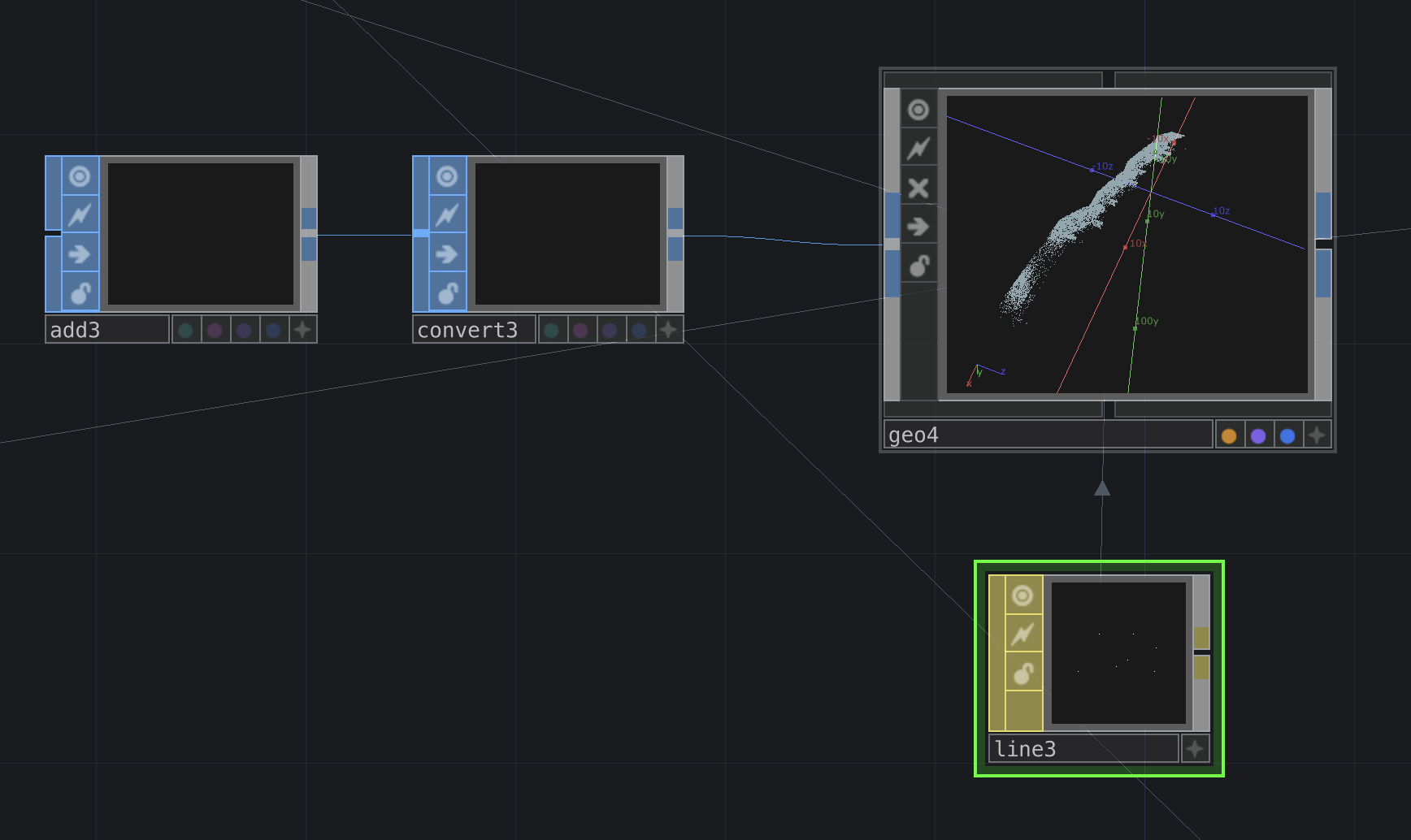

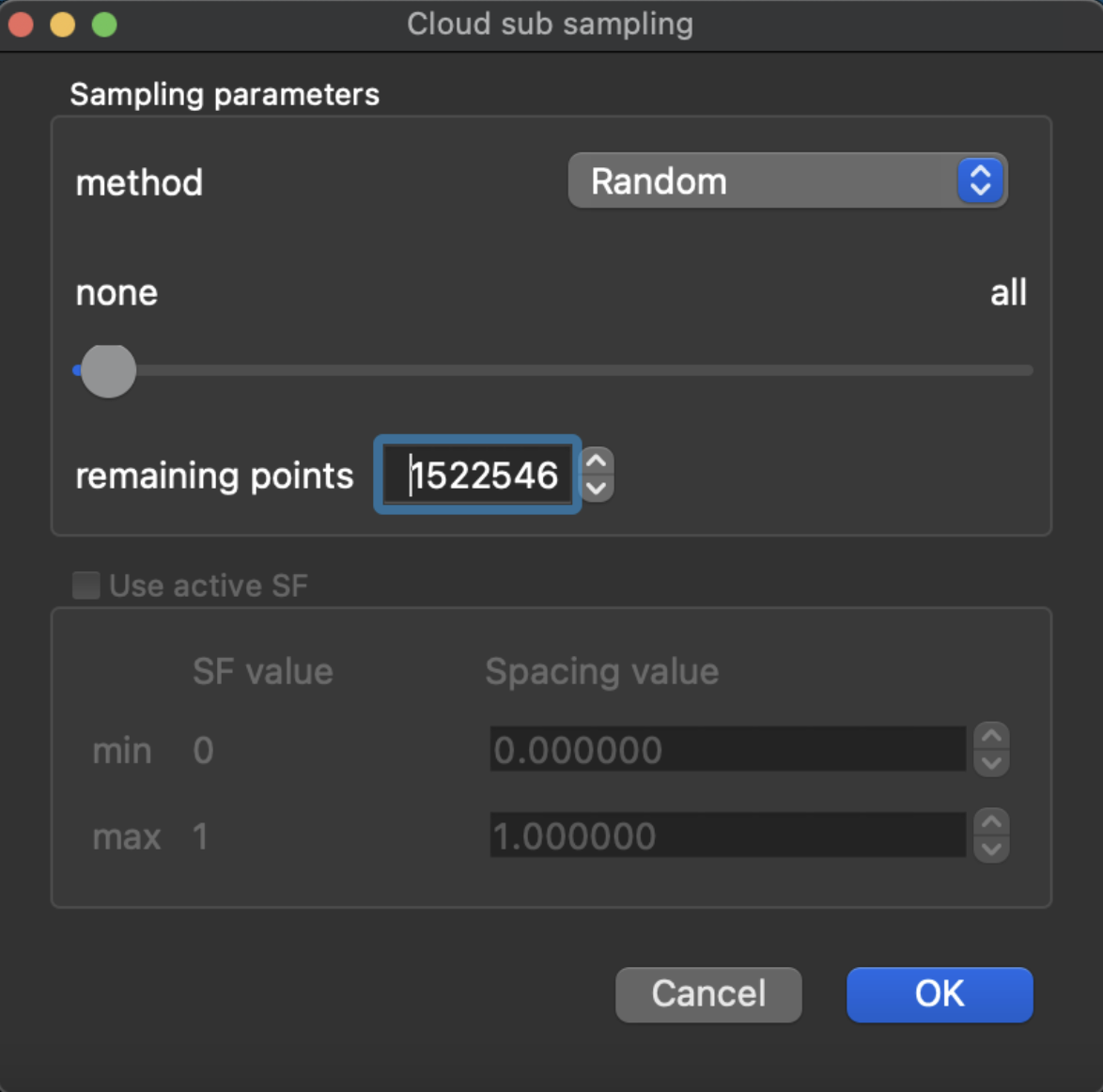

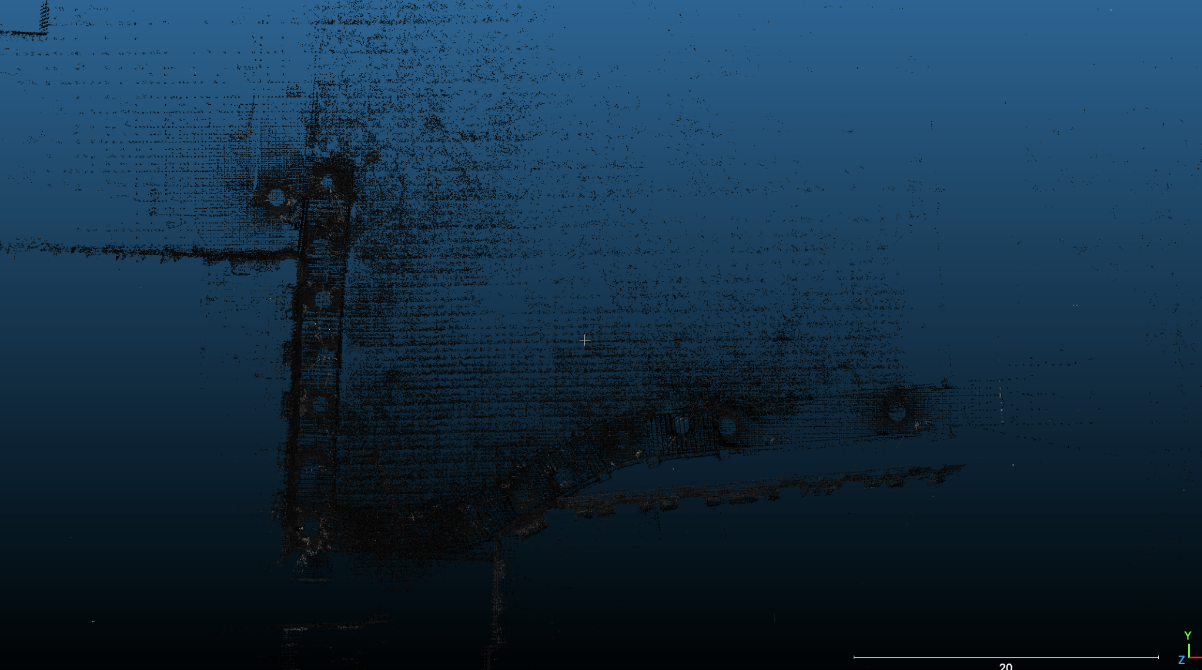

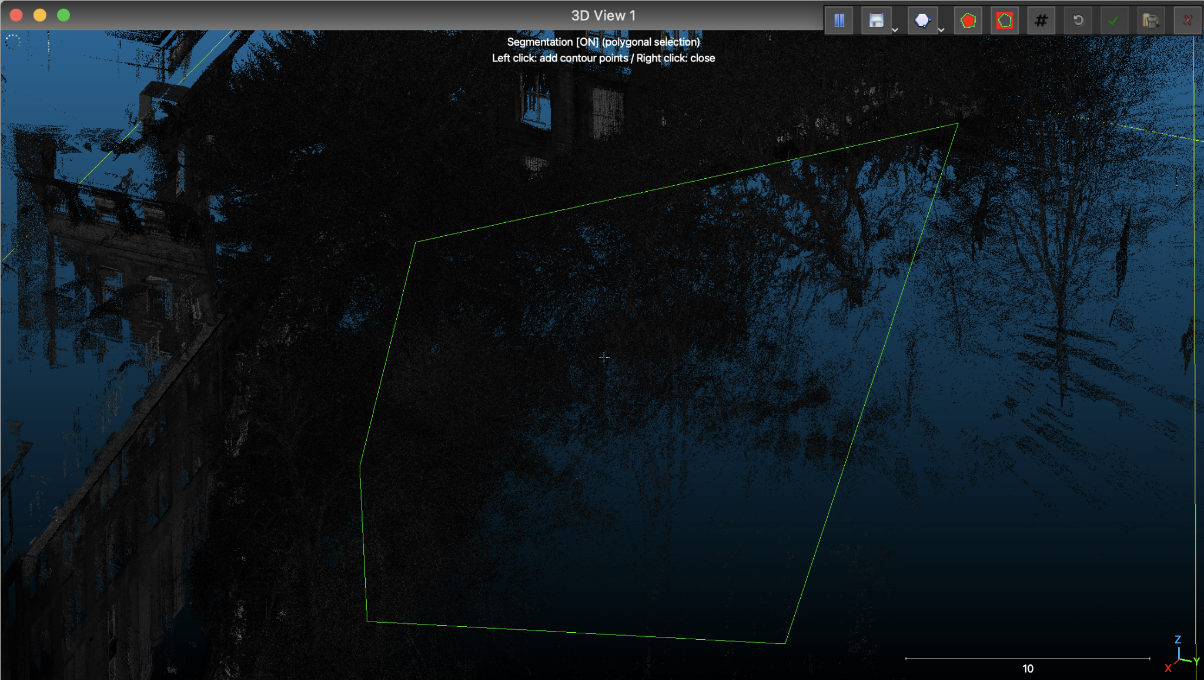

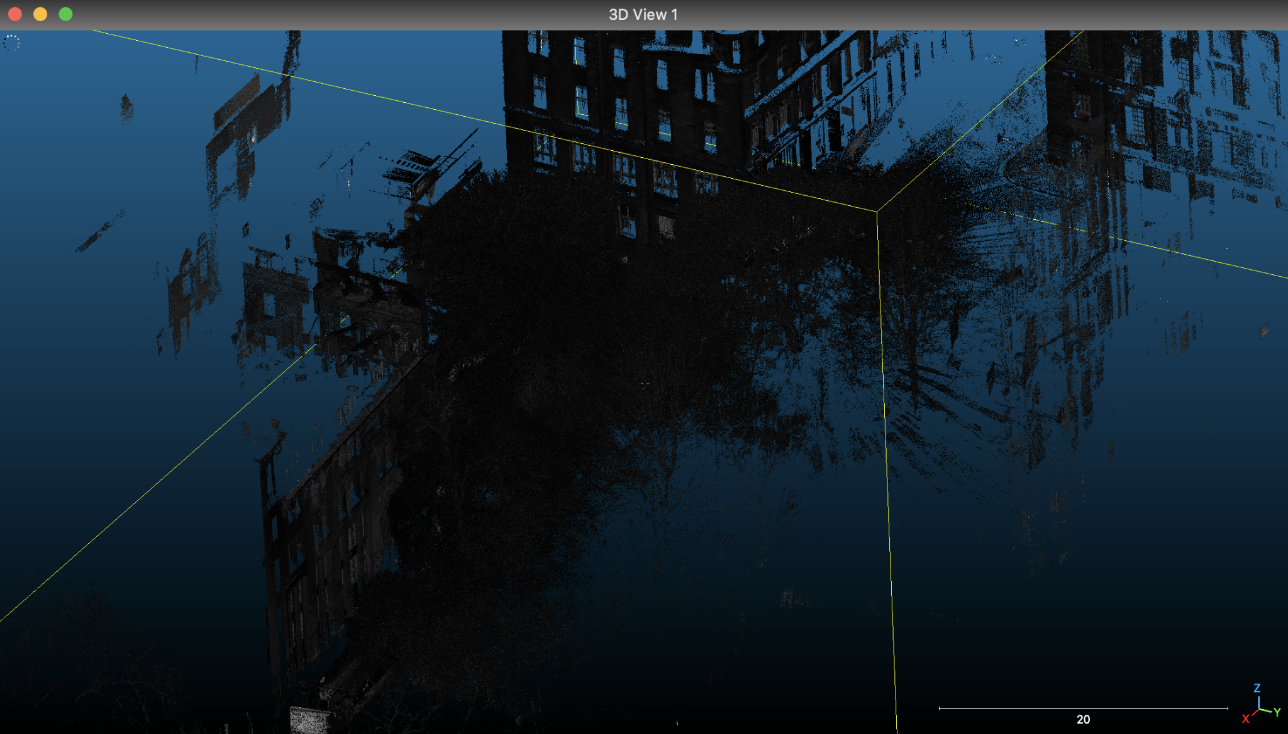

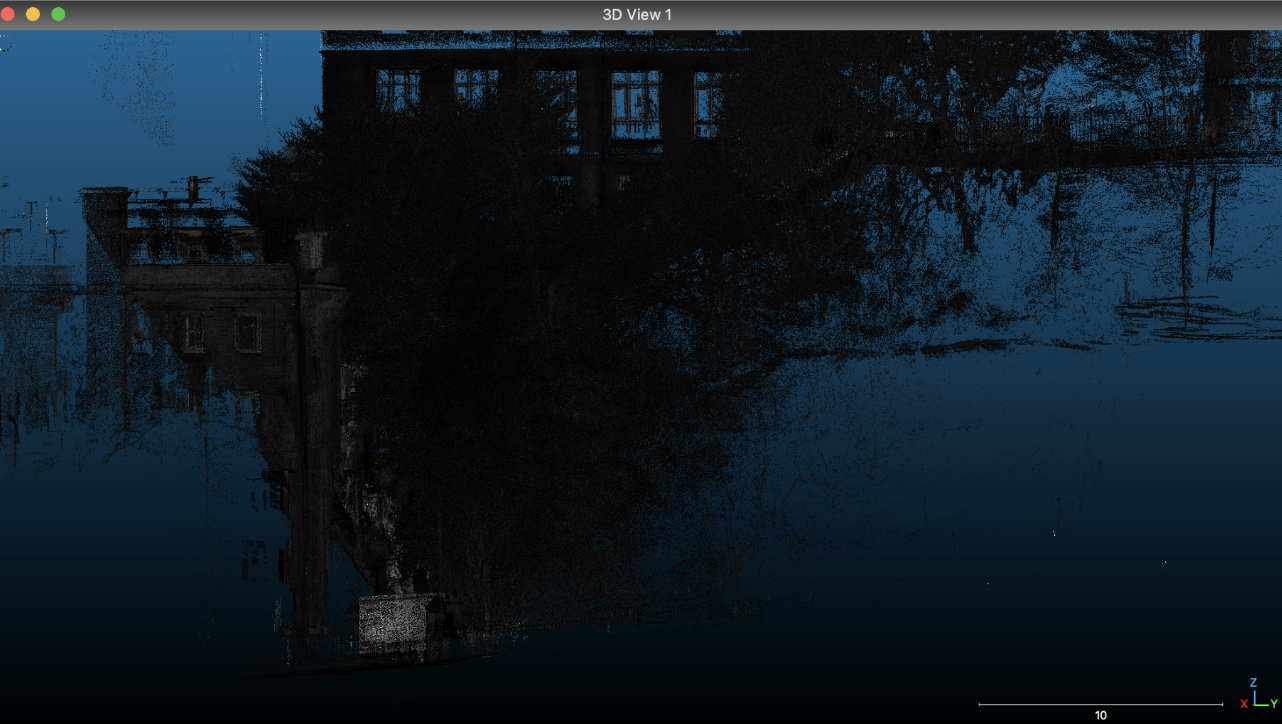

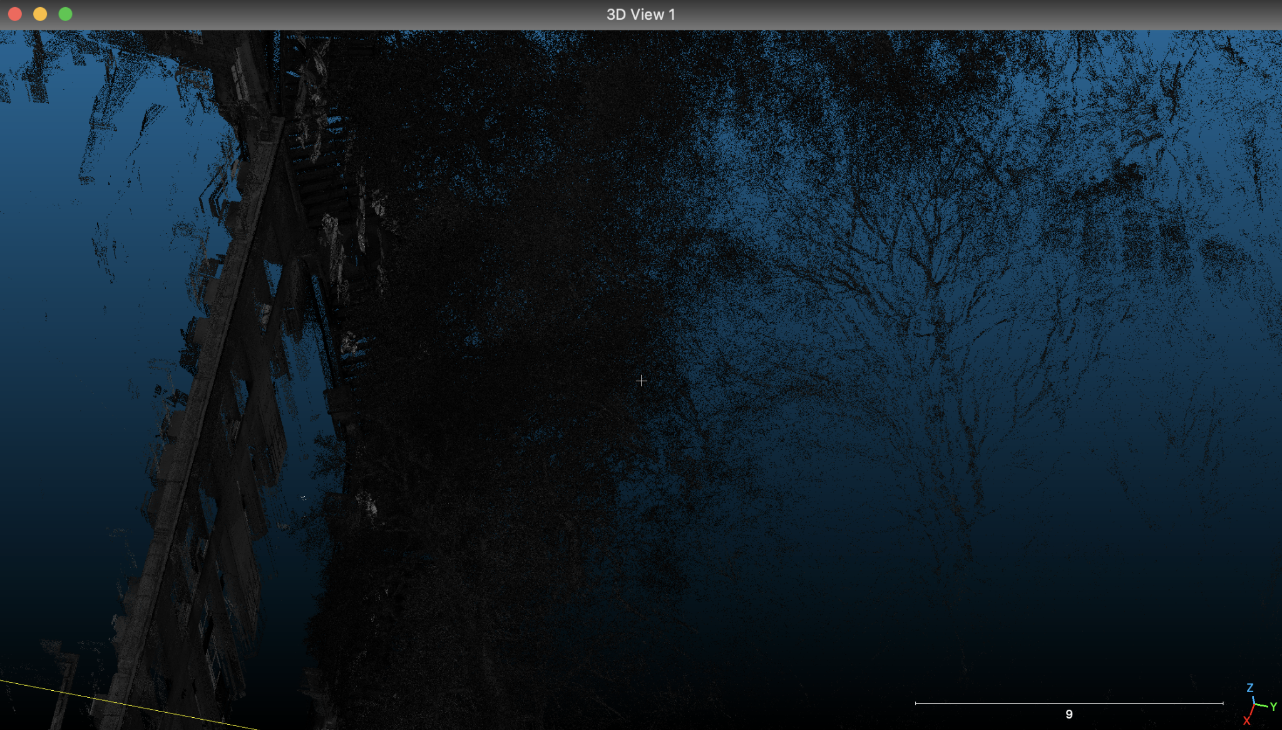

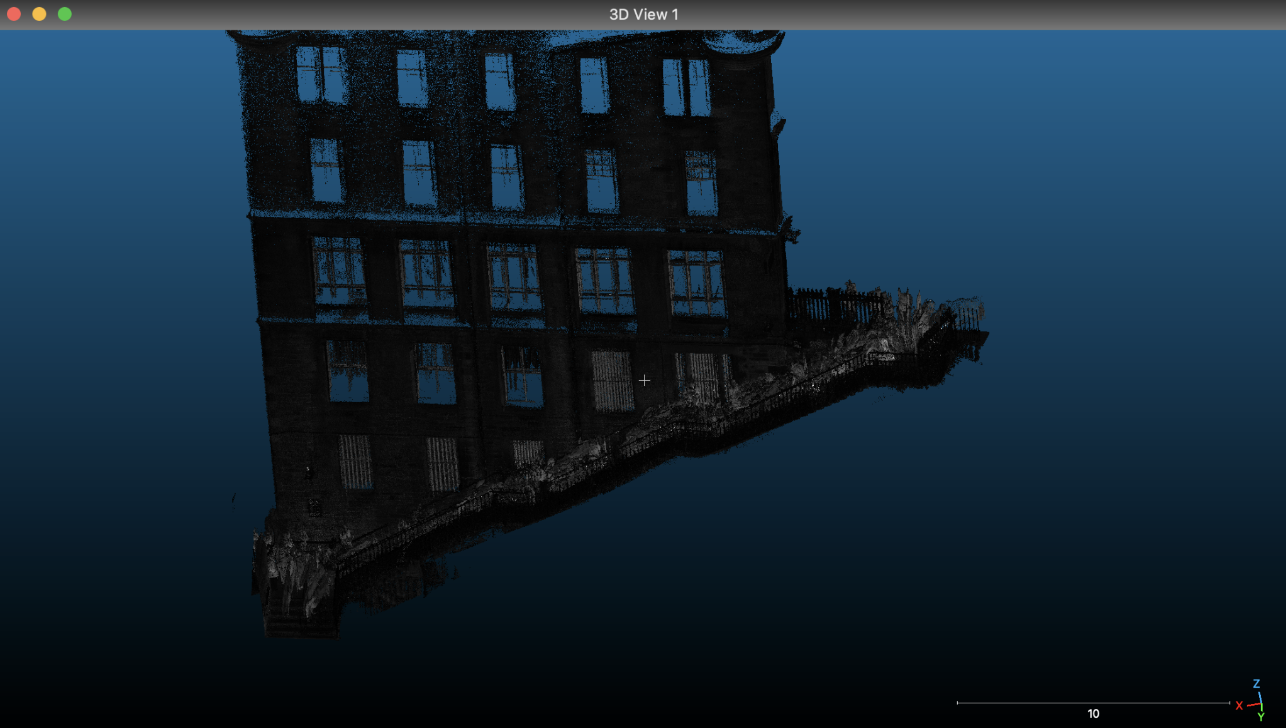

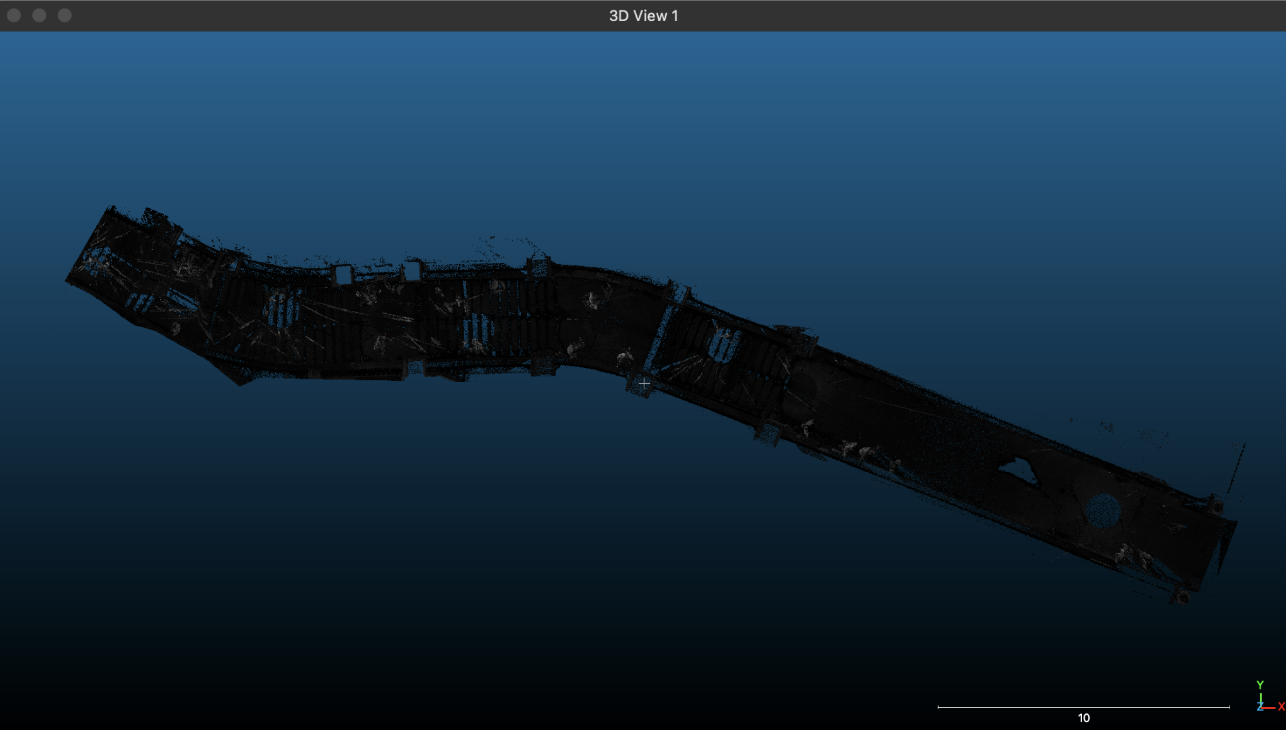

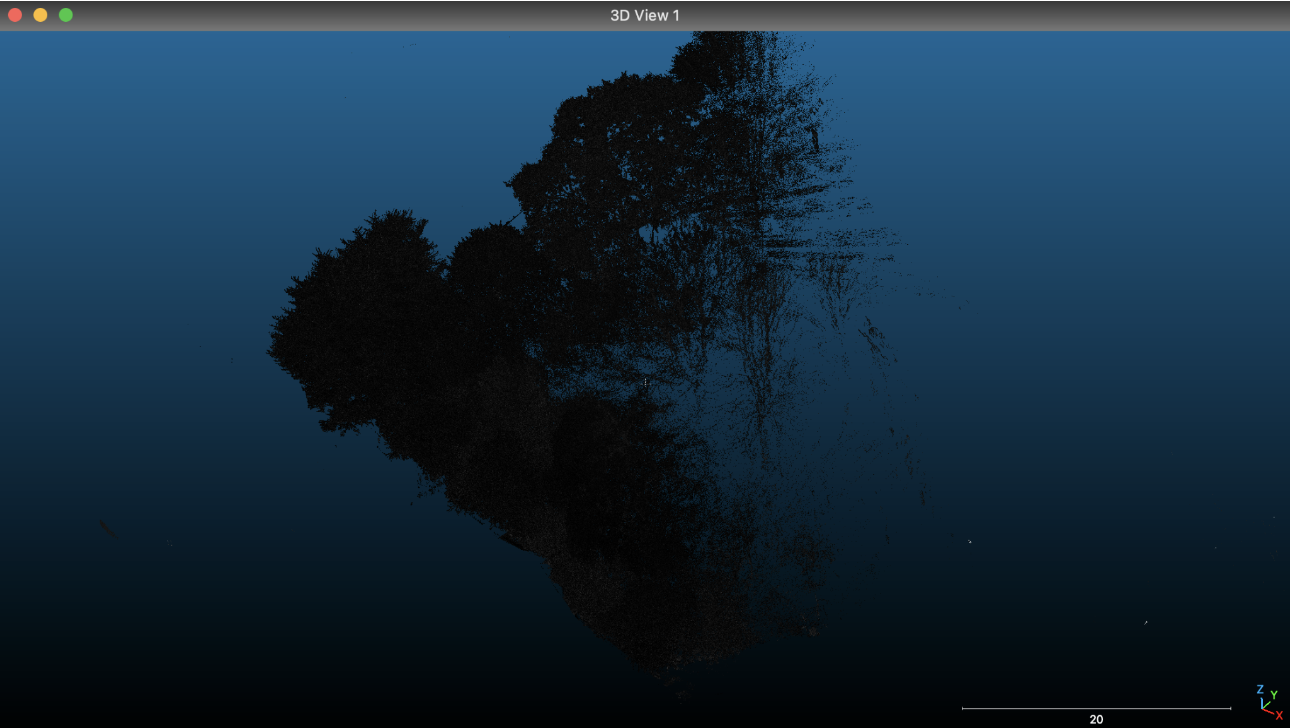

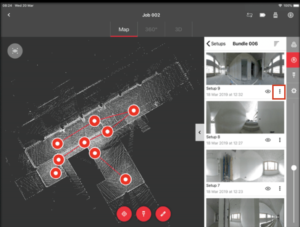

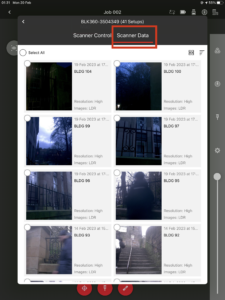

I am very grateful to my excellent team members. First, I want to thank Allison and Yijun for working with me on the TouchDesigner design. We started from scratch and learned and explored together; without them, it would have been difficult for me to learn so much. I also want to thank Molly and Dani for their efforts in setting up the exhibition and rendering the video. They arranged everything perfectly on the day of the exhibition, which gave us enough time to solve the problems we encountered. Thanks also to David, Chenyu, Xiaoqing, and Yuanguang of the sound design team for designing such a perfect immersive sound experience. In addition, I would like to express my gratitude to our mentor Asad, who led us to explore and learn about LiDAR, an interesting tool that I believe I will use again in future projects. Thank you all for giving me an unforgettable team collaboration experience.

Yuxuan Guo