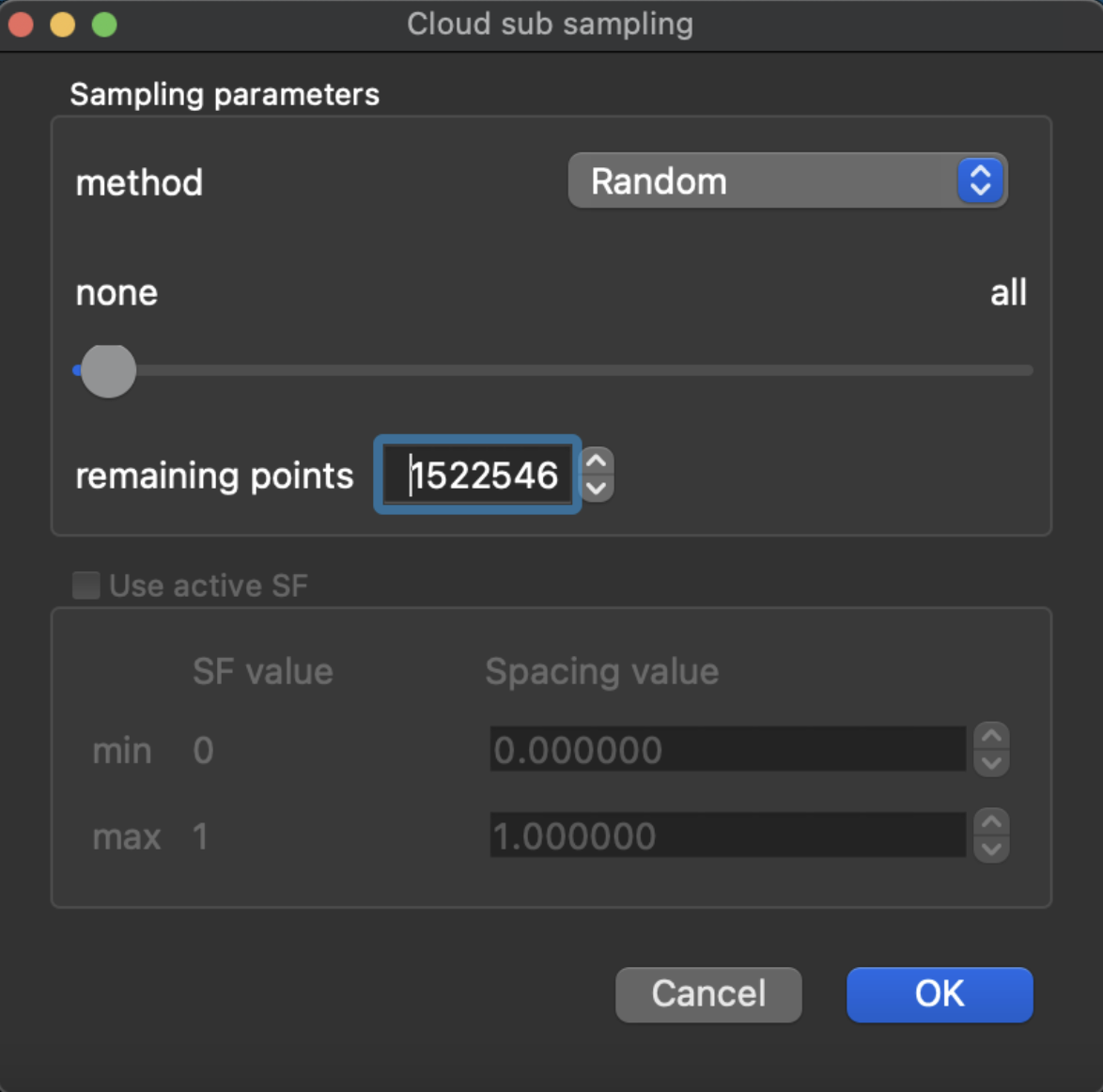

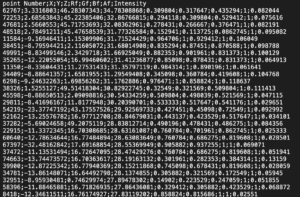

To export the point cloud to TouchDesigner for further processing, we can choose between the PLY, XYZ, and CSV formats. After some research, I have listed the characteristics of each format below.

PLY (Polygon File Format):

- PLY is a versatile 3D model file format primarily used for storing 3D scan data and mesh data.

- It can store point coordinates (x, y, z), color information (r, g, b), normal information (nx, ny, nz), and face data, among other attributes.

- PLY comes in two encodings: ASCII and binary. ASCII files are human-readable but larger, while binary files are smaller but cannot be read in a text editor.
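To make the difference between the two encodings concrete, here is a minimal sketch that builds the same two points as an ASCII PLY and as a binary PLY. The function name `build_ply` and the sample points are my own for illustration and are not part of our export pipeline. The header follows the usual PLY layout (vertex element with float x, y, z and uchar red, green, blue).

```python
import struct


def build_ply(points, binary=False):
    """Return PLY file contents as bytes.

    points: list of (x, y, z, r, g, b) tuples (hypothetical sample data).
    """
    encoding = "binary_little_endian" if binary else "ascii"
    header = "\n".join([
        "ply",
        f"format {encoding} 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "end_header",
    ]) + "\n"

    if binary:
        # 3 little-endian 32-bit floats + 3 unsigned bytes = 15 bytes per point
        body = b"".join(struct.pack("<fffBBB", *p) for p in points)
    else:
        # One whitespace-separated text line per point
        body = "".join(
            f"{x} {y} {z} {r} {g} {b}\n" for x, y, z, r, g, b in points
        ).encode("ascii")

    return header.encode("ascii") + body


points = [(0.0, 0.0, 0.0, 255, 0, 0), (1.0, 2.5, -0.5, 0, 255, 0)]
ascii_ply = build_ply(points)
binary_ply = build_ply(points, binary=True)
```

In both cases the header is plain text, so the file can always be identified by opening it in a text editor. Only the point data after `end_header` differs.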

XYZ:

- XYZ is a simple text format mainly used for storing point cloud data.

- It stores point coordinates (x, y, z), and some files also include color information (r, g, b).

- The format is simple and easy to read and edit, but it does not support face data or normal information.
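Because XYZ is just one point per line, it can be parsed in a few lines of Python. The sketch below is only an illustration: the function name `parse_xyz` is hypothetical, and I am assuming whitespace-separated values, optional integer colors in the 0 to 255 range, and that lines starting with `#` are comments. Real exporters may differ on all three points.

```python
def parse_xyz(text):
    """Parse XYZ text into a list of ((x, y, z), color) pairs.

    color is an (r, g, b) tuple when the line has six or more columns,
    otherwise None.
    """
    points = []
    for line in text.splitlines():
        line = line.strip()
        # Assumption: blank lines and '#' comment lines carry no point data
        if not line or line.startswith("#"):
            continue
        values = line.split()
        x, y, z = (float(v) for v in values[:3])
        color = tuple(int(v) for v in values[3:6]) if len(values) >= 6 else None
        points.append(((x, y, z), color))
    return points


sample = "0 0 0\n1.5 2 3 255 128 0\n"
parsed = parse_xyz(sample)
```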

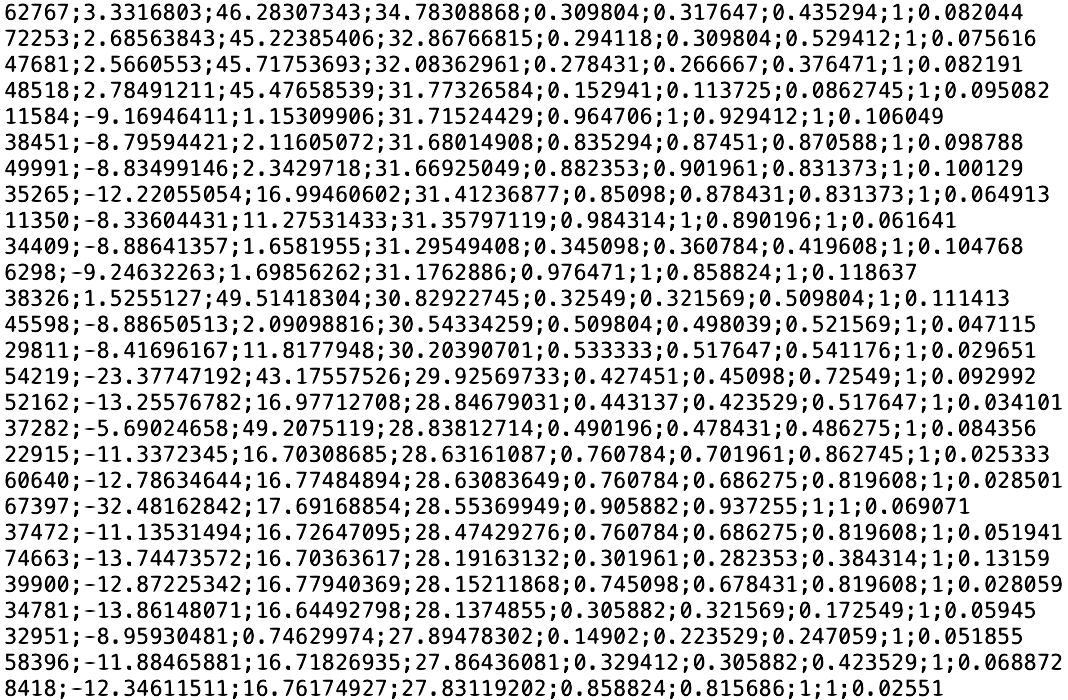

CSV (Comma Separated Values):

- CSV is a general-purpose text format for tabular data that can also be used to save point cloud data.

- It can store point coordinates (x, y, z), color information (r, g, b), normal information (nx, ny, nz), etc., but the column order and meaning are not standardized, so whoever writes and reads the file has to agree on them.

- Similar to the XYZ format, it is easy to read and edit but does not support storing face data.
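Since the column order in a CSV is up to the exporter, it is safer to read columns by name than by position. Here is a small sketch using Python's standard `csv` module. The column names `x`, `y`, `z`, `r`, `g`, `b` and the function name `read_point_csv` are assumptions for this example, so check the header row of the actual file first.

```python
import csv
import io


def read_point_csv(text, columns=("x", "y", "z")):
    """Return the requested columns as a list of float tuples, one per row."""
    reader = csv.DictReader(io.StringIO(text))
    return [tuple(float(row[c]) for c in columns) for row in reader]


# Hypothetical sample: the colors come before the coordinates,
# which would break any reader that assumes a fixed column order.
sample = "r,g,b,x,y,z\n255,0,0,0.0,1.0,2.0\n0,255,0,3.5,4.5,5.5\n"

positions = read_point_csv(sample)
colors = read_point_csv(sample, columns=("r", "g", "b"))
```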

In summary, all three formats are suitable for this project, but after testing and comparing them, we chose the PLY format for export, taking into account file size and loading speed.
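As a rough illustration of why file size matters, the sketch below compares how many bytes the same 10,000 random points take as text lines (the way XYZ, CSV, and ASCII PLY store them) and as packed 32-bit floats (the way a binary PLY stores them). This is not the test we ran: the point count, the six-decimal text precision, and the random data are all assumptions, and it does not measure loading speed.

```python
import random
import struct

random.seed(0)  # fixed seed so the sample data is repeatable
points = [
    (random.uniform(-10, 10), random.uniform(-10, 10), random.uniform(-10, 10))
    for _ in range(10_000)
]

# Text storage: one "x y z" line per point, six decimal places (assumed precision)
text_body = "".join(f"{x:.6f} {y:.6f} {z:.6f}\n" for x, y, z in points).encode("ascii")

# Binary storage: three little-endian 32-bit floats, always 12 bytes per point
binary_body = b"".join(struct.pack("<fff", x, y, z) for x, y, z in points)

print(f"text:   {len(text_body)} bytes")
print(f"binary: {len(binary_body)} bytes")
```

The binary size is fixed at 12 bytes per point, while the text size grows with the number of digits written, so the gap widens as precision or point count increases.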

Reference list

Meijer, C., Goncalves, R., Renaud, N., Andela, B., Dzigan, Y., Diblen, F., Ranguelova, E., van den Oord, G., Grootes, M.W., Nattino, F., Ku, O. and Koma, Z. (2022). Laserchicken: toolkit for ALS point clouds. [online] GitHub. Available at: https://github.com/eEcoLiDAR/laserchicken/blob/master/Data_Formats_README.md [Accessed 27 Apr. 2023].

Yuxuan Guo