Link of PDF File:

Link of Performance Video:

Link of Related Files:

https://uoe-my.sharepoint.com/personal/s1934638_ed_ac_uk/_layouts/15/onedrive.aspx?id=%2Fpersonal%2Fs1934638%5Fed%5Fac%5Fuk%2FDocuments%2FPerformance%20File&view=0

dmsp-performance24


The first thought that came to mind after the show was that we had actually done all of it: the graphics, the music, the sound production, the props and venue set-up, the live performance, and more. I was also relieved that I made no mistakes in my live instrumentation or singing, because both the dance performers and I had felt under some pressure before the show started.

More than anything else, I am thankful and happy for this performance project. I am thankful to all the people who worked with me on this project, and to the teachers who gave us a lot of help and advice. This is the first time I’ve worked on a stage performance with people from different disciplines, and it was a novelty and a joy for me to work with them. The production of picture, sound and more, together with the complicated rehearsals and scene set-up, meant we had a lot to do. But once all the work was done, and especially after seeing the video of the official performance, the feeling of accomplishment was unparalleled.

In conclusion, it was an immensely valuable experience for me, and again, thank you to my group members, and also to Jules and Andrew who have been so helpful to us.

The overall audio system adhered to the initial design, primarily divided into two zones controlled by two computers. The only change was the relocation of the subwoofer from the front to the back of the stage. This adjustment was primarily made because the actors needed to move beneath the screen at the front, and placing the sound equipment there could potentially cause safety issues.

During the pre-show stage setup, our initial idea was to hang the speakers above the screen. However, due to safety regulations and time constraints, this could not be implemented, leading us to opt for a ground-based setup instead.

In the initial plan, the corridor scene included an audio-interactive system controlled by Geophone inputs, which was intended to trigger changes in the projector images. However, for unknown reasons, this setup consumed a substantial amount of processing power, causing instability in the operation of the two computers, despite it being just a simple video mixer for live feeds.

Consequently, we had to make a last-minute substitution with sound-activated light strips controlled by Arduino sensors. These strips were hung on bubble wrap that separated the audience area from the performance space, adding an interactive element to the stage setup.
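As a rough illustration of how a sound-activated strip can respond to level, the sketch below maps a raw sensor reading to a number of lit LEDs. It is written in Python purely to show the logic and is not the code that ran on our Arduino; the function name, noise floor, maximum level and strip length are all hypothetical values chosen for the example.

```python
def leds_to_light(level, noise_floor=40, max_level=600, strip_length=30):
    """Map a raw sound-sensor reading to the number of LEDs to switch on.

    All default values are illustrative assumptions, not measured settings.
    """
    # Readings at or below the noise floor keep the strip dark.
    if level <= noise_floor:
        return 0
    # Clamp very loud readings so the strip simply stays fully lit.
    level = min(level, max_level)
    # Scale the usable range linearly onto the strip length.
    fraction = (level - noise_floor) / (max_level - noise_floor)
    return round(fraction * strip_length)


if __name__ == "__main__":
    for reading in (20, 320, 600, 1023):
        print(reading, leds_to_light(reading))
```

Ignoring readings below a noise floor is what keeps the strip dark during quiet passages, so that it only reacts to footsteps, drums or louder moments in the music.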

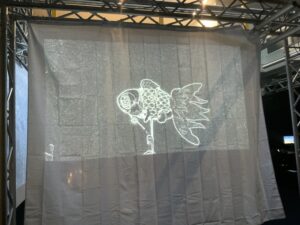

The video system consisted of three projectors and two televisions. The corridor projection used an NEC HD projector, employing a semi-transparent shower curtain as the projection screen, which allowed for viewing from both sides.

The main screen utilized an Optoma Short Throw Projector to minimize the distance between the projector and the screen. This particular feature enabled us to place the projector behind the screen, projecting the image in reverse, and thus freeing up ample space for performances on the main stage.

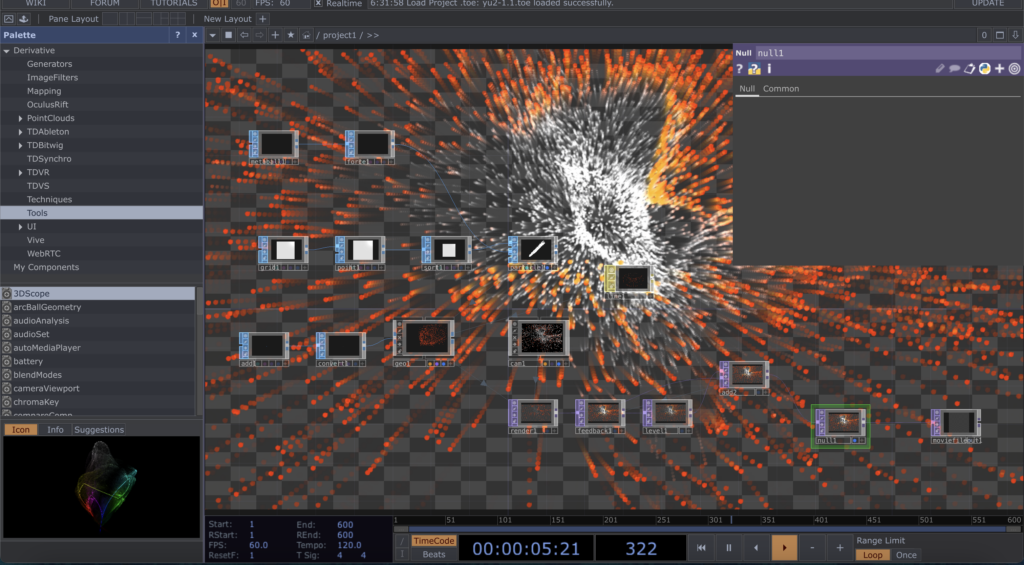

The projection on the left side of the stage employed an ASUS S1 Mobile Projector to project onto a transparent screen from the inside, with some of the light passing through the screen to create dynamic visual effects above the stage. The projection content consisted of variable graphics triggered by sound, created using TouchDesigner.

Implementing this setup, however, presented several challenges, the most significant being the length of the HDMI cables. Our system design intended for a Mac at the main control console to manage the television displays, the main stage sound system, and the main stage projection, ensuring synchronization of sound and visuals. This required HDMI cables of approximately 8 meters in length to avoid crossing performance areas. Unfortunately, on the day of the performance, the longer cables had already been borrowed from Bookit, leading to a lengthy search that eventually procured slightly shorter cables than needed. Safety concerns with the cables also arose during rehearsals; ultimately, we secured all cables to the floor with gaff tape to mitigate any hazards.

Google Drive 5.1 Surround Version Link: https://drive.google.com/file/d/1no6QsiduSEDFbP8MoLaNKxk5yf9KsKQ9/view?usp=share_link

The production of the audio segment commenced relatively late, initiated only after the total duration of the video was firmly established. The production adhered to previously established standards, utilizing a 5.1 surround sound format. Additionally, during rehearsals, we encountered challenges in visually guiding the audience to view specific scenes along the designed path. Consequently, we opted to use sound as the primary means to direct the audience’s attention.

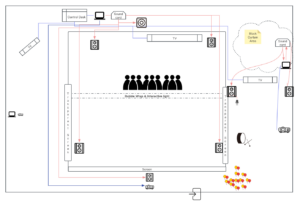

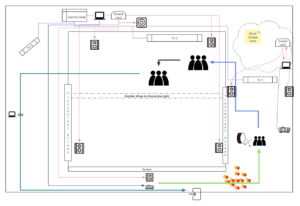

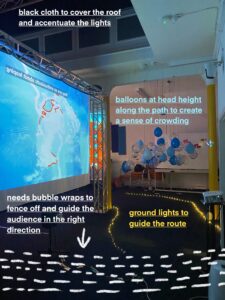

The diagram illustrates the audience’s walking path during the final performance. Initially, the audience proceeds along the green path, where they first pass through suspended balloons, then see TV1 directly ahead and a projector to the left, both displaying the same content: AI-generated ocean animations and text animations designed using TouchDesigner. The ambient sound is stereo music. Concurrently, Xianni plays the hand drum in sync with the music, beginning from the middle of the piece, and continues to guide the audience forward as the music nears its end. At this point, TV1 is moved aside to form a pathway, leading the audience into the main stage area (indicated by the blue route). Here, the large screen on TV2 shows a video clip of a carp in the air with a voice-over from the carp, “I see, I jumped over the dragon gate,” emitted from the Ls and Rs speakers. The projection screen and speakers at the front of the stage remain silent to focus the audience’s attention on the back of the stage. As the final words are spoken, the sound shifts from the back to the front; simultaneously, the television turns off and the main projector lights up, prompting the audience to turn and enjoy the performance directly in front of them. Thus, the guidance through sound is completed.
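The back-to-front shift described above can be thought of as a crossfade between the rear (Ls/Rs) and front speakers. The sketch below shows one common way to do this, an equal-power crossfade. It is an illustrative assumption, not the automation we actually drew in the mix session, and the function names are hypothetical.

```python
import math


def front_back_gains(position):
    """Return (front_gain, rear_gain) for a position from 0.0 (rear) to 1.0 (front).

    Uses a sine/cosine law so that front^2 + rear^2 stays equal to 1,
    which keeps the perceived loudness roughly constant during the move.
    """
    position = min(max(position, 0.0), 1.0)  # clamp to the valid range
    angle = position * math.pi / 2
    return math.sin(angle), math.cos(angle)


def crossfade_steps(steps):
    """Gain pairs for a move from fully rear to fully front in `steps` stages."""
    return [front_back_gains(i / (steps - 1)) for i in range(steps)]


if __name__ == "__main__":
    for front, rear in crossfade_steps(5):
        print(f"front={front:.3f} rear={rear:.3f}")
```

A linear crossfade would dip in loudness at the midpoint, which could weaken the cue at exactly the moment the audience is meant to turn around; the equal-power curve avoids that dip.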

With only about a week remaining for audio production, the majority of the sound was edited and designed using sounds from personal and commercial sound libraries to save time. The project’s structure consisted of four main components: vocals, sound effects, ambiance, and music. Vocals included narration and demonic whispers, which were processed through pitch shifting, electronification, and overloading to achieve the desired effects. The ambiance was created using multitrack stereo with sound image variations to produce a surround sound track.

Sound effects were produced in a manner typical of film and television sound production: markers were initially set along the timeline, and the tracks were gradually filled to complement the music and convey emotions. We also experimented with various abstract processing techniques, fully utilizing the advantages of the surround sound track to create rapid, continuous sound image changes, reducing auditory fatigue for the audience. As for the music mix, since the music was initially in stereo format, we added a surround sound reverb send to compensate for the lack of rear surround sound.

The final performance went smoothly as a whole. We arranged the venue and set up the system one day in advance. On the day of the performance, we quickly completed the remaining layout work and then carried out three full rehearsals, refining the details in each one. For example, we adjusted the entrance timing and scheduling of the fish, brought the sound pressure level into a reasonable range, and, most importantly, re-fixed our main projector. During rehearsal, one of the small fish actors accidentally stepped on the main projector’s cable when going on and off the stage, which disconnected the projector. We immediately reinforced the projector’s support and its cable connection to ensure that there would be no problems during the performance.

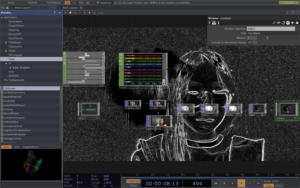

One of the parts I made was the TouchDesigner line effect displayed on the side screen. My original plan was to use this style of display in the demon whispering part and when the big fish leaps over the gantry, to convey a sense of terror. However, I overlooked the harmony between this screen and the main screen, and their colors did not match well. To relate the color of the side screen to the main screen, the improvement I have in mind is to install a blue atmosphere light at the bottom of the screen, because I want to show live performance images on this screen rather than videos prepared in advance that can simply be played back. I am not very familiar with color display in TouchDesigner, so this is the solution I can think of at present.

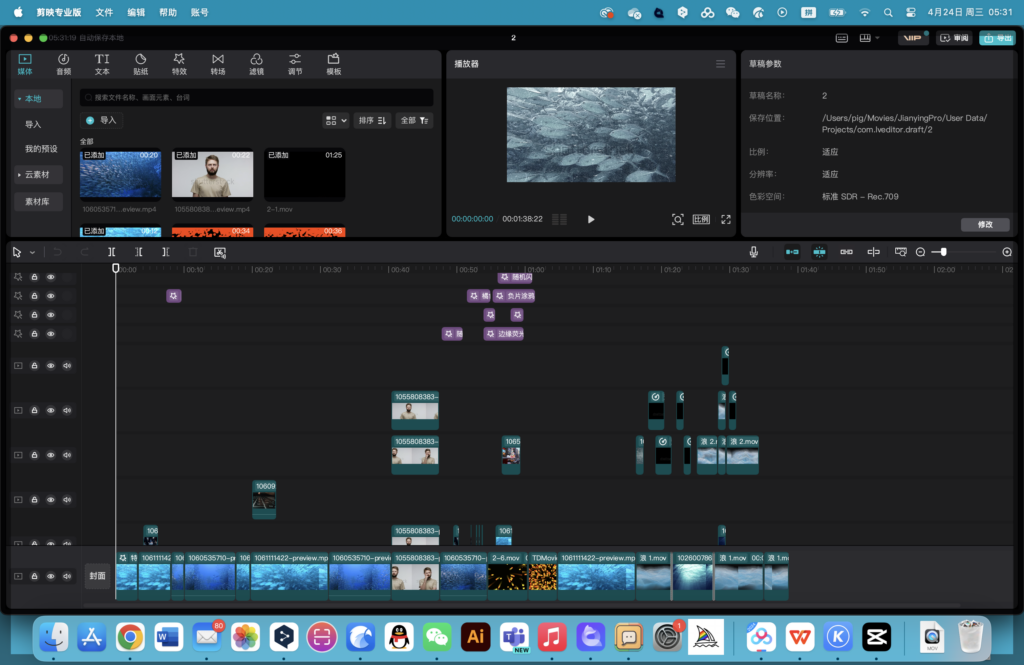

As the main person responsible for the production of the video, I completed the second and third parts of the video as well as the first half of the fourth part. The whole production process can be divided into several main parts:

Our video footage was mainly sourced from Shutterstock’s video library. After selecting the footage, I processed these videos with fine editing and effects to ensure that they would fit perfectly into our performance. For the gantry footage, I used TouchDesigner software to create a unique particle effect to enhance the visual impact.

In order to express the changing emotions of the carp at different stages of the dance, I recorded several special expression performances. These expression videos were played in the background of the dance, echoing the movements of the dancers. After recording, I carefully edited these videos and enhanced their artistic effect through post-processing to ensure that they could truly and vividly convey the inner world of the carp.

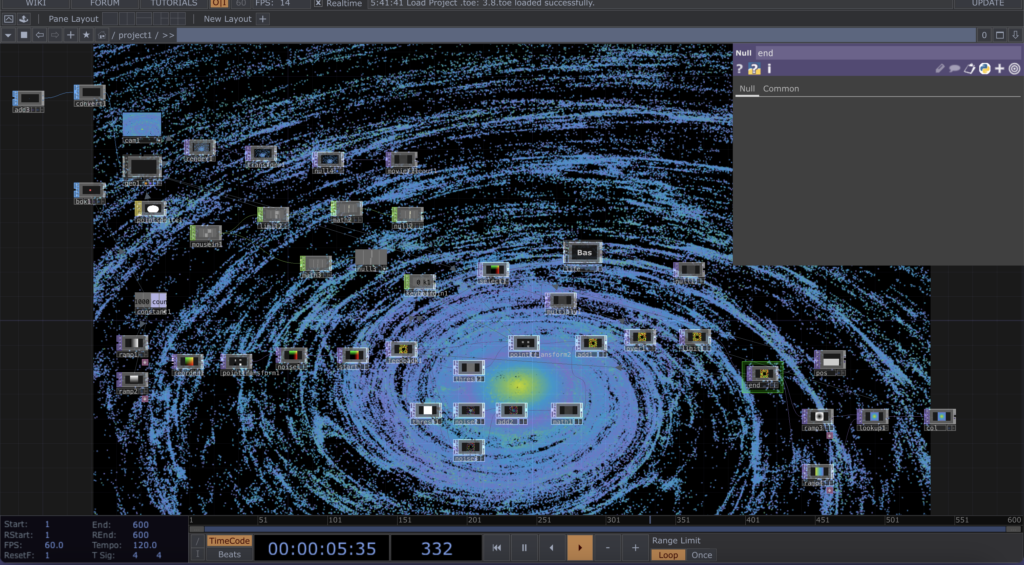

There are several key images in the video that required special production techniques. For example, the tornado, the waves and the fish swimming head-on were all particle animated using TouchDesigner software.

For the tornado animation, I designed a system that could be manipulated based on mouse position. By manipulating the mouse in the software, I recorded the ideal tornado motion trajectory to make it look more realistic and dynamic.

When creating the wave effect, I initially tried the sound control method, hoping that the waves would rise and fall with the rhythm of the music. However, after actual testing, the result was not satisfactory. After repeated tests, I finally chose to use a constant value to simulate the movement of the waves, which gave better control over the overall look of the picture.

The image of the fish swimming head-on is to create a feeling of suffocation and oppression. By adjusting the density, speed and direction of the particles, I succeeded in creating the effect of a large number of fish swimming head-on, which makes the audience feel as if they are in the water, feeling the helplessness and struggle with the carp.

For parts such as the heartbeat and the dragon gate, I used TouchDesigner to further process the footage video by adjusting the colours, contrast, particle effects, etc. to make the image more artistic and abstract to match the overall atmosphere of the performance.

For the part of the carp going up to the sky, we wanted to create a surreal and dreamy effect. For this, I used Runway, an AI-assisted video production tool. With Runway, we can transform still images into moving videos and add various creative effects. Using this technique, I managed to bring to life the scene of a carp going up to the sky, adding a touch of fantasy to the whole performance.

Our final show at Alison House Atrium on April 5th at 4 pm marks the culmination of our efforts. I extend heartfelt thanks to everyone involved, particularly the professional dancers and small fish players whose contributions enriched the performance with skill and artistry. It’s important to acknowledge the dedication and hard work of each group member toward this achievement. Every individual’s significant efforts merit both encouragement and commendation. Lastly, I present the carefully edited full version of the live video, featuring multiple viewpoints, including audience perspective and fixed angles, to offer the most objective presentation possible.

I took notes on some of the advice given by the audience and teachers after formal performances.

We arrived early in the morning and after spending hours on the set, we began a tight rehearsal. At this stage, all team members contributed to the final sprint, and I am extremely proud of our capacity to learn from it and consistently reflect

To begin, I would like to explain the specific set design concept. When designing the entrance set, our primary concern was directing the audience correctly. This became evident during rehearsals when the first two audience members veered off course despite the floor light strips meant to guide them. To address this, I decided to enclose the walkway with bubble wrap, ensuring only one clear path for the audience to follow. The bubble wrap blocking the way is removed immediately after the corridor performance, because this is a key passageway for the actors and dancers waiting to enter the main set.

Here is the prelude: the first small screen projection, which guides the audience to ease into the overall atmosphere and sets the mood for the immersive performance that follows. The projected content here does not need to carry much narrative; it is mainly live music and motion images produced by AI, as the aim is to set the mood. We initially placed Xianni in the corner as the drummer. However, after a few rehearsals, we realised that it would be best to place her in the lead role, playing the drums in front of the audience and guiding them into the main stage. This decision enhances the performance, as it gives the audience clearer cues in the darkness and keeps the story flowing.

Here we come to the main stage area! First, we separated the audience from the stage area, using bubble wrap and conspicuous sound-activated light strips as dividing lines. We wanted to fix the bounds of the performance so that dancers or actors would not accidentally injure the audience. As you may see, three individual screens work together around the stage: the left and right ones show our interactive visuals to echo the story and build the atmosphere. For example, for the Devil’s Whisper sequence, we drew the viewer’s eye to the left by using real-time face imaging on the left screen, while the main screen in the centre featured a silhouette of the dance. The purpose of this is to briefly take the audience out of the third-person view and into the first-person view of the carp, to feel the dark and depressing mood. The interaction on the right side varies abstract graphics mainly according to the live sound. The reason we didn’t make it narrative is that I didn’t want too much screen switching to disrupt the audience’s thinking and the coherence of the story. Thus, its main role is also to render the atmosphere.

These rehearsals before the official performance were significant for us, as we identified and adjusted many problems in advance, removing obstacles for the subsequent performances. I’ve summarised the experience of a few rehearsals here, and the points that needed tweaking:

In order to enrich the main stage, I planned to add a secondary screen on the left side of the stage and use visual effects created in TouchDesigner on it to support the main stage performance. The original plan was to set up a camera in front of the main stage so that the movement and outline of the dancer’s body would appear on the screen whenever the dancer performed. However, during testing, I found that the light on the main stage was not bright enough for TouchDesigner to recognize the figures on stage, so we planned to set up LED lights on both sides of the stage. The lights were heavy, and we were worried about the safety risk if they were not hung securely, so I ended up using this interactive element for the devil whispering part and the part where the fish struggle as they jump the gantry.

Since this idea was planned at a later stage, it was too late to prepare the Kinect camera. Instead, I turned to ZigSim, a mobile phone app that lets the phone act as a sensor and peripheral camera, transmitting its video signal to TouchDesigner over the network.

A cache in TouchDesigner stores video frames, and the motion track is obtained through difference detection between them. Next, the motion contours are enhanced by adjusting brightness and contrast and applying edge detection. To eliminate blemishes in the image, a large filter size is used for blurring. Adjusting the minimum value of the Chroma Key can reduce the number of black pixels in the image and tune the settings for different environments.
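To make the idea of difference detection more concrete, here is a minimal sketch in plain Python. It is not the TouchDesigner network used in the show: it only illustrates the same steps (compare a cached frame with the current one, blur to remove speckles, then threshold) on tiny grayscale frames stored as lists of rows. The function names, the threshold of 30 and the blur radius are assumptions made for the example.

```python
def frame_difference(previous, current):
    """Absolute per-pixel difference between two grayscale frames (values 0-255)."""
    return [[abs(c - p) for p, c in zip(prev_row, cur_row)]
            for prev_row, cur_row in zip(previous, current)]


def box_blur(frame, radius=1):
    """Average each pixel with its neighbours to suppress isolated speckles."""
    height, width = len(frame), len(frame[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            ys = range(max(0, y - radius), min(height, y + radius + 1))
            xs = range(max(0, x - radius), min(width, x + radius + 1))
            values = [frame[j][i] for j in ys for i in xs]
            row.append(sum(values) / len(values))
        blurred.append(row)
    return blurred


def motion_mask(previous, current, threshold=30, radius=1):
    """Return a 0/1 mask marking pixels where smoothed motion exceeds the threshold."""
    diff = frame_difference(previous, current)
    smooth = box_blur(diff, radius)
    return [[1 if value >= threshold else 0 for value in row] for row in smooth]


if __name__ == "__main__":
    still = [[0] * 5 for _ in range(5)]
    speckle = [[0] * 5 for _ in range(5)]
    speckle[2][2] = 90  # a single noisy pixel, not real movement
    print(motion_mask(still, speckle))  # the blur averages the speckle away
```

A larger blur radius removes more noise but also softens the dancer’s outline, which is the same trade-off as choosing the filter size in TouchDesigner.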

YouTube link: https://youtu.be/wKjYGEWhjus?si=4RrCjlqhejq0Jhtm

In the fourth part, the story line is gradually coming to a climax and the protagonist is going through intense psychological changes and self-conflicts. At the same time, throughout the performance, the fourth part is the part that tends to show the dark line of the story most directly, i.e. the story of the carp living in the depressing waters and longing to cross the dragon’s gate, alluding to the fact that people in modern society are struggling in the crowded living environment.

Therefore, this part of the music was produced with a strong contemporary style and a lot of real-life sound samples, such as alarm clock samples, typing samples and loud vocals. At the same time, this part of the music is chaotic and noisy in general, using a lot of pre-produced piano tones and noise tones, trying to create a conflicting and dramatic aural effect with these dissonant musical elements.

YouTube link: https://youtu.be/hCjrMUIrq4o?si=-dBAfm2evsfplIbD

The song expresses the theme of the story (including the metaphorical part) relatively directly, elaborating on the dark end of the story. And during the process of making the music in the project, it occurred to me that, as a form of performance similar to a stage play, it might be possible to write a song similar to the style of an opera to create an ending similar to the curtain call of a film or stage play.

So I produced the song and created subtitles with lyrics similar to the end credits of the film. Since it’s a live singing performance, only the backing vocals part was uploaded, and the vocals were not pre-recorded.