Category: Invisible strings - Blog

Performance Part 2 - Technology

Processing

Background

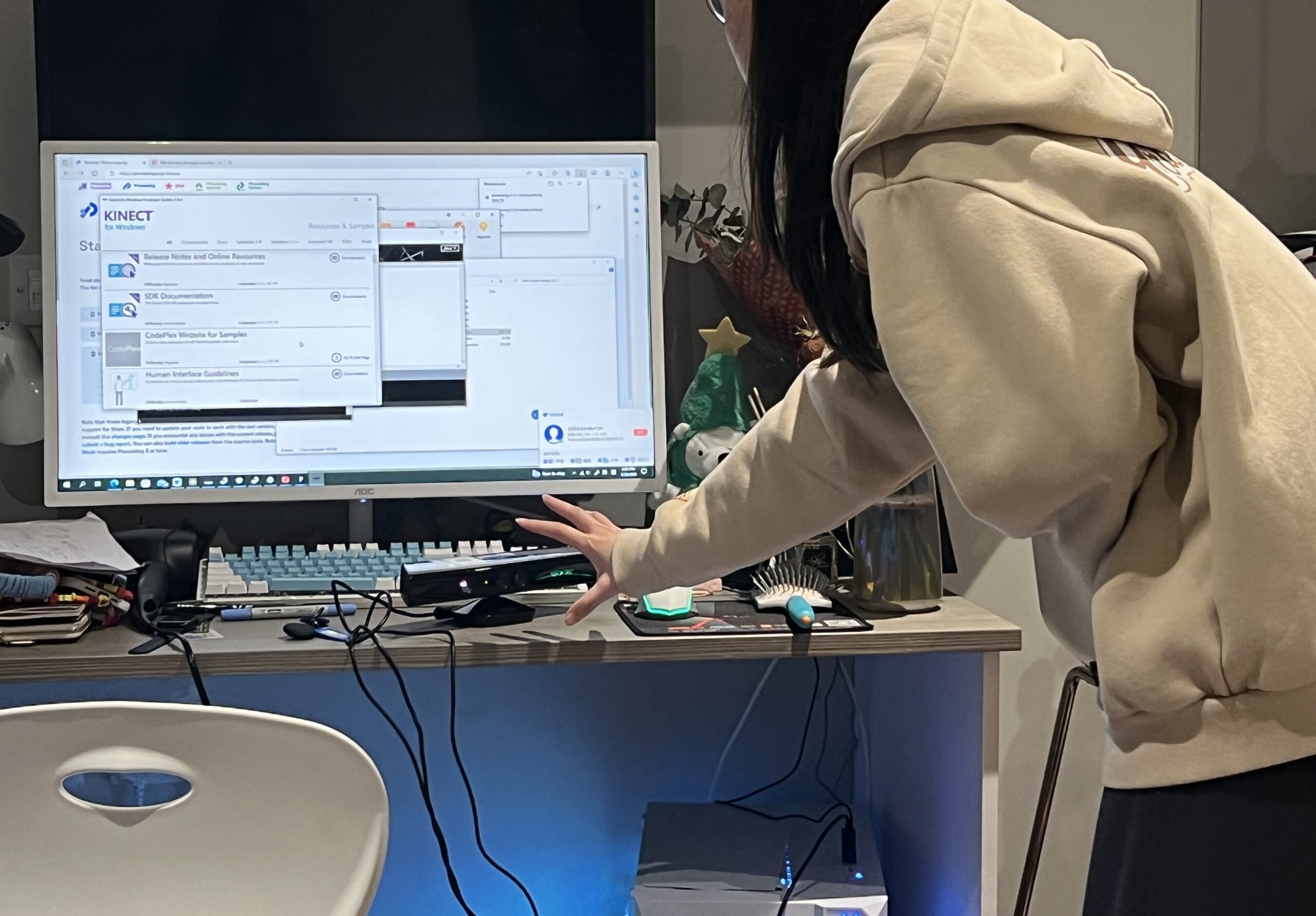

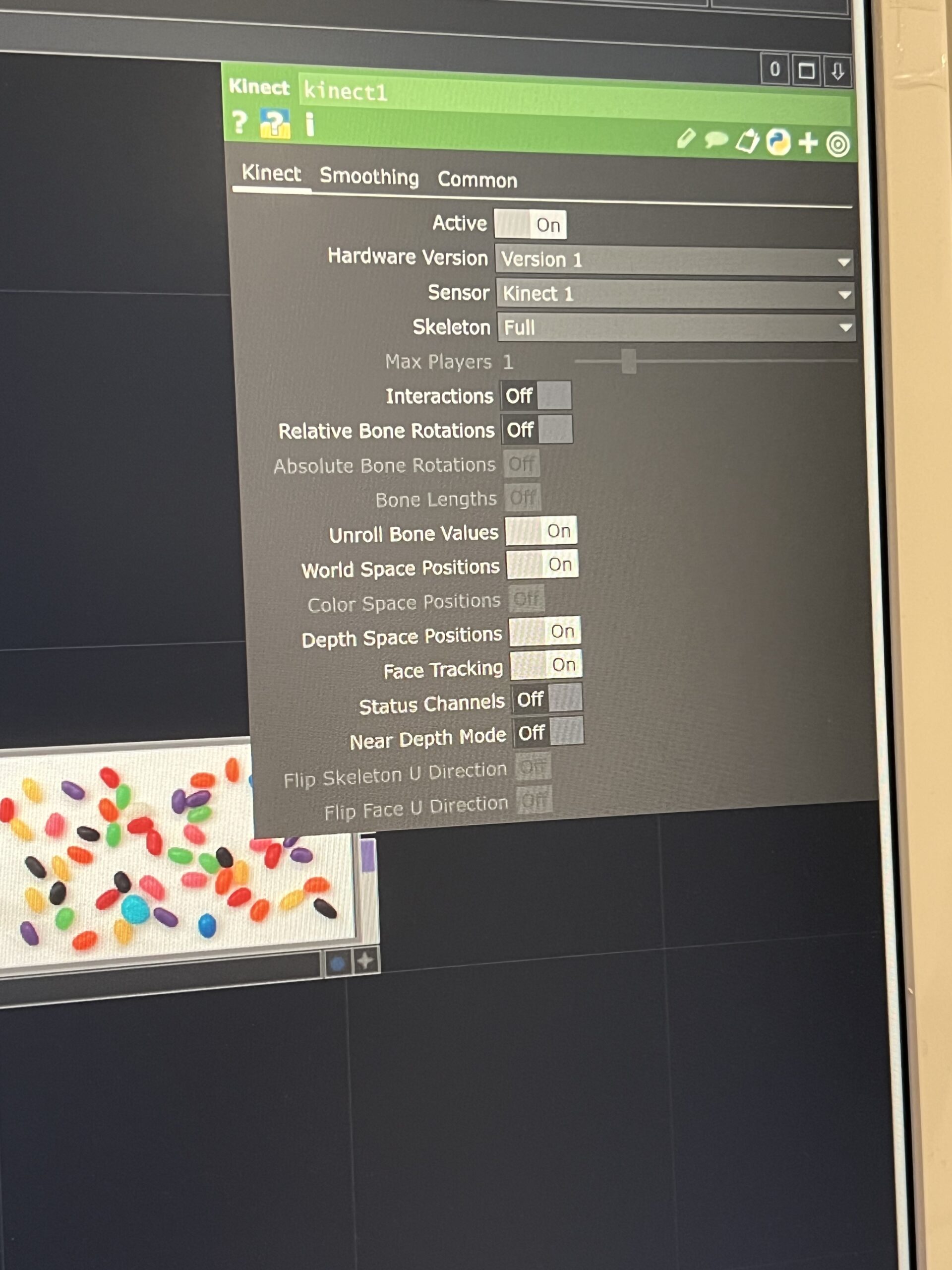

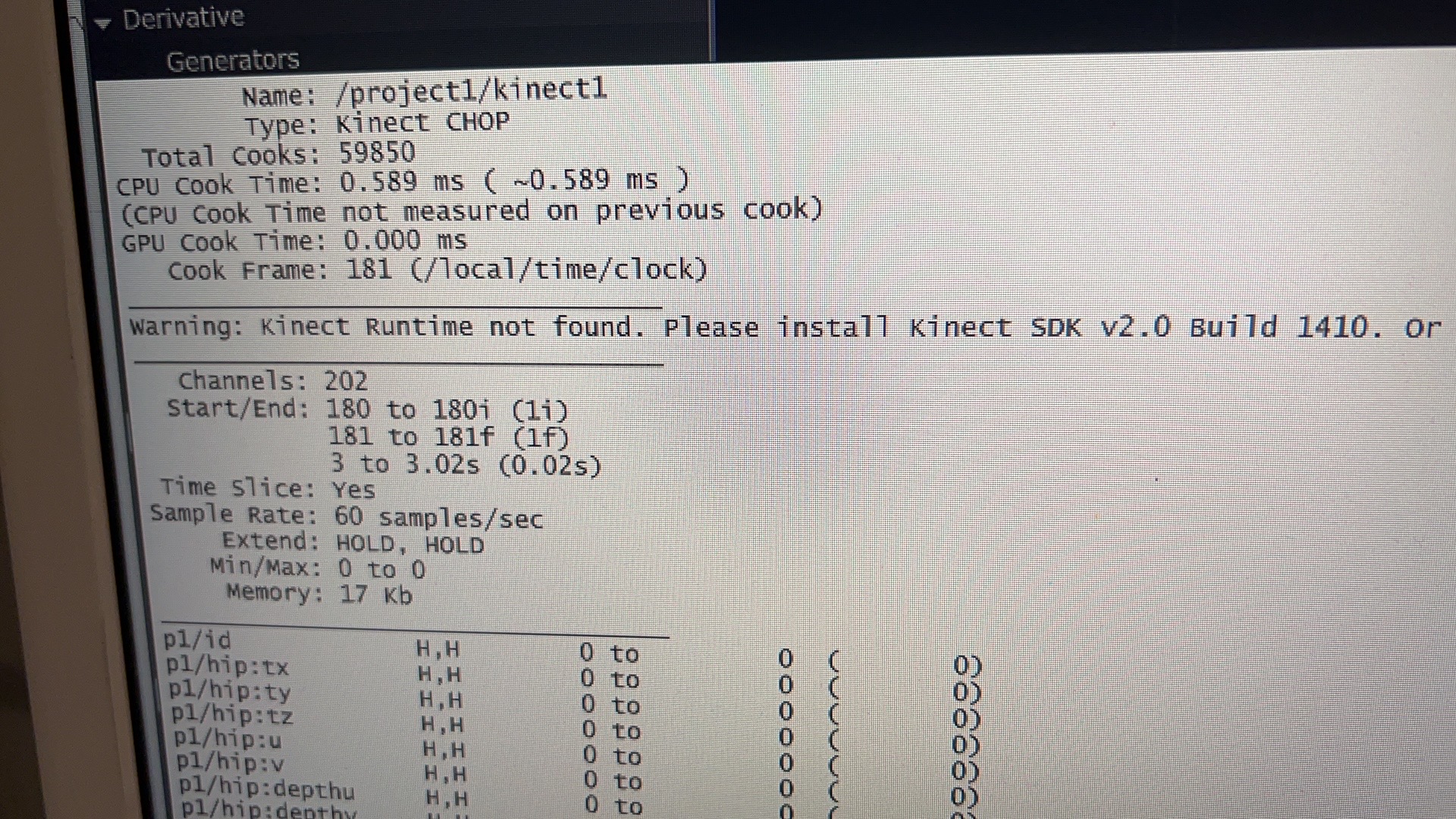

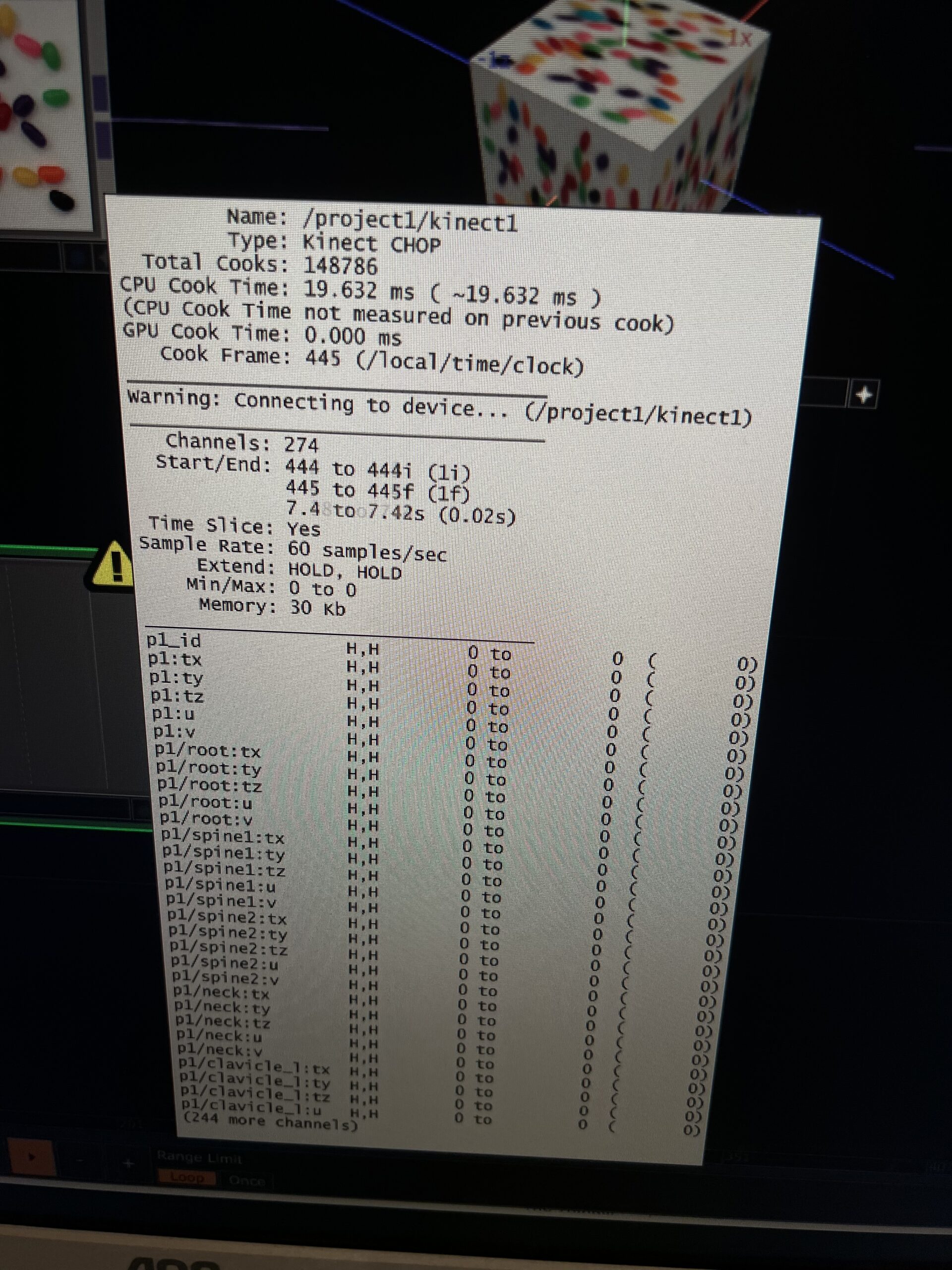

In the second part of our performance, we aimed to portray the daughter’s inner conflict and struggle for the audience. We therefore wanted to capture the actor’s movements and project them into a 3D particle space that depicts the daughter’s inner world. We eventually chose the Kinect V2 sensor for this purpose. Because the Kinect is an RGB-D (colour and depth) sensor, it can recognize human bodies, estimate the position of each joint in real time from the person’s proportions, and capture the person’s outline.

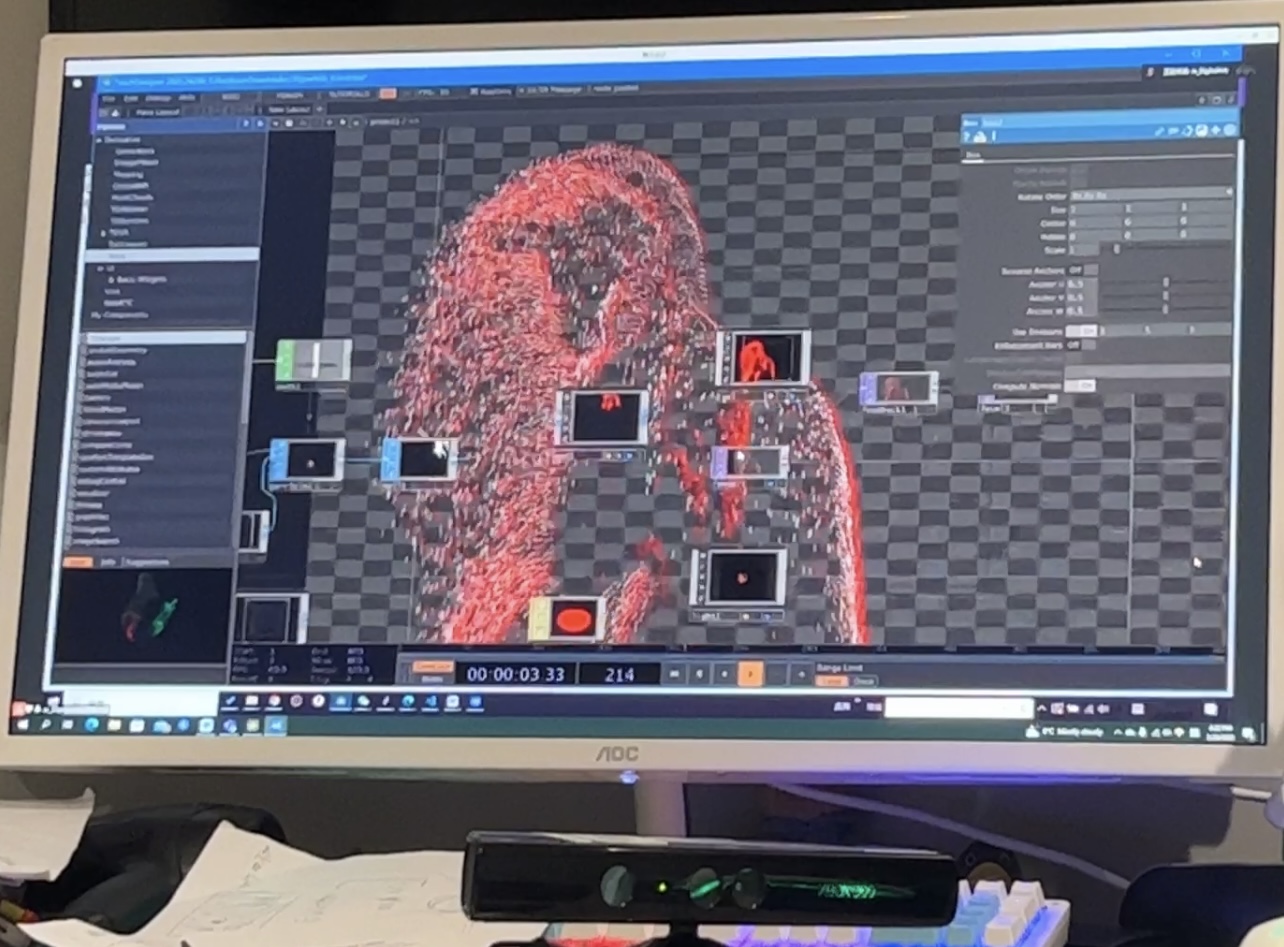

After selecting the sensor, we first tried to create the motion particle presentation in TouchDesigner. However, as some team members had more experience with Processing, we decided to use Processing to render the motion data captured by the Kinect for better visual effects.

Process

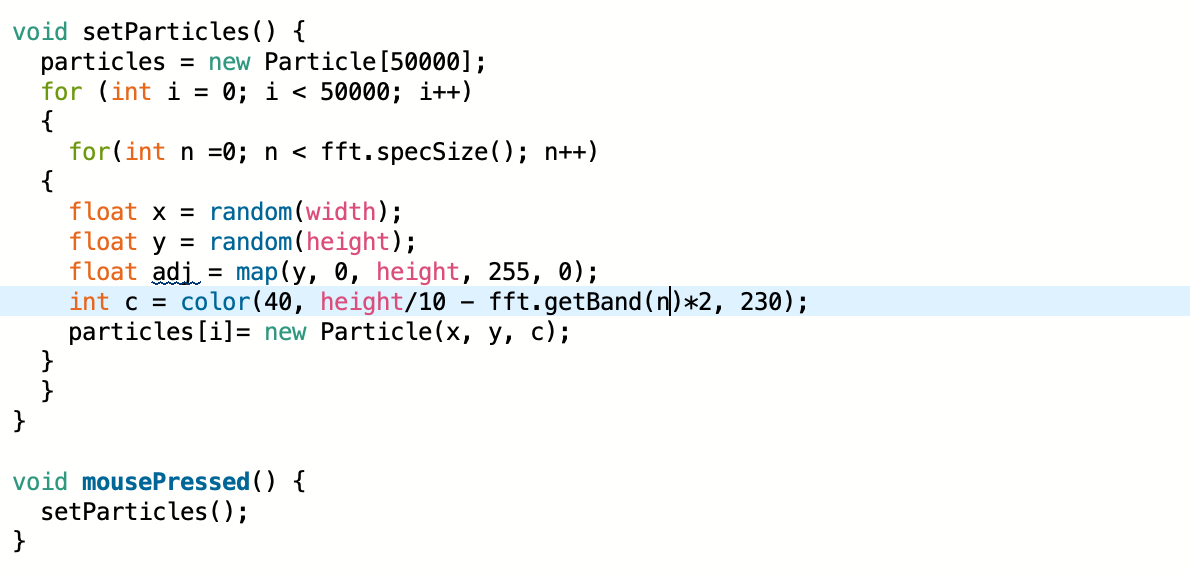

The Processing sketch has three main parts: particles generated from the Kinect capture, particle clustering and dispersion driven by changes in sound, and the movement of a red wireframe cube.

First, the setup() function initializes the libraries and sets up the screen, the audio input, and the particle array.

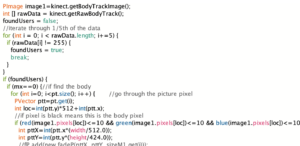

1) Kinect

We used the KinectPV2 and PeasyCam libraries to access the Kinect camera and to create an interactive camera controller. We accessed the Kinect data by creating the sensor object with “kinect = new KinectPV2(this)”. After initializing the Kinect sensor, the code creates arrays and ArrayLists to store particle coordinates, image data, and particle sizes. An if statement then checks whether the Kinect detects any human bodies. If the actress is detected, the code iterates through the pixels of the body-tracking image and looks for pixels that belong to her body. For each such pixel, it creates a particle at the corresponding location and adds it to the ArrayList of particles. If no actress is detected, it generates new particles at random positions. Particle movement, dissipation, and iteration are also handled at the same time.
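The pixel-to-particle step described above can be sketched as follows. This is a minimal Python illustration rather than our Processing code: it assumes the body-tracking image arrives as a 2D grid of integers in which a hypothetical value `BODY` marks body pixels (KinectPV2’s actual image encoding may differ), that every `step`-th pixel is sampled to keep the particle count manageable, and that particles simply drift and fade over a fixed number of frames. `Particle`, `particles_from_body_mask`, `update`, `step`, `fallback_count`, and `life` are all illustrative names.

```python
import random
from dataclasses import dataclass

BODY = 1  # hypothetical marker value meaning "this pixel belongs to a tracked body"


@dataclass
class Particle:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0
    life: int = 60  # frames left before the particle dissipates


def particles_from_body_mask(mask, step=4, fallback_count=50, rng=None):
    """Turn body pixels into particles, or scatter random ones if no body is seen."""
    rng = rng or random.Random()
    height = len(mask)
    width = len(mask[0]) if height else 0
    particles = []
    body_found = any(BODY in row for row in mask)
    if body_found:
        # Sample every `step`-th pixel in both directions to limit the particle count.
        for y in range(0, height, step):
            for x in range(0, width, step):
                if mask[y][x] == BODY:
                    particles.append(Particle(x, y))
    else:
        # No performer detected: generate random particles across the frame.
        for _ in range(fallback_count):
            particles.append(Particle(rng.uniform(0, width), rng.uniform(0, height)))
    return particles


def update(particles, jitter=0.5, rng=None):
    """Nudge each particle randomly, age it, and drop the ones that have dissipated."""
    rng = rng or random.Random()
    alive = []
    for p in particles:
        p.vx += rng.uniform(-jitter, jitter)
        p.vy += rng.uniform(-jitter, jitter)
        p.x += p.vx
        p.y += p.vy
        p.life -= 1
        if p.life > 0:
            alive.append(p)
    return alive
```

A larger `step` trades outline detail for frame rate, which matters when thousands of pixels belong to the performer.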

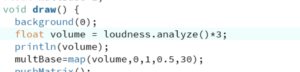

2) Sound

We also used the processing.sound library to capture audio input from the computer and extract volume information. Because the analysed loudness is multiplied by a constant, as in “float volume = loudness.analyze()*5”, we can quickly adjust how sensitive particle dissipation is to sound, compensating for the level of environmental noise during live performances.
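The effect of that gain can be shown with a short Python sketch. It assumes, as we understand processing.sound’s Amplitude analyser to work, that loudness is an RMS level between roughly 0 and 1. The factor of 5 comes from our sketch, while the outward-push rule and the names `scaled_volume`, `displaced_position`, and `spread` are illustrative assumptions, not the exact Processing code.

```python
import math

SENSITIVITY = 5.0  # the "*5" gain from our sketch; tune it to the room's noise level


def rms(samples):
    """Root-mean-square level of one audio buffer whose samples lie in -1..1."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def scaled_volume(samples, gain=SENSITIVITY):
    """Stand-in for loudness.analyze() * gain, computed here from raw samples."""
    return rms(samples) * gain


def displaced_position(home, center, volume, spread=20.0):
    """Push a particle away from `center` by volume * spread pixels.

    At volume 0 the particle stays at its home position (the clustered outline);
    louder input scatters it further outwards.
    """
    hx, hy = home
    dx, dy = hx - center[0], hy - center[1]
    length = math.hypot(dx, dy) or 1.0  # avoid dividing by zero at the centre
    offset = volume * spread
    return (hx + dx / length * offset, hy + dy / length * offset)
```

Raising the gain makes quieter sounds scatter the particles just as far, which is why a single constant is a convenient knob to retune in each venue.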

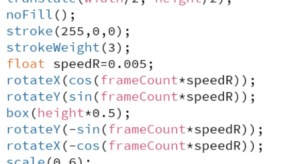

3) Red Box

We used the P3D renderer to draw a rotating 3D cube in space. Its rotation is controlled by “rotateY(-sin(frameCount*speedR)); rotateX(-cos(frameCount*speedR));”, where speedR sets the rotation speed. In the initial design we used a white wireframe, but later changed the stroke colour to red. During live testing we found that the wireframe was not clear enough, so we increased the strokeWeight() value to make the lines thicker and easier for the audience to see.
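The rotation expression is easier to reason about once the angles are written out. The Python sketch below reproduces the two angles from the line quoted above and applies them to the eight cube corners using standard rotation matrices; Processing’s internal sign conventions may differ, which is an assumption here, as is the transform being reset each frame at the start of draw(). Because -sin and -cos stay between -1 and 1, each angle is bounded by ±1 radian (about 57°), so the cube rocks back and forth rather than spinning continuously, and speedR sets how quickly it rocks. `cube_angles`, `rotate_x`, `rotate_y`, `transform_vertex`, and `CUBE` are illustrative names.

```python
import math


def cube_angles(frame_count, speed_r):
    """Angles (radians) given by rotateY(-sin(f*s)) and rotateX(-cos(f*s))."""
    t = frame_count * speed_r
    return -math.sin(t), -math.cos(t)  # (angle_y, angle_x)


def rotate_x(p, a):
    x, y, z = p
    c, s = math.cos(a), math.sin(a)
    return (x, c * y - s * z, s * y + c * z)


def rotate_y(p, a):
    x, y, z = p
    c, s = math.cos(a), math.sin(a)
    return (c * x + s * z, y, -s * x + c * z)


def transform_vertex(p, frame_count, speed_r):
    """Calling rotateY then rotateX builds M = Ry * Rx, so X acts on the vertex first."""
    ay, ax = cube_angles(frame_count, speed_r)
    return rotate_y(rotate_x(p, ax), ay)


# The eight corners of a unit-half-width cube centred on the origin.
CUBE = [(x, y, z) for x in (-1, 1) for y in (-1, 1) for z in (-1, 1)]
```

If a continuous spin were wanted instead, an angle that grows with frameCount, such as rotateY(frameCount*speedR), would produce it.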

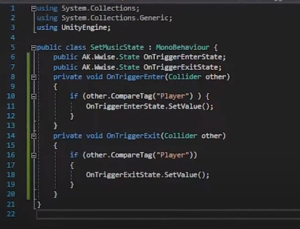

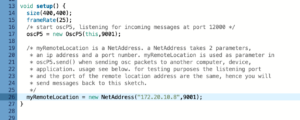

Max and Processing Connection

We intended to send the sound data produced by the performer from Max to Processing in real time, so that it would drive the diffusion of the visual particles. The LAN was set up on the Arduino, and Max was connected on the same port.

With Max as the sender, the volume data, scaled to vary between 0 and 1, was sent to the IP address of the computer running Processing.

On the Processing side, we tested data reception using the OSC library’s test code, based on relevant references we found online.

After changing the IP address and receiving port to our own, we ran sending and receiving at the same time.

However, Processing never received any data from Max, although Max did receive the value ‘123’ sent from Processing. We therefore gave up this plan and continued to use environmental sound to drive the particles.
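Since the failure was one-directional, it can help to check the message format separately from the network. Below is a minimal Python sketch of an OSC 1.0 message carrying a single 32-bit float, the kind of packet we understand Max’s udpsend object to produce for a message such as `/volume 0.5`. The address `/volume`, the port 12000, and the helper names are hypothetical; the encoding rules (null-terminated strings padded to four bytes, a `,f` type-tag string, a big-endian float) follow the OSC 1.0 specification.

```python
import socket
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_float_message(address: str, value: float) -> bytes:
    """Build an OSC message with one float argument, clamped to the agreed 0..1 range."""
    value = min(max(value, 0.0), 1.0)
    return _osc_string(address) + _osc_string(",f") + struct.pack(">f", value)


def decode_float_message(packet: bytes):
    """Parse a one-float OSC message back into (address, value)."""
    end = packet.index(b"\x00")
    address = packet[:end].decode("ascii")
    pos = (end + 4) & ~3  # jump past the address padding to the next 4-byte boundary
    tag_end = packet.index(b"\x00", pos)
    if packet[pos:tag_end] != b",f":
        raise ValueError("expected a single float argument")
    pos = (tag_end + 4) & ~3
    (value,) = struct.unpack(">f", packet[pos:pos + 4])
    return address, value


def send_volume(volume: float, host: str = "127.0.0.1", port: int = 12000) -> None:
    """Send one volume reading over UDP (hypothetical host, port, and address)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_float_message("/volume", volume), (host, port))
```

Decoding a captured packet with decode_float_message is one way to confirm that the data itself is well formed before looking at IP addresses, ports, or firewall settings.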


Performance Part 2 - Visual design

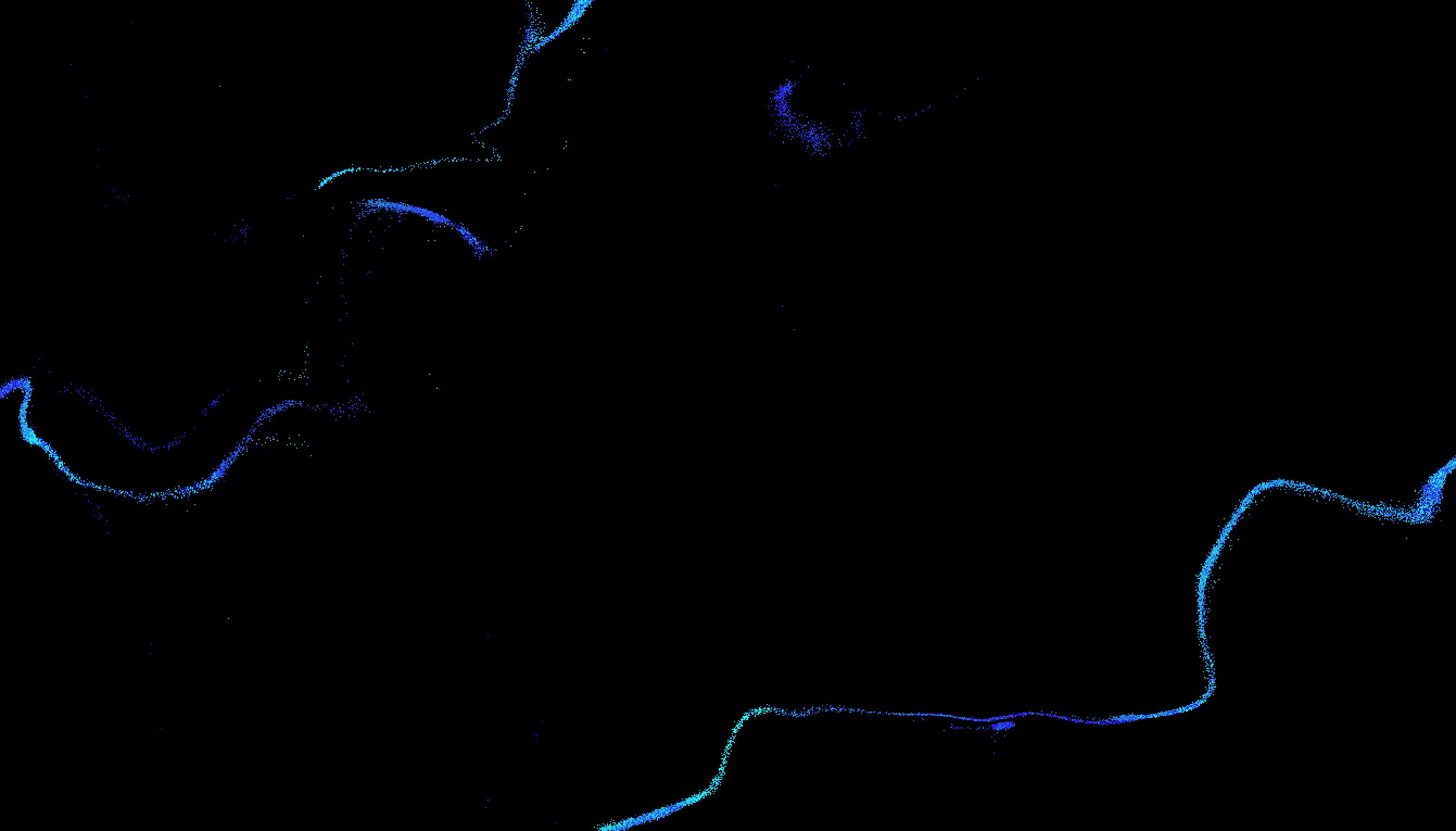

Regarding the visuals, I believe that Part 2 holds the greatest significance in the entire performance. It serves as a bridge between the “daughter’s” struggle with self-awareness in Part 2 and her self-acceptance in Part 3. To portray the “daughter,” we assigned a member of our group to play the role. We used particles to build an abstract portrait that captures the essence of the “daughter’s” image, because the “daughter” not only exists within the story of the film but also represents the many children who experience oppression from their parents. The particle portrait can be seen in Figure 1.

Fig.1 Processing visual video


The square shape in the background was intended to signify the invisible walls that a mother’s expectations create, binding her children. Initially, we planned to use a black-and-white colour scheme for the visual elements. However, after Jules observed our performance on March 27th, 2023, and gave us feedback, we decided to change the background cube to bright red, creating a striking contrast with the black and white blocks.

Finally, we choreographed the movement of the actor portraying the “daughter,” who awakens in a world of her own inner self, surrounded by threads representing her mother’s expectations. She panics and tries to break free, but is instead manipulated like a puppet and becomes entangled in the threads.

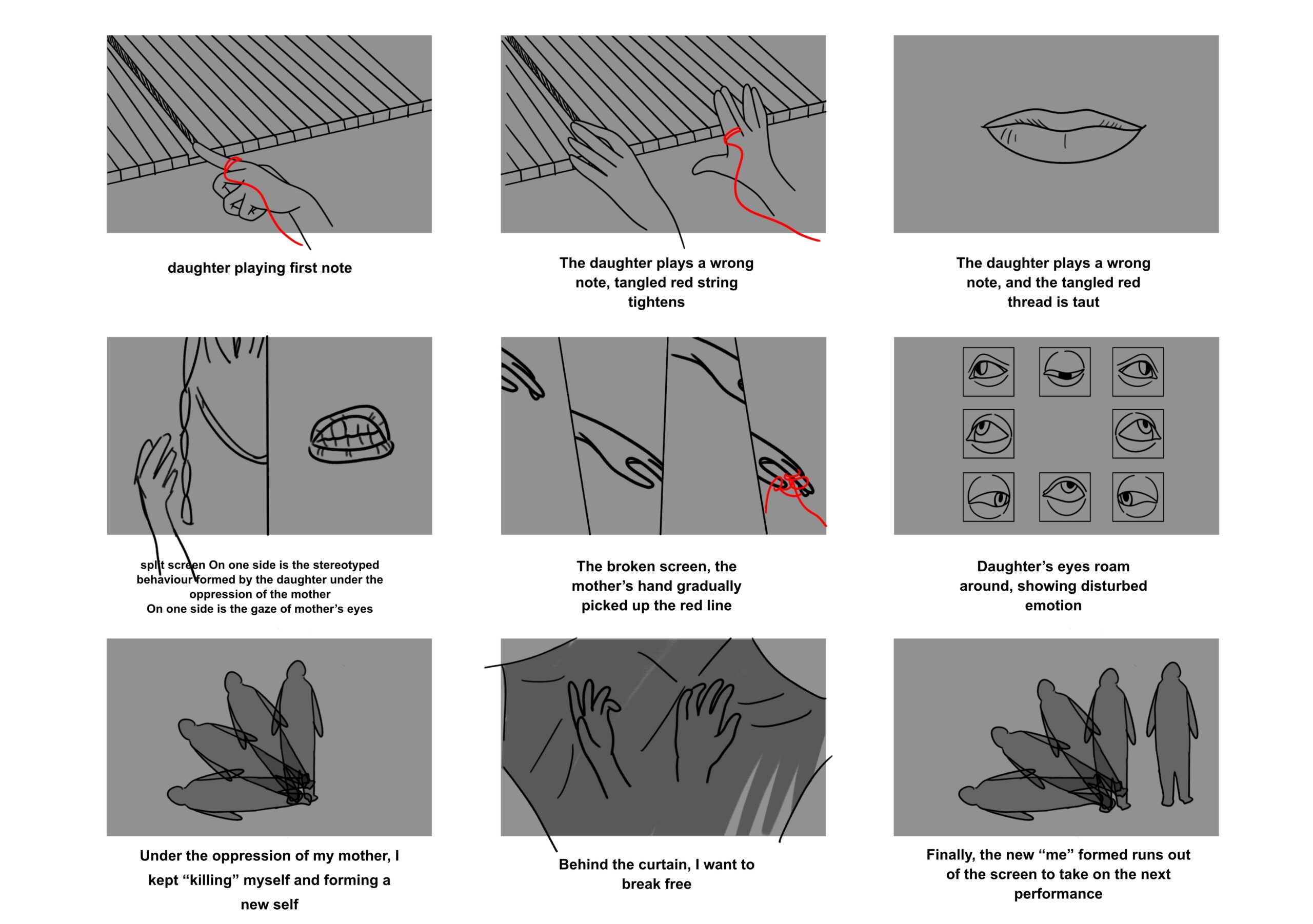

Performance Part 1 - Video design

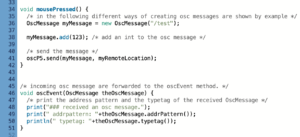

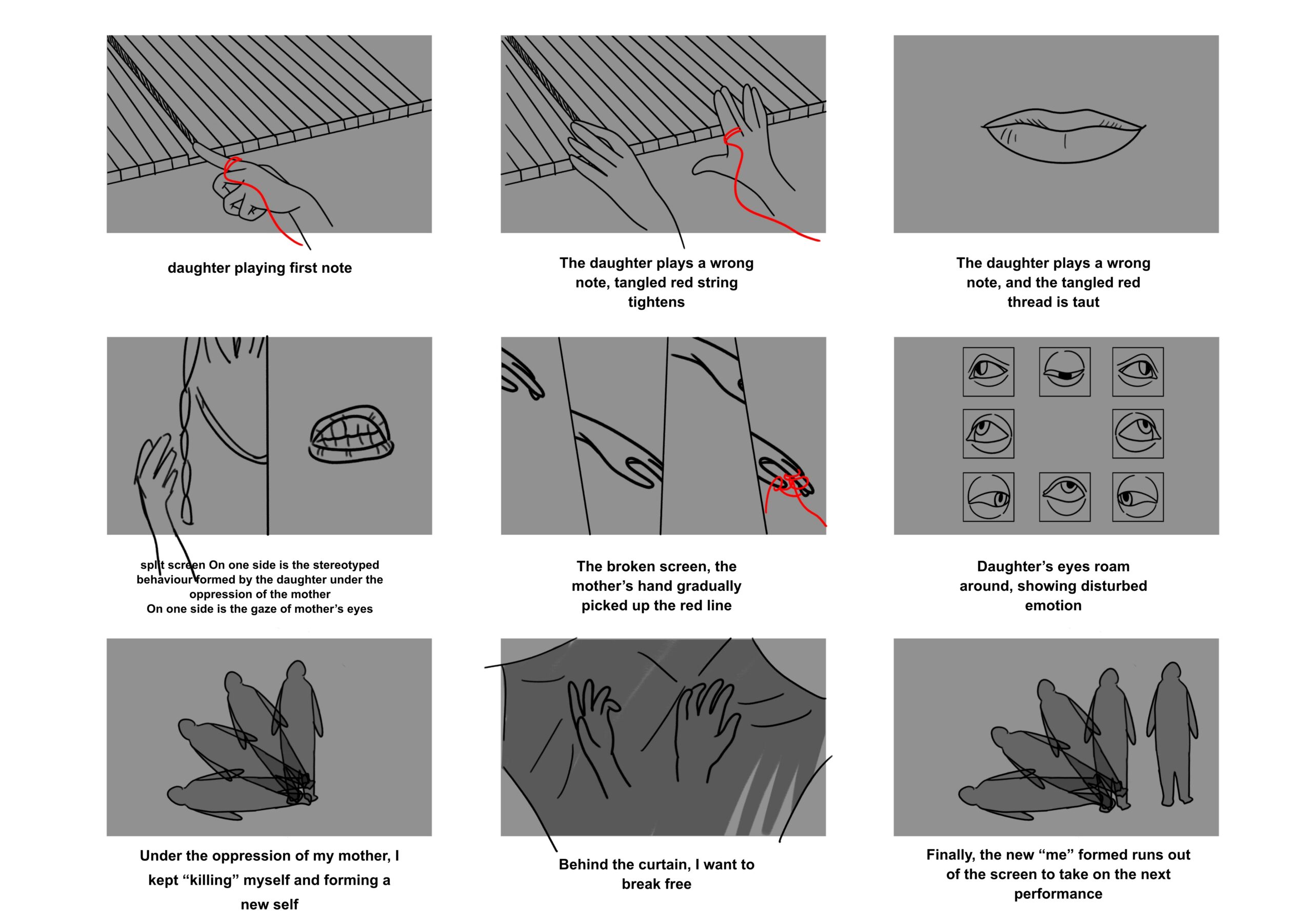

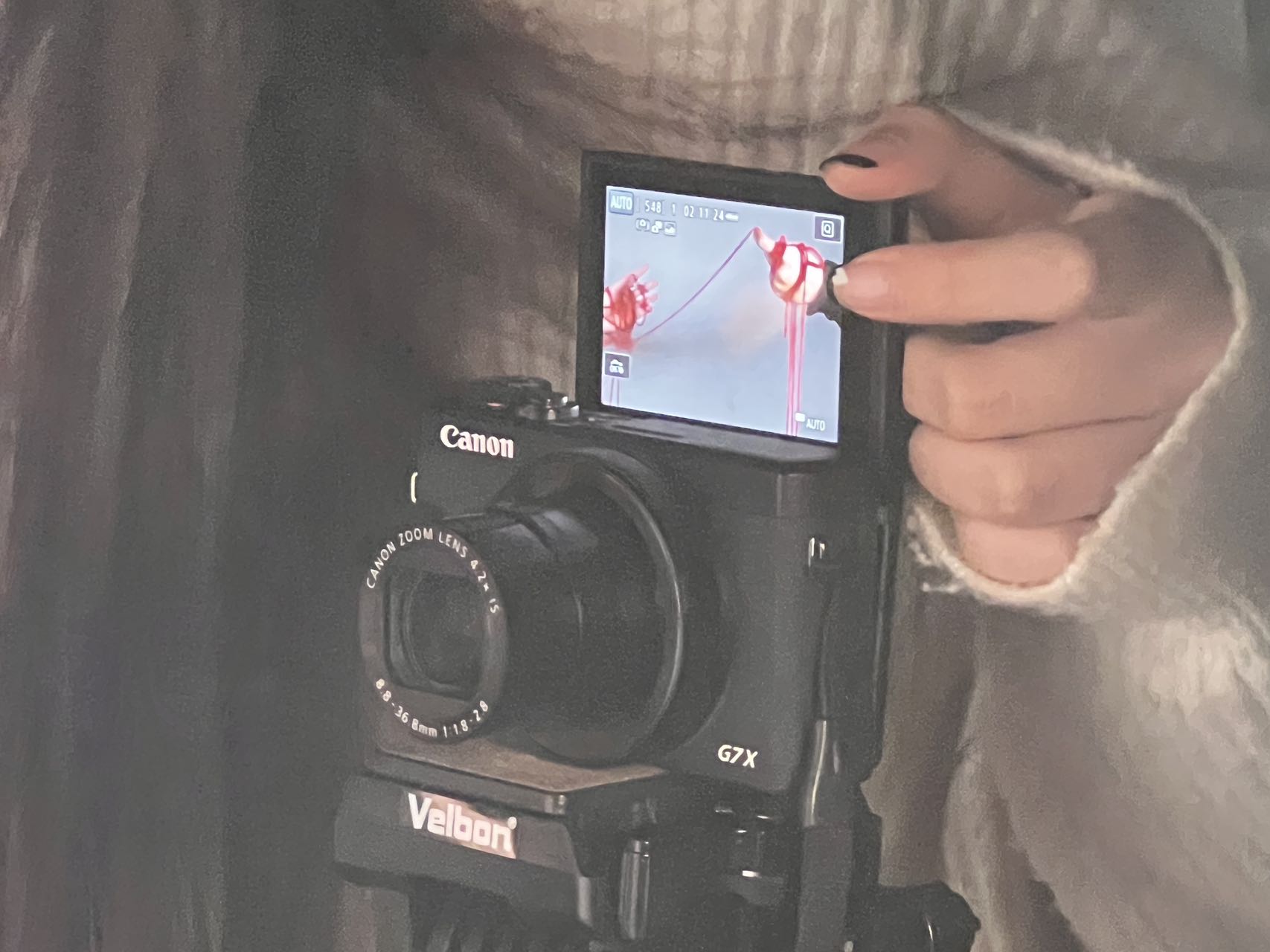

In the first part, the main interaction is the way a live human voice, input in real time, changes the video on screen. The visual design is dominated by the video projected on the curtain. The video tells the plot abstractly through live-action footage: it distils elements such as the mother’s reprimands and facial movements and combines them through abstract editing into a conceptual video that presents the tension and oppression in the mother-daughter relationship. As the first act of the performance, the video introduces the story and sets its emotional tone, so that the audience feels both the oppressive relationship between mother and daughter and the flowing, unrestrained changes in their own emotions.

The video tells the story of a daughter practicing the piano who constantly struggles under her mother’s oppressive scolding and gaze. The script uses metaphors to express abstract emotions, with the “hand” as the main metaphorical element: a red thread tied to a finger serves as the clue and the beginning of the story, followed by a large hand and a small hand pulling against each other, and a hand struggling behind the gauze. These images all express the mother’s constant manipulation and restraint of her daughter. The close-ups of the “mouth” and “eyes” express the mother’s attitude directly, and the close-up perspective heightens the intensity of her feelings.

During filming, the props, costumes, and makeup were kept plain to create a sense of story, and the images of the “mother” and “daughter” were clearly distinguished. In editing, the footage was desaturated from colour to black and white to express the depression and suffocation in the mother-daughter relationship. The edit uses “flashback” shots as transitions to present the mother’s control over her daughter as a subconscious, irregular bondage, and the overlapping of the two scenes expresses the struggle between the daughter’s inner world and reality.

Draft Script

Video Screenshot

Debugging TouchDesigner & Part III

1. On March 29, our group recorded the material needed for Part 3. It will be projected onto a curtain, and the filmed characters will be post-processed into silhouettes that serve as a backdrop for the performers, interact with the actors, and help us tell the story.

2. After debugging TouchDesigner, we achieved linear playback of sound and image in TouchDesigner, resolved its sound input and output problems, and tested real-time sound input and image interaction.

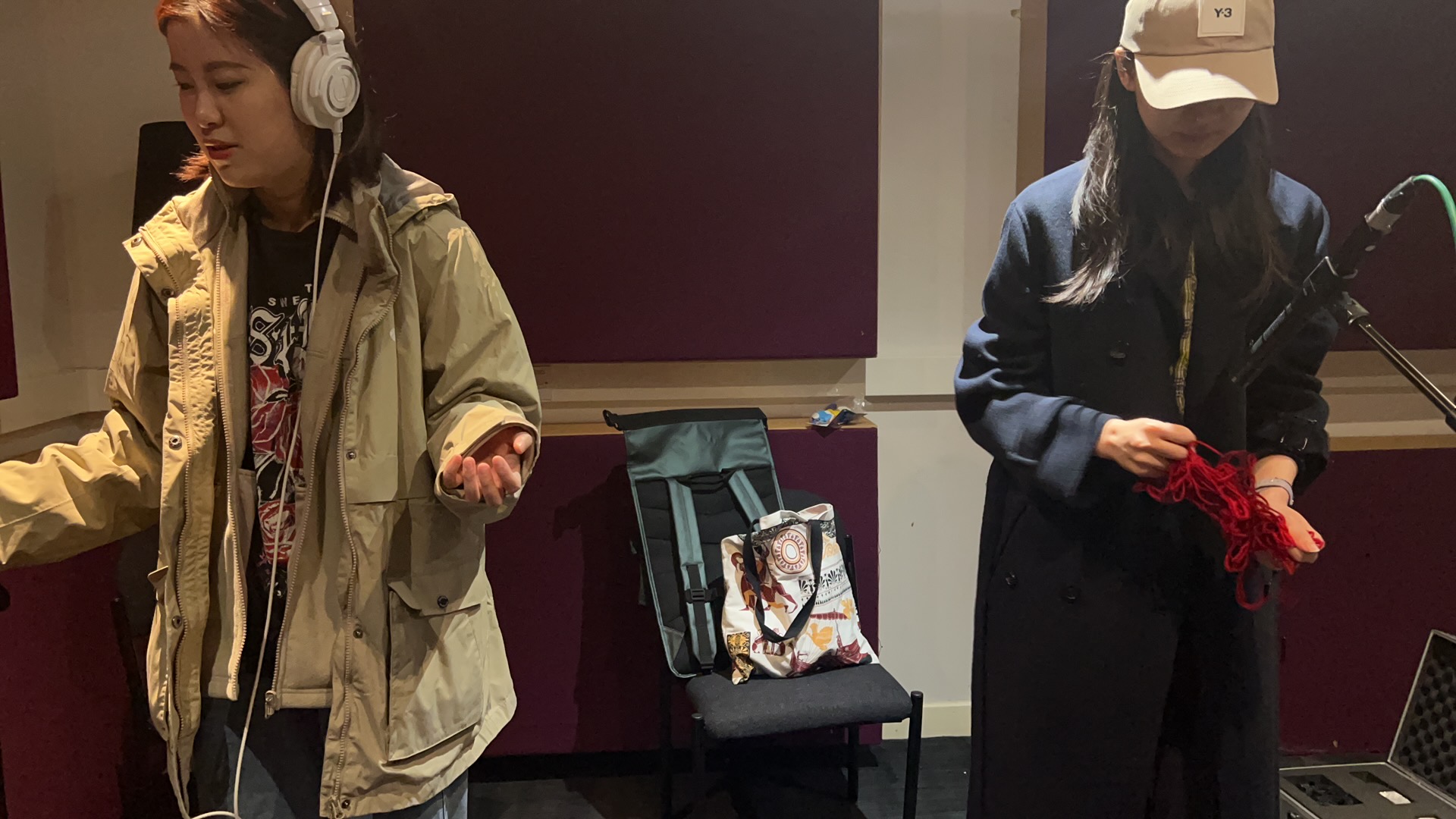

Recording & Crafting for Part 1

In the first part of the project, the sound introduces the scene: it both fits the specific movements of the characters and conveys the depressingly low atmosphere of the images. The recorded sound samples fall into two main groups: the human voice in two phases, multiple whispers and a single chant, and rhythmic sounds with a sense of movement, recorded with the objects used in filming.

Recording

Objects used: balloons, cloth curtains, woollen balls, piano, etc.

Equipment used: Sennheiser – MKH60, Zoom – F8, AKG – C414B XLS Pair

Time: 22nd March afternoon

Below is a selection of the recorded sounds.

Crafting

After sorting through all the recorded sounds, we added filters, reverb, delay, and other effects to bring them more in tune with the narrative.

from cloth curtains

double voices

voice with oVox

Mixing

We wrote the musical parts, adding elements such as synth, violin, bass, and piano to build the plot development of the images.

Interactive sound

In the first stage of the performance, an actor playing the mother feeds an angry, accusatory voice at the daughter on screen in real time. Her voice affects the picture and gives it a distorted effect.
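The voice-driven distortion itself was built in TouchDesigner, so the following Python sketch only illustrates one possible mapping, under our own assumptions: the mother’s voice is reduced to a level between 0 and 1 (for example an RMS value), and that level scales a sine-shaped sideways shift of each image row. The image is a plain list of pixel rows, and `row_offsets`, `distort`, `max_shift`, `frequency`, and `phase` are hypothetical names.

```python
import math


def row_offsets(height, amount, max_shift=8, frequency=0.3, phase=0.0):
    """Horizontal shift in pixels for each row; all zero when amount is zero."""
    amount = min(max(amount, 0.0), 1.0)  # clamp the voice level to 0..1
    return [round(amount * max_shift * math.sin(y * frequency + phase)) for y in range(height)]


def distort(image, amount, **kwargs):
    """Shift each row sideways by its offset, wrapping pixels around the edge."""
    offsets = row_offsets(len(image), amount, **kwargs)
    out = []
    for row, shift in zip(image, offsets):
        k = shift % len(row) if row else 0  # positive shifts move pixels to the right
        out.append(row[-k:] + row[:-k] if k else list(row))
    return out
```

A silent room leaves the picture untouched, while a louder voice bends it further; animating `phase` over time would make the distortion ripple.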

Meeting 23/3/2023

Kinect and TD test

Our group started working on the show in three main directions. First, the second part of the show involves motion capture, so we continued the combined Kinect and TD test. The first-generation Kinect we had rented earlier could not perform motion capture because of system problems, so this week we bought a second-generation Kinect and completed an initial motion capture.

Sound creation

Film editing

We made a preliminary edit of the video shot last week, which will be used in the first part of our performance. The interaction uses live sound recorded in real time, and the changes to the picture are produced in TD.

Meeting 19/3/2023

The first part of our performance: an interactive experimental film

Interactive form: sound controls the screen to produce distortion and other effects

Software: TouchDesigner

Brief: We use the red thread as a metaphor for the relationship between the daughter and the mother in the film; it runs through the entire first part of the experimental images.

Storyboard

19/3/2023

We filmed on location, and here are the images we took. This completed the video content for the first part of the show; next we needed to test the interaction between TD, the video, and sound.

Kinect test

17/3/2023

The second part of our performance uses motion capture, so we tested the Kinect. We plan to combine the Kinect and TD to capture the actor’s motion live during the performance. However, when we tested on Windows, we found that the Kinect image could not be displayed, so further testing is needed. If the Kinect turns out to be unusable, we will have to use an ordinary camera and rely on stage lighting to achieve motion capture.

Meeting-4

We considered the feedback’s suggestions to add visual presentation and projections, as well as the restrictions on using the site.

The performance format was changed from three sessions to one, and our design now focuses on the interaction between sound and motion.

Visual content

- Technical level: Processing and TouchDesigner, abstract particle content

- Narrative content: experimental video

Possible elements

- broken mirror

- white curtain

- wire

- bell

We plan to change the final performance format: we reduced the originally planned three sessions to one, and this week we started to think about and make the initial visual effects. We first experimented with Processing.

Experimental video reference

Meeting 13/2/2023

At the end of the morning tutorial, we had a short meeting to discuss the projections the tutor had suggested we include in each session. At the same time, we started to think about the venue and the effect we wanted to achieve with the sound overlay, which may require several attempts later in the work.