Personal content integration:

Category: AIW – Blog

Final Reflections – Shruti

My role

I was one of the digital artists in the group and performed the first part. I helped finalize the concept and participated in designing ‘our stage’, among other things, but mainly focused on the performative visuals, moving from nature to a polluted world. I also played a small role in making sure we had projectors during our practice sessions and for the performance.

Performance Day

The Max patch I made, which I discussed in a previous blog post, had all the effects I wanted to use. The three folders contain the three different visual aids I needed: nature, pollution, and glitch effects. All of these worked well during rehearsal and practice sessions.

But twenty minutes before we had to perform, Vizzie (Max) crashed three times. I was able to restart it twice, but it kept crashing, and by this time the audience had already gathered and we were running a few minutes late.

I was very confused as to why it was crashing now, when I had been working with it the same way for the past week. Then I realized: during the rehearsal just before the final performance, I had added a new video, a rather large file, to one of the folders, and Vizzie did not like that. I quickly removed that video, reopened the software and added the folders again. The performance finally began.

Our first show may not have been our best: lots of nerves and too many errors. I was so worried about moving between visuals that I missed out on the effects and glitches I wanted to add.

After my part, I was supposed to project garbage floating around on the ground as a way of directing the audience to the next section. First, the iPad failed to open, then the Pico projector did not work, even though both had functioned well during rehearsal.

We received some good constructive criticism and encouragement, and we went again.

As Jules said, the second time was much better! I was able to create more effects and make the visuals more chaotic, and thanks to Vibha, who added the sunrise at the end of her performance, we synced up and completed the loop. No garbage was projected onto the floor, but that was a lesson learned.

I like to call the above screengrabs ‘Shades of Shruti’, as I performed the visuals sitting behind screen 1. The shades of the visuals as well as my nervousness are all too clear.

Reflection

This entire experience of collaborating with these talented individuals and creating this performance was a fun journey. I learned Vizzie (though mostly how it does not work) and how to use a MIDI controller, but above all I learned how to work with sound designers. I will miss our design discussions.

One of the challenges we faced was synchronizing audio and video. The sound designers needed visuals to create the sounds, and we as digital designers found it hard to start something from scratch without a clear brief. I guess that is a learning as well: we will not always have a clear indication of what is needed before we begin working on something. It is also important to stop ideating at some point and begin creating, which we started later than we should have. Nonetheless, the performance had a narrative, a start and an end, but it definitely has a lot of scope for improvement and additions.

I feel the future of this project could be an extended version where the performance runs in a continuous loop and the audience has to move in a circle to view it. Both the visuals and the sounds could be more immersive, making the audience feel part of the performance; they could even trigger certain outcomes.

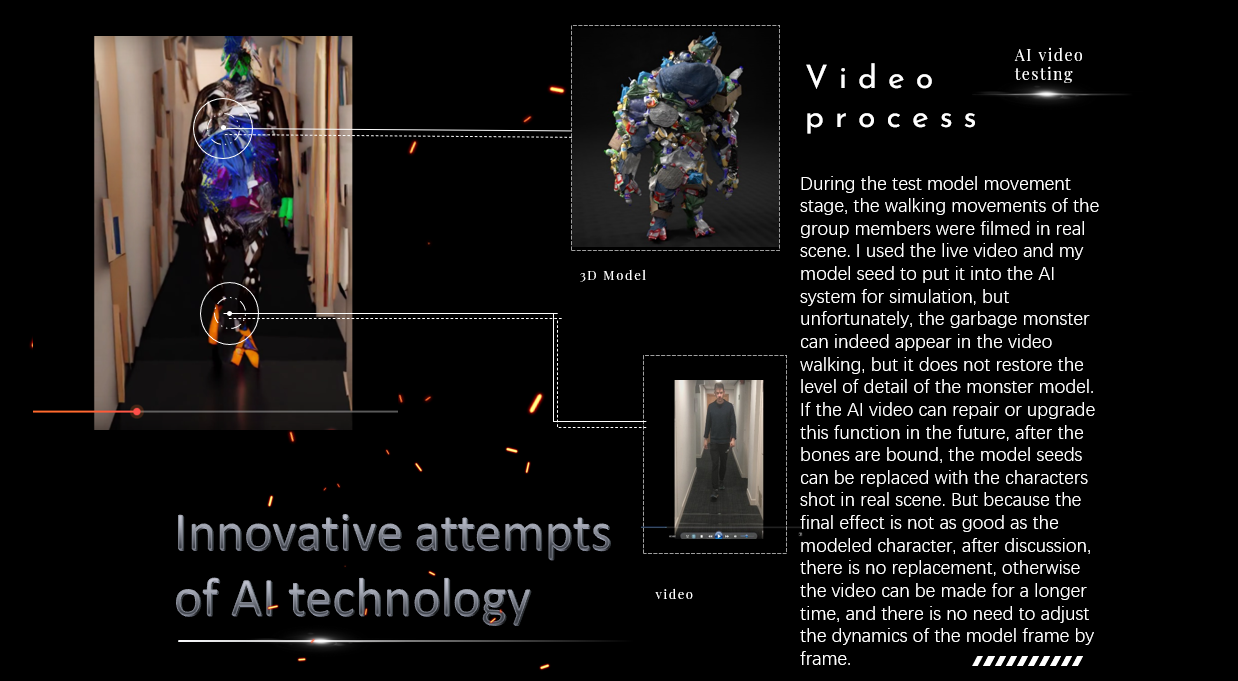

Monster video AI innovation attempt

This is an attempt at 3D video. It uses 3D model seeds that I made myself, together with videos shot in real scenes, to generate video through an AI algorithm. This ensures that the video prototypes and 3D model prototypes in the database are my own, reducing the copyright problems that AI generation may cause.

I speculate that this will become a trend in AI: ensuring that the original database models are provided by the author.

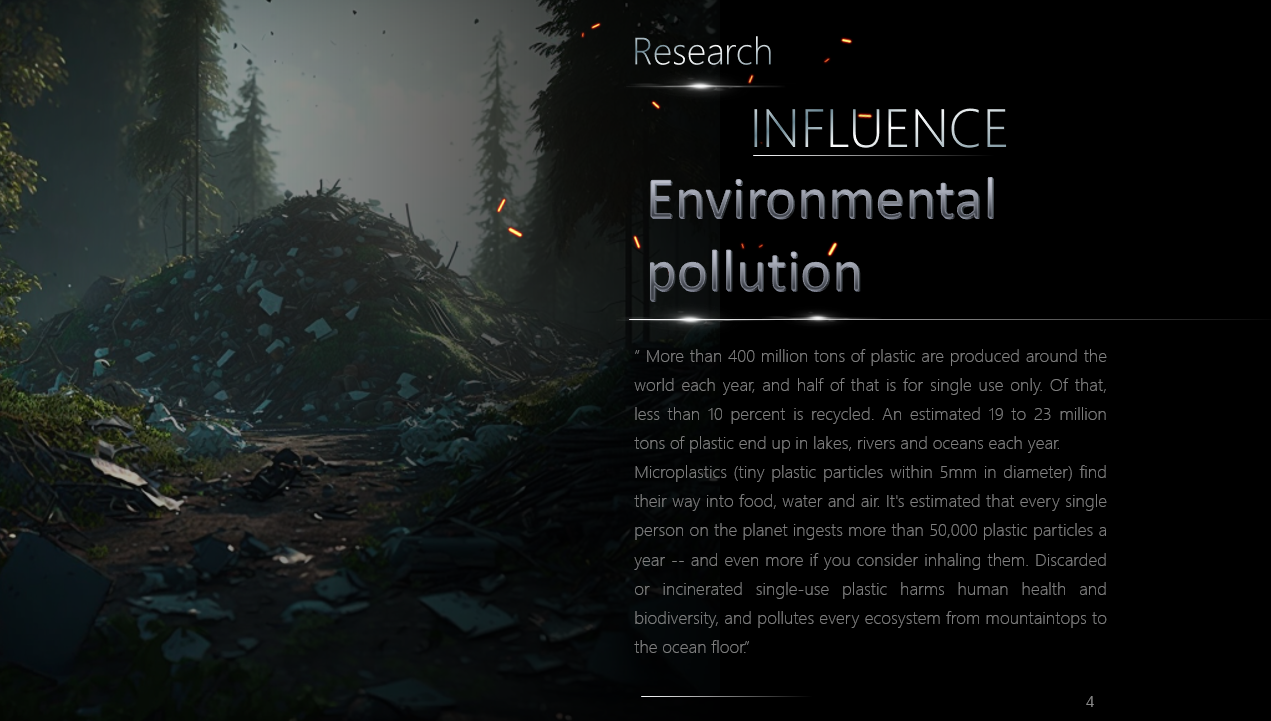

AIW – Initial brochure production

I produced the early version of the electronic brochure, summarizing the team's production process and possible obstacles based on team members' personal write-ups and pictures. I also added some missing content, such as environment-related research reports. This was only the pre-production version; after discussion, we found the content too tedious, and Vibha then remade it.

Final Reflections – Owen

Please see the below PDF for Owen’s reflections on this project as a whole.

Creating videos of garbage

Owen and I (Vibha) created some videos of garbage on our own using items we found in the free store. These included some furry wires, stopwatches and some sheets of wrap. I also bought some plastic bags. We used a DSLR camera with set lighting and a black screen to film most of our videos.

We weren’t too sure about the precise content of the videos and decided to brainstorm and experiment, and ended up filming a few interesting takes.

Our ‘film set’.

I dropped some garbage bags from a height to create the illusion of jellyfish floating in the sky.

We also created a little set with garbage being dumped into a pile, to add to the buildup of the garbage monster.

We made miniature garbage monsters using the furry wires and dangled the stopwatches from the wire to shoot a video of a pendulum-like motion, indicating time running out.

Here are the extra videos we shot:

Test runs prior to performance

We had multiple test runs to ensure that we had sufficient practice for the performance. We discussed what sort of projector to use and decided on a short-throw projector, because we had access to back-projection screens, which prevented the audience's shadows from falling on the screen. It also worked well given our space constraints. We also booked a documentation webcam, MIDI controllers and speakers.

The first trial run was on Friday, 31st March, in Studio 3, Alison House:

We used the short-throw projector to superimpose Xu Yi’s garbage monster onto part 2 of the performance to check for issues. We found that a black background worked best for the garbage monster. We also split Xu Yi’s videos into segments so that we could use them across the performance to show the garbage monster forming. We also checked how the sounds worked with the videos, and decided we needed more practice.

The second trial run was on Monday, 3rd April, at Alison House:

We checked whether the screens were working well and set up our projectors to see how we needed to place the tech during the performance. We ran into a few problems:

1. The Pico and short-throw projectors didn’t work well together; the intensity of the projections varied too much to fix. We decided on two short-throw projectors instead.

2. The layout of the walkthrough had changed multiple times because of the availability of space, so we spoke to Jules, who suggested a number of interesting ways to use the space. Our issue now was how to direct the viewers from panel 1 to panel 2. We decided to use an arrow at the end of Shruti’s performance to point in the direction the audience needed to walk to reach panel 2. Also, while playing Xu Yi’s garbage monster videos with the Pico projector, we realised we could project a pathway onto the ground to lead the viewers into our space.

3. We performed our visuals with sounds twice, but we still weren’t perfect, so we decided to practice again.

4. Switching from one patch to another for part 2 of the performance seemed a little problematic, so I (Vibha) combined two patches into a single patch. Unfortunately, when I added the third patch, Vizzie crashed, so I stuck to two patches instead of three. I also found simple ways of transitioning within patches for the performance.

The last trial run was on Wednesday, 5th April:

We tested the sounds and visuals by connecting our systems to monitors. The transition between patches followed a different route here, which seemed like a minor issue, but apart from that we felt confident for our performance.

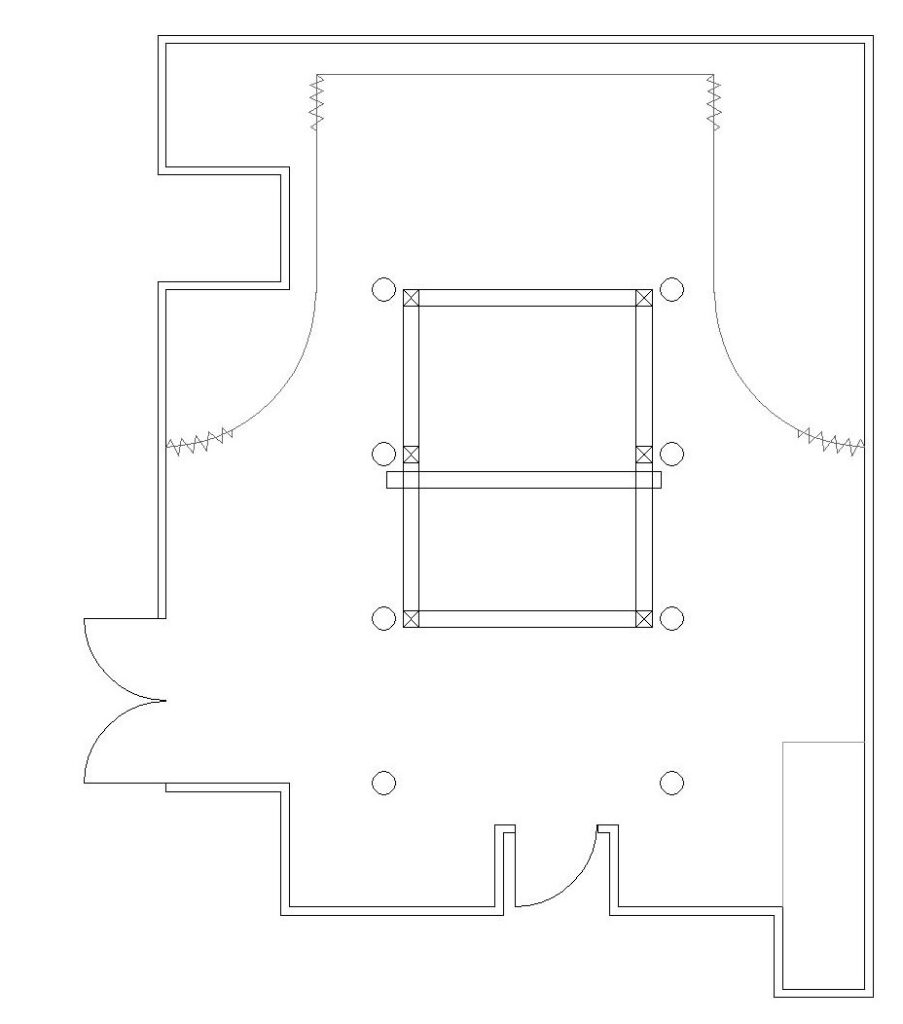

Our Stage

Initially, we had thought of using West Court at ECA to showcase our performance, but we soon changed it to the Atrium in Alison House. The main reason was the existing truss, which could be used to hang screens and partitions.

Below is the plan of the atrium; please note it is NOT to scale.

We decided to use the central space and tie the screens to the truss. We weren’t able to establish as clear a walk-around within the room as planned, but the audience does move from screen 1 to screen 2 as they move from my (Shruti’s) part to Vibha and Yi Xu’s part.

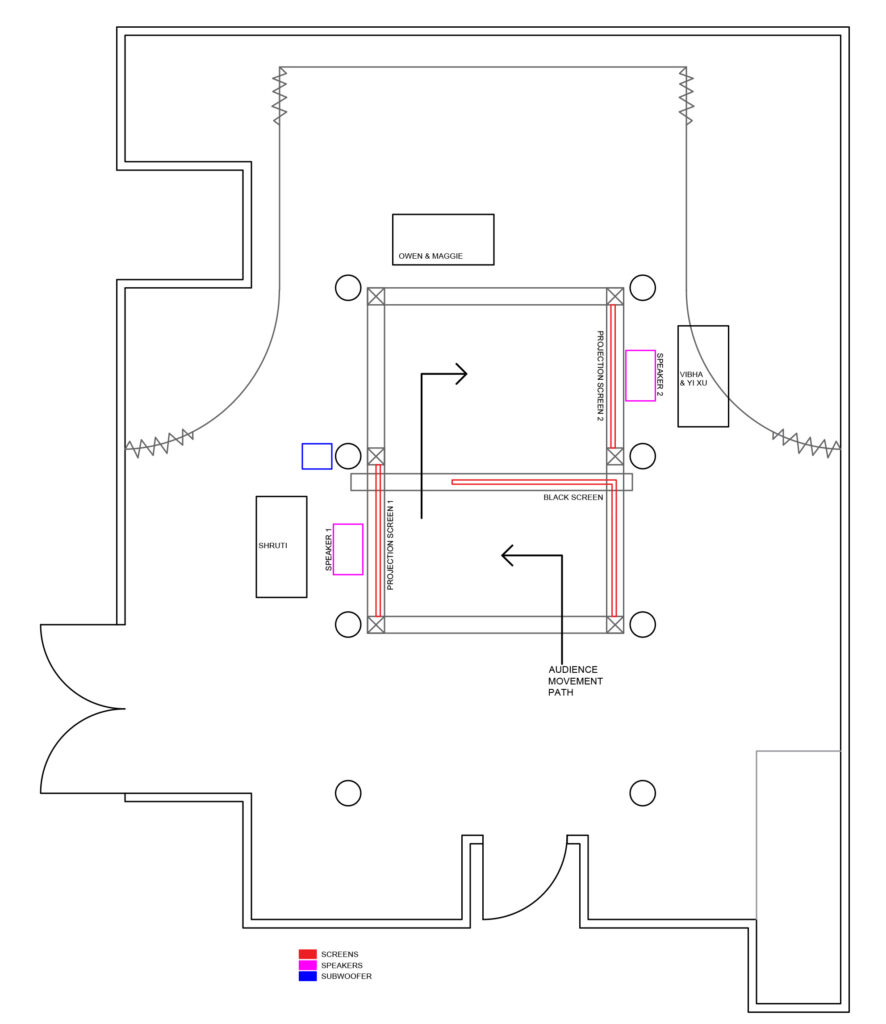

Below is the stage we designed, showing the various places we positioned ourselves during the performance.

As shown, the audience enters the atrium and first notices a black screen in front, followed by the screen to the left. This is where part 1 is performed. The audience then moves through the gap and faces screen 2, to the right, where the second half is performed. We were able to create an S-shaped movement pattern, but a circle or loop would have been more interesting, as it would relate to our narrative and give the audience a clear entrance/exit.

The digital designers sat behind their respective screens and the sound designers were in the corner of the S-shape. This way they could see both the screens and the audience.

Regarding the speaker setup, we decided to place one Mackie SRM450 on the ground, tilted up 45 degrees, behind each of the two screens. This seemed like an obvious choice, since the audience would be facing one of the screens throughout the performance. This placement allowed us to have sound coming only from the speaker behind screen #1 during the first half of the performance; then, when it was time for the audience to move to the second viewing area, we panned all of the sound to the speaker behind screen #2. This ushered the audience along effectively and was a creative use of a simple stereo setup.
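The hand-off from screen #1 to screen #2 is, in effect, a pan across a two-speaker stereo pair. As a hedged illustration only, the Python sketch below uses a constant-power (sine/cosine) pan law, which is one common way to move sound between two speakers without a dip in loudness halfway through. The blog doesn't say which pan law was used in Ableton, so the law, the function name and the five-step sweep are all assumptions.

```python
import math


def constant_power_pan(position: float) -> tuple[float, float]:
    """Return (gain_screen1, gain_screen2) for a pan position in [0, 1].

    Assumed constant-power law: 0.0 sends everything to the speaker behind
    screen #1, 1.0 sends everything to the speaker behind screen #2.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0 and 1")
    theta = position * math.pi / 2  # map [0, 1] onto a quarter circle
    return math.cos(theta), math.sin(theta)


if __name__ == "__main__":
    # Hypothetical five-step sweep from screen #1 to screen #2.
    for step in range(5):
        pos = step / 4
        g1, g2 = constant_power_pan(pos)
        print(f"pos={pos:.2f}  screen1={g1:.3f}  screen2={g2:.3f}  "
              f"power={g1 ** 2 + g2 ** 2:.3f}")
```

Under this law both gains are about 0.707 at the midpoint, so the summed power stays at 1.0 across the sweep; a simple linear crossfade would instead drop to 0.5 power in the middle.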

We also placed a Genelec 7060 subwoofer at about the middle-left of the performance space. Since the low frequencies emitted by subwoofers are omnidirectional, we knew it didn’t matter precisely where the subwoofer was located or which direction it faced – we just put it roughly in the center of the room in order to emit an added ‘thump’, and it worked brilliantly!

Mengru and Vibha did some set design, creating a boundary with plastic waste and adding some small lights, which enhanced the stage.

25.03.23 rehearsal

Prep for Rehearsal

-I (Owen) am thinking about ways in which an Ableton Session View can be ‘performed’ live.

-Using MIDI controllers that have rotary dials and faders, it is possible to adjust ANY parameter inside Ableton. But which ones??? That is the important question.

-What I am going to try tomorrow during our rehearsal is using the Evolution U-Control. I will basically have 8 faders, each of which controls the level of a track in Ableton – so I can mix different ‘instruments’ together in order to progress the soundtrack. I can also select different ‘scenes’ in Session View (i.e. rows of MIDI clips) using the << and >> buttons. Then, I have plenty of dials (24 in total) left to manipulate various effects (effects TBD, but probably some kind of distortion for starters).

-Pictured below: Evolution U-Control (silver one in my lap); Faderfox FT3 and Korg nanoKontrol2 (black and silver ones on desk); Ableton Live Session View (on my laptop)


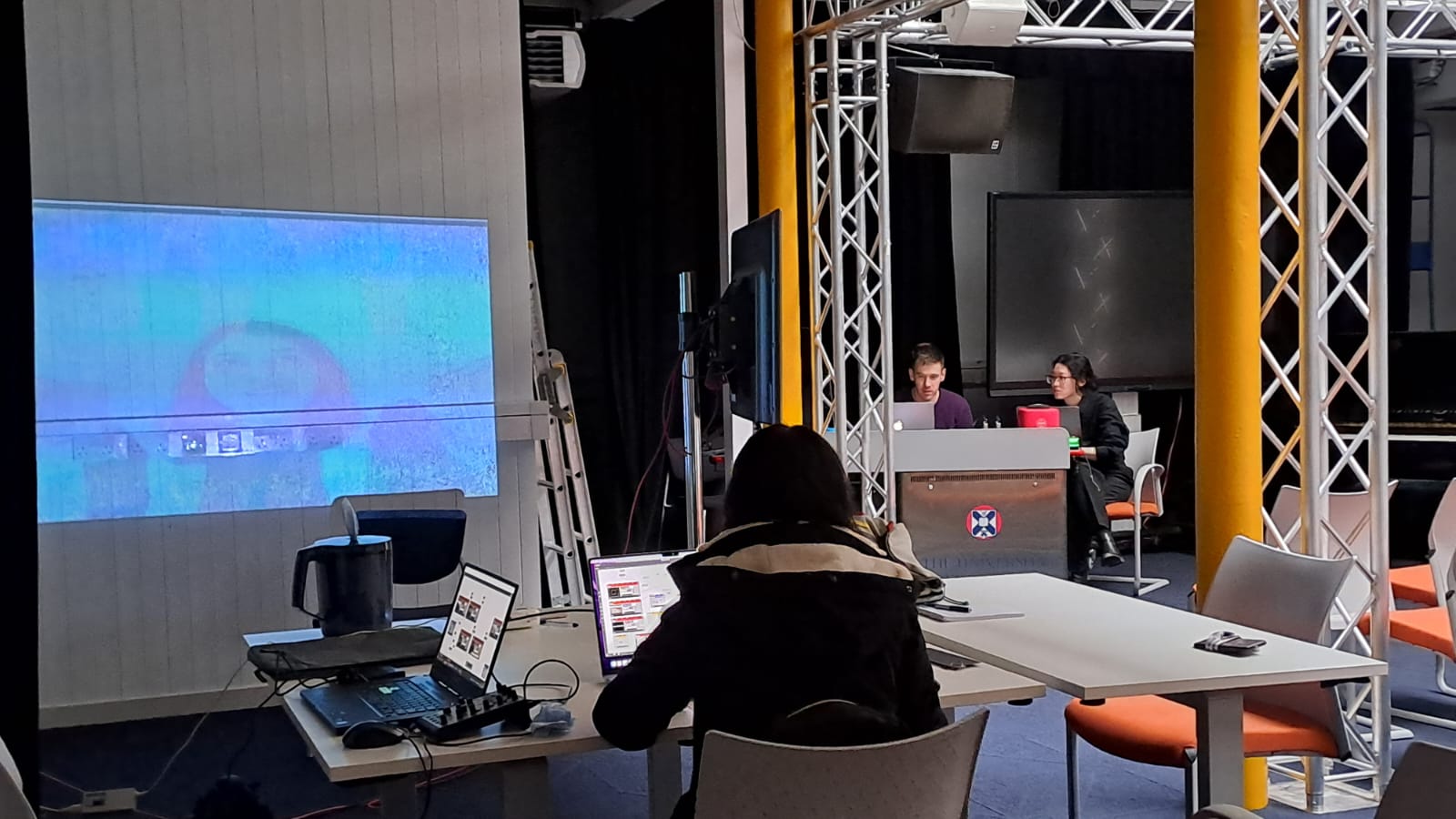
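As a rough illustration of the control scheme planned above (eight faders for track levels, << and >> buttons for scene selection), here is a minimal Python sketch. It is not how Ableton handles MIDI mapping internally: the CC numbers are hypothetical placeholders rather than the U-Control's actual assignments, the function names are invented for illustration, and levels are simply normalised from MIDI's 7-bit range (0 to 127) to 0.0 to 1.0.

```python
# Hypothetical CC numbers for the eight faders. The Evolution U-Control's real
# assignments depend on its preset and are NOT taken from this blog.
FADER_CCS = [16, 17, 18, 19, 20, 21, 22, 23]


def handle_fader(cc: int, value: int, levels: list[float]) -> None:
    """Set the level (0.0 to 1.0) of the track controlled by fader `cc`.

    MIDI control-change values are 7-bit (0 to 127). Messages from controls
    not in FADER_CCS, or with out-of-range values, are ignored.
    """
    if cc in FADER_CCS and 0 <= value <= 127:
        levels[FADER_CCS.index(cc)] = value / 127


def step_scene(current: int, direction: int, num_scenes: int) -> int:
    """Move one scene back (-1, the << button) or forward (+1, the >> button),
    staying within the available rows of clips."""
    return max(0, min(num_scenes - 1, current + direction))


if __name__ == "__main__":
    levels = [0.0] * len(FADER_CCS)  # one level per track, all faders down
    handle_fader(16, 127, levels)    # fader 1 fully up
    handle_fader(18, 64, levels)     # fader 3 roughly halfway
    print([round(level, 2) for level in levels])

    scene = 0
    scene = step_scene(scene, +1, num_scenes=4)  # >> pressed
    scene = step_scene(scene, -1, num_scenes=4)  # << pressed
    scene = step_scene(scene, -1, num_scenes=4)  # << again, already at the first scene
    print(scene)
```

Clamping the scene index means repeated presses of << or >> at either end of Session View simply stay on the first or last scene instead of wrapping around.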

Rehearsal

We got together in the Atrium to begin practicing the actual performance. Vibha, Shruti and Yi worked on the visuals, while Maggie and Owen worked on the sound. The main takeaway is that we need to continue practicing, because you don’t really find out what doesn’t work until you fully rehearse. See pics from the rehearsal below.

Practicing projections on wall

Vibha working on her Vizzie patch

Vibha, Owen and Mengru practicing

22.03.23 Putting together music

Mengru and I (Owen) are writing the music for the performance. It is going to be a combination of sound design and music.

Today (22.03.23), I continued my work on the soundtrack. Using ONLY samples that Mengru and I recorded (hitting/squeezing plastic and metal bottles/cans etc.) and some light processing (resonator, reverb, boosting sub frequencies), I came up with the few bars of music below. I was pleasantly surprised with the result – the sounds from an empty plastic water bottle in particular were very useful in terms of adding texture and movement to the percussion layer.

Perhaps it would be beneficial for each of us to be assigned specific bars of music out of the entire timeline; or perhaps we should write the music for all bars together with each of us taking a specific component of the music (e.g. percussive vs melodic instruments). We will decide soon!