TouchDesigner

Background

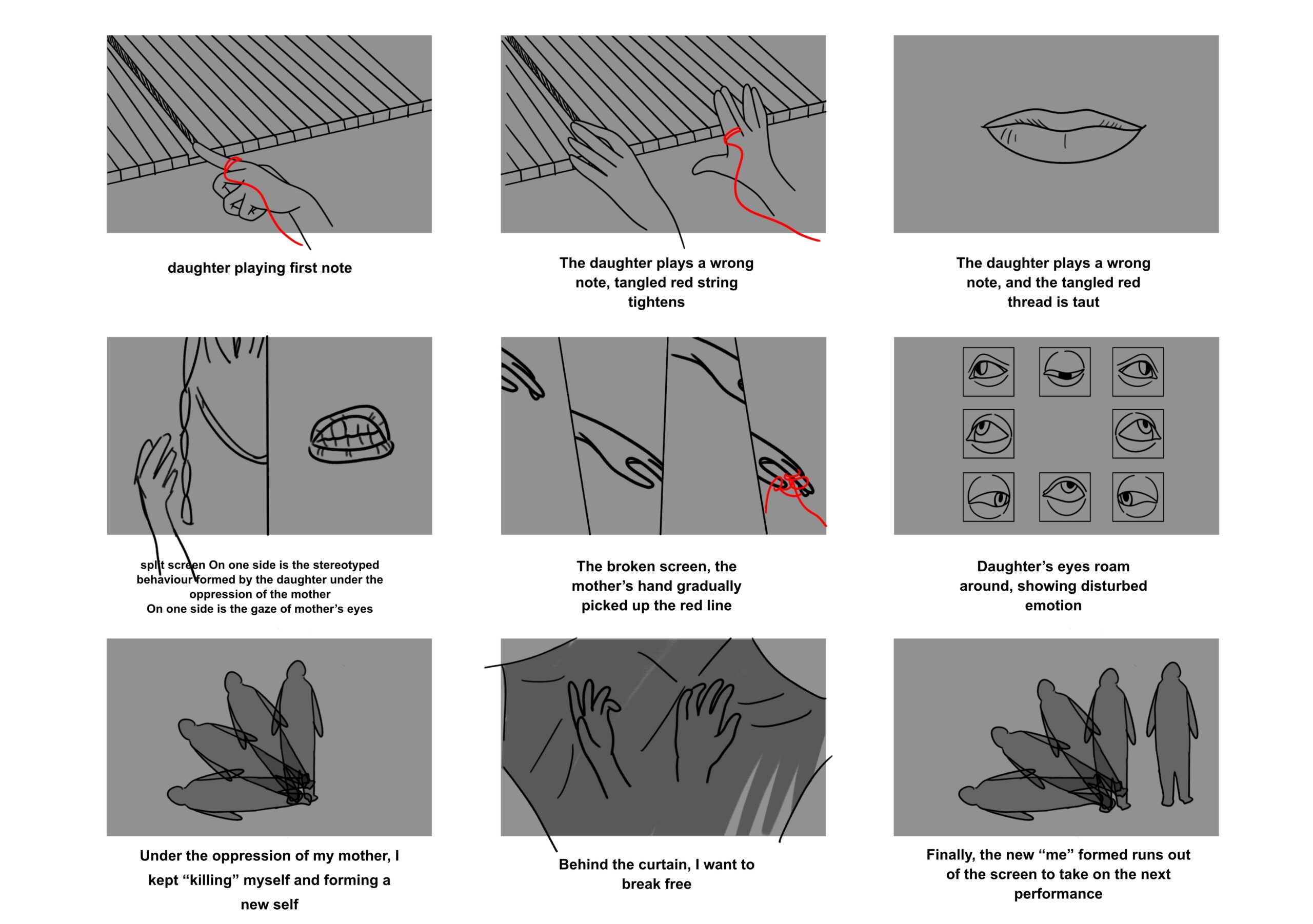

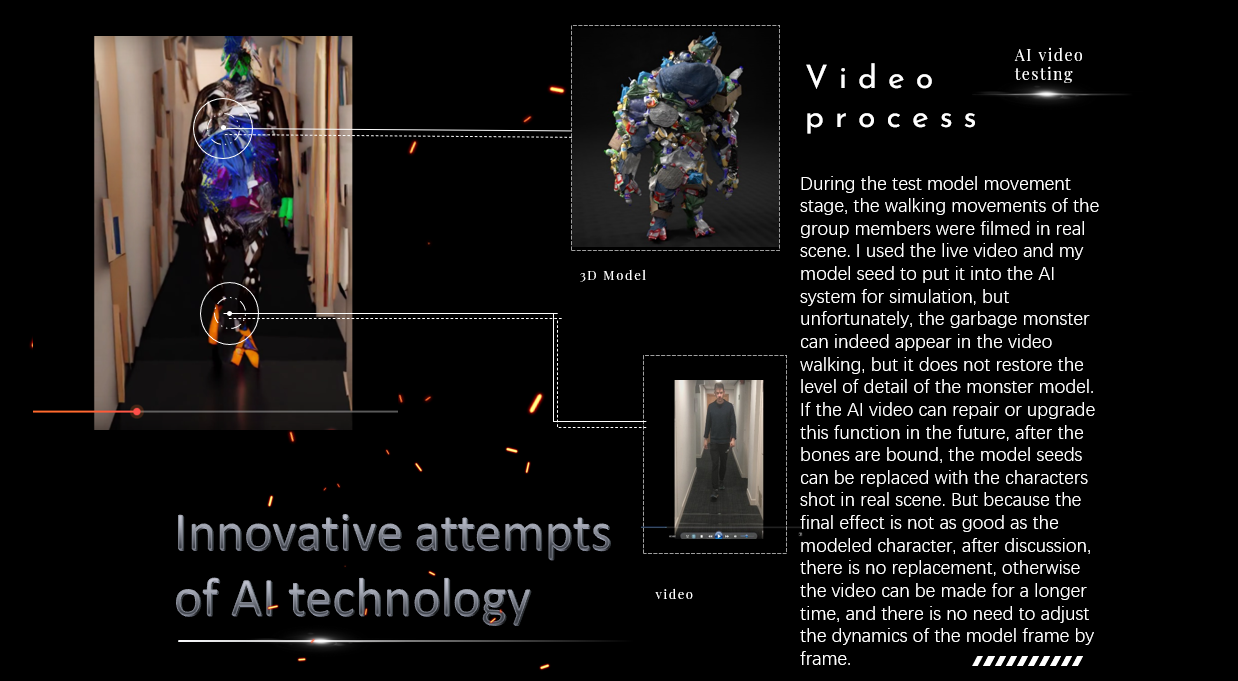

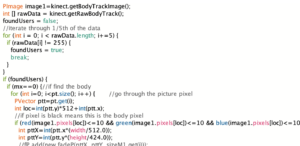

In Part 1, we wanted to present the opening of the Autumn Sonata story to the audience clearly, following the development of the storyline. The team believed that a short experimental film would help us tell the story, so we shot one to show the girl's fear and anxiety about piano practice under the excessive expectations of her pianist mother. After filming and editing the short film, we imported the video into TouchDesigner and followed online tutorials to create a garbled, glitch-like effect on screen that responds to changes in sound. The result distorts the images of the daughter in the video as the sound changes, conveying her anxiety under her mother's excessive expectations.

Process

We found several video and text tutorials online; the main references were:

https://www.youtube.com/watch?v=IFegKFjtj80

https://www.youtube.com/watch?v=rvAB3Rzh7CI

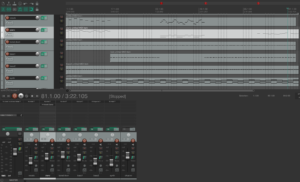

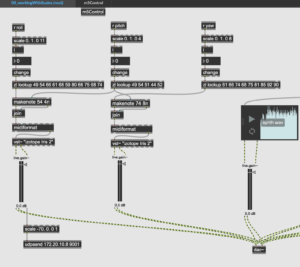

The network consists of three parts: Sound Analysis, Video Changes and Background Music.

1) Sound Analysis

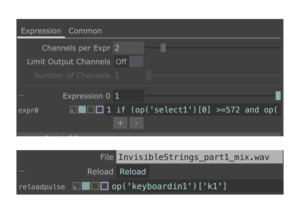

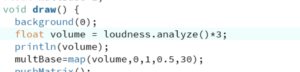

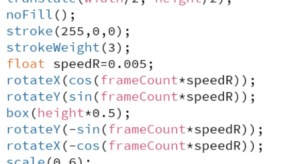

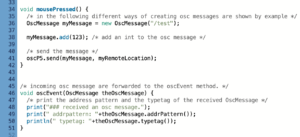

We used the Audio Device In CHOP as the sound input, which differs from the video tutorials we followed. We then analyzed the relevant sound parameters as the tutorials describe.
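In TouchDesigner this kind of analysis is normally built from operators in the network rather than written as a script. Purely to illustrate the idea, here is a standalone Python sketch, assuming the incoming audio arrives as blocks of float samples between -1 and 1: it measures the loudness (RMS) of each block, maps it to a 0 to 1 distortion amount, and smooths that amount so the image does not flicker. The function names and the `threshold`, `ceiling` and `factor` values are hypothetical, not taken from the project.

```python
import math


def rms(samples):
    """Root-mean-square level of one block of audio samples (floats in -1..1)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def glitch_amount(level, threshold=0.05, ceiling=0.5):
    """Map an RMS level to a 0..1 distortion amount.

    Levels at or below `threshold` give no distortion; levels at or above
    `ceiling` give full distortion. Both values are hypothetical and would
    need tuning against the real microphone input.
    """
    if level <= threshold:
        return 0.0
    return min(1.0, (level - threshold) / (ceiling - threshold))


class Smoother:
    """One-pole low-pass filter so the amount changes gradually between blocks."""

    def __init__(self, factor=0.8):
        self.factor = factor  # closer to 1.0 = slower, smoother response
        self.value = 0.0

    def update(self, x):
        self.value = self.factor * self.value + (1 - self.factor) * x
        return self.value
```

Per audio block, the chain would be `smoother.update(glitch_amount(rms(block)))`: quiet rooms leave the image intact, while louder sound pushes the amount towards 1.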

2) Video Changes

We split the video processing into two identical chains: one for the main video and one for the daughter's separate video within the mother-daughter frame. This let us later overlay the processed daughter video onto the right side of the original video, so that the daughter's part of the frame could change independently.
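The split-and-overlay idea can be illustrated outside TouchDesigner, where it would be done with TOP operators. The following Python sketch is a toy under stated assumptions, not the project's network: it treats a frame as a list of pixel rows, applies a crude row-shifting distortion as a stand-in for the tutorials' effect, and then keeps the left part of the original frame while taking every column from a hypothetical `split` index onward from the processed copy.

```python
def shift_rows(frame, amount, max_shift=8, every=2):
    """Crude stand-in 'glitch': shift every `every`-th row sideways, wrapping.

    `frame` is a list of rows, each a list of pixel values; `amount` is 0..1.
    Returns a new frame and leaves the input unchanged.
    """
    offset = int(round(amount * max_shift))
    out = []
    for y, row in enumerate(frame):
        n = len(row)
        if n and offset and y % every == 0:
            k = offset % n
            out.append(row[n - k:] + row[:n - k])  # rotate right by k pixels
        else:
            out.append(list(row))
    return out


def overlay_right(original, processed, split):
    """Columns before `split` come from `original`, the rest from `processed`."""
    return [o[:split] + p[split:] for o, p in zip(original, processed)]
```

Because only columns from `split` onward come from the processed copy, the mother's side of the frame stays untouched however strong the distortion becomes.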

3) Background Music

We imported the background music file and added an Audio Device Out CHOP so that we could route the sound through the audio interface and give the audience better sound quality.

In addition to these three parts, we added controllers to drive changes in specific parts of the video. We used code to configure these controllers so that the garbled effect appears only within specific keyframe ranges. We also set up a reload shortcut for the video and audio, so that pressing the “1” key on the keyboard restarts the sound and video simultaneously.
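The keyframe gating and the restart shortcut can be sketched in plain Python. In TouchDesigner the key press would typically come from a Keyboard In CHOP and the restart from a script acting on the movie and audio operators; in this sketch both players are replaced by a hypothetical `Player` class, and the frame ranges in `GLITCH_WINDOWS` are invented values, not the project's keyframes.

```python
# Hypothetical frame ranges (start, end inclusive) in which the glitch may appear.
GLITCH_WINDOWS = [(120, 240), (600, 780)]


def gated_amount(frame, amount, windows=GLITCH_WINDOWS):
    """Pass the sound-driven amount through only inside a keyframe window."""
    for start, end in windows:
        if start <= frame <= end:
            return amount
    return 0.0


class Player:
    """Hypothetical stand-in for a video or audio player with a playhead in frames."""

    def __init__(self, name):
        self.name = name
        self.position = 0

    def advance(self, frames=1):
        self.position += frames

    def restart(self):
        self.position = 0


def on_key(key, players):
    """Restart every player together when the '1' key is pressed."""
    if key == "1":
        for player in players:
            player.restart()
        return True
    return False
```

Restarting all players in one handler is what keeps sound and picture in sync; restarting them from separate shortcuts could leave them offset from each other.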

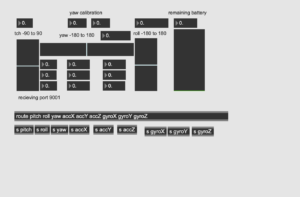

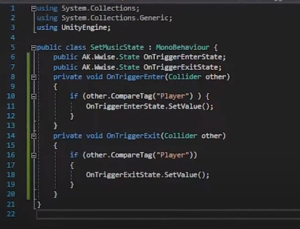

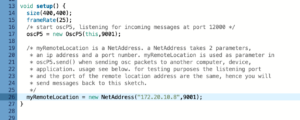

However, in the end, the Processing side did not receive any data from Max, although Max did receive the ‘123’ message sent from Processing. We therefore abandoned this plan and continued to use environmental sound to drive the particles.

However, in the end, we did not receive any data from Max on the Processing side. Instead, Max received ‘123’ sent from Processing on the Max side. So we gave up this plan and still use environment sound to affect the particles.