Mode elimination: taking the phases into account: 3

In this post we look at some of the fundamental problems involved in taking a conditional average over the high-wavenumber modes, while leaving the low-wavenumber modes unaffected.

Let us consider isotropic, stationary turbulence, with a velocity field in wavenumber space which is defined on $0\leq k \leq k_0$. Note that the maximum wavenumber $k_0$ is not the Kolmogorov dissipation wavenumber, although in both large-eddy simulation and the application of renormalisation group (RG) methods to turbulence, it is often taken to be so. The only definition that I know of is the one I put forward in 1986 [1], which is:\begin{equation}\int^{k_0}_0 2\nu_0 k^2 E(k)dk \approx \int^{\infty}_0 2 \nu_0 k^2 E(k)dk = \varepsilon, \end{equation} where $\nu_0$ is the kinematic viscosity of the fluid, $E(k)$ is the energy spectrum, and $\varepsilon$ is the dissipation rate. Obviously the value of $k_0$ depends on how closely we require the integral on the left to approximate the actual dissipation rate, which corresponds to taking the upper limit of the integral as infinity. Some people apparently find this definition puzzling, possibly because they are familiar with RG in the context of the theory of critical phenomena, where the maximum wavenumber is set by the inverse of the lattice constant. In contrast, fluid dynamicists may find this definition quite intuitive, as it is analogous to Prandtl’s definition of the laminar boundary layer.
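To make the definition concrete, here is a minimal Python (NumPy) sketch that finds $k_0$ numerically as the smallest wavenumber at which the truncated dissipation integral reaches a chosen fraction of its total. Everything in it is an illustrative assumption rather than data: the model spectrum (a $k^{-5/3}$ inertial range with an exponential cutoff, constants $C=1.5$ and $\beta=5.2$), the values of $\nu_0$ and $\varepsilon$, and the hypothetical function name `dissipation_cutoff`. The integral "to infinity" is approximated by integrating over a grid that extends well into the dissipation range, and the fraction is taken relative to that total, so the model spectrum need not integrate exactly to $\varepsilon$.

```python
import numpy as np

def dissipation_cutoff(k, E, nu0, fraction=0.99):
    """Return (k0, total): the smallest grid wavenumber k0 at which the integral of
    2*nu0*k^2*E(k) from k[0] to k0 reaches `fraction` of its value over the whole grid."""
    D = 2.0 * nu0 * k**2 * E                      # dissipation spectrum
    # cumulative trapezoidal integral of D from k[0] up to each k[i]
    cum = np.concatenate(([0.0], np.cumsum(0.5 * (D[1:] + D[:-1]) * np.diff(k))))
    total = cum[-1]                               # stands in for the integral to infinity
    idx = np.searchsorted(cum, fraction * total)  # first index with cum >= target
    return k[idx], total

# Hypothetical model spectrum: Kolmogorov inertial range with an exponential
# dissipation-range cutoff (C = 1.5 and beta = 5.2 are illustrative values).
nu0, eps = 1.0e-3, 1.0
eta = (nu0**3 / eps) ** 0.25                      # Kolmogorov length scale
k = np.linspace(1.0e-3, 40.0 / eta, 200_000)
E = 1.5 * eps ** (2 / 3) * k ** (-5 / 3) * np.exp(-5.2 * k * eta)

for frac in (0.9, 0.99, 0.999):
    k0, _ = dissipation_cutoff(k, E, nu0, frac)
    print(f"fraction {frac}: k0 * eta = {k0 * eta:.3f}")
```

Raising the required fraction moves $k_0$ to higher wavenumbers, which is just the point made above: $k_0$ is fixed by how accurately we require the truncated integral to reproduce $\varepsilon$, not by the Kolmogorov wavenumber as such.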

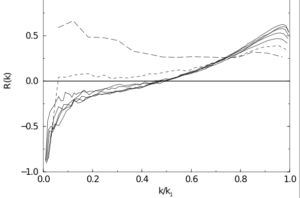

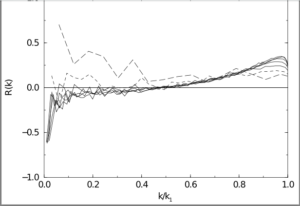

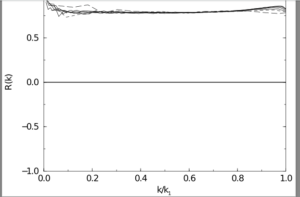

Something which may be counter-intuitive for many is the choice of the symbol $k_0$ for the maximum wavenumber. The reason is that in RG we progressively eliminate modes in wavenumber bands: $k_1\leq k \leq k_0$, $k_2 \leq k \leq k_1$, $k_3\leq k \leq k_2$, and so on, where $k_n$ decreases with increasing integer $n$, until the iteration reaches a fixed point. Similarly, the fluid viscosity is denoted by $\nu_0$ because it is progressively renormalised until it reaches a value $\nu_{n-1}=\nu_n \equiv \nu_N$ at the fixed point $n=N$.
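The bookkeeping of the bands can be sketched in a few lines of Python. We assume, purely for illustration, that each step removes a fixed fraction of the remaining range, so that $k_{n+1}=hk_n$ with $0<h<1$; the function name `band_edges` and the parameter `h` are hypothetical. The sketch says nothing about how $\nu_n$ is renormalised at each step, or how the fixed point is detected.

```python
def band_edges(k0, h, n_bands):
    """Return the bands (k_{n+1}, k_n) for n = 0 .. n_bands-1, assuming the
    hypothetical geometric rule k_{n+1} = h * k_n with 0 < h < 1."""
    if not 0.0 < h < 1.0:
        raise ValueError("h must lie strictly between 0 and 1")
    edges = [k0 * h**n for n in range(n_bands + 1)]   # k_0, k_1, ..., k_{n_bands}
    return [(edges[n + 1], edges[n]) for n in range(n_bands)]

# First band is k_1 <= k <= k_0, next is k_2 <= k <= k_1, and so on.
for lower, upper in band_edges(k0=1.0, h=0.5, n_bands=4):
    print(f"{lower:.4f} <= k <= {upper:.4f}")
```

Each band shares its lower edge with the upper edge of the next, so the bands tile the range below $k_0$ with no gaps.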

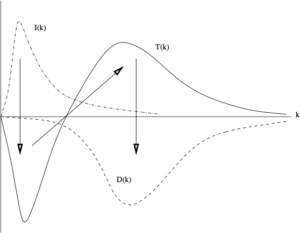

The first step in eliminating a band of modes is quite straightforward. We high-pass and low-pass filter the velocity field at $k=k_1$, thus: \begin{eqnarray} u^{-}(k,t) = u(k,t) \quad \mbox{for} \quad 0 \leq k \leq k_1; \nonumber\\ u^{+} (k,t)= u(k,t) \quad \mbox{for} \quad k_1 \leq k \leq k_0,\end{eqnarray} where we have adopted a simplified notation. Then we can substitute the decomposition given by equation (2) into the Navier-Stokes equation in wavenumber space, and study the effect. However, we will not pursue that here; further details can be found in Section 5.1.1 of [2]. Instead, we will concentrate on the following question: how do we average out the effect of the $u^+$ modes, while keeping the $u^-$ modes constant?
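The filtering step itself is easy to sketch numerically. The following Python (NumPy) example is an illustrative assumption, not the procedure of [2]: it splits a random one-dimensional periodic field on $[0,2\pi)$ into $u^-$ and $u^+$ with a sharp spectral cutoff at $k_1$, and the name `split_modes` is hypothetical. A three-dimensional vector field would be treated in the same way using $|\mathbf{k}|$. Equation (2) as written assigns the shell $k=k_1$ to both parts; here we put it in $u^-$ only, so that $u^-+u^+=u$ exactly.

```python
import numpy as np

def split_modes(u, k1):
    """Split a real periodic 1D field u(x) on [0, 2*pi) into low- and high-wavenumber
    Fourier coefficients with a sharp cutoff: |k| <= k1 -> u_minus, |k| > k1 -> u_plus."""
    n = u.size
    u_hat = np.fft.rfft(u)
    k = np.fft.rfftfreq(n, d=1.0 / n)        # integer wavenumbers 0, 1, ..., n/2
    low = k <= k1
    u_minus_hat = np.where(low, u_hat, 0.0)  # low-pass part
    u_plus_hat = np.where(low, 0.0, u_hat)   # high-pass part
    return u_minus_hat, u_plus_hat, k

rng = np.random.default_rng(0)
u = rng.standard_normal(256)
u = u - u.mean()                             # zero-mean toy field
u_minus_hat, u_plus_hat, k = split_modes(u, k1=16.0)
u_minus = np.fft.irfft(u_minus_hat, n=u.size)
u_plus = np.fft.irfft(u_plus_hat, n=u.size)
print(np.allclose(u_minus + u_plus, u))      # True
```

Because the Fourier transform is linear, the two filtered pieces add back to the original field; the hard part, as the rest of this post explains, is not the split but the average.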

The condition for such an average can be written as: \begin{equation}\langle u^-(k,t)\rangle_c = u^{-}(k,t),\end{equation}where the subscript ‘$c$’ denotes ‘conditional’. We should also recall that isotropic turbulence requires a zero mean velocity, that is: $\langle u(k,t)\rangle=0$.

Actually, it would be quite simple to carry out such an average if the velocity field $u(k,t)$ were multivariate normal. In homogeneous turbulence different modes are uncorrelated, and for a multivariate normal field uncorrelated modes are also statistically independent; so in that case each mode could be averaged out independently of all the rest. However, the turbulent velocity field is not Gaussian, so in attempting to carry out such an average we would run into the following two problems.

First, we must satisfy the boundary condition between the two regions of $k$-space, namely: \begin{equation} u^-(k_1,t)=u^+(k_1,t). \end{equation} This is the extreme case, in which we would be trying to average out a high-$k$ mode while leaving the identical low-$k$ mode unaffected. At the very least, this draws attention to the need for scale separation.

Secondly, there is the question of how an average over modes relates to an average of the velocity field in real space. In order to consider this, let us introduce a combined Fourier transform and filter $F_T^{\pm}(k,x;t)$ acting on the velocity field $u(x,t)$, such that:\begin{equation}u^{\pm}(k,t)=F_T^{\pm}(k,x;t)u(x,t). \end{equation} Since both the Fourier transform and the filter are purely deterministic operations, the average can act only on the real-space velocity field. It follows that $\langle u^{\pm}(k,t)\rangle = F_T^{\pm}(k,x;t)\langle u(x,t)\rangle$, and this is zero because the mean velocity vanishes in isotropic turbulence. So, far from leaving $u^-$ unchanged as required by equation (3), the average reduces it to zero!
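This argument can be checked with a small numerical experiment. In the Python (NumPy) sketch below, a toy ensemble of zero-mean random fields (independent Gaussian samples, chosen only for convenience, not turbulence data) stands in for realisations of $u(x,t)$, and the hypothetical function `filtered_transform` plays the role of $F_T^-$ with a sharp cutoff at $k_1=8$. Because the operator is linear and deterministic, averaging after filtering gives the same result as filtering the average.

```python
import numpy as np

def filtered_transform(u, k1):
    """Toy deterministic operator F^-: real FFT along the last axis, then a sharp
    low-pass keeping integer wavenumbers k <= k1 (domain assumed to be [0, 2*pi))."""
    n = u.shape[-1]
    u_hat = np.fft.rfft(u, axis=-1)
    k = np.fft.rfftfreq(n, d=1.0 / n)       # integer wavenumbers 0, 1, ..., n/2
    return np.where(k <= k1, u_hat, 0.0)

rng = np.random.default_rng(2)
n_samples, n = 4000, 128
ensemble = rng.standard_normal((n_samples, n))       # zero-mean toy realisations of u(x)

lhs = filtered_transform(ensemble, k1=8).mean(axis=0)  # <F u>
rhs = filtered_transform(ensemble.mean(axis=0), k1=8)  # F <u>
print(np.allclose(lhs, rhs))                           # True: F commutes with the average
print(np.abs(lhs).max())                               # small compared with one realisation
```

A single realisation here has Fourier coefficients of order $\sqrt{128}\approx 11$, whereas the ensemble-averaged coefficients are far smaller and shrink further as `n_samples` grows. An unconditional average therefore annihilates $u^-$ rather than leaving it unchanged.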

So it seems that a simple filtered average, as used in various attempts at subgrid modelling or RG applied to turbulence, cannot be correct at a fundamental level. We will see in the next post how the introduction of a particular kind of conditional average led to a more satisfactory situation [3].

[1] W. D. McComb. Application of Renormalization Group methods to the subgrid modelling problem. In U. Schumann and R. Friedrich, editors, Direct and Large Eddy Simulation of Turbulence, pages 67-81. Vieweg, 1986.

[2] W. David McComb. Homogeneous, Isotropic Turbulence: Phenomenology, Renormalization and Statistical Closures. Oxford University Press, 2014.

[3] W. D. McComb, W. Roberts, and A. G. Watt. Conditional-averaging procedure for problems with mode-mode coupling. Phys. Rev. A, 45(6):3507-3515, 1992.