Superstitions in turbulence theory 2: that intermittency destroys scale-invariance!


At the moment I am busy revising a paper (see [1] below) in order to address the comments of the referees. As is so often the case, Referee 1 is supportive and Referee 2 is hostile. Naturally, Referee 2 writes at great length, so it is really a matter of rebuttal rather than of making changes. It seems clear that he is far from his comfort zone, and his comments show that he has comprehensively misunderstood our paper. It also seems to me that he has not actually read certain key parts of the manuscript. For instance, he states: ‘The way how the authors use the word “scale-invariance” should be clarified’ (sic).

This is despite the fact that subsection 3.1 of the paper is titled ‘Scale-invariance of the inertial flux in the infinite Reynolds number limit’ and consists of only three paragraphs. It contains two equations, one of which states the criterion for an inertial range. This is followed by a sentence ending with “… where the fact that the criterion holds over a range of wavenumbers is usually referred to as scale-invariance.” Oh, and as regards ‘how the authors use the word’, we cite a number of references to show that others use the phrase, so we are not alone.
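For readers outside the field, the idea can be stated compactly. Writing $\Pi(k)$ for the mean inertial flux (the net rate of energy transfer through wavenumber $k$) and $\varepsilon$ for the mean dissipation rate, a common textbook form of the inertial-range condition is given below. The bounds $k_1$ and $k_2$ are illustrative notation introduced here, and this is not necessarily the exact form of the criterion stated in [1].

$$
\Pi(k) = \varepsilon \qquad \text{for} \qquad k_1 \le k \le k_2 .
$$

Scale-invariance then simply means that the flux does not depend on $k$ anywhere in that range.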

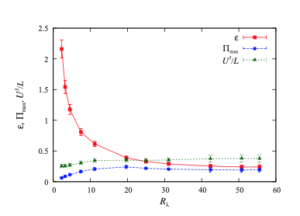

The next thing he says is: ‘We know from experimental evidence (intermittency) that scale invariance is broken in the inertial range.’ This is quite simply nonsense. In this context scale-invariance means that the inertial range is characterised by a constant flux over a range of wavenumbers, and this has been shown in many investigations. In fact there is no way in which intermittency, which is a single-realization characteristic, can affect mean quantities such as the inertial flux, or properties of those means such as scale-invariance. In a recent paper [2], we have shown that the ensemble average of intermittency vanishes. The first figure below uses contours of isovorticity to show the progressive effect of averaging over $N=1,\,2,\,5,\,10,\,25$ and $46$ realizations.

The averaging-out effect of increasing the number of realizations is evident. While vorticity is the more natural quantity to use, the effect can perhaps be seen more clearly with the Q-criterion, as in the next figure.

Both figures are taken from the same stationary DNS of the Navier-Stokes equations. Further details can be found in reference [2].
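The averaging-out effect can be mimicked with a toy model. The sketch below is not the DNS of [2] and not our method there; it is a hypothetical one-dimensional stand-in in which each "realization" is a uniform background plus a few localized bursts at random positions (random positions being a crude proxy for statistical homogeneity). The function names, burst count, width and amplitude are all illustrative assumptions. Averaging over the same values of $N$ used in the figures, the spottiness (peak value divided by spatial mean) of the ensemble-averaged field falls steadily, while the spatial mean itself, the analogue of a mean quantity, stays essentially unchanged.

```python
import numpy as np


def synthetic_realization(rng, n=1024, n_bursts=5, width=8.0, amplitude=20.0):
    """Hypothetical 1-D 'intermittent' field: background of 1 plus Gaussian
    bursts at random positions on a periodic domain (toy model only)."""
    x = np.arange(n)
    field = np.ones(n)
    for centre in rng.uniform(0, n, size=n_bursts):
        # Periodic distance, so bursts wrap around as in a periodic box
        d = np.abs(x - centre)
        d = np.minimum(d, n - d)
        field += amplitude * np.exp(-0.5 * (d / width) ** 2)
    return field


def peak_to_mean(field):
    """Largest value divided by the spatial mean: a crude measure of spottiness."""
    return float(field.max() / field.mean())


rng = np.random.default_rng(seed=0)
realizations = np.array([synthetic_realization(rng) for _ in range(46)])

ratios = {}
spatial_means = {}
for N in (1, 2, 5, 10, 25, 46):
    # Ensemble average over the first N independent realizations
    ensemble_mean = realizations[:N].mean(axis=0)
    ratios[N] = peak_to_mean(ensemble_mean)
    spatial_means[N] = float(ensemble_mean.mean())
    print(f"N = {N:2d}: peak/mean = {ratios[N]:.2f}, spatial mean = {spatial_means[N]:.3f}")
```

Because the realizations are independent, the pointwise fluctuations of the ensemble average shrink roughly like $1/\sqrt{N}$, so the bursts present in any single realization are smeared into an almost uniform field.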

Over the past three decades there has been a growing body of evidence that intermittency does not affect the Kolmogorov spectrum. Any deviations are in fact due to the Kolmogorov conditions not being fully met. Presumably it will take a long time for rational enquiry to defeat superstition in this topic!

[1] W. D. McComb and S. R. Yoffe. The infinite Reynolds number limit and the quasi-dissipative anomaly. arXiv:2012.05614v2 [physics.flu-dyn], 2021.

[2] S. R. Yoffe and W. D. McComb. Does intermittency affect the inertial transfer rate in stationary isotropic turbulence? arXiv:2107.09112v1 [physics.flu-dyn], 2021.