Theories versus formalisms


After the catastrophe of quasi-normality, the modern era of turbulence theory began in the late 1950s, with a series of papers by Kraichnan in the Physical Review, culminating in the formal presentation of his direct-interaction approximation (DIA) in JFM in 1959 [1].

The next step was the paper by Wyld [2], which set out a formal treatment of the turbulence problem based on, and very much in the language of, quantum field theory. Wyld carried out a conventional perturbation theory, based on the viscous response of a fluid to a random stirring force. He showed how simple diagrams could be used with combinatorics to generate all the terms in an infinite series for the two-point correlation function. He also showed that terms could be classified by the topological properties of their corresponding diagrams. In this way, he found that one class of terms could be summed exactly and that another could be re-expressed in terms of partially summed series, thus introducing the idea of renormalization. In other words, the exact correlation could be expressed as an expansion in terms of itself and a renormalized response function (or propagator). In a sense, this could be regarded as a general solution of the problem, but obviously one that by itself does not provide a tractable theory. In short, it is a formalism.
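To give a feel for what "summing a class of terms exactly" means, here is a toy scalar analogue in Python. It is not Wyld's expansion, which involves tensor-valued, wavenumber- and time-dependent quantities. It simply takes the viscous response of a single mode, $G_0 = 1/(-i\omega + \nu k^2)$, and a hypothetical constant "self-energy" $\Sigma = -\eta k^2$. It then compares the term-by-term series $G_0 + G_0\Sigma G_0 + G_0\Sigma G_0\Sigma G_0 + \dots$ with its closed-form sum, which satisfies $G = G_0 + G_0 \Sigma G$. The parameter values and the form of $\Sigma$ are assumptions made purely for illustration.

```python
def bare_response(k, omega, nu):
    """Viscous (bare) response of one mode: G0 = 1 / (-i*omega + nu*k**2)."""
    return 1.0 / (-1j * omega + nu * k**2)


def partial_sums(g0, sigma, n_terms):
    """Running sums of the chain G0 + G0*S*G0 + G0*S*G0*S*G0 + ... (n_terms terms)."""
    total, term, sums = 0j, g0, []
    for _ in range(n_terms):
        total += term
        sums.append(total)
        term *= sigma * g0  # attach one more 'self-energy' insertion
    return sums


def renormalized_response(g0, sigma):
    """Closed-form sum of the chain: solves G = G0 + G0*S*G, i.e. G = 1/(1/G0 - S)."""
    return 1.0 / (1.0 / g0 - sigma)


# Illustrative values; eta is a hypothetical eddy-viscosity-like increment.
k, omega, nu, eta = 2.0, 1.0, 0.5, 0.2
g0 = bare_response(k, omega, nu)
sigma = -eta * k**2  # hypothetical constant self-energy

G = renormalized_response(g0, sigma)
print(abs(partial_sums(g0, sigma, 60)[-1] - G))    # the series converges to G
print(abs(G - bare_response(k, omega, nu + eta)))  # G is the bare response with nu -> nu + eta

# With a larger increment the term-by-term series diverges, but the resummed G is finite.
sigma_big = -1.0 * k**2
print(abs(partial_sums(g0, sigma_big, 60)[-1]), renormalized_response(g0, sigma_big))
```

In this toy, summing the chain just replaces $\nu$ by $\nu + \eta$, a renormalized viscosity; and once $\eta$ is large enough, the term-by-term series diverges while the resummed response stays finite.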

As an aside, I should just mention that Wyld’s paper was evidently very much written for theoretical physicists. That is no reason why any competent applied mathematician shouldn’t follow it, but one suspects that few did. Also, the work has been subject to a degree of criticism: the current version may be found as the improved Wyld-Lee theory in #8 of the list of My Recent Papers on this website. But this does not affect anything I will say here, and I will return to this topic in a future blog.

In contrast, Kraichnan began by introducing the infinitesimal response function $\hat{G}$, which connected an infinitesimal change in the stirring forces to an infinitesimal change in the velocity field. He made this the basis of what he claimed was an unconventional (superior?) perturbation theory, making use of ideas like weak dependence, maximal randomness, and direct interaction. Unfortunately, these ideas did not attract general agreement, and I suspect that he found the refereeing process with JFM, and the subsequent experience of the Marseille Conference (see the previous blog), rather bruising. Apparently he said, ‘The optimism of British applied mathematicians is unbounded.’ Then, after a pause, ‘From below.’ I was told this by Sam Edwards when I was a postgraduate student. Sam obviously appreciated the interplay of wit and cynicism.

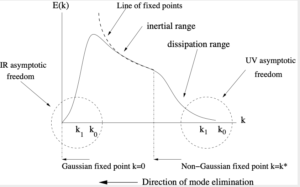

Now, in completing his theory, Kraichnan made the substitution $\hat{G} = G \equiv \langle \hat{G} \rangle$, which is in effect a mean-field approximation. It is therefore significant that, when the conventional perturbation formalism of Wyld is truncated at second order in the renormalized expansion, the equations of Kraichnan’s DIA are recovered. This suggests that this particular mean-field approximation is in fact justified. However, we know that Kraichnan came to the conclusion that his theory was wrong, at least in terms of its asymptotic behaviour at high Reynolds numbers: see the previous blog.
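For readers who have not met the term, a mean-field approximation replaces a fluctuating quantity by its average, and so discards any correlation between its fluctuations and whatever it multiplies. The Python sketch below shows this in the crudest possible setting: a hypothetical random "response" $\hat{g}$ and a quantity $u$ that both depend on the same random variable. It is a generic illustration, not Kraichnan's calculation; the linear forms chosen for $\hat{g}$ and $u$ are assumptions.

```python
import random


def mean(xs):
    return sum(xs) / len(xs)


random.seed(0)
n = 200_000

# Hypothetical toy: g_hat and u are both driven by the same Gaussian variable xi,
# so their fluctuations are correlated (covariance 0.5 * Var(xi) = 0.5).
xi = [random.gauss(0.0, 1.0) for _ in range(n)]
g_hat = [1.0 + 0.5 * x for x in xi]
u = [2.0 + x for x in xi]

exact = mean([g * v for g, v in zip(g_hat, u)])  # <g_hat u>, close to 2.5
mean_field = mean(g_hat) * mean(u)               # <g_hat><u>, close to 2.0
print(exact, mean_field, exact - mean_field)     # the gap is the neglected covariance
```

Here the mean-field value misses a covariance of about 0.5. Whether such a neglected correlation matters depends entirely on the problem at hand.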

This has the immediate implication that Wyld’s formalism, when truncated at second order, is also wrong. The same is true of the later functional formalism of Martin, Siggia and Rose [3]. Kraichnan came to the conclusion that his DIA approach should be carried out in a mixed Eulerian-Lagrangian coordinate system; if that is correct, it would presumably also apply to the two formalisms. However, there is also the question of whether or not it is appropriate to treat the system response as one would in dynamical systems theory. After all, the stirring forces in a fluid first have to create the system, and only then do they maintain it against the dissipative effects of viscosity. We will return to this aspect in future blogs.

[1] R. H. Kraichnan. The structure of isotropic turbulence at very high Reynolds numbers. J. Fluid Mech., 5:497-543, 1959.

[2] H. W. Wyld Jr. Formulation of the theory of turbulence in an incompressible fluid. Ann. Phys., 14:143, 1961.

[3] P. C. Martin, E. D. Siggia, and H. A. Rose. Statistical dynamics of classical systems. Phys. Rev. A, 8(1):423-437, 1973.